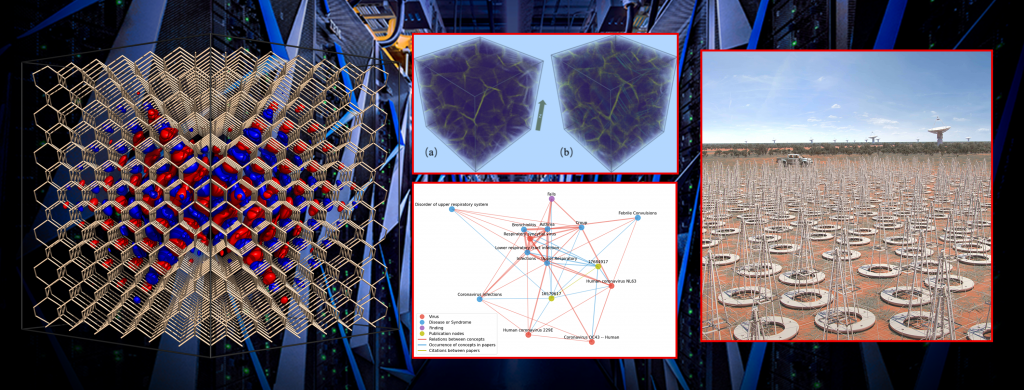

Amid the challenges that the Department of Energy’s Oak Ridge Leadership Computing Facility faced in assembling and launching the world’s first exascale-class (more than a quintillion calculations per second) supercomputer, Frontier, one key component was hitch-free. Integral to Frontier’s functionality is its ability to store the vast amounts of data…

Coury Turczyn, April 29, 2024