Managing a supercomputer requires operators to collect and analyze data generated by the machine itself, from job failure rates to power consumption figures. Researchers at the US Department of Energy’s (DOE’s) Oak Ridge National Laboratory (ORNL) have gathered operational data from Summit, the nation’s most powerful supercomputer, that is unprecedented in both scale and scope. Insights from this data may lead to innovations that improve the efficiency and reliability of high-performance computing (HPC) data centers as supercomputers enter the exascale era (at least a billion billion floating-point operations per second).

The research was conducted by the Analytics and AI Methods at Scale (AAIMS) group in ORNL’s National Center for Computational Sciences. The group examined Summit’s power consumption at the component, node, and system levels using a full year of data from the machine’s 4,626 nodes. Each node contains six GPUs, two CPUs, and two power supplies and reports more than 100 different metrics at a frequency of 1 Hz (once per second). The resulting dataset measured 8.5 terabytes compressed.
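The dataset size can be sanity-checked with back-of-the-envelope arithmetic from the figures above. The sketch below assumes exactly 100 metrics per node (the article says "more than 100"), a 366-day year (2020 was a leap year), and decimal terabytes; it is an illustrative estimate, not the AAIMS group's accounting of the data.

```python
# Back-of-the-envelope estimate of Summit's 1 Hz telemetry volume for 2020.
# Assumptions (not from the article): exactly 100 metrics per node
# (the article says "more than 100") and 1 TB = 10**12 bytes.
NODES = 4_626
METRICS_PER_NODE = 100               # lower bound; the real count is higher
SAMPLES_PER_SECOND = 1               # 1 Hz sampling
SECONDS_IN_2020 = 366 * 24 * 60 * 60 # 2020 was a leap year

samples = NODES * METRICS_PER_NODE * SAMPLES_PER_SECOND * SECONDS_IN_2020
compressed_bytes = 8.5e12            # 8.5 TB compressed, decimal units assumed

print(f"raw samples:             {samples:.3e}")
print(f"compressed bytes/sample: {compressed_bytes / samples:.2f}")
```

Under these assumptions the estimate comes to roughly 1.46 × 10^13 raw samples, or a little under 0.6 compressed bytes per sample. Because the true metric count exceeds 100, the actual per-sample figure would be somewhat lower.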

The AAIMS group’s findings will be presented on November 18 at SC21, the International Conference for High Performance Computing, Networking, Storage, and Analysis, where their paper is a finalist for the SC21 Best Paper Award.

To put Summit’s dataset into context, the team also gathered the system’s job allocation history for the same year (2020) and constructed fine-grained, per-job power consumption profiles for more than 840,000 jobs. The team then cross-analyzed the impact of these jobs against cooling-supply data from the central energy plant and reliability data from the system itself. Performing such an end-to-end cross-analysis of long-term, high-dimensional data required the team to analyze Summit’s power characteristics on another system, the Andes cluster. Both Summit and Andes are managed by the Oak Ridge Leadership Computing Facility (OLCF), a DOE Office of Science user facility at ORNL.

“The insights and the lessons we learned will not only benefit the ongoing production and operation of the Summit system, but they will also lay the foundation for future system design and acquisition,” said AAIMS Group Leader Feiyi Wang. “Now we know how our power is being consumed, and, to a degree, we know what kind of applications have what kind of energy consumption profiles. This enables us to explore more intelligent job scheduling and work toward more energy-efficient computing and AI-driven facility operations, for example.”

By sampling system data every second (1 Hz) rather than every minute, the AAIMS team amassed a dataset of unparalleled resolution.

“There are some places where, initially, we thought, ‘Isn’t this excessive? Why do you actually need that kind of information while everybody else is doing 1-minute stuff?’” said Woong Shin, an HPC engineer in the AAIMS group who led the project. “This high-resolution data collection was originally engineered to detect or predict swift power level changes and thermal changes in the system to support Summit’s medium-temperature water cooling controls. But it turned out that the data could tell much more about the uniqueness of HPC applications and their increasing physical impact in terms of power consumption.”
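The value of per-second sampling can be illustrated with a synthetic example: a short burst that is obvious at 1 Hz is flattened when a collector reports only the average for each minute. The trace below is invented (a 6 MW baseline with a 20-second excursion to 12 MW), and the assumption that the 1-minute collector reports a mean is ours; neither comes from Summit's data.

```python
# Synthetic one-minute power trace sampled at 1 Hz (values in megawatts).
# Invented for illustration: 6 MW baseline with a 20 s excursion to 12 MW.
trace_1hz = [6.0] * 20 + [12.0] * 20 + [6.0] * 20

# What a hypothetical 1-minute collector reporting only the mean would record.
one_minute_mean = sum(trace_1hz) / len(trace_1hz)

# What the 1 Hz samples reveal about the same minute.
peak_1hz = max(trace_1hz)
largest_step = max(abs(b - a) for a, b in zip(trace_1hz, trace_1hz[1:]))

print(f"1-minute mean:    {one_minute_mean:.1f} MW")  # 8.0 MW
print(f"1 Hz peak:        {peak_1hz:.1f} MW")         # 12.0 MW
print(f"largest 1 s step: {largest_step:.1f} MW")     # 6.0 MW
```

The minute-level average suggests a modest 2 MW rise, while the per-second data shows a 6 MW jump within a single second, the kind of swift change that cooling controls must anticipate.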

AAIMS’s power analytics project is unique in the amount and resolution of the operational data it collected and analyzed as well as in the overall scope of its effort. The team examined Summit’s entire system from end to end, rather than just the machine itself, by including its central energy plant in the analysis.

“We were not just analyzing a single component or a single cluster or just the facility, which is the traditional way this sort of research has been done. We also looked at the entire data center as one piece. This gave us a whole-system picture that inspired us to develop more tools that could be provided to facility operation engineers,” Shin said. “The more we look at the pursuit of energy efficiency as well as reliable operation of HPC systems, we realize that such an end-to-end perspective is important. But to do that, the operators need the tools necessary to keep up with the data.”

ORNL’s AAIMS group taking a casual stroll in front of the Summit supercomputer’s cooling towers. From left: Vlad Oles, Ahmad M. Karimi, Feiyi Wang, Austin Ellis, Woong Shin. Photo: ORNL/Carlos Jones

Typically, supercomputer operators look at data from much shorter time spans (a single day, a week, or a month at most) and rely on their own expertise to interpret the metrics shown on their analytics dashboards.

“The goal of our project is to enhance the number of data streams that go into any kind of algorithmic technique and systematically provide more information to the operator,” Shin said. “Then, we want to process that data and really dig up power trends for the engineers so they can make more informed decisions and even decisions for the future as well.”

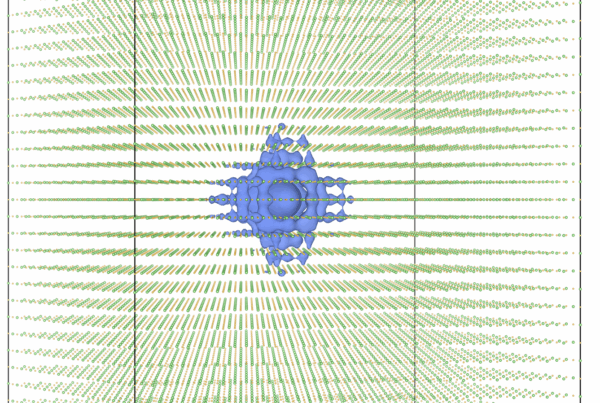

One of the project’s focus areas is the frequency and duration of power spikes. Under normal operations, Summit runs a few megawatts below its 13-megawatt peak. During some jobs, however, its power draw can swing by as much as 7 megawatts in less than a minute.

“We were able to gain information about how those swings occur: what is the frequency of the swings, what are their bounds, how fast does a swing go up, how fast does a swing go down? Quantification of such envelopes is crucial for designing energy plants that support HPC systems with the necessary power and cooling,” Shin said.
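The envelope quantities Shin describes, how large a swing gets and how fast it rises and falls, can be computed from a 1 Hz power trace with a sliding window. The sketch below is one way to do it, not the AAIMS group's method: the function name `swing_envelope`, the 60-second window, and the synthetic trace are all illustrative assumptions. Monotonic deques track the running minimum and maximum so the scan stays linear in the trace length.

```python
from collections import deque


def swing_envelope(power_mw, window_s=60):
    """Return (max_rise, max_fall, max_ramp_up, max_ramp_down) for a 1 Hz
    power trace in MW. Rise and fall are measured within any window_s-second
    span; ramps are the steepest single-second changes (MW/s).
    Hypothetical helper for illustration only."""
    max_rise = max_fall = 0.0
    lo = deque()  # indices with increasing values: front is the window minimum
    hi = deque()  # indices with decreasing values: front is the window maximum
    for j, p in enumerate(power_mw):
        # Expire indices that fall outside the window [j - window_s, j].
        while lo and lo[0] < j - window_s:
            lo.popleft()
        while hi and hi[0] < j - window_s:
            hi.popleft()
        # Keep both deques monotonic before adding the current sample.
        while lo and power_mw[lo[-1]] >= p:
            lo.pop()
        lo.append(j)
        while hi and power_mw[hi[-1]] <= p:
            hi.pop()
        hi.append(j)
        # A rise ends at j from an earlier minimum; a fall ends at j from an earlier maximum.
        max_rise = max(max_rise, p - power_mw[lo[0]])
        max_fall = max(max_fall, power_mw[hi[0]] - p)
    steps = [b - a for a, b in zip(power_mw, power_mw[1:])]
    ramp_up = max(steps + [0.0])
    ramp_down = max([-s for s in steps] + [0.0])
    return max_rise, max_fall, ramp_up, ramp_down


# Synthetic trace (MW): 6 MW idle, +1 MW/s ramp to 13 MW, hold, -0.5 MW/s back to 6 MW.
trace = (
    [6.0] * 10
    + [6.0 + k for k in range(1, 8)]
    + [13.0] * 30
    + [13.0 - 0.5 * k for k in range(1, 15)]
    + [6.0] * 10
)
print(swing_envelope(trace))  # (7.0, 7.0, 1.0, 0.5)
```

For the synthetic trace, the function reports a 7 MW rise and a 7 MW fall within the window, with the steepest ramps at 1 MW/s up and 0.5 MW/s down. Counting how often such envelopes exceed a chosen bound would give the swing frequency Shin mentions.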

The team observed that large-scale parallel applications can produce power swings of several megawatts many times within a single job, a pattern that characterizes HPC workloads. Although Summit is a pre-exascale system, insights from its data can be extrapolated to the OLCF’s upcoming Frontier exascale supercomputer.

“Such in-depth information will help engineers design dynamic control systems that decide when to stage or de-stage resources (e.g., chillers, cooling towers, heat pumps) for a given workload and given weather conditions,” Shin said. “The duration of large power swings is less than 2 percent of Summit’s running time for a full year and is highly dependent on application behavior. By leveraging that information, we were able to develop techniques that can identify patterns of what that power fluctuation actually looks like, how the system responded, and what efficiency level we have achieved. This changes the way we support HPC operations with data.”
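The article does not say how a "large" power swing was defined when computing the under-2-percent figure. One simple, assumed definition treats a swing as a run of consecutive same-direction changes whose total amplitude meets a threshold, and measures the share of samples that fall inside such runs. The function `swing_time_fraction`, the 3 MW threshold, and the trace below are hypothetical.

```python
def sign(x: float) -> int:
    """Return +1, -1, or 0 according to the sign of x."""
    return (x > 0) - (x < 0)


def swing_time_fraction(power_mw, threshold_mw=3.0):
    """Fraction of 1 Hz samples lying inside a monotonic run (consecutive
    steps in the same direction) whose total amplitude is >= threshold_mw.
    Hypothetical definition of a 'large swing', for illustration only."""
    n = len(power_mw)
    if n == 0:
        return 0.0
    in_swing = [False] * n
    i = 0
    while i < n - 1:
        direction = sign(power_mw[i + 1] - power_mw[i])
        if direction == 0:  # flat step: not part of any swing
            i += 1
            continue
        k = i
        # Extend the run while the next step keeps the same direction.
        while k + 1 < n - 1 and sign(power_mw[k + 2] - power_mw[k + 1]) == direction:
            k += 1
        # The run spans samples i .. k + 1; flag them if the swing is large enough.
        if abs(power_mw[k + 1] - power_mw[i]) >= threshold_mw:
            for t in range(i, k + 2):
                in_swing[t] = True
        i = k + 1
    return sum(in_swing) / n


# Synthetic 100 s trace (MW): a 1 MW blip, then a 6 MW ramp over 3 seconds.
trace = [6.0] * 20 + [7.0] + [6.0] * 27 + [8.0, 10.0, 12.0] + [12.0] * 49
print(swing_time_fraction(trace))  # 0.04
```

Here the 1 MW blip falls below the threshold and is ignored; only the four samples of the 6 MW ramp count, giving 0.04 (4 percent) of the trace. A different definition or threshold would, of course, give a different fraction.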

With Frontier’s arrival imminent, the AAIMS group has begun a new power analytics project that will take its Summit dashboard one step further. The Frontier project will include machine learning research and apply the results to the system in practice. The team’s ultimate goal is to deliver ongoing production insight into HPC systems and offer operators AI-driven suggestions for potential actions.

“That is the holy grail of the whole thing: complete the loop,” Wang said. “Have the data, have the analysis result, have the prediction, and then feed it back to the operational side of things and make an impact. That feedback and intelligent control loop is the holy grail and a hallmark of a smart facility.”

UT-Battelle LLC manages Oak Ridge National Laboratory for DOE’s Office of Science, the single largest supporter of basic research in the physical sciences in the United States. DOE’s Office of Science is working to address some of the most pressing challenges of our time. For more information, visit https://energy.gov/science