Researchers used the world’s fastest supercomputer to train an artificial intelligence model to draw up blueprints for the building blocks of life.

The study earned the multi-institutional team a finalist nomination for the Association for Computing Machinery’s Gordon Bell Prize, which honors innovation in applying high-performance computing to applications in science, engineering and large-scale data analytics.

“Think about a ChatGPT that designs proteins,” said Arvind Ramanathan, a computational biologist at the Department of Energy’s Argonne National Laboratory and the study’s senior author. “The goal is to come up with new designs informed by experimental data that tell us whether a protein combination succeeded in the laboratory and to build on those successes. Ultimately, we could use this approach to design antibodies, vaccines, cancer treatments and even bioremediation for environmental disasters. That’s what we’re enabling here.”

The Gordon Bell Prize will be presented at this year’s International Conference for High Performance Computing, Networking, Storage and Analysis, Nov. 17 to 22. Ramanathan and his team will share the results of their study at the conference Nov. 20.

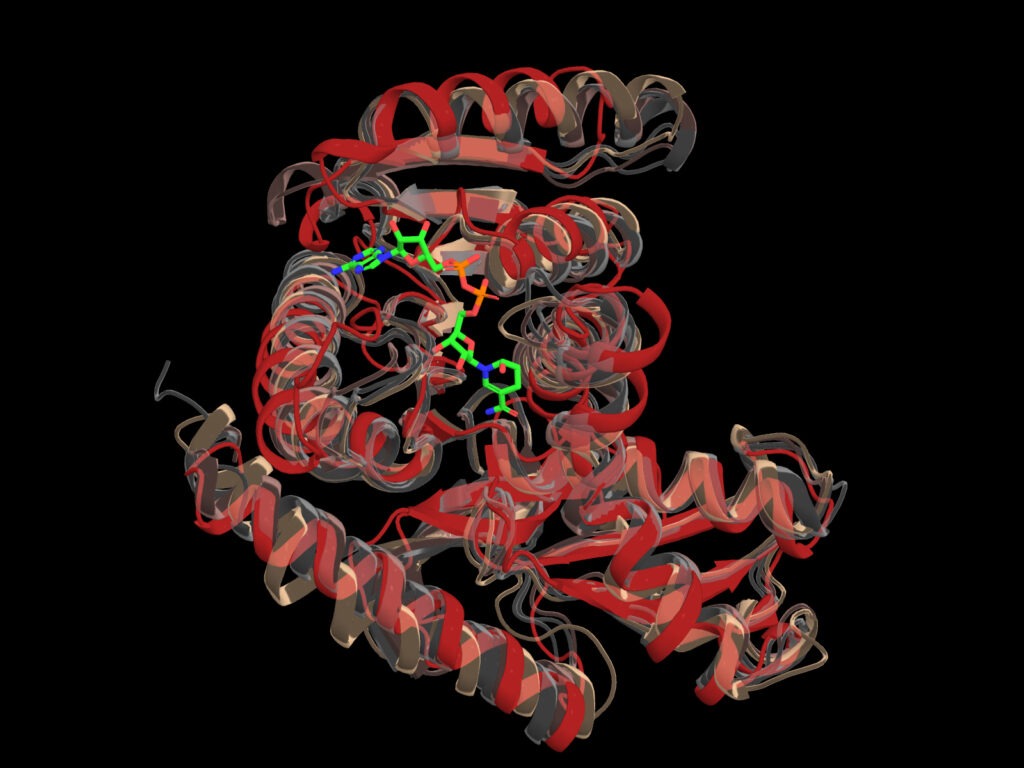

Scientists used Frontier to train an artificial intelligence model that identified synthetic versions of malate dehydrogenase that preserve the protein’s critical structure and key binding areas. Credit: Arvind Ramanathan, Argonne

The study used Oak Ridge National Laboratory’s Frontier, the world’s first exascale supercomputer, housed at ORNL’s Oak Ridge Leadership Computing Facility, to train a large language model, or LLM, on proteins as part of a high-speed digital workflow with four other supercomputing systems. Besides Frontier, the workflow included Argonne’s exascale machine Aurora, the Swiss National Supercomputing Centre’s Alps, the CINECA data center’s Leonardo and NVIDIA Corporation’s PDX. The workflow’s simulations broke the exascale barrier at a peak speed of roughly 5.57 exaflops, or 5.57 quintillion calculations per second, at mixed precision.

LLMs can learn how to order and construct new patterns based on vast amounts of training data and sophisticated numerical techniques. The models can improve on that learning over time with additional training.

LLM efforts typically seek to develop a model that can absorb and adjust the lessons learned on training data and apply that knowledge consistently and accurately to new, unfamiliar data and tasks. The larger the model and its training datasets, the better its performance — but also the higher its demand for computational power.

Proteins, the building blocks of life, perform vital functions that can range from transporting molecules to replicating cells to kicking off metabolic reactions. A single, average-sized protein can consist of as many as 300 amino acids strung together in unique sequences. Scientists, including the recipients of the 2024 Nobel Prize in Chemistry, have spent decades classifying those sequences in search of a means to predict a protein’s function based on its structure.

Ramanathan and the team wanted to find out whether an LLM could identify ideal protein sequences for a given biochemical function.

“We’re using methods similar to the natural language processing that allows ChatGPT to form or finish sentences, but this is for protein sequences,” Ramanathan said. “We feed the model data about the constituents that make up proteins, how they’re put together, and their various properties. Then we simulate potential combinations in microscopic detail and gauge the probabilities that this combination leads to that property and so on. We’re teaching the algorithm what makes a good protein sequence, and to do that, we needed machines with the power of Frontier and Aurora.”

To test the model, the team tasked it with designing a protein sequence with familiar properties — those of malate dehydrogenase, a well-studied enzyme and major element in metabolism for most organisms. The twist: the team’s instructions called for a lower activation threshold to make the enzyme more sensitive.

Generative models such as ChatGPT tend to suffer from errors known as hallucinations, in which the model makes up data to fill gaps in its knowledge. The team sought to head off that danger by designing a workflow that directly incorporates feedback from experimental simulations into the results.

“Tests in the laboratory indicate we were successful,” Ramanathan said. “In this case, we had a protein structure with a lot of data available, so this result was easy to test in the lab. We know we’re not playing a game where we’re going to get a single winning result every time. This is an iterative process where we gradually push the model harder and harder to find its limitations and figure out how to overcome them. For now, we’ve shown it can identify the requirements for a good protein and follow a template to try to produce that. Ultimately, we hope it could be used to design lifesaving cures and treatments, but we have a long way to go.”

Next steps include expanding the model to design new and more complex protein sequences.

Besides Ramanathan, the team included Gautham Dharuman, Kyle Hippe, Alexander Brace, Sam Foreman, Väinö Hatanpää, Varuni K. Sastry, Huihuo Zheng, Logan Ward, Servesh Muralidharan, Archit Vasan, Bharat Kale, Carla M. Mann, Heng Ma, Murali Emani, Michael E. Papka, Ian Foster, Venkatram Vishwanath and Rick Stevens of Argonne; Yun-Hsuan Cheng, Yuliana Zamora and Tom Gibbs of NVIDIA; Shengchao Liu of UC Berkeley; Chaowei Xiao of the University of Wisconsin-Madison; Mahidhar Tatineni of the San Diego Supercomputer Center; Deepak Canchi, Jerome Mitchell, Koichi Yamada and Maria Garzaran of Intel; and Anima Anandkumar of the California Institute of Technology.

This research was supported by the National Institutes of Health and by the DOE Office of Science’s Advanced Scientific Computing Research program. The OLCF is an Office of Science user facility at ORNL.

UT-Battelle manages ORNL for DOE’s Office of Science, the single largest supporter of basic research in the physical sciences in the United States. DOE’s Office of Science is working to address some of the most pressing challenges of our time. For more information, visit https://energy.gov/science.