In a farewell nod to Titan, scheduled to be decommissioned in August 2019, we present a short series of features highlighting some of Titan’s impactful contributions to scientific research.

Long before the first computers were invented, intelligent robots appeared in myths, stories, and other works of fiction. In recent years, the idea that machines could someday be capable of thought has been nurtured by increasingly powerful computational resources. Machine learning, a field in which computer algorithms process information and identify patterns without explicit instructions, has grown in popularity with advances in parallel computing.

Originally built for modeling and simulation, the Oak Ridge Leadership Computing Facility’s (OLCF’s) 27-petaflop Cray XK7 Titan supercomputer eventually earned a reputation for solving large-scale problems in data science. As soon as Titan came online in 2012 as the largest GPU-accelerated system in the world, researchers took an interest. Soon, artificial intelligence (AI) projects were earning time on the system.

Starting with being ranked No. 1 in 2012 and staying among the top 10 systems in the world from 2012 to 2019, Titan contributed to several important early developments in machine learning, from training neural networks—artificial compute systems inspired by the way the human brain works—to designing programs to help robots understand their surroundings.

“When AI started taking off through deep learning, around 2013, the use of GPUs was a big part of the successes being realized, and Titan was the biggest GPU-accelerated supercomputer available,” said OLCF Director of Science Jack Wells. “For this reason, people started bringing these kinds of large problems to us, and we started seeing them in our Director’s Discretionary user program in 2015.”

Many teams used Titan to accelerate research projects involving AI, often led by researchers from the US Department of Energy’s (DOE’s) Oak Ridge National Laboratory (ORNL). For example, a team led by ORNL’s Robert Patton used Titan to develop the Multinode Evolutionary Neural Networks for Deep Learning (MENNDL) code, which generates custom neural networks more quickly than and at the same performance level as those created by humans. ORNL’s Gina Tourassi and her team used Titan to apply deep learning to extract useful information from cancer pathology reports. Another team led by ORNL’s Katie Schuman trained neural networks for autonomous robot navigation.

“These teams were able to exploit Titan’s compute power for their large-scale deep learning applications over the years,” said Arjun Shankar, group leader of the Advanced Data and Workflow Group at the OLCF, a DOE Office of Science User Facility at ORNL.

Deep learning is a branch of machine learning that typically involves the use of larger and more sophisticated neural networks.

The AI projects that ran on Titan led to multiple publications, including papers in the Journal of the American Medical Informatics Association, BMC Bioinformatics, and, recently, Nature. Additionally, the AI projects run on Titan paved the way for new projects to run on other hybrid (CPU-GPU) architectures.

Teeing up to Summit

One such architecture is the OLCF’s 200-petaflop IBM AC922 Summit, the world’s smartest and most powerful supercomputer.

After developing MENNDL in 2016, Patton’s team ran the code on Titan to produce optimized neural networks as part of a neutrino scattering experiment called MINERvA, or Main Injector Experiment for v-A. MENNDL evaluated about 500,000 neural networks to find the network that best analyzed images of interactions between neutrinos and neutrino detectors, thereby locating the specific points at which these interactions occur.

The work on Titan prepared the team for the OLCF’s Summit architecture that came online in 2018.

The team ran MENNDL on Summit to automatically create the optimal deep learning neural networks for analyzing electron microscopy images. MENNDL provided a network that performed as well as a human expert performed at analyzing some of these images, and the team became a finalist for the 2018 Association of Computing Machinery Gordon Bell Prize after MENNDL reached a sustained performance of 152.5 petaflops on Summit.

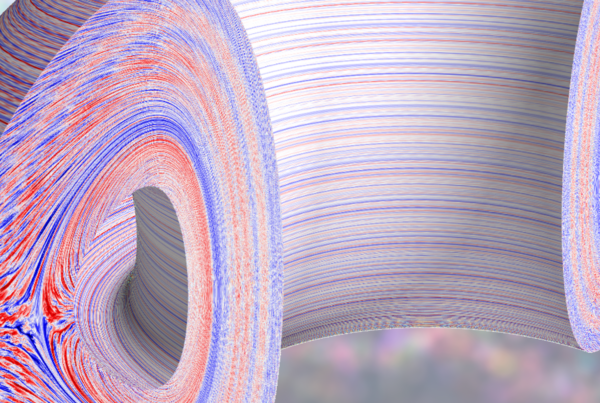

Recently, researchers led by Bill Tang of DOE’s Princeton Plasma Physics Laboratory and Princeton University tested their Fusion Recurrent Neural Network code on both Titan and Summit after porting the code from a Princeton University cluster called Tiger. The code, designed to make predictions about plasma disruptions inside fusion reactors, successfully predicted disruptions on the world’s largest tokamak—the Joint European Torus Facility in the United Kingdom—and the results were published in Nature.

“Titan was invaluable for large scaling tests to see how close we could get to reaching solutions with significantly more computing power,” said Julian Kates-Harbeck, a PhD candidate at Harvard University who worked on the project.

Schuman began using Titan in 2015. Much of her work centered around neuromorphic computing, a type of computing that emulates networks of neurons, or cells, that send electrical signals in the human brain. Schuman, whose work on Titan included training neural networks for robot navigation systems, said working on Titan equipped her for using future machines.

“All of the scalable software frameworks I developed were built and tested on Titan,” Schuman said. “Titan prepared me for working with future high-performance computing systems in general, including Summit.”

The work of other teams on Titan inspired the development of different kinds of neural networks. In 2016, a team led by Tourassi and current Argonne National Laboratory scientist Arvind Ramanathan used Titan to create convolutional neural networks, which use sliding “windows” to look at chunks of textual data for the analysis of cancer pathology reports. The team eventually moved to hierarchical attention networks for their ability to analyze full sequences of words.

“Humans can do this type of learning because we understand the contextual relationships between words,” Tourassi said. “This is what we’re trying to implement with deep learning.”

Where worlds collide

Over the last 7 years, Titan pushed the boundaries of what is possible with modeling and simulation, having been touted as one of the first large-scale hybrid supercomputers. Researchers modeled everything from exploding supernovae to the decomposition of biomass on the gargantuan machine, and those familiar with it would say Titan’s claim to fame undoubtedly was its role in creating high-fidelity simulations to further scientific understanding.

Titan’s role in the AI movement, however, was unique.

“Titan was new at the time when AI started moving into the high-performance computing space, and it wasn’t altogether architected for these kinds of tasks,” Wells said. “But with Titan, it was all about the GPUs. People were getting backed up at their home institutions waiting to use a small number of GPUs locally, but we had Titan deployed within DOE’s user programs.”

Not only are GPUs what positioned Titan as a powerful tool for larger scale AI problems, but they are also the go-to processors for deep learning today. GPUs, especially those as powerful as the NVIDIA Tesla K20 GPUs that were in Titan, are well-suited for multiple kinds of neural networks because they can execute many deep learning operations simultaneously. In fact, they are what make ORNL’s computing environment so well-suited for AI—especially when combined with the abundant data available at the lab.

“We believe AI will take off and grow faster in an environment like a multiprogram national laboratory,” Wells said. “For some of the data produced at large experimental facilities, we have predictive models that already give a fundamental understanding of the phenomena, whereas in the business world, you don’t always have the benefit of having a good model to use. We believe this alone should encourage experts in AI to collaborate with DOE scientists, resulting in accelerated development of AI techniques and capabilities.”

Machine learning depends upon having a rich amount of data, and data-rich projects often require large, powerful computing resources. This is what made Titan an attractive candidate for AI in the first place.

“Titan was good at computationally intensive tasks—tasks that were compute bound,” Wells said. Compute-bound tasks are dependent on a machine’s processing power, whereas bandwidth-bound tasks are dependent on the size of a machine. “The structure of operations in training a deep learning neural network is often compute bound.”

With Titan’s retirement, new developments in AI applications have already begun with Summit, which contains 27,648 NVIDIA Volta GPUs and 9,216 IBM POWER9 CPUs. One deep learning application has reached exascale performance levels—a billion calculations per second—using mixed precision calculations on Summit.

“With Titan, we had a preliminary amount of convergence in modeling and simulation and data science,” Shankar said. “Modeling and simulation generate data, and data can generate a model. Now in Summit, we have a machine that truly does both.”

Related Publications:

H.-J. Yoon, A. Ramanathan, and G. Tourassi, “Multi-Task Deep Neural Networks for Automated Extraction of Primary Site and Laterality Information from Cancer Pathology Reports.” International Neural Network Society Conference on Big Data. Springer International Publishing, 2016, doi:10.1007/978-3-319-47898-2_21.

H.-J. Yoon, J. X. Qiu, J. B. Christian, J. Hinkle, F. Alamudun, and G. Tourassi, “Selective Information Extraction Strategies for Cancer Pathology Reports with Convolutional Neural Networks.” INNS Big Data and Deep Learning Conference 2019: Recent Advances in Big Data and Deep Learning 1 (2019), doi:10.1007/978-3-030-16841-4_9.

Kates-Harbeck, A. Svyatkovskiy, and W. Tang, “Predicting Disruptive Instabilities in Controlled Fusion Plasmas through Deep Learning.” Nature 568 (2019): 526–531, doi:10.1038/s41586-019-1116-4.

P. Mitchell, G. Bruer, M. E. Dean, J. S. Plank, G. S. Rose, C. D. Schuman, “NeoN: Neuromorphic Control for Autonomous Robotic Navigation.” 2017 5th International Symposium on Robotics and Intelligent Sensors (2017), doi:10.1109/IRIS.2017.8250111.

X. Qiu, H.-J. Yoon, K. Srivastava, T. P. Watson, J. B. Christian, A. Ramanathan, X. C. Wu, P. A. Fearn, and G. D. Tourassi, “Scalable Deep Text Comprehension for Cancer Surveillance on High-Performance Computing.” BMC Bioinformatics 19 (2018): 488, doi:10.1186/s12859-018-2511-9.

Gao, M. T. Young, J. X. Qiu, H.-J. Yoon, J. B. Christian, P. A. Fearn, G. D. Tourassi, and A. Ramanathan, “Hierarchical Attention Networks for Information Extraction from Cancer Pathology Reports.” Journal of the American Medical Informatics Association 25, no. 3 (2017), doi:10.1093/jamia/ocx131.

E. Potok, C. Schuman, S. Young, R. Patton, F. Spedalieri, J. Liu, K.-T. Yao, G. Rose, and G. Chakma, “A Study of Complex Deep Learning Networks on High-Performance, Neuromorphic, and Quantum Computers.” ACM Journal on Emerging Technologies in Computing Systems 14, no. 2 (2018): 19, doi:10.1145/3178454.