ORNL research scientists Steven Young (left) and Travis Johnston (middle) with ORNL data scientist Robert Patton (right). A team led by Patton is in the running for the 2018 ACM Gordon Bell Prize after it used the MENNDL code and the Summit supercomputer to create an artificial neural network that analyzed microscopy data as well as a human expert. Image Credit: Carlos Jones, ORNL

This article is part of a series covering the finalists for the 2018 Gordon Bell Prize that used the Summit supercomputer. The prize winner will be announced at SC18 in November in Dallas.

Using the Oak Ridge Leadership Computing Facility’s (OLCF’s) new leadership-class supercomputer, the IBM AC922 Summit, a team from the US Department of Energy’s (DOE’s) Oak Ridge National Laboratory (ORNL) demonstrated the ability to generate intelligent software that could revolutionize how scientists manipulate materials at the atomic scale.

The team’s code, called Multinode Evolutionary Neural Networks for Deep Learning (MENNDL), reached a sustained performance of 152.5 petaflops using mixed precision calculations. Consequently, the project earned a finalist nomination for the Association for Computing Machinery’s Gordon Bell Prize, awarded each year to recognize outstanding achievement in high-performance computing.

MENNDL automatically creates artificial neural networks—computational systems that loosely mimic the human brain—that can tease important information out of scientific datasets. The algorithm has been applied successfully to medical research, satellite images, high-energy physics data, and neutrino research.

A team led by ORNL’s Robert Patton recently used MENNDL and the OLCF’s Summit supercomputer to automatically create a deep-learning network specifically tuned for data produced by advanced microscopes called scanning transmission electron microscopes (STEMs). The resulting network is capable of reducing the human effort needed to analyze STEM images from months to hours.

Such a significant reduction could have huge implications. With advanced microscopes capable of producing hundreds of images per day, real-time feedback from optimized algorithms generated by MENNDL could dramatically accelerate image processing and pave the way for new scientific discoveries in materials science, among other domains. The technology could eventually mature to the point where scientists gain the ability to fabricate materials at the atomic level.

“What we’re essentially doing is going through a big open field trying to find these golden nuggets,” Patton said. “Running MENNDL on Summit helps us increase our search space so that we can cover thousands of these networks at the same time. It helps us munch through the data faster.”

A network for the nanoscale

Mapping the insides of solar cells, semiconductors, batteries, and biological cells requires a big microscope, and not just any microscope. STEMs are specialized for these kinds of tasks, and their ability to zero in on the nanoscale and atomic-scale structure of materials can help scientists explore those materials’ properties and behaviors.

To get a look at materials on the atomic scale, STEMs employ a beam of negatively charged particles called electrons that pass through a sample and form images of that sample. Electrons that lose energy as they pass through provide information about the structure of the material. This sensitivity makes STEMs useful for research involving materials such as the semi-metal graphene and in fields such as quantum computing.

The capabilities of STEMs far surpass those of optical microscopes, but understanding and analyzing defects at the nanoscale can be a challenge. Scientists have a difficult time deciphering microscopy images with high levels of noise or missing structural elements, and they have yet to figure out how to automatically extract structural information from these images. MENNDL, though, holds promise for such tasks.

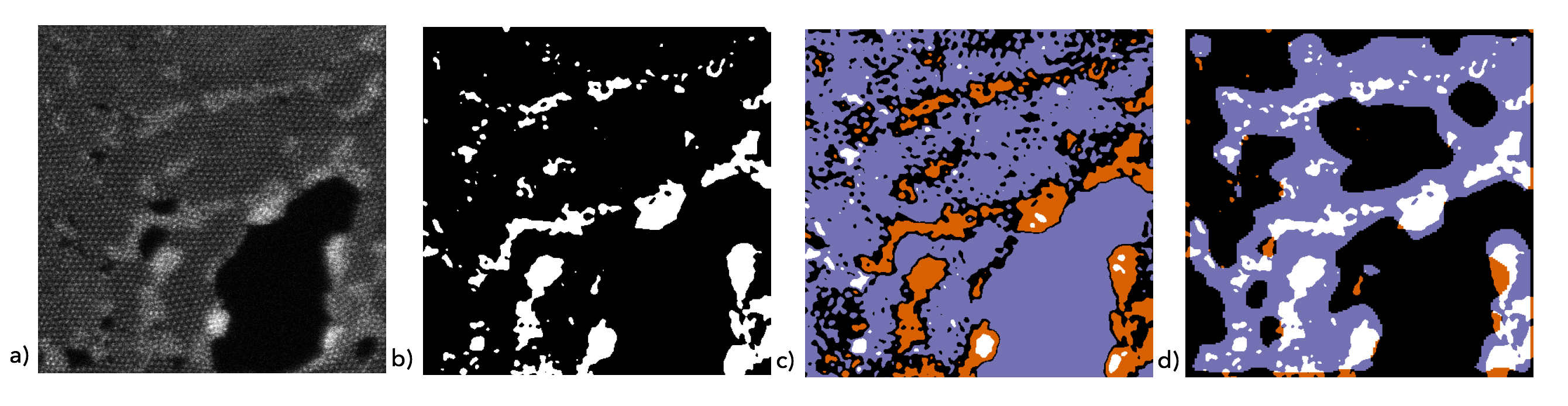

Using MENNDL, the team trained a network to recognize the microscopic defects in one frame of a STEM “movie,” a series of high-resolution images the microscope produces in succession. In this case, the movie showed defects in a single layer of molybdenum-doped tungsten disulphide—a 2D material that has applications in solar cells—under 100 kV electron beam irradiation.

The team took the single frame and divided it into tiles. Then, the network was trained using the tiles as examples of which patterns to look for. Because electron microscopy images contain many repeating elements, dividing an image into tiles offers the network a number of training examples while limiting redundant examples that can eat up computational time. This helps the network more efficiently classify the pixels in the whole image.
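As a rough illustration of the tiling step, the sketch below splits a 2D image into non-overlapping square tiles. The function name `split_into_tiles`, the tile size, and the choice to drop partial tiles at the edges are assumptions made for this example; the article does not describe how the team actually tiled the frame.

```python
def split_into_tiles(image, tile_size):
    """Split a 2D image (a list of equal-length rows) into square tiles.

    Tiles do not overlap. Partial tiles at the right and bottom edges are
    dropped, which is an assumption made for this sketch.
    """
    height = len(image)
    width = len(image[0]) if height else 0
    tiles = []
    for top in range(0, height - tile_size + 1, tile_size):
        for left in range(0, width - tile_size + 1, tile_size):
            tile = [row[left:left + tile_size] for row in image[top:top + tile_size]]
            tiles.append(tile)
    return tiles


# Toy 4x6 "image" whose pixel values are simply their flat index.
image = [[r * 6 + c for c in range(6)] for r in range(4)]
tiles = split_into_tiles(image, 2)
print(len(tiles))  # 6 (2 rows of tiles x 3 columns of tiles)
print(tiles[0])    # [[0, 1], [6, 7]]
```

Each tile can then serve as one training example, so a single frame yields many examples.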

MENNDL employs an algorithm to find the network with the most suitable topology (number and type of layers) for classifying these pixels, based on the performance of previously evolved networks.

“Think of a neural network like a sandwich,” Patton said. “You have to figure out which layers are stacked in what order, and different sandwiches are going to have different layers. That’s our biggest struggle in deep learning right now—when you come to a new dataset that no one has touched before, you don’t know what those layers should be.”

As an evolutionary algorithm, MENNDL builds on the “survival of the fittest” principle, in that the neural networks are evolving to “survive.” Initially, an almost infinite number of parameter combinations are possible, but not all of them produce networks that are good at the assigned task. Networks that are better at correctly identifying defects in the data may be recombined to produce new networks that have an increasing chance of being correct as they evolve, whereas the poor performers are excluded from future generations of networks.
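The general idea can be sketched as a small generational search. This is not MENNDL’s code: the search space, the `toy_fitness` scoring function (a stand-in for training and evaluating a real network), and all function names are hypothetical and chosen only to show selection, recombination, and mutation.

```python
import random

# Hypothetical search space; these ranges are invented for the sketch.
SEARCH_SPACE = {
    "kernel_size": [1, 3, 5, 7],
    "stride": [1, 2, 3],
    "num_layers": [2, 4, 6, 8],
}


def random_network(rng):
    """Draw one candidate network description at random."""
    return {name: rng.choice(values) for name, values in SEARCH_SPACE.items()}


def toy_fitness(network):
    """Stand-in for training and scoring a network (higher is better).

    The score peaks at an arbitrary target configuration.
    """
    target = {"kernel_size": 5, "stride": 1, "num_layers": 6}
    return -sum(abs(network[name] - target[name]) for name in target)


def crossover(parent_a, parent_b, rng):
    """Build a child by taking each parameter from one parent or the other."""
    return {name: rng.choice([parent_a[name], parent_b[name]]) for name in SEARCH_SPACE}


def mutate(network, rng, rate=0.2):
    """Resample each parameter at random with probability `rate`."""
    return {
        name: rng.choice(SEARCH_SPACE[name]) if rng.random() < rate else value
        for name, value in network.items()
    }


def evolve(generations=10, population_size=20, survivors=5, seed=0):
    """Run the search; return the best network and the best score per generation."""
    rng = random.Random(seed)
    population = [random_network(rng) for _ in range(population_size)]
    best_per_generation = []
    for _ in range(generations):
        ranked = sorted(population, key=toy_fitness, reverse=True)
        best_per_generation.append(toy_fitness(ranked[0]))
        parents = ranked[:survivors]  # poor performers are dropped here
        children = [
            mutate(crossover(rng.choice(parents), rng.choice(parents), rng), rng)
            for _ in range(population_size - survivors)
        ]
        population = parents + children  # survivors carry over unchanged
    return max(population, key=toy_fitness), best_per_generation


best, history = evolve()
print(best, history)
```

Because the survivors carry over unchanged, the best score in this sketch never decreases from one generation to the next.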

Some of the parameters include the size of the kernels (the filters that scan the data for patterns) and the stride, the step by which a filter shifts across the data. A smaller stride makes successive filter positions overlap more, so the filters comb more meticulously through the data.
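To make the interplay of kernel size and stride concrete, here is a minimal sketch using the standard formula for an unpadded convolution along one axis. The function names and the 32-pixel input are illustrative assumptions, not values from the team’s networks.

```python
def conv_output_length(input_length, kernel_size, stride):
    """Number of positions a filter visits along one axis, with no padding."""
    if kernel_size > input_length:
        return 0
    return (input_length - kernel_size) // stride + 1


def overlap_between_positions(kernel_size, stride):
    """Pixels shared by two consecutive filter positions along one axis."""
    return max(kernel_size - stride, 0)


# A 3-pixel-wide filter sliding over 32 pixels at different strides.
for stride in (1, 2, 3):
    print(stride, conv_output_length(32, 3, stride), overlap_between_positions(3, stride))
# 1 30 2
# 2 15 1
# 3 10 0
```

A smaller stride visits more positions and shares more pixels between neighboring positions, at a higher computational cost.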

MENNDL analyzed more than 2 million networks over the course of the code’s 4-hour run and eventually provided the team with a rough sketch of a network design that would perform at least as well as a human domain expert. The team used this final, superior network to analyze two subsequent frames in the movie and confirmed the network’s ability to detect the defects.

Pictured: the same image analyzed using different methods. a) Raw electron microscopy image. b) Image showing defects (white) as labeled by a human expert. c) Image showing defects (white) as labeled by a Fourier transform method, which breaks up an image into its frequency spectrum and requires manual tuning. d) Image showing defects (white) as labeled by the optimal neural network. False positives (labeled defects that don’t exist) are shown in purple, and false negatives (defects that weren’t identified) are shown in orange. In mere hours, the team created a neural network that performed as well as a human expert, demonstrating MENNDL’s ability to reduce the time to analyze electron microscopy images by months. Image Credit: ORNL
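The kind of comparison shown in the figure can be sketched as a pixel-by-pixel tally of two binary defect masks. The function `compare_masks` and the tiny 3x3 masks are made up for illustration; the article does not say how the team scored its networks.

```python
def compare_masks(expert, predicted):
    """Count agreement between two binary defect masks of equal shape."""
    counts = {"true_positive": 0, "false_positive": 0, "false_negative": 0}
    for expert_row, predicted_row in zip(expert, predicted):
        for truth, guess in zip(expert_row, predicted_row):
            if truth and guess:
                counts["true_positive"] += 1
            elif guess:
                counts["false_positive"] += 1  # a labeled defect that doesn't exist
            elif truth:
                counts["false_negative"] += 1  # a real defect that was missed
    return counts


# 1 marks a defect pixel, 0 marks background.
expert = [[0, 1, 0], [0, 1, 0], [0, 0, 0]]
predicted = [[0, 1, 0], [0, 0, 1], [0, 0, 0]]
counts = compare_masks(expert, predicted)
print(counts)  # {'true_positive': 1, 'false_positive': 1, 'false_negative': 1}
```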

“This is data that had not been looked at before,” Patton said. “The materials scientists whose data we used were previously doing what many others in the deep learning community are currently doing: trying to manually design a neural network. That’s where we came in.”

Populating all of Summit

Not only does MENNDL parallelize well on large HPC architectures, but it is also designed to keep a rolling population of neural networks on the machine.

“Each GPU evaluates one network at a time, but as soon as a GPU is done, we hand it another network,” Patton said. “The algorithm waits until it has a sufficient number of results back and then runs this evolutionary process where it combines some of these networks and throws out others.”
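The scheduling pattern Patton describes can be sketched with a worker pool in which threads stand in for GPUs. This is an illustration only: `evaluate`, `propose`, the batch size, and the one-parameter “network” are hypothetical, and the evolutionary step is reduced to keeping the best results so far.

```python
import random
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait


def evaluate(network):
    """Stand-in for training one network on one GPU; returns (network, score)."""
    return network, -abs(network["kernel_size"] - 5)


def propose(rng, parents):
    """Next candidate: a mutated parent if any exist, otherwise a random one."""
    if not parents:
        return {"kernel_size": rng.choice([1, 3, 5, 7, 9])}
    parent, _score = rng.choice(parents)
    step = rng.choice([-2, 0, 2])
    return {"kernel_size": min(9, max(1, parent["kernel_size"] + step))}


def rolling_search(num_workers=4, total_evaluations=40, batch_size=8, keep=3, seed=0):
    rng = random.Random(seed)
    parents = []  # best (network, score) pairs kept so far
    results = []  # finished evaluations awaiting the next evolutionary step
    submitted = 0
    completed = 0
    with ThreadPoolExecutor(max_workers=num_workers) as executor:
        pending = set()
        # Give every worker one network to start with.
        for _ in range(min(num_workers, total_evaluations)):
            pending.add(executor.submit(evaluate, propose(rng, parents)))
            submitted += 1
        while pending:
            done, pending = wait(pending, return_when=FIRST_COMPLETED)
            for future in done:
                results.append(future.result())
                completed += 1
                # As soon as a worker is free, hand it another network.
                if submitted < total_evaluations:
                    pending.add(executor.submit(evaluate, propose(rng, parents)))
                    submitted += 1
            # Once enough results are back, keep the best and discard the rest.
            if len(results) >= batch_size:
                ranked = sorted(parents + results, key=lambda pair: pair[1], reverse=True)
                parents, results = ranked[:keep], []
    ranked = sorted(parents + results, key=lambda pair: pair[1], reverse=True)
    return completed, ranked[:keep]


completed, best = rolling_search()
print(completed, best)
```

The point of the pattern is that no worker waits for a whole generation to finish before it starts on its next network.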

MENNDL also uses Summit’s burst buffer memory, which allows for the storage of data locally—on the nodes themselves—rather than on the file system. This minimizes data transfer and enhances code performance by increasing GPU utilization.

In 2017, MENNDL ran for 24 hours and analyzed two networks per GPU per hour on the OLCF’s 27-petaflop Cray XK7 Titan supercomputer. On Summit, MENNDL analyzed approximately 37 networks per GPU per hour. The team estimates that the code will achieve a peak performance of 167 petaflops, or 167 quadrillion calculations per second, running on all 27,648 of Summit’s NVIDIA V100 GPUs.
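The figures quoted in this article support a quick back-of-the-envelope comparison. All inputs below come from the article; the full-machine throughput line assumes the per-GPU rate holds on every GPU, which the article does not state.

```python
titan_rate = 2                 # networks per GPU per hour on Titan
summit_rate = 37               # networks per GPU per hour on Summit
summit_gpus = 27_648           # NVIDIA V100 GPUs on Summit
sustained_pflops = 152.5       # sustained mixed-precision performance
estimated_peak_pflops = 167    # team's estimated peak performance

per_gpu_speedup = summit_rate / titan_rate
sustained_fraction = sustained_pflops / estimated_peak_pflops
# Assumption: every GPU sustains the quoted per-GPU rate.
networks_per_hour_full_machine = summit_rate * summit_gpus

print(f"{per_gpu_speedup:.1f}x")              # 18.5x
print(f"{sustained_fraction:.1%}")            # 91.3%
print(f"{networks_per_hour_full_machine:,}")  # 1,022,976
```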

“There were some unknowns, in regard to running on such a new system. It’s like test driving a prototype car on a racetrack, in a sense,” Patton said. “But running on a machine that hasn’t even been accepted yet and getting this kind of result in the end—it’s pretty stunning.”

Now that MENNDL has scaled to Summit, the team members will continue to apply the algorithm to other scientific domains and expand on their work in microscopy.

“We are going to be able to do things on Summit in minutes that would have taken hours on Titan,” Patton said. “Tools like MENNDL give experimentalists hope, because the astronomical amounts of data they’ve generated are now potentially useful to discover something new.”

The OLCF is a DOE Office of Science User Facility located at ORNL.

This material is based upon work supported by the US Department of Energy, Office of Science, Office of Advanced Scientific Computing Research, Robinson Pino, program manager, under contract number DE-AC05-00OR22725.

This research used resources of the OLCF, which is a DOE Office of Science User Facility supported under Contract DE-AC05-00OR22725.

ORNL is managed by UT-Battelle for the Department of Energy’s Office of Science, the single largest supporter of basic research in the physical sciences in the United States. DOE’s Office of Science is working to address some of the most pressing challenges of our time. For more information, please visit https://science.energy.gov.