This article is part of a series covering the finalists for the 2018 Gordon Bell Prize that used the Summit supercomputer. The prize winner will be announced at SC18 in November in Dallas.

Contact: Kathy Kincade, [email protected], +1 510 495 2124

A team of computational scientists from Lawrence Berkeley National Laboratory (Berkeley Lab) and Oak Ridge National Laboratory (ORNL) and engineers from NVIDIA has, for the first time, demonstrated an exascale-class deep learning application that has broken the exaop barrier.

Members of the Gordon Bell team from Berkeley Lab, left to right: Prabhat, Thorsten Kurth, Mayur Mudigonda, Ankur Mahesh and Jack Deslippe. Image: Marilyn Chung, Berkeley Lab

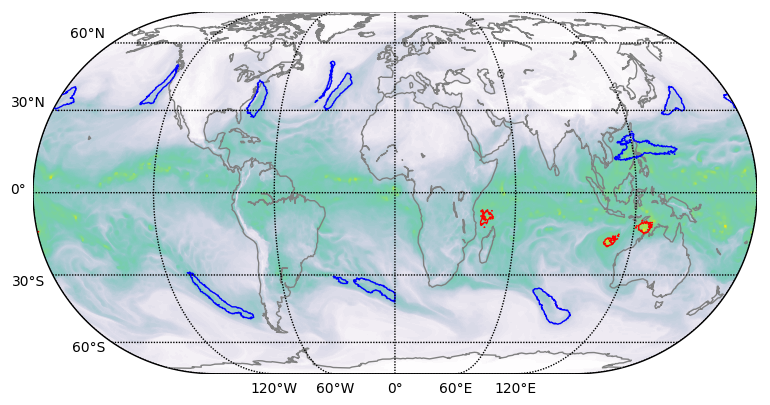

Using a climate dataset from Berkeley Lab on ORNL’s Summit system at the Oak Ridge Leadership Computing Facility (OLCF), they trained a deep neural network to identify extreme weather patterns from high-resolution climate simulations. Summit is an IBM Power Systems AC922 supercomputer powered by more than 9,000 IBM POWER9 CPUs and 27,000 NVIDIA® Tesla® V100 Tensor Core GPUs. By tapping into the specialized NVIDIA Tensor Cores built into the GPUs at scale, the researchers achieved a peak performance of 1.13 exaops and a sustained performance of 0.999 exaops, the fastest deep learning algorithm reported to date and an achievement that earned them a spot on this year’s list of finalists for the Gordon Bell Prize.

“This collaboration has produced a number of unique accomplishments,” said Prabhat, who leads the Data & Analytics Services team at Berkeley Lab’s National Energy Research Scientific Computing Center and is a co-author on the Gordon Bell submission. “It is the first example of deep learning architecture that has been able to solve segmentation problems in climate science, and in the field of deep learning, it is the first example of a real application that has broken the exascale barrier.”

Three members of the Gordon Bell team from NVIDIA, left to right: Joshua Romero, Mike Houston and Sean Treichler.

These achievements were made possible through an innovative blend of hardware and software capabilities. On the hardware side, Summit was designed to deliver 200 petaflops of high-precision computing performance and was recently named the fastest computer in the world; it is capable of more than three exaops (3 billion billion calculations per second). The system features a hybrid architecture: each of its 4,608 compute nodes contains two IBM POWER9 CPUs and six NVIDIA Volta Tensor Core GPUs, all connected via the NVIDIA NVLink™ high-speed interconnect. The NVIDIA GPUs are a key factor in Summit’s performance, enabling up to 12 times higher peak teraflops for training and 6 times higher peak teraflops for inference in deep learning applications compared to their predecessor, the Tesla P100.
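For readers who want to check the arithmetic behind these figures, the short Python sketch below multiplies out the per-node counts quoted above and compares the reported sustained and peak exaops. The function and constant names are illustrative only and are not taken from the team's code; the numbers are the ones quoted in this article, with the sustained figure assumed to be in exaops.

```python
# Figures quoted in the article (names are illustrative, not from the team's code).
NODES = 4608            # Summit compute nodes
CPUS_PER_NODE = 2       # IBM POWER9 CPUs per node
GPUS_PER_NODE = 6       # NVIDIA Volta GPUs per node
PEAK_EXAOPS = 1.13      # reported peak performance
SUSTAINED_EXAOPS = 0.999  # reported sustained performance (assumed to be in exaops)


def summit_totals(nodes=NODES, cpus_per_node=CPUS_PER_NODE, gpus_per_node=GPUS_PER_NODE):
    """Return the total (CPU, GPU) counts implied by the per-node figures."""
    return nodes * cpus_per_node, nodes * gpus_per_node


def sustained_fraction(sustained=SUSTAINED_EXAOPS, peak=PEAK_EXAOPS):
    """Return sustained performance as a fraction of peak performance."""
    return sustained / peak


if __name__ == "__main__":
    cpus, gpus = summit_totals()
    print(f"Total CPUs: {cpus:,}")
    print(f"Total GPUs: {gpus:,}")
    print(f"Sustained / peak: {sustained_fraction():.1%}")
```

The totals come out to 9,216 CPUs and 27,648 GPUs, consistent with the "more than 9,000" and "27,000" figures cited earlier in the article, and the sustained figure is roughly 88 percent of the reported peak.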

+ Read the full story: https://www.nersc.gov/news-publications/nersc-news/science-news/2018/berkeley-lab-oak-ridge-nvidia-team-breaks-exaop-barrier-with-deep-learning-application/