In 2012 approximately 14 million new cases of cancer were reported worldwide, and the National Cancer Institute (NCI) predicts that number will rise to 22 million by 2032. Each additional case generates new pathology reports, documents that describe details such as the cancer's location and grade (a measure of how abnormal the tumor's cells and tissue organization look). To track cancer at the population level, cancer registries depend on annotated versions of these reports.

To help cancer registries keep up with reports of cancer incidence, a research team at the US Department of Energy's (DOE's) Oak Ridge National Laboratory (ORNL) is experimenting with artificial neural networks, computing systems loosely inspired by the networks of neurons in the human brain. The team is currently working with a new kind of artificial neural network that was originally designed to classify restaurant and movie reviews.

This new network, called a hierarchical attention network (HAN), outperforms other artificial neural networks tested by ORNL for this purpose because it analyzes sequences of words rather than simply searching for individual words in a document.
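To see why word order matters, consider two hypothetical report fragments that contain exactly the same words in a different order. The Python sketch below is only an illustration, not part of the ORNL models: it compares a bag-of-words representation, which just counts words, with a set of adjacent word pairs, which keeps a little local order. The fragments and function names are made up for this example.

```python
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Count each lowercase token, discarding word order entirely."""
    return Counter(text.lower().split())


def bigrams(text: str) -> set[tuple[str, str]]:
    """Collect adjacent word pairs, a minimal way to keep some local order."""
    tokens = text.lower().split()
    return set(zip(tokens, tokens[1:]))


# Two hypothetical report fragments built from exactly the same words.
a = "left breast positive right breast negative"
b = "left breast negative right breast positive"

print(bag_of_words(a) == bag_of_words(b))  # True: word counts cannot tell them apart
print(bigrams(a) == bigrams(b))            # False: word order distinguishes them
```

A model that only looks for individual words sees these fragments as identical, even though they report findings on opposite sides. Sequence models such as the HAN go much further than word pairs, but the example shows the information they can exploit.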

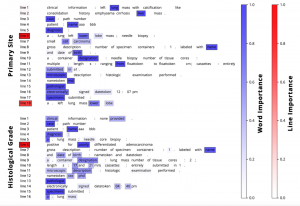

HAN annotations on a sample pathology report. The most important words in each line are highlighted in blue, and the most important lines in red. For each task, the HAN successfully locates the specific lines that identify the primary site or grade of the cancer.

“HANs can capture relationships between words, identifying which sequences are relevant to a particular task,” said Arvind Ramanathan, a member of ORNL’s Biomedical Sciences, Engineering, and Computing (BSEC) Group and ORNL’s technical lead for the CANcer Distributed Learning Environment (CANDLE) project, a joint initiative between DOE and NCI aimed at accelerating the fight against cancer.

Led by Georgia Tourassi, BSEC group leader, ORNL's CANDLE pilot project focuses on using deep learning, a machine learning technique that loosely mimics the way the human brain assimilates information, to extract information from large cancer datasets. Within this project, Ramanathan's team focuses on developing artificial neural networks.

In 2016 the team used the Cray XK7 Titan supercomputer at the Oak Ridge Leadership Computing Facility (OLCF), a DOE Office of Science User Facility at ORNL, to develop its first network, a convolutional neural network (CNN). CNNs are widely used in image recognition because they slide a small "window" across the input to examine tiny chunks of information at a time. These networks, however, largely ignore the relative positions of words in a text, which limits their ability to process sequential information, where a word's importance depends on the words around it.
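A minimal sketch of the sliding window, assuming a common text-CNN design that follows the convolution with max-over-time pooling (not necessarily the exact architecture of the ORNL team's model). For readability each word is reduced to a single made-up number; real models use vectors of learned word embeddings, and the kernel values here are hypothetical.

```python
def conv1d(sequence, kernel):
    """Slide a fixed-width window along the sequence, taking a dot product at each position."""
    width = len(kernel)
    return [
        sum(x * k for x, k in zip(sequence[i:i + width], kernel))
        for i in range(len(sequence) - width + 1)
    ]


def max_pool(feature_map):
    """Keep only the strongest response, discarding where in the sequence it occurred."""
    return max(feature_map)


kernel = [1, 2, 1]              # hypothetical learned pattern detector
seq_a = [1, 2, 1, 0, 0, 0]      # pattern at the start of the "document"
seq_b = [0, 0, 0, 1, 2, 1]      # same pattern at the end

print(conv1d(seq_a, kernel))    # [6, 4, 1, 0]
print(conv1d(seq_b, kernel))    # [0, 1, 4, 6]
print(max_pool(conv1d(seq_a, kernel)) == max_pool(conv1d(seq_b, kernel)))  # True
```

Both feature maps contain the same peak, so the pooled outputs are identical even though the pattern sits at opposite ends of the sequence: the position information is gone before the network makes its prediction.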

Because the same term may appear several times in a document, the words surrounding each occurrence can provide clues about which mention matters. The team turned to HANs because they capture this locational context, which CNNs miss.

“We want to enable automatic text understanding,” Ramanathan said. “Text understanding goes beyond just information extraction—it’s about understanding the context and linguistic patterns in word sequences.”

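HANs address this by first breaking long documents into smaller chunks, such as sentences or lines, and then running a recurrent neural network (RNN), a type of network that reads a sequence one element at a time while carrying forward what it has already read, over each chunk. The process resembles a human reading a textbook and taking notes at the end of each chapter. A final RNN then processes all the "notes" to generate a single label for the entire document. At both levels, an attention mechanism gives higher weight to the words and lines most relevant to the task, which is what the highlighting in the figure above shows.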
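The two-level reading process can be sketched as a toy forward pass in plain Python. This is an illustration under stated assumptions, not the ORNL implementation: the weights are hand-set rather than trained, the recurrent layers are simple one-directional RNNs (the original HAN design uses bidirectional gated recurrent units and a learned tanh layer before the attention scores), and all parameter names and values are hypothetical. As in the original HAN design, one word-level RNN is shared across all lines.

```python
import math


def matvec(m, v):
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def softmax(scores):
    """Convert raw scores into positive weights that sum to 1."""
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def rnn(inputs, w_x, w_h):
    """Simple one-directional RNN: each state mixes the current input with the previous state."""
    h = [0.0] * len(w_h)
    states = []
    for x in inputs:
        h = [math.tanh(a + b) for a, b in zip(matvec(w_x, x), matvec(w_h, h))]
        states.append(h)
    return states


def attend(states, context):
    """Attention pooling: score each state against a context vector, then take a weighted average."""
    weights = softmax([sum(s * c for s, c in zip(h, context)) for h in states])
    pooled = [sum(w * h[d] for w, h in zip(weights, states)) for d in range(len(states[0]))]
    return pooled, weights


def han_forward(document, p):
    """Words -> one vector per line (the "notes"), then lines -> document vector -> class probabilities."""
    line_vectors = []
    for line in document:
        # The same word-level RNN and context vector are reused for every line.
        states = rnn(line, p["word_wx"], p["word_wh"])
        line_vec, _ = attend(states, p["word_context"])
        line_vectors.append(line_vec)
    line_states = rnn(line_vectors, p["line_wx"], p["line_wh"])
    doc_vec, line_weights = attend(line_states, p["line_context"])
    return softmax(matvec(p["classifier"], doc_vec)), line_weights


# Hypothetical hand-set parameters; a real model learns these during training.
identity = [[1.0, 0.0], [0.0, 1.0]]
decay = [[0.5, 0.0], [0.0, 0.5]]
params = {
    "word_wx": identity, "word_wh": decay, "word_context": [1.0, 0.0],
    "line_wx": identity, "line_wh": decay, "line_context": [1.0, 0.0],
    "classifier": [[2.0, 0.0], [-2.0, 0.0]],  # two hypothetical output classes
}

# A toy "report": each line is a list of made-up 2-D word vectors.
document = [
    [[0.1, 0.2], [0.0, 0.1]],
    [[0.9, 0.0], [0.8, 0.1], [0.2, 0.0]],
]
probs, line_weights = han_forward(document, params)
print(probs, line_weights)
```

In this toy run the second line's summary aligns more closely with the line-level context vector, so it receives the larger attention weight, a rough analogue of a line highlighted in red in the figure above.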

The team trained its HAN algorithm on multiple GPU-accelerated systems at the OLCF—Summitdev, the early access system for the Summit supercomputer, the OLCF’s next leadership-class supercomputer; Titan, the current flagship machine; and the DGX-1 deep learning system. When applied to two separate information extraction tasks, the HAN model determined both the site and grade of breast and lung cancers noted in 2,505 pathology reports with slightly better accuracy than the team’s previous CNN.

Although the HAN achieved between 80 and 90 percent accuracy on both tasks in the study, the team said its usefulness will depend on the application.

“HANs take slightly more time to train than CNNs, but they are also more accurate,” said Shang Gao, a team member and graduate student at the Bredesen Center for Interdisciplinary Research and Graduate Education, a collaboration between ORNL and the University of Tennessee. “We are applying this to a critical health problem, so we value accuracy over faster results.”

The team is currently exploring hierarchical convolutional attention networks, or HCANs, which have shown accuracy comparable to HANs while requiring only half the time to train. The team will soon acquire a more robust training dataset, approximately 100 times larger than those used in previous efforts, which will create more opportunities to deploy the networks at scale.

“Understanding context and the ways in which text is being presented are challenging problems that will require training these deep learning models on a very smart machine,” Ramanathan said.

Ramanathan and his team look forward to advancing their work on the deep learning–optimized Summit when it comes online. With more than 27,000 NVIDIA Volta GPUs, Summit is set to provide scientists with unprecedented potential for artificial intelligence and deep learning research.

“Our project is going to require the computing power of Summit,” Ramanathan said. “Problems like this are what will make Summit truly ‘smart.’”

Related Publication: S. Gao, M. T. Young, J. X. Qiu, H.-J. Yoon, J. B. Christian, P. A. Fearn, G. D. Tourassi, and A. Ramanathan, “Hierarchical Attention Networks for Information Extraction from Cancer Pathology Reports,” Journal of the American Medical Informatics Association (2017). doi:10.1093/jamia/ocx131.

ORNL is managed by UT–Battelle for the Department of Energy’s Office of Science, the single largest supporter of basic research in the physical sciences in the United States. DOE’s Office of Science is working to address some of the most pressing challenges of our time. For more information, please visit https://science.energy.gov/.