A multi-institution team is celebrating the latest software release that supports the use of distributed node-local storage devices for high-performance computing (HPC) applications. The software is an important piece of the hierarchical storage system required on modern, large-scale HPC systems and offers a unified shared namespace over distributed storage resources for the fastest supercomputers in the world.

UnifyFS is a client-level file system that makes individual, node-local devices appear as a parallel file system to an HPC application. The software is designed to enhance the performance of shared parallel file systems by maximizing the use of node-local storage as part of the I/O system. UnifyFS is being developed as part of the Exascale Computing Project’s (ECP’s) ExaIO project. UnifyFS software development is led by senior researchers Kathryn Mohror from Lawrence Livermore National Laboratory (LLNL) and Sarp Oral from Oak Ridge National Laboratory (ORNL). The ORNL team includes technical lead Michael Brim and software developer Ross Miller from the National Center for Computational Sciences (NCCS), as well as software developer Seung-Hwan Lim and computer science researcher Swen Boehm from the Computer Science and Mathematics Division.

HPC has traditionally been supported by parallel file systems, which allow large amounts of data to be shared and retrieved from multiple storage targets simultaneously. These systems have been critical to the speed of modern HPC, but they can become bogged down by demands from multiple jobs. UnifyFS addresses this by creating a shared namespace for the local storage that is connected to individual compute nodes, allowing an application to read and write data in the same way it would to a parallel file system.

“The key benefit of UnifyFS is that users can take advantage of the linear scalability provided by the node-local storage devices as the size of a job grows, while maintaining a shared namespace that HPC applications are used to, and without the detrimental side effects of a global parallel file system and concurrent workloads, which can cause performance disruptions,” explains Brim.

The challenge of using node-local storage was identified some time ago, explains Oral, acting head of the Advanced Technologies Section in NCCS, and both ORNL and LLNL had been trying to find a solution to this problem independently. The labs started collaborating in 2017 through ECP.

“There have been efforts to overcome this problem, and it was only logical to combine our efforts, because we were doing something very similar. We are providing a unified view and building in the software over distributed node-local devices,” says Oral.

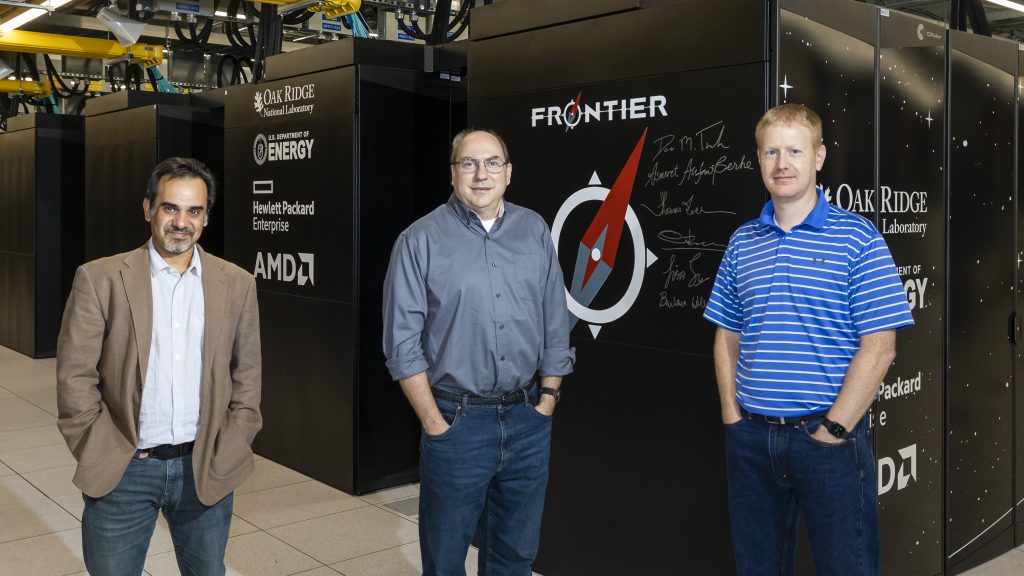

Sarp Oral, Ross Miller, and Mike Brim stand in front of the Frontier supercomputer at ORNL. They are part of the multi-institution team developing UnifyFS. Photo credit: Carlos Jones / ORNL

Throughout the project, ORNL and LLNL have focused on creating a new software design that identifies the common elements of each lab’s prior work and integrates them. ORNL has spearheaded much of the technical design and software development, including recent feature development. LLNL has led work to create and deploy a robust testing infrastructure and to ensure UnifyFS works well with other I/O middleware, such as HDF5 and the ROMIO implementation of MPI‑IO.

“When it comes to productizing and taking something from a research project to production quality code, you need that testing infrastructure, and LLNL has done a lot of work on that,” says Miller.

“It is amazing to see how far UnifyFS has come over the course of the ECP. We started with prototype code that demonstrated the concept but was not at all robust and had limited features,” says Mohror. “Our team worked hard to transform and harden the prototype into UnifyFS 1.0, which today supports multiple systems and I/O libraries and makes using node-local storage quite easy for applications.”

The new UnifyFS release, version 1.0, is the first significant update in more than a year and includes several improvements over previous versions: more scalable metadata management, better handling of file staging before and after an application run, enhanced user controls for managing data and data synchronization, a new library application programming interface (API), and integrated testing and support for I/O middleware libraries.

“UnifyFS and the larger-scale ExaIO are software technology projects. The end goal for all of them is to deliver usable software artifacts on the exascale systems,” says Oral.

UnifyFS has already been tested on the IBM AC922 Summit supercomputer at ORNL’s Oak Ridge Leadership Computing Facility (OLCF), a US Department of Energy Office of Science user facility, and version 1.0 will soon be installed and available to a wider user base. Its simple design means users need only write files to UnifyFS to benefit from the node-local storage. The team has also been working on scaling UnifyFS for Frontier, the HPE Cray EX supercomputer that became the world’s fastest computer in May 2022. Testing on Crusher, the Frontier test system, has shown promising results.

“The node-local storage architectures on Summit and Frontier are very similar,” says Brim, “and all indications from Crusher are that we’ll see the same benefits that we are seeing with Summit.”

Support for this research came from the Exascale Computing Project, a collaborative effort of the DOE Office of Science and the National Nuclear Security Administration, and the DOE Office of Science’s Advanced Scientific Computing Research program. The OLCF is a DOE Office of Science user facility.

UT-Battelle LLC manages ORNL for the Department of Energy’s Office of Science, the single largest supporter of basic research in the physical sciences in the United States. The Office of Science is working to address some of the most pressing challenges of our time. For more information, please visit https://energy.gov/science.