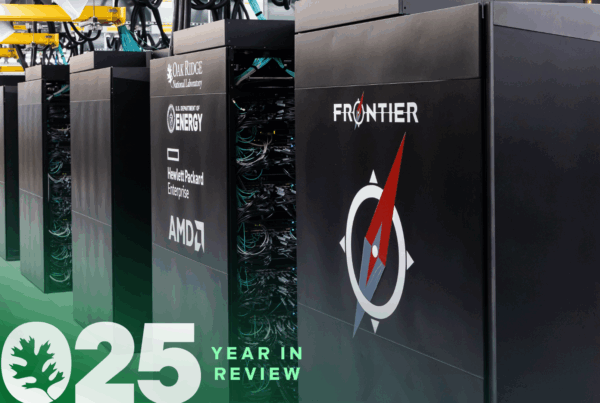

A newly enhanced I/O subsystem will support the nation’s first exascale supercomputer and the Oak Ridge Leadership Computing Facility (OLCF), a US Department of Energy high-performance computing user facility.

The OLCF announced storage specifications for its pioneering HPE Cray Frontier supercomputer, an exascale-class system set to power up by year’s end. Exascale computing is expected to reach top speeds of 1 quintillion (10¹⁸, or a billion billion) calculations per second. That computational might promises to enable breakthrough discoveries across the scientific spectrum, from the basics of building better nuclear reactors to insights into the origins of the universe, when Frontier opens to full user operations in 2022.

The I/O subsystem will consist of two major components: an in-system storage layer and a center-wide file system. The center-wide file system, called Orion, will use open-source Lustre and ZFS technologies.

“To the best of our knowledge, Orion will be the largest and fastest single file POSIX namespace file system in the world,” said Sarp Oral, Input/Output Working Group lead for Frontier and the Technology Integration Group leader for the National Center for Computational Sciences Division at the OLCF. “This system marks the fourth generation in the OLCF’s long history of developing and deploying large-scale, center-wide file systems to power exploration of grand-challenge scientific problems.”

Frontier’s arrival brings the new Orion file system to the OLCF, home to Summit, the nation’s fastest supercomputer, and to predecessors Titan and Jaguar. Orion employs Lustre, an open-source parallel file system that supported Titan and Jaguar and continues to support high-performance computing systems around the world.

Orion will employ Lustre technologies that include distributed name space, data on metadata, and progressive file layouts. Orion will comprise three tiers:

- a flash-based performance tier of 5,400 nonvolatile memory express (NVMe) devices providing 11.5 petabytes (PB) of capacity at peak read-write speeds of 10 TBps with more than 2 million random-read IOPS;

- a hard-disk-based capacity tier of 47,700 perpendicular magnetic recording devices providing 679 PB of capacity at peak read speeds of 5.5 TBps and peak write speeds of 4.6 TBps with more than 2 million random-read IOPS; and

- a flash-based metadata tier of 480 NVMe devices providing an additional capacity of 10 PB.
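As a back-of-the-envelope check, the tier figures above can be tabulated to show how capacity is split and what each device contributes on average. This is only a sketch: the numbers come from the list above, the names (`Tier`, `per_device_tb`) are illustrative, and the averages assume decimal units (1 PB = 1,000 TB). They say nothing about actual drive sizes or redundancy overhead.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    devices: int
    capacity_pb: float

    def per_device_tb(self) -> float:
        # Average capacity attributed to each device, assuming 1 PB = 1,000 TB.
        return self.capacity_pb * 1000 / self.devices


# Device counts and capacities as quoted for Orion's three tiers.
TIERS = [
    Tier("performance (NVMe)", 5_400, 11.5),
    Tier("capacity (hard disk)", 47_700, 679.0),
    Tier("metadata (NVMe)", 480, 10.0),
]

total_pb = sum(t.capacity_pb for t in TIERS)

if __name__ == "__main__":
    for t in TIERS:
        share = 100 * t.capacity_pb / total_pb
        print(f"{t.name:22s} {t.capacity_pb:6.1f} PB  "
              f"{share:5.1f}% of total  ~{t.per_device_tb():.1f} TB/device")
    print(f"total: {total_pb:.1f} PB")
```

Under these assumptions the hard-disk tier accounts for roughly 97 percent of the quoted capacity, while the much smaller flash tier carries the highest quoted bandwidth.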

“Orion is pushing the envelope of what is possible technically due to its extreme scale and hard disk/NVMe hybrid nature,” said Dustin Leverman, leader of the OLCF’s High-Performance Computing Storage and Archive Group. “This is a complex system, but our experience and best practices will help us create a resource that allows our users to push science boundaries using Frontier.”

Orion will have 40 Lustre metadata server nodes and 450 Lustre object storage server (OSS) nodes. Each OSS node will provide one object storage target (OST) device for performance and two OST devices for capacity, for a total of 1,350 OSTs systemwide.
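The OST total follows directly from the per-node layout, and a short calculation makes the arithmetic explicit. The sketch below also divides each tier's quoted capacity evenly across its OSTs. That even split is an assumption made for illustration and not a published figure, and the constant names are hypothetical.

```python
OSS_NODES = 450
PERFORMANCE_OSTS_PER_OSS = 1  # flash-backed OST on each OSS node
CAPACITY_OSTS_PER_OSS = 2     # hard-disk-backed OSTs on each OSS node

performance_osts = OSS_NODES * PERFORMANCE_OSTS_PER_OSS
capacity_osts = OSS_NODES * CAPACITY_OSTS_PER_OSS
total_osts = performance_osts + capacity_osts

# Quoted tier capacities in PB; an even split across OSTs is assumed.
PERFORMANCE_TIER_PB = 11.5
CAPACITY_TIER_PB = 679.0

avg_performance_ost_tb = PERFORMANCE_TIER_PB * 1000 / performance_osts
avg_capacity_ost_tb = CAPACITY_TIER_PB * 1000 / capacity_osts

if __name__ == "__main__":
    print(f"performance OSTs: {performance_osts}")
    print(f"capacity OSTs:    {capacity_osts}")
    print(f"total OSTs:       {total_osts}")
    print(f"avg performance OST: ~{avg_performance_ost_tb:.1f} TB")
    print(f"avg capacity OST:    ~{avg_capacity_ost_tb:.1f} TB")
```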

An extra 160 nodes will serve as routers to provide peak read-write speeds of 3.2 TBps to all other OLCF resources and platforms.

The in-system storage layer will employ compute-node local storage devices connected via PCIe Gen4 links to provide peak read speeds of more than 75 terabytes per second (TBps), peak write speeds of more than 35 TBps, and more than 15 billion random-read input/output operations per second (IOPS). OLCF engineers are working on software solutions to provide a distributed per-job name space for the devices.
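To put the peak write figures in perspective, the following sketch estimates the idealized time to write a fixed amount of data to each layer at its quoted peak bandwidth. The 1 PB data size is a hypothetical value chosen for illustration, the function name is made up, and real transfers would be slower than these idealized figures.

```python
# Peak write bandwidths in TBps, as quoted in the article.
PEAK_WRITE_TBPS = {
    "in-system storage": 35.0,       # quoted as "more than 35 TBps"
    "Orion performance tier": 10.0,  # quoted as peak read-write speed
    "Orion capacity tier": 4.6,
}


def ideal_write_seconds(data_pb: float, bandwidth_tbps: float) -> float:
    """Idealized write time at peak bandwidth, assuming 1 PB = 1,000 TB."""
    return data_pb * 1000 / bandwidth_tbps


if __name__ == "__main__":
    data_pb = 1.0  # hypothetical data size, for illustration only
    for layer, bandwidth in PEAK_WRITE_TBPS.items():
        seconds = ideal_write_seconds(data_pb, bandwidth)
        print(f"{layer:24s} ~{seconds:6.1f} s")
```

At these peak rates the in-system layer would absorb the same data several times faster than either Orion tier, which is consistent with its role as storage local to the compute nodes.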

UT-Battelle LLC manages Oak Ridge National Laboratory for DOE’s Office of Science, the single largest supporter of basic research in the physical sciences in the United States. DOE’s Office of Science is working to address some of the most pressing challenges of our time. For more information, visit https://energy.gov/science.