When scientists pushed the world’s fastest supercomputer to its limits, they found those limits stretched beyond even their biggest expectations.

The Frontier supercomputer at the Department of Energy’s Oak Ridge National Laboratory set a new ceiling in performance when it debuted in 2022 as the first exascale system in history — capable of more than 1 quintillion calculations per second. Now researchers are learning just what heights of scientific discovery Frontier’s computational power can help them achieve.

In the latest milestone, a team of engineers and scientists used Frontier to simulate a system of nearly half a trillion atoms, the largest system ever modeled and more than 400 times the size of its closest competitor. The feat could open a gateway to new insights across the sciences.

“It’s like test-driving a car with a speedometer that registers 120 miles per hour, but you press the gas pedal and find out it goes past 200,” said Nick Hagerty, a high-performance computing engineer for ORNL’s Oak Ridge Leadership Computing Facility, which houses Frontier. “Nobody runs simulations at a scale this size because nobody’s ever tried. We didn’t know we could go this big.”

A team used Frontier with the Large-scale Atomic and Molecular Massively Parallel Simulator software module, or LAMMPS, to simulate room-temperature water molecules at the atomic level, gradually increasing the number of atoms to more than 466 billion. Credit: ORNL

The results hold promise for scientific studies at a scale and level of detail not yet seen.

“Nobody on Earth has done anything remotely close to this before,” said Dilip Asthagiri, an OLCF senior computational biomedical scientist who helped design the test. “This discovery brings us closer to simulating a stripped-down version of a biological cell, the so-called minimal cell that has the essential components to enable basic life processes.”

Hagerty and his team set out to max out Frontier to help define criteria for the supercomputer’s successor, which is still in development. Their mission: Push Frontier as far as it could go and see where it stopped.

The team used Frontier with the Large-scale Atomic and Molecular Massively Parallel Simulator software module, or LAMMPS, to simulate room-temperature water at the atomic level, gradually increasing the number of atoms in the system.

“Water is a great test case for a machine like Frontier because any researcher studying a biological system at the atomic level will likely need to simulate water,” Hagerty said. “We wanted to see how big of a system Frontier could really handle and what limitations are encountered at this scale. As one of the first benchmarking efforts to use more than a billion atoms with long-range interactions, we would periodically find bugs in the LAMMPS source code. We worked with the LAMMPS developers, who were highly engaged and responsive, to resolve those bugs, and this was critical to our scaling success.”

Frontier tackles complex problems via parallelism, which means the supercomputer breaks up the computational workload across its 9,408 nodes, each a self-contained computer capable of around 200 trillion calculations per second. With each increase in problem size, the simulation demanded more memory and processing power. Frontier never blinked.
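The figures in that paragraph can be checked with a little arithmetic: multiplying the node count by the approximate per-node rate gives the machine's aggregate rate, which should land above the exascale mark of 1 quintillion (10^18) calculations per second. The sketch also shows the basic idea of splitting a fixed workload so that every node gets a near-equal share. The even one-dimensional split and the helper name `block_split` are illustrative assumptions; LAMMPS itself divides the simulation box spatially among its processes.

```python
# Back-of-envelope check of Frontier's aggregate rate, using the figures
# quoted in the article (9,408 nodes, ~200 trillion calculations/s each).
NODES = 9_408
PER_NODE_RATE = 200e12  # approximate calculations per second per node

aggregate_rate = NODES * PER_NODE_RATE
print(f"aggregate: {aggregate_rate:.2e} calculations per second")


def block_split(total_items: int, workers: int) -> list[int]:
    """Hypothetical helper: divide total_items among workers as evenly as
    possible. The first (total_items % workers) workers get one extra item."""
    base, extra = divmod(total_items, workers)
    return [base + 1 if i < extra else base for i in range(workers)]


# Toy example: 10 work items shared by 4 workers.
print(block_split(10, 4))
```

Keeping the shares within one item of each other matters because, in a tightly coupled simulation, the most heavily loaded node sets the pace for every step.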

A team used Frontier with the Large-scale Atomic and Molecular Massively Parallel Simulator software module, or LAMMPS, to simulate room-temperature water molecules at the atomic level, gradually increasing the number of atoms. The simulation ultimately grew to more than 155 billion water molecules – a total of 466 billion atoms – across more than 9,200 of Frontier’s nodes. Credit: Jason Smith/ORNL

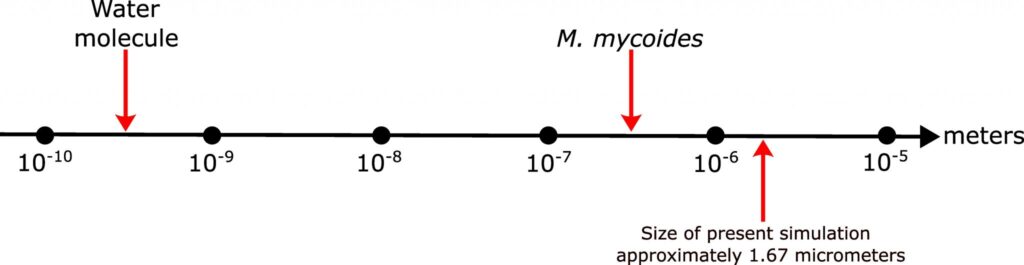

“We’re not talking about a large simulation just in terms of physical size,” Asthagiri said. “After all, a billion water molecules would fit in a cube with edges smaller than a micrometer (a millionth of a meter). We’re talking large in terms of the complexity and detail. These millions and eventually billions and hundreds of billions of atoms interact with every other atom, no matter how far away. These long-range interactions increase significantly with every molecule that’s added. This is the first simulation of this kind at this size.”
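Asthagiri's size comparison can be checked in a few lines. The sketch assumes liquid water at about 0.997 g/cm³ and a molar mass of 18.015 g/mol (standard reference values for room-temperature water, not figures from the article), computes the volume each molecule occupies, and takes the cube root of the total. It also counts the distinct atom pairs, N(N-1)/2, that a naive all-pairs sum over long-range interactions would have to touch. That count only illustrates why the cost climbs so steeply; LAMMPS provides Ewald-style solvers such as PPPM to avoid the direct sum, and the sketch is not a model of the solver used in this benchmark.

```python
# Rough check: how big is a cube holding one billion water molecules?
# Assumed inputs (standard reference values, not from the article):
AVOGADRO = 6.02214076e23   # molecules per mole (exact SI value)
MOLAR_MASS_G = 18.015      # grams per mole of H2O
DENSITY_G_CM3 = 0.997      # liquid water near 25 °C

CM3_TO_NM3 = 1e21          # 1 cm = 1e7 nm, so 1 cm^3 = 1e21 nm^3


def cube_edge_nm(n_molecules: float) -> float:
    """Edge length (nm) of a cube of liquid water holding n_molecules."""
    volume_per_molecule_nm3 = MOLAR_MASS_G / (DENSITY_G_CM3 * AVOGADRO) * CM3_TO_NM3
    return (n_molecules * volume_per_molecule_nm3) ** (1 / 3)


def pair_count(n_atoms: int) -> int:
    """Distinct atom pairs a naive all-pairs sum would evaluate: N(N-1)/2."""
    return n_atoms * (n_atoms - 1) // 2


edge = cube_edge_nm(1e9)
print(f"1 billion molecules: cube edge ~ {edge:.0f} nm")  # roughly 0.3 micrometers

# Doubling the atom count roughly quadruples the number of pairs.
print(pair_count(2_000) / pair_count(1_000))
```

The cube comes out at roughly a third of a micrometer on a side, comfortably under the one-micrometer bound in the quote.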

The simulation ultimately grew to more than 155 billion water molecules – a total of 466 billion atoms – across more than 9,200 of Frontier’s nodes. The supercomputer kept crunching the numbers, even with 95% of its memory full. The team stopped there.
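The headline numbers hang together: each water molecule carries three atoms (two hydrogens and one oxygen), so 155 billion molecules works out to about 465 billion atoms, in line with the reported 466 billion. Dividing the atom total by the node count gives a rough per-node load. The even split is a simplifying assumption, not a description of how the run actually distributed its work.

```python
# Consistency check on the article's headline figures.
ATOMS_PER_WATER = 3        # H2O: two hydrogen atoms + one oxygen atom

molecules = 155e9          # "more than 155 billion water molecules"
reported_atoms = 466e9     # "a total of 466 billion atoms"
nodes = 9_200              # "more than 9,200 of Frontier's nodes"

atoms_from_molecules = molecules * ATOMS_PER_WATER
print(f"{atoms_from_molecules:.3e} atoms from the molecule count")

# Assumes an even split; because the run used "more than" 9,200 nodes,
# this is an upper estimate of the average per-node load.
atoms_per_node = reported_atoms / nodes
print(f"~{atoms_per_node / 1e6:.1f} million atoms per node")
```

Under that assumption each node handled on the order of 50 million atoms.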

“We could have gone even higher,” Hagerty said. “The next level would have been 600 billion atoms, and that would have consumed 99% of Frontier’s memory. We stopped because we were already far beyond a size anyone’s ever reached to conduct any meaningful science. But now we know it can be done.”

That capacity for detail could let scientists conduct far more complex studies on Frontier than some had hoped for.

“This changes the game,” Asthagiri said. “Now we have a way to model these complex systems and their long-range interactions at extremely large sizes and have a hope of seeing emergent phenomena. For example, with this computing power, we could simulate sub-cellular components and eventually the minimal cell in atomic detail. From such explorations, we could learn about the spatial and temporal behavior of these cell structures that are basic to human, animal and plant life as we know it. This kind of opportunity is what an exascale machine like Frontier is for.”

Support for this research came from the DOE Office of Science’s Advanced Scientific Computing Research program. The OLCF is a DOE Office of Science user facility.

UT-Battelle manages ORNL for DOE’s Office of Science, the single largest supporter of basic research in the physical sciences in the United States. DOE’s Office of Science is working to address some of the most pressing challenges of our time. For more information, visit https://energy.gov/science.