Five years ago this month, the Laser Interferometer Gravitational-wave Observatory, or LIGO, made its first detection of gravitational waves, marking a historic event in the astrophysics community. Since then, LIGO has reported more than 50 gravitational wave observations. Often generated by collisions of compact astrophysical objects—such as black holes or neutron stars—gravitational waves may be used to statistically infer the properties of the remnants of such events, giving scientists a glimpse into their origins.

The availability of new data generated by LIGO has added a new cosmic messenger to multi-messenger astrophysics (MMA). MMA takes large, heterogeneous, and disparate astrophysics data sets coming from multiple instruments and fuses them together to provide comprehensive snapshots of various astrophysical phenomena.

Now, using the Hardware Accelerated Learning (HAL) cluster system at the National Center for Supercomputing Applications (NCSA) and the IBM AC922 Summit supercomputer at the Oak Ridge Leadership Computing Facility (OLCF), a multi-institutional and multi-disciplinary team led by Eliu Huerta, Director of the NCSA’s Center for Artificial Intelligence Innovation, has trained a neural network model to determine the properties of merging black holes to gain a better understanding of the origins of these systems. The results were recently published in Physics Letters B.

The team included researchers from NCSA at the University of Illinois, NVIDIA, the US Department of Energy’s (DOE’s) Oak Ridge National Laboratory (ORNL), DOE’s Argonne National Laboratory (ANL), and the University of Chicago.

“Since coming online in Spring of 2019, HAL, funded by the National Science Foundation’s Major Research Instrumentation program, has become the system of choice for researchers at Illinois to train complex neural network models,” said Volodymyr Kindratenko, co–principal investigator on the HAL project and Co-Director of the Center for Artificial Intelligence Innovation at NCSA. “HAL provides balanced, matching compute and storage capabilities that enable training jobs with up to 64 GPUs. Huerta’s team managed to scale their original single GPU implementation to the entire size of the cluster, utilizing every available resource. We are very pleased to see that this system is now fulfilling its mission of enabling cutting-edge research by the Illinois research community.”

The team’s use of ORNL’s Summit supercomputer sped up the training of their neural network 600-fold.

“Summit’s leadership-class capabilities and AI-friendly architecture were ideal for the team to grow and accelerate the exploration,” said Arjun Shankar, group leader for the Advanced Data and Workflow Group at the OLCF, a DOE Office of Science User Facility at ORNL.

An emerging data infrastructure supported by multiple agencies will also allow researchers to easily reuse the results of this work. The Data and Learning Hub for Science (DLHub), powered by supercomputing resources at Argonne Leadership Computing Facility, serves as a nexus for publishing and sharing machine learning models and training data with the scientific community. The infrastructure also includes funcX, a National Science Foundation–funded platform to facilitate distributed computing, and a new DOE-funded project to explore Findable, Accessible, Interoperable, and Reusable (FAIR) models.

“We know that accelerating scientific progress relies on researchers being able to access and reuse prior work, and DLHub makes this process easy for AI tools and models,” said Ben Blaiszik, a researcher at the University of Chicago and ANL.

AI is for astrophysics

The origins of our universe remain one of science’s great mysteries. Until the mid-twentieth century, scientists were only using optical photons to study the universe as they began painting a picture of its beginnings. But over the last few decades, groundbreaking discoveries about the cosmos have been made possible by the use of other cosmic phenomena such as gravitational waves, neutrinos, and cosmic rays.

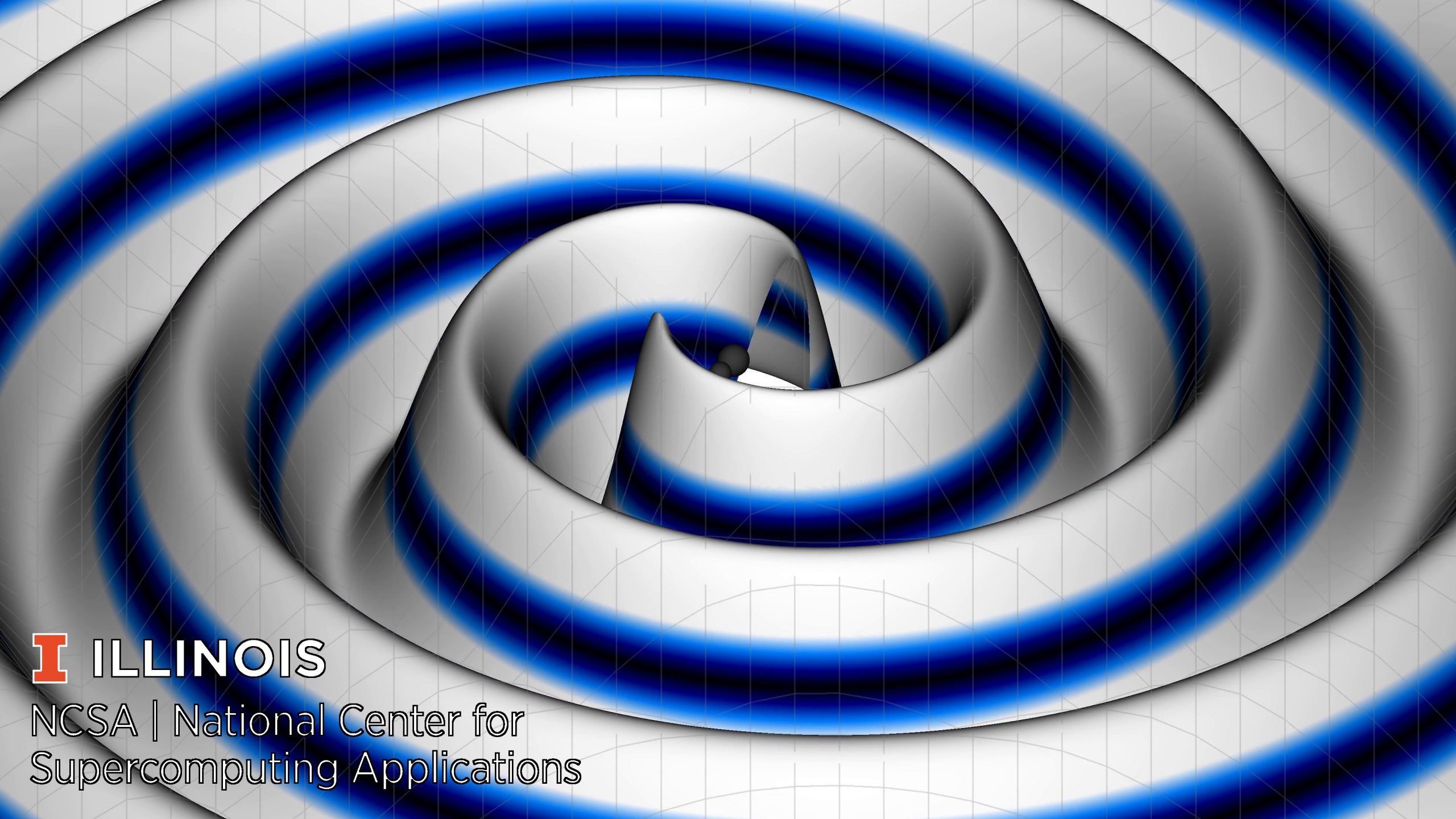

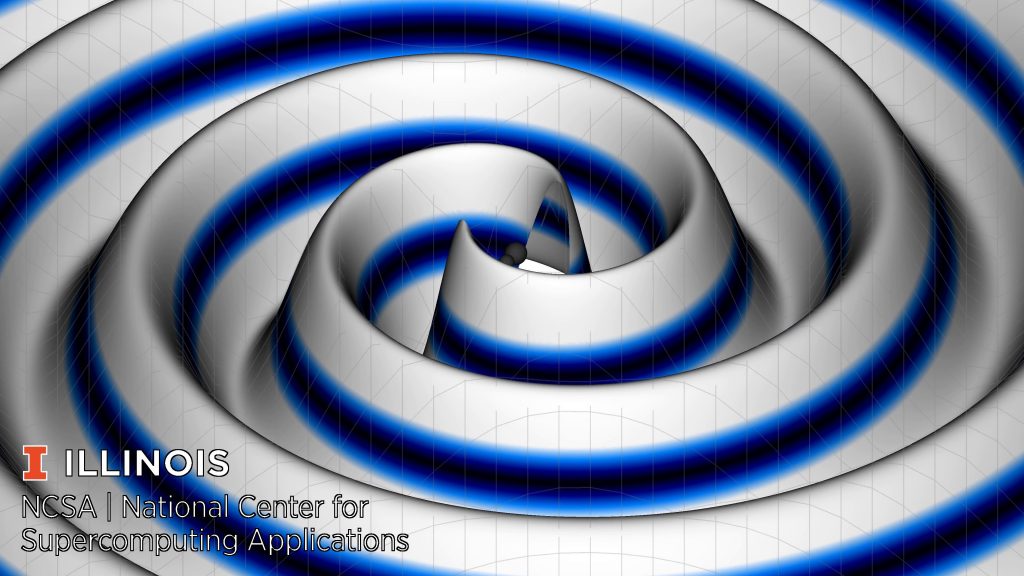

Black hole merger simulation. Credit: Eliu Huerta, NCSA at the University of Illinois Urbana-Champaign

Gravitational waves, a prediction of Einstein’s theory of general relativity, are produced when compact objects such as black holes or neutron stars spiral into each other and eventually collide. These waves of undulating spacetime propagate away from such cataclysmic events, carrying information about their progenitors—the instigators of these waves—and the nature of gravity.

Scientists detected the first gravitational waves in 2015 when two black holes collided. That event provided the first glimpse of the strong and fast-varying gravitational field near the most violent astrophysical phenomena ever known. Because the event did not emit light, it remained concealed to electromagnetic observatories, but it was clearly heard by the twin detectors of LIGO. LIGO has since become a pillar of MMA data, with scientists around the world leveraging its observational data for their own studies of the cosmos.

In 2017, Huerta and his research team at NCSA shared their vision to combine artificial intelligence (AI) and high-performance computing (HPC) to design scalable and computationally efficient algorithms for MMA in two seminal papers. This novel approach has led to community efforts to accelerate the adoption of AI in MMA.

This year, Huerta assembled an interdisciplinary team of scientists from NCSA, NVIDIA, ORNL, ANL, and the University of Chicago to develop an end-to-end AI framework to produce and release open-source, physics-inspired AI models for MMA, harnessing NCSA’s HAL, the OLCF’s Summit, and DLHub.

In the team’s recent Physics Letters B paper, they showed how they prototyped physics-inspired AI architectures and optimization schemes on HAL. They also described how they performed translational research by deploying new optimizers on Summit, reducing the training stage of their AI models from one month on a single NVIDIA V100 GPU to just 1.2 hours, a 600-fold speedup, using 1,536 NVIDIA V100 GPUs in the IBM POWER9–based system. They also demonstrated how they can scale the training of their AI models up to 6,144 of the V100 GPUs in Summit.
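As a rough check on the figures quoted above, the arithmetic behind the 600-fold speedup can be sketched in a few lines of Python. This is an illustrative back-of-the-envelope calculation, not anything taken from the team's paper: it assumes "one month" means 30 days, and the helper name `speedup_and_efficiency` is hypothetical. The efficiency figure is a naive ratio of speedup to GPU count and ignores the fact that the optimizer was changed between the two runs.

```python
def speedup_and_efficiency(baseline_hours, parallel_hours, num_gpus):
    """Return (speedup, naive parallel efficiency) for a scaled-up run.

    Hypothetical helper for illustration only.
    """
    speedup = baseline_hours / parallel_hours
    efficiency = speedup / num_gpus
    return speedup, efficiency


# Assumption: "one month" on a single V100 is taken as 30 days.
ASSUMED_DAYS_PER_MONTH = 30
baseline_hours = ASSUMED_DAYS_PER_MONTH * 24  # 720 hours on one GPU

# Figures quoted in the article: 1.2 hours on 1,536 V100 GPUs.
speedup, efficiency = speedup_and_efficiency(baseline_hours, 1.2, 1536)

print(f"speedup: {speedup:.0f}x")
print(f"naive parallel efficiency: {efficiency:.1%}")
```

Under the 30-day assumption, 720 hours divided by 1.2 hours gives the reported factor of 600, which corresponds to roughly 39 percent of ideal linear scaling across 1,536 GPUs.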

“Addressing big-data and computational grand challenges in multi-messenger astrophysics requires a community of experts,” Huerta said. “We are thrilled to see our vision to harness AI and HPC to enable discovery in multi-messenger astrophysics gradually materialize into a tangible set of innovative tools that are available to the broader scientific community.”

The first class of AI models for gravitational wave astrophysics developed by Huerta’s team utilized only tens of thousands of waveforms and required only a single NVIDIA GPU to finish the training within 3 hours. Production-scale, physics-inspired AI models require an entirely different computing paradigm, which ideally suits ORNL’s Summit system.

A FAIR distribution of data

To accelerate the adoption and use of AI, the team continues to release their AI models for cosmology, gravitational wave astrophysics, and astronomy, leveraging ANL’s DLHub.

“When people read these papers, they may think it’s too good to be true, so we let them convince themselves using a couple of GPUs,” Huerta said. “This is also to address important aspects in AI research around reproducibility and reusability.”

DLHub gives researchers the ability to reuse the team’s results for their own scientific endeavors.

“Scientists across the globe will be able to find, access, validate, and reuse these models with much less effort,” Blaiszik said.

However, much work remains to facilitate the application of machine learning to scientific problems.

“We’re going to be developing the idea of FAIR for models in a new DOE-funded project over the next few years,” said Daniel S. Katz, Assistant Director for Scientific Software and Applications at NCSA. “These models let us start that process.”

When worlds collide

In addition to accelerated AI models for gravitational wave astrophysics, Huerta’s team has been spearheading the convergence of AI and HPC in cosmology, designing neural networks to automate the classification of galaxies observed with large-scale electromagnetic surveys. This work was highlighted by NVIDIA CEO Jensen Huang in his GTC keynote in May. These novel tools have also been applied to automate the classification of star clusters observed by the Hubble Space Telescope.

Another piece of the puzzle concerns the use of AI to accelerate the modeling of multiscale and multiphysics simulations. One of Huerta’s graduate students recently demonstrated that AI can describe the physics of turbulence more accurately and efficiently than previous techniques.

“In this study, we showcase the use of AI to optimally use advanced cyberinfrastructure facilities to accelerate the modeling of complex physical phenomena,” Huerta said.

The combination of AI and HPC holds great promise to maximize the science reach of MMA, bringing about amazing discoveries and unprecedented technological and societal changes.

UT-Battelle LLC manages Oak Ridge National Laboratory for DOE’s Office of Science, the single largest supporter of basic research in the physical sciences in the United States. DOE’s Office of Science is working to address some of the most pressing challenges of our time. For more information, visit https://energy.gov/science.