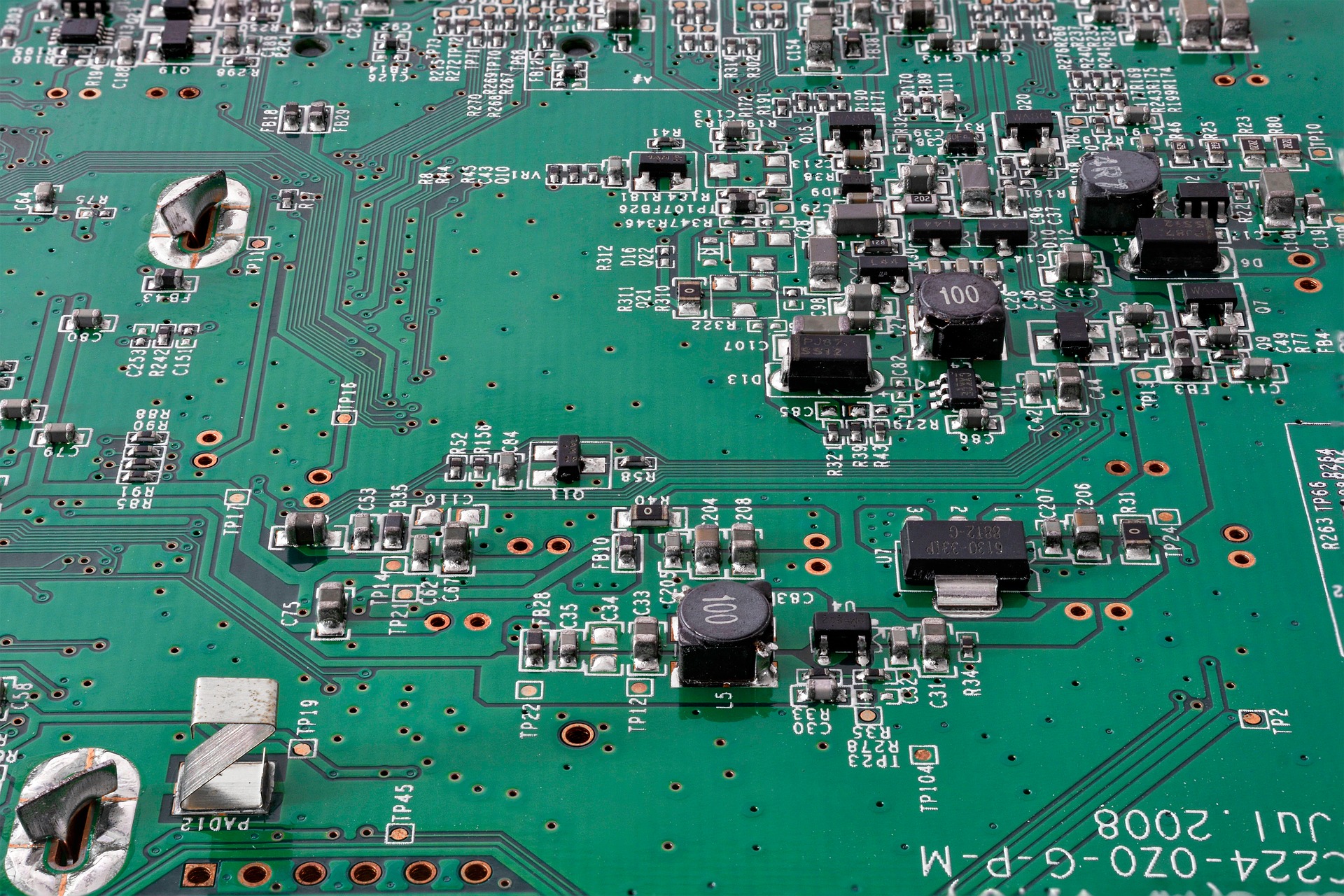

Transistors, tiny semiconductor devices that switch electricity on or off, revolutionized the electronics field when they were invented in 1947. Originally half-inch-high pieces of germanium, transistors today are mainly composed of germanium’s close relative, silicon, and are about 40 to 60 nanometers in length, more than 1,000 times smaller than the finest grains of sand on Earth. Their size allows electronics manufacturers to fit billions of them on present-day computer chips.

Although today’s electronics are incredibly compact, that compactness comes with challenges. As electrons flow through transistors, they generate heat that dissipates into the surrounding environment. And as transistors get smaller, the density of the heat they dissipate increases.

To better understand this problem, a team led by Torsten Hoefler, associate professor at ETH Zürich, studies transistors by simulating quantum transport, or the transport of electric charge carriers through nanoscale materials such as those in transistors. The team recently performed a 10,000-atom simulation of a 2D slice of a transistor on the Oak Ridge Leadership Computing Facility’s (OLCF’s) IBM AC922 Summit, the world’s most powerful and smartest supercomputer, and developed a map of where heat is produced in a single transistor. Using a new data-centric (DaCe) version of the OMEN nanodevice simulator, the team reached a sustained performance of 85.45 petaflops and earned a 2019 Association for Computing Machinery Gordon Bell Prize finalist nomination.

The results could be used to inform the production of new semiconductors with optimal heat-evacuating properties.

“If we want to mitigate this problem, we need to understand where this heat comes from, how it’s generated, and how we can better evacuate it from the active regions of these transistors,” said Mathieu Luisier, associate professor at ETH Zürich and the main author of OMEN. “Simulations can guide us toward the knowledge of where heat is generated and specifically how it’s dependent on the current that flows.”

With the new code, the team demonstrated a successful new programming model: the DaCe framework, which was developed at ETH. Traditional programming requires line-by-line modification to change the code, but the DaCe framework provides a visual representation of data movement, allowing a programmer to interact with this representation to optimize the code more easily.
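The idea of optimizing a program by transforming a picture of its data movement can be illustrated with a toy example. The sketch below is not the DaCe API and not the team's code; the `Map` class and the `run` and `fuse` helpers are hypothetical names invented for illustration. It represents a program as a short chain of elementwise computation nodes, counts how many array elements each version reads and writes, and shows that fusing two nodes removes the traffic for the intermediate array without changing the result.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Map:
    """A toy elementwise computation node: reads `inputs`, writes `output`."""
    inputs: List[str]
    output: str
    fn: Callable[..., float]


def run(graph: List[Map], data: Dict[str, List[float]]) -> Tuple[Dict[str, List[float]], int]:
    """Execute nodes in order; return all arrays and the count of elements moved."""
    data = {name: list(values) for name, values in data.items()}
    moved = 0
    for node in graph:
        columns = [data[name] for name in node.inputs]
        data[node.output] = [node.fn(*vals) for vals in zip(*columns)]
        # Every element read from an input or written to the output counts as movement.
        moved += sum(len(col) for col in columns) + len(data[node.output])
    return data, moved


def fuse(first: Map, second: Map) -> Map:
    """Merge two nodes when `second` consumes `first.output` (illustrative transformation)."""
    assert first.output in second.inputs
    extra = [n for n in second.inputs if n != first.output and n not in first.inputs]
    inputs = first.inputs + extra

    def fn(*vals: float) -> float:
        env = dict(zip(inputs, vals))
        # The intermediate value stays local instead of being written to an array.
        env[first.output] = first.fn(*(env[n] for n in first.inputs))
        return second.fn(*(env[n] for n in second.inputs))

    return Map(inputs, second.output, fn)


# out = a * b + c, first as two separate nodes, then as one fused node.
graph = [
    Map(["a", "b"], "tmp", lambda a, b: a * b),
    Map(["tmp", "c"], "out", lambda t, c: t + c),
]
data = {"a": [1.0, 2.0, 3.0], "b": [4.0, 5.0, 6.0], "c": [0.5, 0.5, 0.5]}

before, moved_before = run(graph, data)
after, moved_after = run([fuse(graph[0], graph[1])], data)
print(before["out"], moved_before, moved_after)
```

In this toy, the fused version produces the same output while moving fewer elements, which is the kind of trade a data-centric view is meant to make visible. Real frameworks work on far richer graphs and apply many other transformations.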

“This is a fundamental departure from everything that’s been done before in code optimization, and we’re really hoping to develop the next generation of parallel programming capabilities and techniques,” Hoefler said.

Complexity at the nanoscale

The transistors in most of today’s electronic devices are called FinFETs: Fin for the appearance of the semiconductor channels on the silicon and FET for the transistor type, field-effect transistor. These transistors use electric fields generated by external voltages to control current flow. Current flows in through a “source” terminal and out through a “drain” terminal, and the transistor’s “gate” terminal modulates this flow.

FinFETs are 3D structures, but simulating a full 3D transistor (about 1 million atoms) would require resources far greater than those of the OLCF’s 200-petaflop Summit supercomputer. The team instead simulated a 2D slice of a FinFET measuring 5 nanometers in width and 40 nanometers in length, an accurate 2D blueprint from which the results can be readily extrapolated to a full 3D version.

“We wanted this to be realistic and reach the dimensions of manufactured transistors,” Luisier said. “This corresponds to what is actually fabricated.”

Using DaCe OMEN, which models quantum transport of electric charge carriers, the researchers simulated a 10,000-atom system 14 times faster than they could previously simulate a 1,000-atom system. The team completed the simulation in less than 8 minutes, and the speedup made DaCe OMEN two orders of magnitude faster per atom than the original OMEN code.
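The "two orders of magnitude faster per atom" figure follows from the two numbers in the paragraph above. The short calculation below is a sketch under one assumption: that "14 times faster" refers to the ratio of total run times for the old 1,000-atom simulation and the new 10,000-atom simulation. The variable names are illustrative only.

```python
import math

old_atoms = 1_000    # system size simulated with the original OMEN code
new_atoms = 10_000   # system size simulated with DaCe OMEN
time_ratio = 14      # assumed meaning: old run time divided by new run time

# Ten times as many atoms handled in one-fourteenth of the time.
per_atom_speedup = (new_atoms / old_atoms) * time_ratio
orders_of_magnitude = math.log10(per_atom_speedup)

print(f"per-atom speedup: {per_atom_speedup:.0f}x")
print(f"orders of magnitude: {orders_of_magnitude:.2f}")
```

Under that assumption the per-atom speedup is 140 times, a little over two orders of magnitude.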

Even on machines of the same caliber as the OLCF’s former supercomputer, the Cray XK7 Titan, the team would not have achieved these results. The OLCF is a US Department of Energy (DOE) Office of Science User Facility located at DOE’s Oak Ridge National Laboratory.

“We used 45 percent of Summit’s theoretical peak,” Hoefler said. “On top of that, even just the memory requirements of our program require a resource like Summit.”

To maximize their use of the system, the researchers also optimized a piece of DaCe OMEN for mixed-precision calculations—an unusual move for a code that depends on precision and accuracy—and reached a sustained performance of 90.89 petaflops. Mixed-precision calculations are less precise, but the researchers used them for a small portion of their code to demonstrate their ability to take full advantage of Summit’s architecture, including the NVIDIA Tensor Cores on its GPUs. Tensor Cores are specialized processing units for artificial intelligence and deep learning research.
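As a rough check on the "45 percent of Summit’s theoretical peak" figure, the two sustained performance numbers reported in this article can be compared with the article’s round 200-petaflop figure for Summit. This is a sketch only: the exact fraction depends on the machine’s precise peak and on how many nodes each run used, neither of which is given here, and the article does not say which run the quote refers to.

```python
NOMINAL_PEAK_PFLOPS = 200.0  # round figure quoted in this article, used as an assumption

# Sustained performance figures reported in the article, in petaflops.
sustained_pflops = {
    "first reported run": 85.45,
    "mixed-precision run": 90.89,
}

fraction_of_peak = {
    label: value / NOMINAL_PEAK_PFLOPS for label, value in sustained_pflops.items()
}

for label, fraction in fraction_of_peak.items():
    print(f"{label}: {fraction:.1%} of nominal peak")
```

With the round 200-petaflop figure, the mixed-precision run comes out close to 45 percent and the other run somewhat lower.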

New programming perspectives

One of the team’s most exciting accomplishments is its use of a new programming paradigm based on the way data flows in a simulation.

Timo Schneider, a computer scientist at ETH, built a graphical user interface for the DaCe framework that provides a visualization of data flow rather than a simple textual description.

“When you’re running the code, you can interact with this data and apply your changes directly on the image representation,” Schneider said.

The team believes the new programming model could change how people program. In fact, the concepts could benefit countless scientific domains, not just nanoelectronics.

The team also hopes semiconductor companies will use the code to design better and more efficient transistors. Luisier is currently working with companies that may be interested in selling software based on the algorithms used in the project.

“We want to be ready to model a design if a company comes to us and wants to try something new,” Luisier said. “If we can directly construct a simulation of a design, we could tell them how much the temperature will increase based on this design, and that could help them create better transistors.”

Related Publication: A. Ziogas, T. Ben-Nun, G. Indalecio, T. Schneider, M. Luisier, and T. Hoefler, “A Data-Centric Approach to Extreme-Scale Ab Initio Dissipative Quantum Transport Simulations.” To appear in SC19 Proceedings of the International Conference for High Performance Computing, Networking, Storage, and Analysis, Denver, CO, November 17–22, 2019.

UT-Battelle LLC manages Oak Ridge National Laboratory for DOE’s Office of Science, the single largest supporter of basic research in the physical sciences in the United States. DOE’s Office of Science is working to address some of the most pressing challenges of our time. For more information, visit https://energy.gov/science.