OLCF computational scientist Wayne Joubert successfully exploited the Summit supercomputer’s low-precision capabilities to accelerate a genomics application to exascale speeds.

Since the days of vector supercomputers, computational scientists have relied on high-precision arithmetic to accurately solve a wide range of problems, from modeling nuclear reactors to predicting supernova physics to measuring the forces within an atomic nucleus.

But changes to hardware, spurred by the demand for more computing capability and growth in machine learning, have researchers rethinking the balance between the number of digits needed to perform a given calculation and computational efficiency. For portions of a calculation that do not require 64-bit double-precision arithmetic—the longtime floating-point number standard in high-performance computing (HPC)—lower precision alternatives may provide enough accuracy. The tradeoff could lead to scientific discoveries that would otherwise remain years away.

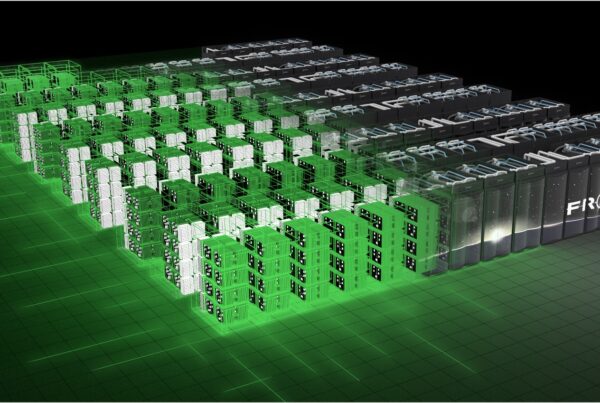

A glimpse into this brave new mixed-precision world was previewed at the launch of the Summit supercomputer at the US Department of Energy’s (DOE’s) Oak Ridge National Laboratory (ORNL) in June 2018. Named the fastest system in the world by the TOP500 list upon its debut, Summit derives 95 percent of its computing power from its more than 27,000 NVIDIA Tesla V100 GPUs. For standard double-precision problems, Summit’s peak performance maxes out at 200 petaflops, or 200 million billion double-precision calculations per second. Application developers who can utilize low-precision arithmetic, however, will find the IBM AC922 system has an extra gear—one that peaks at more than 3 exaops, or 3 billion billion mixed-precision calculations per second.

This capability stems from a specialized NVIDIA integrated circuit called a tensor core designed to boost deep-learning research by executing a simple matrix operation quickly. By building the feature directly into hardware, NVIDIA gifted deep-learning researchers with a technology that can train and run neural networks several times faster than the speed that would otherwise be expected.

Part of the reason tensor cores operate so quickly—around 16 times faster than standard computation—is because they support 16-bit, half-precision arithmetic, a floating-point format that accommodates only a fraction of the digits compared with double precision. A secondary step of tensor cores’ matrix operation runs at 32-bit single precision. On Summit, researchers have already demonstrated the value of tensor cores by obtaining speeds surpassing 1 mixed-precision exaop for distributed neural networks.

Although the usefulness of tensor cores for supercharging low-precision deep learning is obvious, its relevance for flavors of scientific computing that require more accuracy remains less so. However, that hasn’t stopped some computational scientists from experimenting with this new technology.

Search for acceleration

As one of the few researchers with early access to Summit, Oak Ridge Leadership Computing Facility computational scientist Wayne Joubert has gotten a head start in this respect.

Since NVIDIA announced the tensor core GPU architecture in May 2017, Joubert has wondered if the feature could be useful for more than training neural networks. “Whenever hardware has some new feature, scientists are going to ask whether it is useful for their science,” Joubert said.

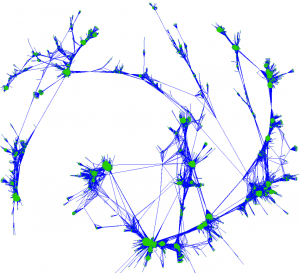

A visualization of a network depicting correlations between genes in a population. These correlations can be used to identify genetic markers linked to complex observable traits.

Working as the lead methods developer for a comparative genomics project called Combinatorial Metrics (CoMet) in 2018, Joubert entertained the idea of accelerating the code to use Summit’s low-precision capabilities to boost analysis of genomic datasets. Just a year earlier, he had ported CoMet to the Titan supercomputer, fine-tuning the application to take advantage of the Cray XK7’s GPU acceleration. The resulting speedup contributed to research led by ORNL computational systems biologist Dan Jacobson on regulatory genes of plant cell walls that can be manipulated to enhance biofuels and bioproducts.

One CoMet algorithm Joubert thought might be particularly well suited for tensor cores was the Custom Correlation Coefficient (CCC) method, which specializes in comparing variations of the same genes, known as alleles, present in a given population. Tensor core-enhanced performance of CCC could potentially allow researchers to analyze datasets composed of millions of genomes—an impossible task for current leadership-class systems—and study variations among all possible combinations of two or three alleles at a time. Scientists could then use this information to uncover hidden networks of genes in plants and animals that contribute to observable traits, such as biomarkers for drought resistance in plants or disease in humans.

In conversations with colleagues on the systems biology team and in HPC circles, Joubert discussed the CCC algorithm in depth. He studied the algorithm’s description in old research papers and considered possible reformulations that could map to low-precision hardware. “It’s going back to mathematics and asking if there is some way to rearrange the pieces and get the same result,” Joubert said.

Achieving exaops

Mixed-precision arithmetic isn’t new to HPC. Scientists have been using it regularly to selectively boost application performance when it makes sense. For example, in 2008 an ORNL team achieved the first sustained petaflop simulation using a materials science application called DCA++ that utilized a combination of single and double precision. In that instance, the team employed single precision for a portion of its code concerning a quantum Monte Carlo calculation that solved embedded material clusters and did not require double precision. The arrangement resulted in a twofold speedup for that portion of the code—the typical performance gain expected in the switch from double to single precision.

New types of accelerators like tensor cores, however, raise the reward substantially for application developers who can successfully recast their problem. Instead of factors of 2, the potential exists for acceleration approaching factors of 10. For Joubert, a deep dive into CCC’s mathematics—examining how the genomic data translates into bits, or sequences of numbers, and how these sequences are compared across a population—proved to be the key.

“The heart of the method is to count the number of occurrences of certain combinations of these two-bit pairs, which represent different alleles within a gene,” Joubert said. “You compare the bits, get counts of the number of occurrences of these combinations across your population, and calculate the result. It occurred to me that we can actually take these two-bit values and insert them into the half-precision format.”

Normally, mapping 2 bits into a 16-bit floating-point number would be inefficient—like ferrying boat passengers two at a time on a vessel that could otherwise hold the entire group. Joubert, however, intuited that the speed of the tensor cores would more than make up for this unconventional data packaging.

From the moment inspiration struck him, Joubert spent 2 weeks writing his idea into code. He spent another month optimizing it. The performance gains on Summit became apparent immediately— delivering a machine-to-machine 37-fold speedup compared with the Titan implementation. Testing the algorithm across 4,000 nodes using a representative dataset, the genomics application team achieved a peak throughput of 1.88 mixed-precision exaops—faster than any previously reported science application. With some additional fine-tuning, the algorithm leaped to 2.36 mixed-precision exaops running on 99 percent of Summit (4,560 nodes). The final result was a rate of science output four to five orders of magnitude beyond the current state of the art. The journey that started with a low-precision puzzle had opened a new frontier in comparative genomics analysis.

Joubert said close collaboration with Jacobson and his systems biology colleagues played a large role in the team’s technical triumph. “We speak different languages,” he said. “At the start of the project I didn’t know anything about their science problem, and they knew very little about GPUs. I think you need to have multidisciplinary interaction to find these new opportunities.”

ORNL is managed by UT-Battelle for the Department of Energy’s Office of Science, the single largest supporter of basic research in the physical sciences in the United States. DOE’s Office of Science is working to address some of the most pressing challenges of our time. For more information, please visit https://science.energy.gov.