“The aim of ALCC is to advance high-risk, high-return computational science projects that are aligned well with the DOE mission and to expand the scope of scientists who make good use of leadership computing facilities.”

–Jack Wells, OLCF Director of Science

2015–16 DOE program features diverse projects at OLCF

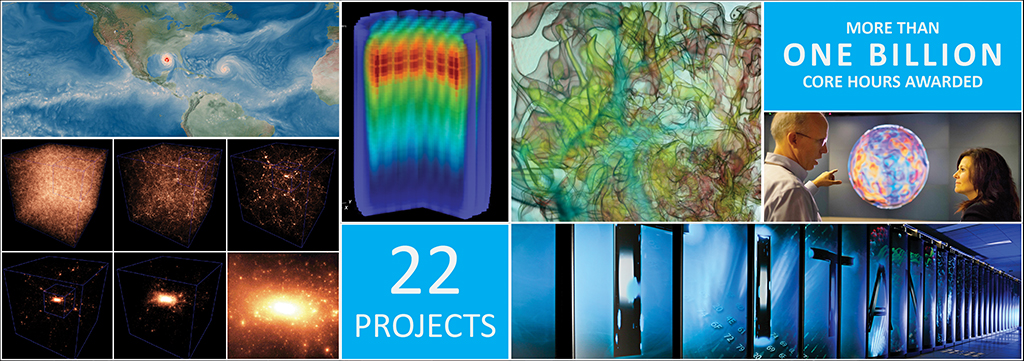

The Oak Ridge Leadership Computing Facility (OLCF), a US Department of Energy Office of Science User Facility, has awarded more than 1 billion processor hours to 22 projects through the DOE Office of Advanced Scientific Computing Research (ASCR) Leadership Computing Challenge (ALCC).

ALCC, the second-largest allocation program for Titan, aims to advance high-risk, high-return computational science projects that are important to the DOE mission and to expand the community of scientists using leadership-class facilities. The wide variety of projects underscores the multidisciplinary perspective that DOE and the OLCF continue to promote. Project topics range from developing enzymes for renewable energy to creating three-dimensional simulations of supernovae.

OLCF Director of Science Jack Wells said ALCC’s aim is “to advance high-risk, high-return computational science projects that are aligned well with the DOE mission and to broaden the community of researchers capable of using leadership computing resources.”

As in the past, this year’s list is an impressive lineup of innovative projects led by world-class scientists using the facilities of the OLCF, located at DOE’s Oak Ridge National Laboratory (ORNL). Specific awards on Titan—ranging from 5 million to 167 million processor hours—went to projects such as the following:

Nuclear Fusion. The search for clean, abundant energy is one of the biggest challenges of the 21st century. With little radioactive waste and a huge energy payoff, nuclear fusion—the same process that powers the sun—is an attractive option. However, the harsh and unforgiving environment necessary for fusion makes progress difficult. Designing parts that can withstand heat exposure and particle impacts is a major concern for scientists like Brian Wirth, University of Tennessee–ORNL Governor’s Chair for computational nuclear engineering.

Tungsten is currently the material of choice for divertors, which are an important part of a fusion reactor and basically serve as giant exhaust systems to extract heat, helium ash, and other impurities from the plasma. Wirth and his team received 36 million hours to study how tungsten responds to helium gas atoms in a fusion energy environment.

During fusion reactions, helium is produced in the form of plasma. As the plasma is spun around at high speeds (with correspondingly high temperatures), a helium atom will sometimes shoot off into the wall of the reactor. Because of the extreme conditions, these helium atoms can become embedded in the wall’s structure. Eventually the embedded helium atoms cluster, pushing aside the tungsten atoms that make up the wall’s surface and forming helium bubbles. These bubbles can trap radioactive tritium, reduce efficacy, and even rupture, releasing heavy tungsten atoms into the plasma.

The computing resources on Titan will enable the team to run long simulations and identify the mechanisms controlling bubble populations, surface topology changes, and overall gas inventory and release. The information from these simulations will be used to create a database that will inform future materials design strategies for the fusion energy environment.

Carbon Compression. Power plants that burn fossil fuels account for more than 40 percent of the world’s energy-related CO2 emissions and will continue to dominate the supply of electricity until the middle of the century, creating an urgent need for cost-effective ways to capture and store power plant carbon emissions.

Carbon capture and sequestration (CCS) is the process of capturing carbon dioxide (CO2), transporting it to a storage site, and depositing it in a place where it will not enter the atmosphere, such as an unminable coal seam or a declining oil field. CCS can capture up to 90 percent of the carbon dioxide emissions produced, preventing them from entering the atmosphere; however, CCS is currently too expensive for widespread use.

About one-third of the total cost of CCS is spent on compressing the captured CO2 for transportation. A team led by Ravi Srinivasan, a researcher for Dresser-Rand, received 47 million hours to improve the design and performance of the compressors to bring these costs down.

The 2015 allocation focuses on rotating stall, a disruption of the airflow within the compressor that reduces effectiveness. Stall can also cause fatigue leading to inoperability in severe conditions. The team’s current project focuses on performing fluid dynamic simulations to gain a better understanding of this phenomenon at multiple scales.

Additionally, the researchers aim to support the next generation of CCS compression technology by generating optimized designs of existing compressor technology. Srinivasan’s team will use Titan to dramatically shorten the design timeline and improve the performance of high-pressure single-stage compressors, resulting in cost-effective techniques and a more detailed understanding of complex turbomachinery flow physics.

Simulating Quantum Computing. Recent theoretical and experimental advances have generated a lot of excitement in quantum computing. However, there is no conclusive evidence so far that quantum computing is faster overall than current computers, also referred to as classical systems.

Researchers are building special-purpose devices, known as quantum annealers, that focus on solving specific types of problems that may be better suited for quantum machines.

Although these new machines are exciting, they are currently rare, limited, and prone to errors. Simulations are necessary to gain insight into their nature and potential capabilities.

Itay Hen of the University of Southern California is the principal investigator for a team that received an ALCC allocation of 20 million hours. The team’s project will simulate quantum annealers and develop engineering benchmarks to analyze how these machines solve optimization problems differently from classical systems.

An optimization problem is one that requires the best of many possible solutions with diverse constraints. A variety of industries rely on optimization to do everything from shipping goods quickly and cheaply to using raw materials in a way that minimizes waste to selecting the best location for a new factory.
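To make the idea concrete, here is a minimal sketch of one small optimization problem: splitting a set of cargo weights between two trucks so that the loads are as even as possible. The problem, the weights, and the function names (`imbalance`, `brute_force`, `simulated_annealing`) are illustrative assumptions and are not taken from the ALCC project. The second solver is classical simulated annealing, a conventional heuristic, not a quantum annealer and not the team's simulation code.

```python
import itertools
import math
import random


def imbalance(weights, spins):
    """Cost of an assignment: spin +1 means truck A, -1 means truck B."""
    return abs(sum(w * s for w, s in zip(weights, spins)))


def brute_force(weights):
    """Try all 2**n assignments; only feasible for small n."""
    best = min(itertools.product((-1, 1), repeat=len(weights)),
               key=lambda spins: imbalance(weights, spins))
    return list(best), imbalance(weights, best)


def simulated_annealing(weights, steps=20000, t_start=10.0, t_end=0.01, seed=0):
    """Classical heuristic: flip one item at a time, sometimes accepting worse moves."""
    rng = random.Random(seed)
    spins = [rng.choice((-1, 1)) for _ in weights]
    cost = imbalance(weights, spins)
    best_spins, best_cost = spins[:], cost
    for step in range(steps):
        # Geometric cooling schedule from t_start down to t_end.
        t = t_start * (t_end / t_start) ** (step / (steps - 1))
        i = rng.randrange(len(weights))
        spins[i] = -spins[i]  # propose moving item i to the other truck
        new_cost = imbalance(weights, spins)
        # Always accept improvements; accept worse moves with Boltzmann probability.
        if new_cost <= cost or rng.random() < math.exp((cost - new_cost) / t):
            cost = new_cost
            if cost < best_cost:
                best_spins, best_cost = spins[:], cost
        else:
            spins[i] = -spins[i]  # reject: undo the move
    return best_spins, best_cost


if __name__ == "__main__":
    cargo = [4, 5, 6, 7, 8]  # hypothetical weights
    print("exact:", brute_force(cargo))
    print("annealed:", simulated_annealing(cargo))
```

The exhaustive search doubles in cost with every added item, which is why heuristics such as annealing, classical or quantum, become attractive as problems grow. For this five-item example the exact optimum is a perfectly balanced split (7 + 8 against 4 + 5 + 6).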

The team’s simulations and benchmarks of quantum annealers are intended to show how the machines’ unique properties can best be exploited in solving optimization problems. The benchmarks will help isolate groups of problems that are classically hard but not necessarily quantum-hard. Exploring novel ways to use quantum annealers will have immediate practical applications and may provide access to solutions that wouldn’t be found by classical systems. –Christie Thiessen

Oak Ridge National Laboratory is supported by the US Department of Energy’s Office of Science. The single largest supporter of basic research in the physical sciences in the United States, the Office of Science is working to address some of the most pressing challenges of our time. For more information, please visit science.energy.gov.