Supercomputing cuts time and risk in new drug development

Drugmakers invest heavily in research and development every year in hopes of finding the next big money maker, but the failure rate of new drug candidates is staggering. In fact, fewer than 1 percent of drugs starting in the lab make it to market.

“We work toward delivering new drugs to the market at a fraction of the cost and time that it takes now,” says Jerome Baudry, an assistant professor at the University of Tennessee (UT) and member of the Center for Molecular Biophysics at Oak Ridge National Laboratory (ORNL).

The industry responsible for keeping Americans healthy is itself in need of a prescription. UT and ORNL researchers believe that injecting the pharmaceutical industry with a strong dose of supercomputing might just be the remedy.

The problem, Baudry explains, is that the high cost of drugs is due in part to the fact that manufacturers have to recoup the cost of the drugs that fail.

The average cost of developing and bringing one drug to market can range from a few hundred million dollars to more than a billion. And it’s not out of the ordinary for drug development to take from 10 to 15 years before patients get the meds they need. The simple reason is that it takes a really long time to test pharmaceuticals.

The Food and Drug Administration (FDA) has an extensive series of phases that must be followed to a T for a drug to be approved.

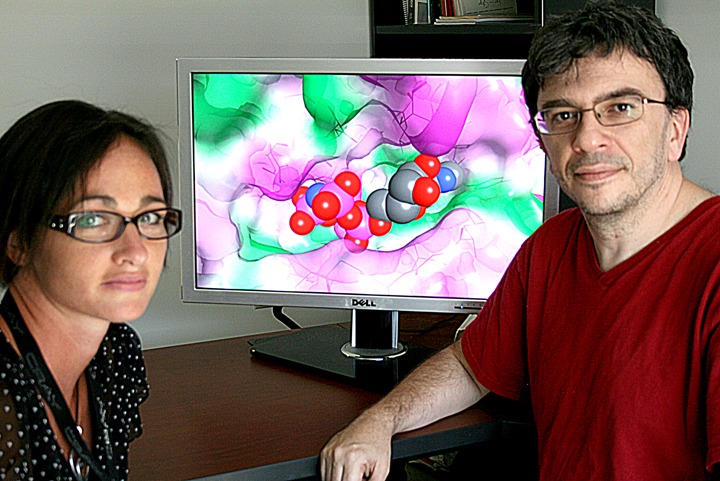

Biophysicists Sally Ellingson (left) and Jerome Baudry use simulations to find the best fit between drug candidates and their targeted receptors within the body. Image credit: Jeremy Rumsey, ORNL.

First off, developers must conduct a slew of preclinical research involving petri dishes, cultures, test tubes, animal testing, and more before they can even apply for FDA approval. Drugs spend, on average, 3 years in this stage.

After the drug developer submits an Investigational New Drug application, the FDA reviews it and, within 30 days, determines whether the drug will proceed to phase 1 of the testing process.

Phase 1 consists of administering the drug candidate to 20 to 80 volunteers to determine its efficiency in binding to its intended target and its levels of toxicity. Phase 1 typically lasts about a year. If the results are positive, testing moves into phase 2.

In the second phase of testing, the drug is given to 100 to 300 volunteers. Proper dosages are calculated, and the drug's effectiveness is continuously monitored for approximately 2 years. If all goes well, the drug makes it to third-phase testing.

Phase 3 is where most drugs fail. Hunting for both desired effects and undesired side effects, physicians give the drug to 1,000 to 3,000 volunteers with specific illnesses. Three more years could go by before the drug is declared a success.

“If drugs are going to fail, you want them to fail fast, fail cheap,” says ORNL researcher and UT Governor’s Chair Jeremy Smith, a collaborator of Baudry’s.

If the drug makes it this far—and approximately 99 percent do not—the manufacturer submits a New Drug Application to the FDA, which includes a full report of major findings to date as well as all scientific data related to the study. It can take the FDA 6 months or longer to review the submission.
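As a rough check on the timeline, the typical durations quoted above can simply be added up. This is only a back-of-the-envelope sketch: the stage names and the dictionary are illustrative, the 6-month review is the minimum the article mentions, and the 3-year phase 3 figure is one the article says "could" apply.

```python
# Typical stage durations in years, taken from the figures quoted in the article.
# The 30-day IND review is converted to a fraction of a year.
STAGES_YEARS = {
    "preclinical research": 3.0,
    "IND review": 30 / 365,
    "phase 1": 1.0,
    "phase 2": 2.0,
    "phase 3": 3.0,
    "New Drug Application review": 0.5,  # "6 months or longer"
}


def total_years(stages: dict) -> float:
    """Sum the stage durations to estimate the time from lab to approval."""
    return sum(stages.values())


if __name__ == "__main__":
    print(f"Estimated time to approval: {total_years(STAGES_YEARS):.1f} years")
```

The sum comes to roughly nine and a half years, just under the 10-to-15-year range quoted earlier, which is plausible given that several of these figures are averages or minimums.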

So, worst-case scenario, 15 years and $1.8 billion later consumers may have a pill for their ill. Of course, at this point long-term side effects continue to be documented, so if waiting for the new medicine hasn’t killed the patient, the treatment still might. And that is precisely why computational biophysicists have turned to ORNL supercomputers to more rapidly concoct a remedy.

The computational elixir

The cure-all calls for 1 part petaflop—a quadrillion calculations per second—and 65,000 processing cores. In fact, the Jaguar supercomputer at ORNL has already tested 2 million different compounds against a targeted receptor—and did so amazingly quickly. Comparatively speaking, what would have taken cluster computing systems months to accomplish, and conventional test-tube methods even longer, Jaguar did in less than a couple of days.

Baudry and his team of computational biophysicists use computers much like other scientists use microscopes. After making alterations to publicly licensed virtual high-throughput (VHT) software known as Autodock4 and AutodockVina from the Scripps Research Institute, they were able to create 3D biological simulations of compounds docking with receptors in the body.

Scaling the codes to run on Jaguar was the job of UT graduate student and computational scientist Sally Ellingson. She developed a parallelization of AutodockVina that makes the code efficient on supercomputers. Without this parallelization, data could not travel freely between processors, creating inevitable traffic jams among the CPUs.
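The general idea behind this kind of parallel screening can be sketched in a few lines. The sketch below is not Ellingson's implementation: the function names are hypothetical, `toy_score` is a made-up stand-in for a real docking calculation, and the "workers" run one after another rather than on separate processors. It only illustrates one common way to avoid traffic jams: give each worker its own share of the compound library and have it send back just its best few hits.

```python
from typing import Callable, List, Tuple

Hit = Tuple[float, str]  # (score, ligand name); a lower score means a better fit


def partition(ligands: List[str], n_workers: int) -> List[List[str]]:
    """Deal ligands out round-robin so every worker gets a near-equal share."""
    return [ligands[rank::n_workers] for rank in range(n_workers)]


def toy_score(ligand: str) -> float:
    """Hypothetical stand-in for a docking score; NOT a real docking call."""
    return -(sum(ord(ch) for ch in ligand) % 100) / 10.0


def screen_chunk(chunk: List[str], score: Callable[[str], float], keep: int) -> List[Hit]:
    """One worker's job: score its own ligands and return only its best few."""
    return sorted((score(name), name) for name in chunk)[:keep]


def screen(ligands: List[str], n_workers: int, keep: int,
           score: Callable[[str], float] = toy_score) -> List[Hit]:
    """Run every worker's chunk (serially here) and merge the short hit lists."""
    per_worker = [screen_chunk(chunk, score, keep)
                  for chunk in partition(ligands, n_workers)]
    merged = sorted(hit for hits in per_worker for hit in hits)
    return merged[:keep]


if __name__ == "__main__":
    library = [f"ligand_{i}" for i in range(50)]
    print(screen(library, n_workers=4, keep=5))
```

Because each worker returns only `keep` results, the amount of data that has to be merged grows with the number of workers rather than with the size of the library, and the final ranking is the same as if a single worker had scored everything.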

The simulations the team created are based upon the process by which molecular compounds function within the body. Pharmaceuticals work because they bind specifically to certain cellular receptors that play roles in health and disease, similar to the way a key fits a lock. When that key opens too many locks, however, side effects occur.

Baudry and his collaborators want to be able to predict the specific binding of a drug to a receptor to avoid cross-reactivity. Knowing this behavior will help researchers generate drug candidates likely to survive clinical trials.

With so many different combinations to screen, “it’s like trying to find a needle in a haystack,” Baudry says. Finding the needle becomes much easier, though, if the haystack is smaller.

Thanks to the efficient and massive computations possible using Jaguar, Baudry and his collaborators can screen drug candidates against multiple receptors and the dynamic structural variations of those receptors. The ability to run simulations greatly reduces the sample size as poor drug candidates get eliminated and ultimately produces a more specifically binding, and therefore more efficient, drug.
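To make the "smaller haystack" idea concrete, here is a minimal sketch of a selectivity filter. Everything in it is assumed for illustration: the compound and receptor names, the scores, and the `strong` and `margin` cutoffs are invented, and the only convention borrowed from docking software is that a more negative score means tighter predicted binding. It is not the team's actual screening criterion.

```python
from typing import Dict, List

# Hypothetical docking scores (more negative = tighter predicted binding).
LIBRARY: Dict[str, Dict[str, float]] = {
    "compound_A": {"receptor_1": -9.5, "receptor_2": -5.0, "receptor_3": -6.1},
    "compound_B": {"receptor_1": -9.0, "receptor_2": -8.7, "receptor_3": -4.0},
    "compound_C": {"receptor_1": -5.2, "receptor_2": -4.8, "receptor_3": -4.9},
}


def is_selective(scores: Dict[str, float], target: str,
                 strong: float = -8.0, margin: float = 2.0) -> bool:
    """Keep a compound only if it binds the target strongly and every
    other receptor at least `margin` units more weakly."""
    target_score = scores[target]
    if target_score > strong:
        return False  # the key does not fit the intended lock well enough
    return all(other - target_score >= margin
               for name, other in scores.items() if name != target)


def shrink_haystack(library: Dict[str, Dict[str, float]], target: str) -> List[str]:
    """Return the names of compounds that pass the selectivity filter."""
    return [name for name, scores in library.items() if is_selective(scores, target)]


if __name__ == "__main__":
    print(shrink_haystack(LIBRARY, "receptor_1"))
```

In this toy library, only `compound_A` survives: `compound_B` fits a second receptor almost as well as its target, and `compound_C` binds nothing strongly.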

The potential for virtual high-throughput simulations is practically limitless. Not only is it a tremendous tool for finding matches between compounds and their targeted receptors, but VHT modeling also works for repurposing, or finding alternative uses for drugs, another aspect that greatly excites Baudry. Many drugs investigated for one ailment can also be effective against other diseases or conditions. That means a collaborator can bring a molecule to Baudry and say, “We want to know what else this compound can do.”

At ORNL not only are the methods to screen these new drug candidates improving, but so too are the technologies.

The next time virtual screenings are run for new drug candidates, it will not be on Jaguar but on Titan, an even more powerful supercomputer resulting from an upgrade to Jaguar that adds accelerated NVIDIA graphics processing units to speed up the math and take some of the load off the central processing units.

ORNL scientists hope to be running the codes at around 20 petaflops by next year, a nearly tenfold increase in computational power. At that rate, Titan would be able to screen 20 million compounds against a targeted receptor in just one day.
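The 20-million-a-day figure is consistent with a simple linear-scaling estimate. The sketch below assumes that screening throughput grows in direct proportion to peak petaflops, that the Jaguar run used about 1 petaflop, and that it took the full two days; all three are simplifying assumptions, and the function name is illustrative.

```python
def scaled_throughput(compounds: float, days: float,
                      base_pflops: float, new_pflops: float) -> float:
    """Estimate compounds screened per day on a faster machine,
    assuming throughput scales linearly with peak petaflops."""
    per_day = compounds / days
    return per_day * (new_pflops / base_pflops)


if __name__ == "__main__":
    # Jaguar: 2 million compounds in at most 2 days at roughly 1 petaflop.
    estimate = scaled_throughput(2_000_000, 2, base_pflops=1, new_pflops=20)
    print(f"Estimated throughput at 20 petaflops: {estimate:,.0f} compounds per day")
```

Real codes rarely scale perfectly, so this is better read as an upper-level sanity check than as a performance prediction.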

“In the field of new drug discovery, Titan will be the equivalent of the Saturn V rocket. We are going to be able to fly to the moon,” Baudry says. “We will be able to simulate going inside patients and inside their cells instead of the test tube, and that’s a revolution.”

By Jeremy Rumsey