Supercomputers help optimize engines, turbines, and other technologies for clean energy

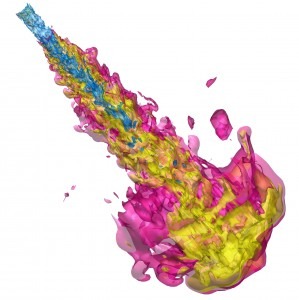

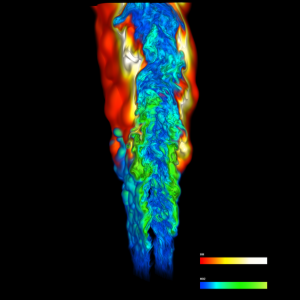

High-fidelity large eddy simulation (LES) of direct-injection processes in internal-combustion engines is essential for developing high-efficiency, low-emissions vehicles. Here LES reveals how fuel from a state-of-the-art injector mixes with air inside an engine cylinder. Image credit: Joseph Oefelein and Daniel Strong, Sandia National Laboratories.

Air and fuel mix violently during turbulent combustion. The ferocious mixing needed to ignite fuel and sustain its burning is governed by the same fluid dynamics equations that depict smoke swirling lazily from a chimney. Large swirls spin off smaller swirls and so on. The multiple scales of swirls pose a challenge to the supercomputers that solve those equations to simulate turbulent combustion. Researchers rely on these simulations to develop clean-energy technologies for power and propulsion.
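Standard turbulence theory gives a rough sense of why this cascade of swirls is so costly. The theory is Kolmogorov scaling, which the article itself does not describe. It holds that the smallest eddies shrink relative to the largest roughly as Re^(-3/4), where Re is the Reynolds number. A three-dimensional grid that resolves every eddy therefore needs on the order of Re^(9/4) points. The sketch below gives an order-of-magnitude estimate only. It ignores constants and the extra resolution that flame chemistry demands, and the Reynolds numbers in it are illustrative assumptions.

```python
def dns_grid_points(reynolds: float) -> float:
    """Order-of-magnitude grid-point count to resolve all turbulent eddies in 3D.

    Assumes Kolmogorov scaling: eddies per direction ~ Re**(3/4), so the
    3D total ~ Re**(9/4). Constants of order one are ignored.
    """
    per_direction = reynolds ** 0.75
    return per_direction ** 3


if __name__ == "__main__":
    # Illustrative Reynolds numbers, not values taken from the article.
    for re in (1e3, 1e4, 1e5):
        print(f"Re = {re:.0e}: ~{dns_grid_points(re):.1e} grid points")
```

Each tenfold increase in Re multiplies the estimate by 10^(9/4), roughly 180. This is why resolving every scale quickly outgrows anything but a supercomputer.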

A team led by mechanical engineers Joseph Oefelein and Jacqueline Chen of Sandia National Laboratories (Sandia) simulates turbulent combustion at different scales. A burning flame can manifest chemical properties on small scales from billionths of a meter up to thousandths of a meter, whereas the motion of an engine valve can exert effects at large scales from hundredths of a meter down to millionths of a meter. This multiscale complexity is common across all combustion applications—internal combustion engines, rockets, turbines for airplanes and power plants, and industrial boilers and furnaces.

Chen and Oefelein were allocated 113 million processor hours on the Oak Ridge Leadership Computing Facility’s Jaguar supercomputer in 2008, 2009, and 2010 to simulate autoignition and injection processes with alternative fuels. For 2011 they received 60 million processor hours for high-fidelity simulations of combustion in advanced engines. Their team uses simulations to develop predictive models validated against benchmark experiments. These models are then used in engineering-grade simulations, which run on desktops and clusters to optimize designs of combustion devices using diverse fuels. Because industrial researchers must run thousands of calculations varying a single parameter to optimize a part design, each calculation needs to be inexpensive.

“Supercomputers are used for expensive benchmark calculations that are important to the research community,” Oefelein said. “We [researchers at national labs] use the Oak Ridge Leadership Computing Facility to do calculations that industry and academia don’t have the time or resources to do.”

The goal is a shorter, cheaper design cycle for U.S. industry. The work addresses Department of Energy (DOE) mission objectives: to maintain a vibrant science and engineering effort as a cornerstone of American economic prosperity, and to lead the research, development, demonstration, and deployment of technologies that improve energy security and efficiency. The research was funded by DOE through the Office of Science’s Advanced Scientific Computing Research and Basic Energy Sciences programs, the Office of Energy Efficiency and Renewable Energy’s Vehicle Technologies program, and the Combustion Energy Frontier Research Center, which is supported by the American Recovery and Reinvestment Act (ARRA).

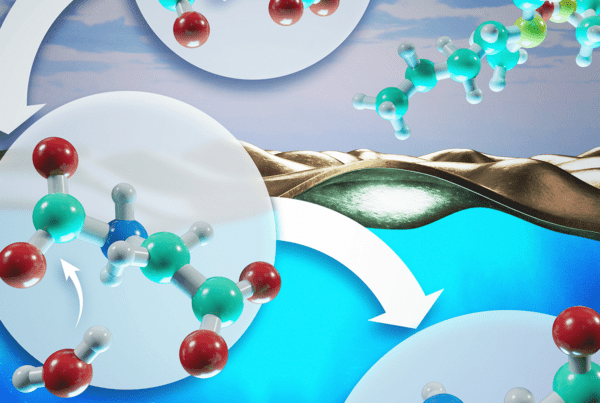

Making combustion more efficient would have major consequences, given our reliance on natural gas and oil. Americans use two-thirds of their petroleum for transportation and one-third for heating buildings and generating electricity. “If low-temperature compression ignition concepts employing dilute fuel mixtures at high pressure are widely adopted in next-generation autos, fuel efficiency could increase by as much as 25 to 50 percent,” Chen said.

Complementary codes

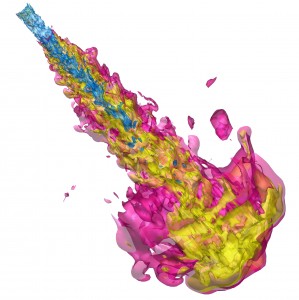

Direct numerical simulation reveals turbulent combustion of a lifted ethylene/air jet flame in a heated coflow of air. Computation by Chun Sang Yoo and Jackie Chen of Sandia National Laboratories, rendering by Hongfeng Yu of Sandia. Reference: C. S. Yoo, E. S. Richardson, R. Sankaran, and J. H. Chen, “A DNS Study of the Stabilization Mechanism of a Turbulent Lifted Ethylene Jet Flame in Highly-Heated Coflow,” Proc. Combust. Inst. 33 (2011) 1619–1627.

Chen uses a direct numerical simulation (DNS) code called S3D to simulate the finest microscales of turbulent combustion on a three-dimensional virtual grid. The code models combustion free of the geometry of any particular device. Such canonical cases emulate physics important in both fast ignition events and slower turbulent eddies, and they provide insight into how flames stabilize, extinguish, and reignite.

Oefelein, on the other hand, uses a large eddy simulation (LES) code called RAPTOR to model processes in laboratory-scale burners and engines. LES captures large-scale mixing and combustion processes dominated by geometric features, such as the centimeter scale on which an engine valve opening might perturb air and fuel as they are sucked into a cylinder, compressed, mixed, burned to generate power, and pushed out as exhaust.

Whereas DNS depicts the fine-grained detail, LES starts at large scales and works its way down. The combination of LES and DNS running on petascale computers can provide a nearly complete picture of combustion processes in engines.

“Overlap between DNS and LES means we’ll be able to come up with truly predictive simulation techniques,” Oefelein said. Today experimentalists burn fuel, collect data about the flame, and provide the experimental conditions to computational scientists. The computational scientists use those conditions as inputs to a supercomputer simulation of a flame with the same characteristics as the observed one. Researchers compare simulation with observation to improve their models. The goal is to gain confidence that simulations will predict what happens in the experiments. New products could then be designed with inexpensive simulations and fewer prototypes.

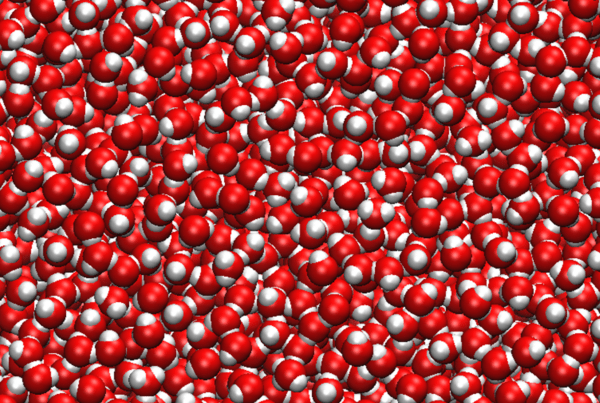

In a high-fidelity DNS with cells just 5 millionths of a meter wide, Chen’s team modeled combustion in a canonical high-pressure, low-temperature domain to investigate processes relevant to homogeneous charge compression ignition. Another simulation, using 7 billion grid points, explored syngas, a mix of carbon monoxide and hydrogen that burns more cleanly than coal and other carbonaceous fuels. It used 120,000 (approximately half) of Jaguar’s processors and generated three-quarters of a petabyte of data.
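Simple arithmetic puts the figures in this paragraph in proportion. The sketch below assumes a uniform cubic grid and decimal units (1 petabyte = 10^15 bytes). The 1-centimeter cube is a hypothetical example chosen to match the valve-scale motion mentioned earlier. It is not the domain of either simulation described here.

```python
def cells_in_cube(side_m: float, cell_m: float) -> int:
    """Cells in a uniform cubic grid with the given side length and cell width."""
    per_side = round(side_m / cell_m)  # round to absorb floating-point error
    return per_side ** 3


GRID_POINTS = 7_000_000_000  # syngas DNS grid points (from the article)
PROCESSORS = 120_000         # Jaguar processors used (from the article)
DATA_BYTES = 0.75e15         # three-quarters of a petabyte; decimal units assumed

points_per_processor = GRID_POINTS / PROCESSORS
bytes_per_point = DATA_BYTES / GRID_POINTS  # averaged over everything written

# Hypothetical: a 1 cm cube resolved with 5-micrometer cells, as in the HCCI DNS.
cube_cells = cells_in_cube(0.01, 5e-6)

if __name__ == "__main__":
    print(f"{points_per_processor:,.0f} grid points per processor")
    print(f"{bytes_per_point / 1e3:,.0f} kB written per grid point over the run")
    print(f"{cube_cells:,} cells for a 1 cm cube at 5 um resolution")
```

At 5-micrometer resolution, a single cubic centimeter would need about as many cells as the entire syngas run. This is one reason fully resolved DNS is confined to small canonical domains, while LES handles device-scale geometry.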

Since their 2008 simulation of a hydrogen flame (the first to fully resolve detailed chemical interactions such as species composition and temperature in a turbulent flow environment), Chen’s team has simulated fuels of increasing complexity. Because real-world fuels are mixtures, the simulations use surrogates to represent an important fuel component. Current simulations focus on dimethyl ether (an oxygenated fuel) and n-heptane (a diesel surrogate). Simulations are planned for ethanol and iso-octane (surrogates for biofuel and gasoline, respectively).

Oefelein uses RAPTOR to investigate turbulent reacting flows in engines. In a GM-funded experimental engine designed at the University of Michigan and used by the research community as a benchmark, he explores phenomena such as cycle-to-cycle variations, which can cause megaknock, an extreme form of the “bouncing marbles” sound sometimes heard in older engines. The fuel injection, air delivery, and exhaust systems are interconnected, and pressure oscillations have huge effects on engine performance. “Megaknock can do significant damage to an engine in one shot,” said Oefelein. He noted that combustion instabilities also plague power plants, where fear of them forces gas-turbine designers to make conservative choices that lessen performance. “Combustion instabilities can destroy a multimillion-dollar system in milliseconds,” Oefelein said.

Petascale simulations will light the way for clean-energy devices and fuel blends that lessen combustion instabilities. The data they generate will inform and accelerate next-generation technologies that increase energy security, create green jobs, and strengthen the economy.

Chen and Oefelein’s collaborators include Gaurav Bansal, Hemanth Kolla, Bing Hu, Guilhem Lacaze, Rainer Dahms, Hongfeng Yu, and Ajith Mascarenhas of Sandia; Ramanan Sankaran of ORNL; Evatt Hawkes of the University of New South Wales; Ray Grout of the National Renewable Energy Laboratory; and Andrea Gruber of SINTEF (the Foundation for Scientific and Industrial Research at the Norwegian Institute of Technology).

By Dawn Levy