PI: Mark Taylor, Sandia National Laboratories

In 2016, the Department of Energy’s Exascale Computing Project (ECP) set out to develop advanced software for the arrival of exascale-class supercomputers capable of a quintillion (10^18) or more calculations per second. That meant rethinking, reinventing, and optimizing dozens of scientific applications and software tools to leverage exascale’s thousandfold increase in computing power. That time has arrived as the first DOE exascale computer – the Oak Ridge Leadership Computing Facility’s (OLCF’s) Frontier – opens to users around the world. “Exascale’s New Frontier” explores the applications and software technology for driving scientific discoveries in the exascale era.

The Scientific Challenge

Gauging the likely impact of a warming climate on global and regional water cycles poses one of the top challenges in climate change prediction. Scientists need climate models that span decades and include detailed atmospheric, oceanic, and ice conditions in order to make useful predictions. But 3D models of the complicated interactions between these elements, particularly the churning, convective motion behind cloud formation, have remained computationally expensive and outside the reach of even the largest, most powerful supercomputers — until now.

Why Exascale?

The Energy Exascale Earth System Model-Multiscale Modeling Framework – or E3SM-MMF – project overcomes these obstacles by combining a new cloud physics model with massive exascale throughput to enable climate simulations that run at unprecedented speed and scale. The E3SM-MMF team has spent five years optimizing these codes for exascale supercomputers to deliver the highest-resolution climate simulations that can span decades.

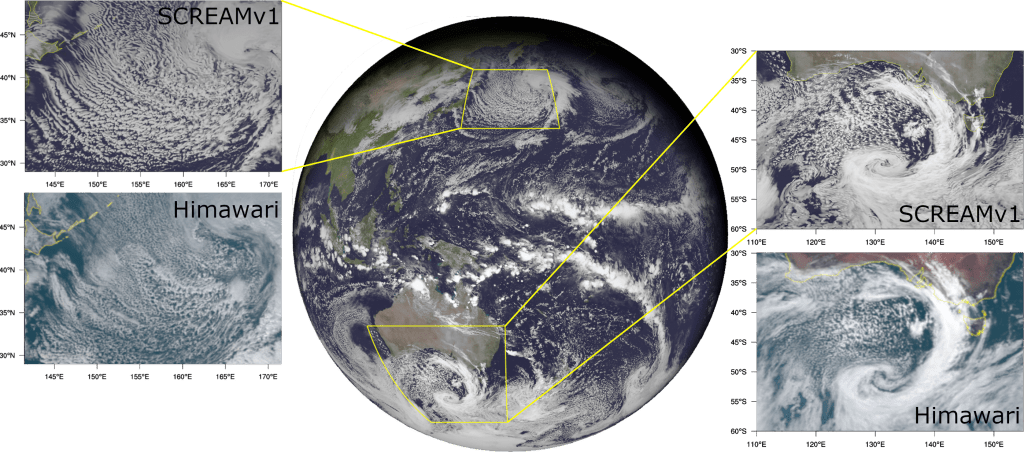

Frontier’s exascale power enables the Simple Cloud-Resolving E3SM Atmosphere Model to run years’ worth of climate simulations at unprecedented speed and scale. Credit: Ben Hillman/Sandia National Laboratories, U.S. Dept. of Energy

“Exascale will be a real boon for this class of model,” said Mark Taylor, E3SM-MMF project leader and a distinguished technical staff member at Sandia National Laboratories. “Frontier’s unique architecture of CPUs and GPUs makes possible things we couldn’t do before.”

Frontier Success

Adapting the project’s codes to run on GPUs brought immense gains in performance. E3SM-MMF has run across 5,000 of Frontier’s 9,000 nodes, simulating conditions at resolutions as high as 1 km grid spacing at speeds that surpass initial goals. Researchers had hoped to achieve just a year’s worth of simulations over a day’s run; Frontier routinely delivers more than five years’ worth of simulations in the same time. The overall peak performance on Frontier reflects a 2.7x improvement over the best E3SM-MMF results on Summit, Frontier’s 200-petaflop predecessor. The GPU-enabled code also runs 10 times faster than CPU-only attempts.
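To put those throughput figures in perspective, the short Python sketch below converts simulated years per day into wall-clock time for a multidecade run. It is an illustration only, not part of the E3SM-MMF code: the function name `wall_clock_days` is hypothetical, "a day's run" is assumed to mean 24 hours of wall-clock time, the five-years-per-day rate is treated as a lower bound, and the 40-year span is the simulation cycle Taylor cites later in this article.

```python
def wall_clock_days(simulated_years: float, years_per_day: float) -> float:
    """Wall-clock days needed to simulate `simulated_years` at a given throughput.

    `years_per_day` is the number of simulated years completed per day of run time.
    """
    if years_per_day <= 0:
        raise ValueError("years_per_day must be positive")
    return simulated_years / years_per_day


# Figures taken from the article; treated here as round-number assumptions.
GOAL_YEARS_PER_DAY = 1.0      # original target: one simulated year per day
FRONTIER_YEARS_PER_DAY = 5.0  # lower bound: "more than five years" per day
SPAN_YEARS = 40.0             # 40-year cycle mentioned later in the article

days_at_goal = wall_clock_days(SPAN_YEARS, GOAL_YEARS_PER_DAY)
days_on_frontier = wall_clock_days(SPAN_YEARS, FRONTIER_YEARS_PER_DAY)

print(f"At the original goal: {days_at_goal:.0f} days of run time")
print(f"At Frontier's reported rate: at most {days_on_frontier:.0f} days of run time")
```

Under these assumptions, a 40-year simulation that would have taken about 40 days at the original target rate fits in roughly eight days or less on Frontier.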

“A visualization of the model can now be directly compared to satellite pictures of the same area, and the resemblance is striking,” Taylor said. “You can basically overlay them and see comparable quality. We put in the work on our codes, and the GPUs are definitely delivering.”

One element of the project — the Simple Cloud-Resolving E3SM Atmosphere Model, or SCREAM, which focuses on cloud formations — received the 2023 Gordon Bell Special Prize for Climate Modeling.

What’s Next?

Taylor expects the massive speedup enabled by Frontier to ultimately make possible the kind of long-range climate modeling scientists have dreamed of, complete with detailed insights into the possible consequences of droughts, floods and other calamities.

Frontier’s exascale power enables the Simple Cloud-Resolving E3SM Atmosphere Model to run years’ worth of climate simulations at unprecedented speed and scale. Credit: Paul Ullrich/Lawrence Livermore National Laboratory, U.S. Dept. of Energy

“Now that we can afford to run the model at scale, we can start using it for long-term climate simulations over the 40-year cycles we need and at higher fidelity than was ever available before,” he said. “This kind of scale allows us to predict the impact of climate change on severe events and on freshwater supplies and how those events can affect energy production.”

Support for this research came from the Energy Exascale Earth System Model project, funded by the DOE Office of Science’s Office of Biological and Environmental Research; from the Exascale Computing Project, a collaborative effort of the DOE Office of Science and the National Nuclear Security Administration; and from the DOE Office of Science’s Advanced Scientific Computing Research program. The OLCF is a DOE Office of Science user facility.

Related publication: Mark Taylor, et al., “The Simple Cloud-Resolving E3SM Atmosphere Model Running on the Frontier Exascale System,” The International Conference for High Performance Computing, Networking, Storage and Analysis (2023), DOI: https://dl.acm.org/doi/10.1145/3581784.3627044

UT-Battelle LLC manages ORNL for DOE’s Office of Science, the single largest supporter of basic research in the physical sciences in the United States. DOE’s Office of Science is working to address some of the most pressing challenges of our time. For more information, visit https://energy.gov/science.