The world’s first exascale supercomputer will help scientists peer into the future of global climate change and open a window into weather patterns that could affect the world a generation from now.

The research team used Frontier, the 1.14-exaflop HPE Cray EX supercomputer at the Department of Energy’s Oak Ridge National Laboratory, to achieve record speeds in modeling worldwide cloud formations in 3D. The computational power of Frontier, the fastest computer in the world, shrinks the work of years into days to bring detailed estimates of the long-range consequences of climate change and extreme weather within reach.

“This is the new gold standard for climate modeling,” said Mark Taylor, a distinguished scientist at Sandia National Laboratories and lead author of the study. “Frontier’s unique computing architecture makes possible things we couldn’t do before.”

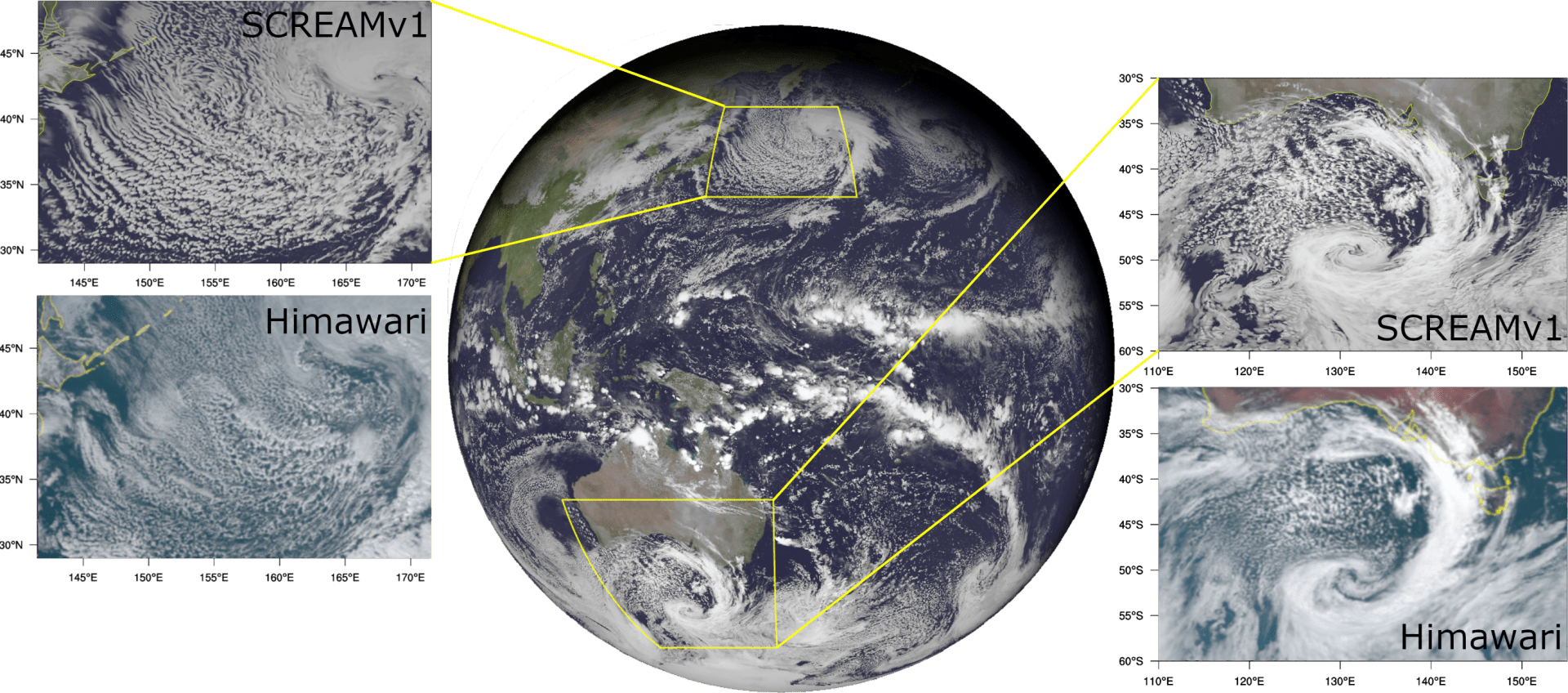

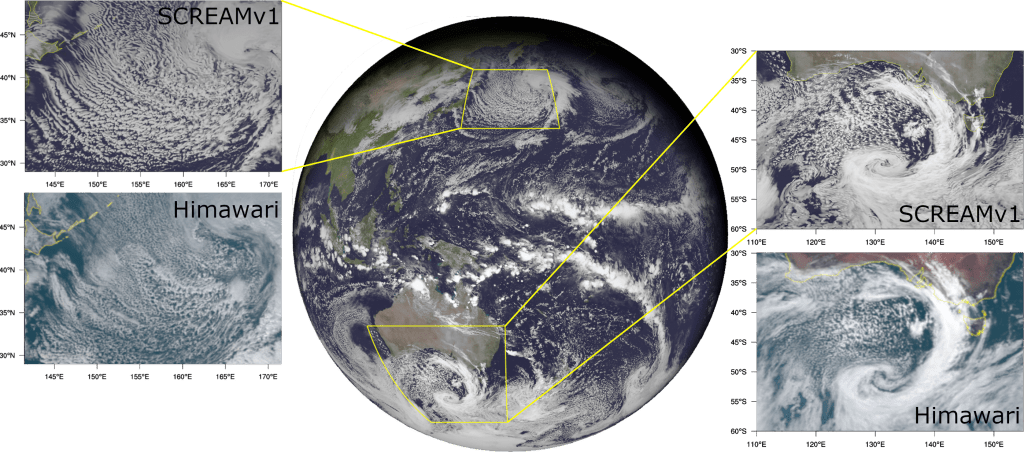

Frontier’s exascale power enables the Simple Cloud-Resolving E3SM Atmosphere Model to run years’ worth of climate simulations at unprecedented speed and scale. Credit: Ben Hillman/Sandia National Laboratories, U.S. Dept. of Energy

The study earned the team a finalist nomination for the Association for Computing Machinery Gordon Bell Special Prize for Climate Modeling. The prize will be presented at this year’s International Conference for High Performance Computing, Networking, Storage and Analysis, or SC23, in Denver, where the team will present its results on Nov. 15.

Gauging the likely impact of a warming climate on global and regional water cycles poses one of the top challenges in climate change prediction. Scientists need climate models that span decades and include detailed atmospheric, oceanic and ice conditions to make useful predictions.

But 3D models that can resolve the complicated interactions between these elements, particularly the churning, convective motion behind cloud formation, have remained computationally expensive, leaving climate-length simulations outside the reach of even the largest, most powerful supercomputers — until now.

The Energy Exascale Earth System Model, or E3SM, project overcomes these obstacles by combining a new software approach with massive exascale throughput to enable climate simulations that run at unprecedented speed and scale. The Simple Cloud-Resolving E3SM Atmosphere Model, or SCREAM, focuses on cloud formations as part of the overall project.

Frontier’s exascale power enables the Simple Cloud-Resolving E3SM Atmosphere Model to run years’ worth of climate simulations at unprecedented speed and scale. Credit: Paul Ullrich/Lawrence Livermore National Laboratory, U.S. Dept. of Energy

“It’s long been a dream for the climate modeling community to run kilometer-scale models at sufficient speed to facilitate forecasts over a span of decades, and now that has become reality,” said Sarat Sreepathi, a co-author of the study and performance coordinator for the project. “These kinds of simulations were computationally demanding enough to warrant an exascale supercomputer. Without Frontier, it doesn’t happen.”

Peter Caldwell, a climate scientist at Lawrence Livermore National Laboratory, and his team spent the past five years building from scratch a new cloud model designed to run on the graphics processing units, or GPUs, that power Frontier and other top supercomputers.

“Most climate and weather models are struggling to take advantage of GPUs,” Caldwell said. “SCREAM is of huge interest to other modeling centers as a successful example of how to make this transition.”

HPE, Frontier’s maker, assisted in the optimization effort through the Frontier Center of Excellence at the Oak Ridge Leadership Computing Facility, which houses Frontier.

“The performance-portable codebase of SCREAM gave us a great vantage point for early deployment on Frontier, and further optimizations enhanced our computational performance,” Sreepathi said. “The overall effort is a nice exemplar of interdisciplinary collaboration between climate and computer scientists.”

Adapting the code to run on GPUs brought immense gains in performance. SCREAM can run across 8,192 of Frontier’s nodes to simulate more than a year’s worth of global cloud formations – 1.25 years total – in a single 24-hour run, a feat beyond the reach of any previous machine.

“That means these long-range simulations of 30 or 40 years are now doable in a matter of weeks,” Sreepathi said. “This was practically infeasible before.”
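The arithmetic behind that estimate can be sketched in a few lines of Python. This is only a back-of-the-envelope illustration, not part of the team’s workflow: it assumes the reported rate of 1.25 simulated years per 24-hour run holds continuously, with no queue waits, restarts or output overhead, and the function name `wall_clock_days` is made up for this example.

```python
# Reported throughput from the article: 1.25 simulated years per
# 24-hour run on 8,192 Frontier nodes.
SIM_YEARS_PER_DAY = 1.25


def wall_clock_days(simulated_years: float, rate: float = SIM_YEARS_PER_DAY) -> float:
    """Days of continuous running needed to cover `simulated_years`.

    Assumption (not from the study): the rate is sustained without
    interruption, so this is a lower bound on real elapsed time.
    """
    return simulated_years / rate


if __name__ == "__main__":
    for years in (30, 40):
        days = wall_clock_days(years)
        print(f"{years} simulated years: {days:.0f} days (about {days / 7:.1f} weeks)")
```

Under those assumptions, a 30-year simulation takes 24 days of continuous running and a 40-year simulation takes 32 days, which fits Sreepathi’s “matter of weeks.”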

The simulations’ 3-kilometer resolution provides enough detail for researchers to overlay the results on satellite images for comparison.

“The resemblance is striking,” Taylor said. “This kind of resolution and scale allows us to predict the impact of climate change on severe weather events, on freshwater supplies, on energy production.”

Sreepathi said he expects further success stories on Frontier and on the next generation of exascale supercomputers.

“We want to achieve even higher resolutions and to couple the atmospheric model with other earth system components such as oceanographic data for a holistic view,” he said. “Thanks to the work we’ve put in, SCREAM is portable across diverse computer architectures – not just Frontier’s – so we’re well-positioned to take advantage of not only current but also future exascale machines.”

Support for this research came from the Energy Exascale Earth System Model project, funded by the DOE Office of Science’s Office of Biological and Environmental Research; from the Exascale Computing Project, a collaborative effort of the DOE Office of Science and the National Nuclear Security Administration; and from the DOE Office of Science’s Advanced Scientific Computing Research program. The OLCF is a DOE Office of Science user facility.

Related publication: Mark Taylor, et al., “The Simple Cloud-Resolving E3SM Atmosphere Model Running on the Frontier Exascale System,” The International Conference for High Performance Computing, Networking, Storage and Analysis (2023), DOI: https://dl.acm.org/doi/10.1145/3581784.3627044

UT-Battelle manages ORNL for DOE’s Office of Science, the single largest supporter of basic research in the physical sciences in the United States. DOE’s Office of Science is working to address some of the most pressing challenges of our time. For more information, visit https://energy.gov/science.