A team of researchers from the European Centre for Medium-Range Weather Forecasts (ECMWF) and the US Department of Energy’s (DOE’s) Oak Ridge National Laboratory (ORNL) achieved a computational first: a global simulation of the Earth’s atmosphere at an average grid spacing of roughly 1 kilometer, sustained for a full 4-month season. The team ran the simulation on the Oak Ridge Leadership Computing Facility’s (OLCF’s) IBM AC922 Summit, the nation’s most powerful supercomputer devoted to open science.

Completed in June, the milestone marks a major improvement in resolution for the Integrated Forecasting System (IFS) code, also known as the “European Model,” which currently runs at 9-kilometer grid spacing for routine weather forecasts. The work is also the first step in an effort to produce multi-season atmospheric simulations at high resolution, pointing toward a future of weather forecasting powered by exascale supercomputers.
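To put the jump from 9-kilometer to 1-kilometer grid spacing in perspective, the sketch below makes a back-of-the-envelope count of horizontal grid columns. It assumes, for illustration only, that the globe is tiled with equal square cells whose side equals the grid spacing; the real IFS grid (an octahedral reduced Gaussian grid) is not built this way, so actual point counts differ. The function name `approx_grid_columns` is hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius in kilometers


def approx_grid_columns(spacing_km: float) -> int:
    """Idealized number of horizontal grid columns if the Earth's surface
    were tiled with square cells of side `spacing_km` (an assumption;
    operational IFS grids are not uniform squares)."""
    surface_area_km2 = 4.0 * math.pi * EARTH_RADIUS_KM ** 2
    return round(surface_area_km2 / spacing_km ** 2)


for spacing in (9.0, 1.0):
    print(f"{spacing:>4.1f} km spacing -> ~{approx_grid_columns(spacing):,} columns")

# The column count scales with the inverse square of the spacing.
ratio = approx_grid_columns(1.0) / approx_grid_columns(9.0)
print(f"~{ratio:.0f}x more columns per model level at 1 km than at 9 km")
```

Because the count scales with the inverse square of the spacing, a ninefold refinement means roughly 81 times as many columns on every model level, before accounting for the shorter time steps that finer grids typically require.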

“In this project, we have now shown for the first time that simulations at this resolution can be sustained over a long time span—a full season—and that the large amount of data that are produced can be handled on a supercomputer such as Summit,” said Nils Wedi, head of Earth System Modelling at ECMWF. “We provide factual numbers for what it takes to realize these simulations, and what one may expect both in terms of data volumes and scientific results. We therefore provide a baseline against which future research may be evaluated.”

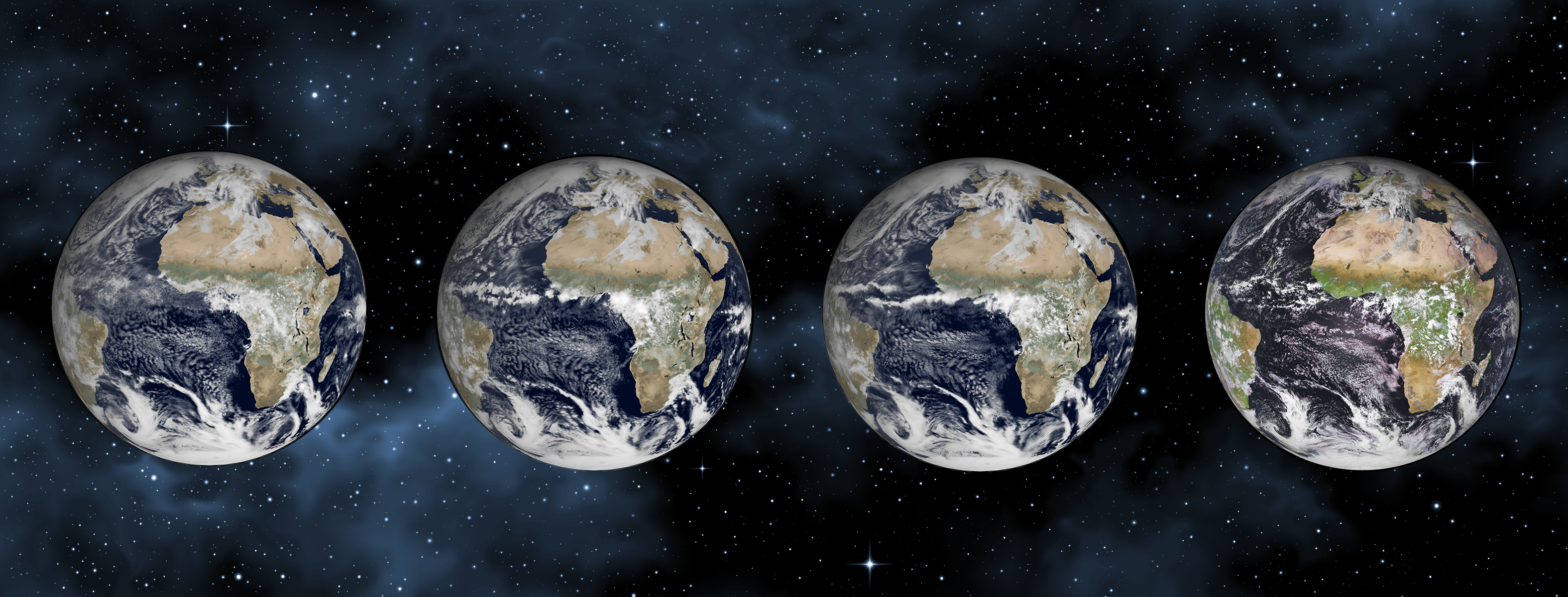

Animation of 3-hourly accumulated radiative fluxes at the top of the atmosphere in the visible (colors, daytime) and infrared (grayscale, nighttime) spectrum, as they would be seen by a satellite looking down on the Americas, such as NOAA’s Geostationary Operational Environmental Satellite (GOES). The globe on the left shows a segment of the seasonal simulation from 1 November 2018 to 28 February 2019, taken from the Summit simulation at 1.4 km grid spacing. The globe in the middle shows the current state of the art in operational numerical weather prediction (“the European model”) at 9 km grid spacing. Apart from horizontal resolution, a key difference between the two is how they treat the vertical redistribution of heat by deep convection (thunderstorms with cumulonimbus clouds are a well-known example). At 1.4 km, deep convection is simulated explicitly; at 9 km, only its bulk statistical effect is represented, because convection occurs in nature at scales much smaller than the model’s 9 km × 9 km grid boxes. The globe on the right shows what happens when deep convection is nevertheless simulated explicitly at 9 km: thunderstorm clusters and events typically form at too large a scale. Animation courtesy of Philippe Lopez/ECMWF.

Established in 1975, the ECMWF is an intergovernmental organization of 34 member and cooperating countries that provides numerical weather predictions to its members and also sells forecast data to commercial weather services. The original IFS code was written some 30 years ago and has since become one of the leading global weather-forecast systems, continually updated and optimized for many generations of supercomputers at ECMWF. To further improve the code’s performance and scalability, ECMWF researchers also test it on external high-performance computing systems, including the OLCF’s now-decommissioned Titan and, currently, Summit.

Armed with a 2020 allocation of compute time from the DOE’s Innovative and Novel Computational Impact on Theory and Experiment (INCITE) program, Wedi and his team aim to deliver a “quantum leap” forward in simulating and understanding the Earth’s weather and climate. Results from the 4 months of simulated weather are still being analyzed, but the team expects them to yield a wealth of information that will lead to more precise forecasts.

The 1-kilometer grid spacing was made possible partly by special adaptations of the I/O scheme that exploit Summit’s memory hierarchy and network, and the simulations were further accelerated by recoding IFS to use Summit’s GPU accelerators. At this higher resolution, the simulations can represent variations in topography in much finer detail. For example, IFS’s current operational model represents the Himalayas at heights of only about 6,000 meters, because elevations are averaged over each 9-kilometer grid box, smoothing out the peaks. With 1-kilometer grid boxes, the averaged topography is much closer to reality: the Himalayan peaks now appear near their true heights of 8,000 meters, with much better resolved gradients representing the slopes. This added detail yields more realistic airflow, which in turn helps determine global circulation patterns.
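The smoothing effect described above comes from area-averaging: a grid box can only hold the mean elevation of the terrain beneath it. The sketch below illustrates this with a hypothetical 1-D terrain profile (a 4,000 m plateau with a narrow 8,000 m peak) sampled at 1 km cell centers, then block-averaged into 9 km boxes. The profile shape and the names `synthetic_profile` and `block_average` are illustrative assumptions, not IFS’s actual orography processing, which works on 2-D fields with its own filtering.

```python
import math


def synthetic_profile(n_km: int, peak_m: float = 8000.0, base_m: float = 4000.0,
                      width_km: float = 4.0) -> list[float]:
    """Hypothetical 1-D terrain sampled at 1 km cell centers: a plateau at
    base_m with a narrow Gaussian peak reaching peak_m at the center."""
    center = n_km / 2
    return [base_m + (peak_m - base_m) * math.exp(-((x + 0.5 - center) / width_km) ** 2)
            for x in range(n_km)]


def block_average(samples: list[float], factor: int) -> list[float]:
    """Average consecutive, non-overlapping blocks of `factor` samples,
    mimicking the mean elevation a coarser grid box sees."""
    return [sum(samples[i:i + factor]) / factor
            for i in range(0, len(samples) - factor + 1, factor)]


fine_1km = synthetic_profile(81)          # 81 km transect at 1 km spacing
coarse_9km = block_average(fine_1km, 9)   # same transect in 9 km boxes

print(f"highest point, 1 km grid: {max(fine_1km):.0f} m")
print(f"highest point, 9 km grid: {max(coarse_9km):.0f} m")
```

With these assumed parameters the 9 km maximum comes out roughly 1,200 m lower than the 1 km peak, even though both grids describe the same mountain; averaging in two dimensions would typically flatten an isolated peak further.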

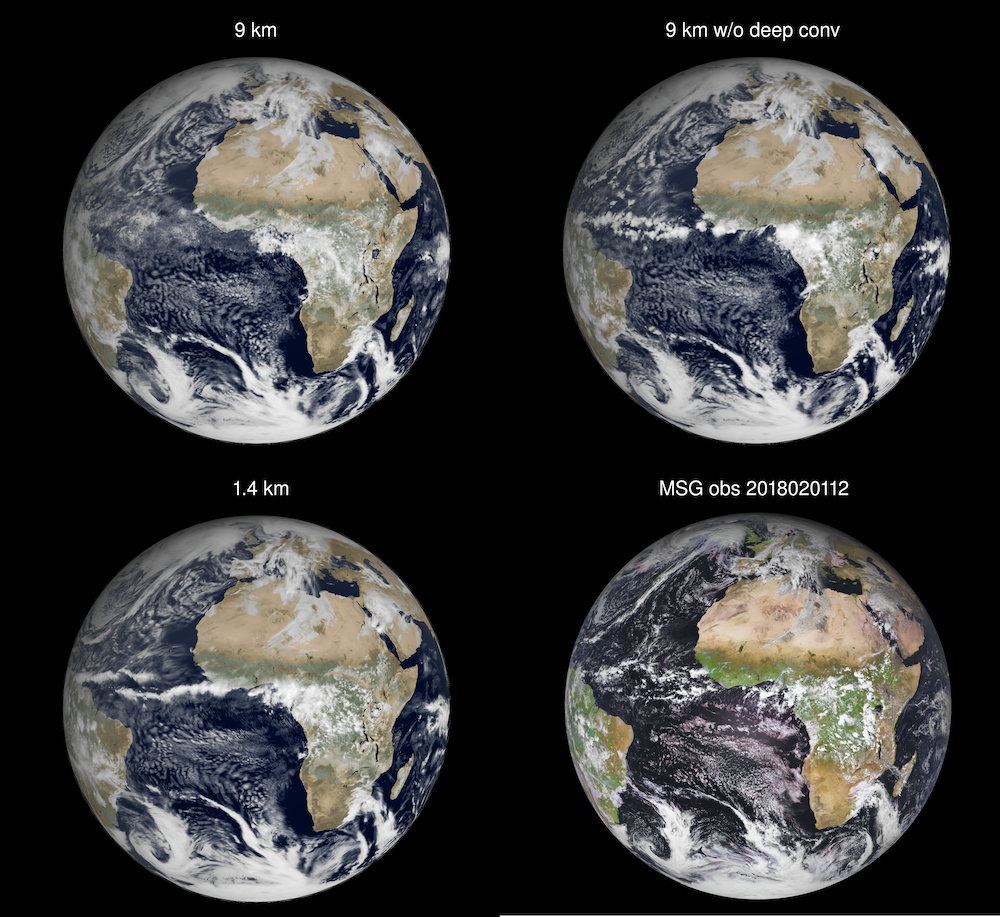

The 1-kilometer grid spacing also allows the model to represent atmospheric processes that were previously too small to capture at 9-kilometer spacing. Tropical thunderstorms, for example, which can move warm, moist air into the upper atmosphere through a process called deep convection, can now be simulated explicitly. This gives a better picture of tropical atmospheric motions, which in turn affect circulation patterns in the rest of the world.

“We are not simply looking at whether we can make an improvement at any given locality, but ideally all these things should translate into a global circulation system,” said Val Anantharaj, an ORNL computational scientist who serves as data liaison on the project. “If we can resolve the global atmospheric circulation pattern better, then we should be able to produce better forecasts.”

These simulated satellite images of the Earth show how resolution improves in the ECMWF Integrated Forecasting System across three configurations: 9-kilometer grid spacing with parametrized deep convection (top left), 9-kilometer grid spacing without parametrized deep convection (top right), and 1-kilometer grid spacing (bottom left). The bottom right shows a Meteosat Second Generation satellite image at the same verifying time. Image courtesy ECMWF/EUMETSAT.

Once their paper is published, the team will make the simulation’s data available to the international science community. Because the high-resolution simulations do away with some of the fundamental modeling assumptions common in conventional simulations, such as parametrized deep convection, their data may help improve models that run at coarser resolutions.

Anantharaj said the project’s long-term goal is to simulate the remaining seasons to obtain one full modeled year, or at least to cover an Atlantic hurricane season. The ECMWF team is also looking beyond forecasting to the future of weather itself: such high-resolution models can serve as virtual laboratories for understanding how the climate is evolving and what weather may look like in a future climate.

The output volume and compute rate of these simulations can also help estimate the demands of future high-resolution models running on emerging HPC platforms as scientific computing enters the exascale era. One such system is the upcoming 1.5-exaflops Frontier, on track to be deployed in 2021 at the OLCF, a DOE Office of Science User Facility.

“The handling and data challenges that we have overcome during this project are still very large, and our simulations are at the edge of what is achievable today,” Wedi said. “However, I believe that it will be possible to run simulations with 1-kilometer grid-spacing routinely in the future—in the same way we are running simulations with 9-kilometer grid-spacing today. To continue to push these boundaries is important.”

UT-Battelle LLC manages Oak Ridge National Laboratory for DOE’s Office of Science, the single largest supporter of basic research in the physical sciences in the United States. DOE’s Office of Science is working to address some of the most pressing challenges of our time. For more information, visit https://energy.gov/science.