General Electric (GE), a company well known for its contributions to aviation, power, renewable energy, and more, aims to design gas turbine jet engines that lead the world in efficiency, emissions, and durability. The stakes are high: every few minutes, an airplane carrying one of GE’s engines takes off somewhere in the world. Competition is fierce, and customers are demanding.

One of the key requirements for successfully designing these engines is the ability to accurately predict the temperature and motion of the fluids in the high-pressure turbines that power them. Internal turbine temperatures become so high during operation that turbine components will melt if they are not cooled properly.

A team from GE Research and GE Aviation using the Summit supercomputer at the US Department of Energy’s (DOE’s) Oak Ridge National Laboratory (ORNL) has recently completed unprecedented simulations that are providing breakthrough insights about these fluid flows and revealing how to better cool engine parts for more efficient and durable turbine jet engines. Results are already changing the way GE thinks about turbine design.

An unprecedented level of detail

If you ask the engineers developing these complex turbines to describe nirvana, it would be “to have a computer model of the entire gas turbine, be able to hit go, and simulate the whole thing at once,” according to Michal Osusky, Senior Engineer in Computational Fluid Dynamics & Methods at GE Research. Although that isn’t yet reality, the GE Research team is simulating smaller pieces of the turbine (the rotating part of the engine that converts thermal energy into rotational mechanical energy) and still getting big results.

“We are trying to design the most efficient, most durable gas turbines that we can, because that forms the core of the engines that power airplanes, as well as heavy-duty gas turbines that are used for power generation,” said Sriram Shankaran, Consulting Engineer at GE Aviation. “One of the key challenges is being able to accurately predict the flow and temperature of the gases through the turbine. Temperatures are extreme and can even melt the components.”

Adding cooling flow on the surface of these components can solve this melting problem, but knowing just where to add that flow is complex and requires a deep understanding of the physics involved.

Since the 1990s, scientists have relied on computer models to simulate these fluid flows using two techniques: Reynolds-Averaged Navier-Stokes (RANS) and large eddy simulation (LES). RANS is less computationally intensive, but its results are less detailed. LES produces much higher fidelity but is very computationally intensive. As a result, RANS has been the dominant way of studying turbine flows, while LES, valued because it captures nuanced flow details that RANS misses, has been reserved for smaller representative problems.

Using LES has meant trading reasonable turnaround times for more detailed simulations. Because these calculations take so long even on large supercomputers, engineers have been limited to applying LES to representative problems, such as a small part of a turbine blade.

“The need to push to larger problems where we can capture the full set of 3D effects led us to Summit,” Shankaran said.

Summit takes the heat off design engineers

Through DOE’s Innovative and Novel Computational Impact on Theory and Experiment, or INCITE, program, GE received a grant of time on the Summit supercomputer at the Oak Ridge Leadership Computing Facility (OLCF), a DOE Office of Science User Facility at ORNL.

“Over the past decade, GE has invested in building relationships and engagements with the DOE Leadership Computing Facilities when faced with our toughest challenges,” noted Rick Arthur, GE’s Senior Director of Computational Methods Research. “The capability, expertise, and experience we can access through these peer-reviewed grant programs and technical forums deliver insight, impact, and inspiration to push the state of the art of engineering.”

In the current project, the team used GE’s GPU-based GENESIS code to simulate a rotor/stator combination of the high-pressure turbine that sits directly downstream of the engine’s combustor. These components experience extreme heat from the fuel burned in the combustor, and the resulting heat surges create large temperature differences among the turbine’s components. Cool fluid is injected to reduce these temperature variations before they cause cracking.

“Summit allows us to perform LES with extraordinarily fast turnaround, which in turn enables us to solve the underlying equations of motion with far fewer approximations than we’ve used before. This particular problem was solved with hardly any approximations at all,” Osusky said. “We are getting closer and closer to reality with this computation.”

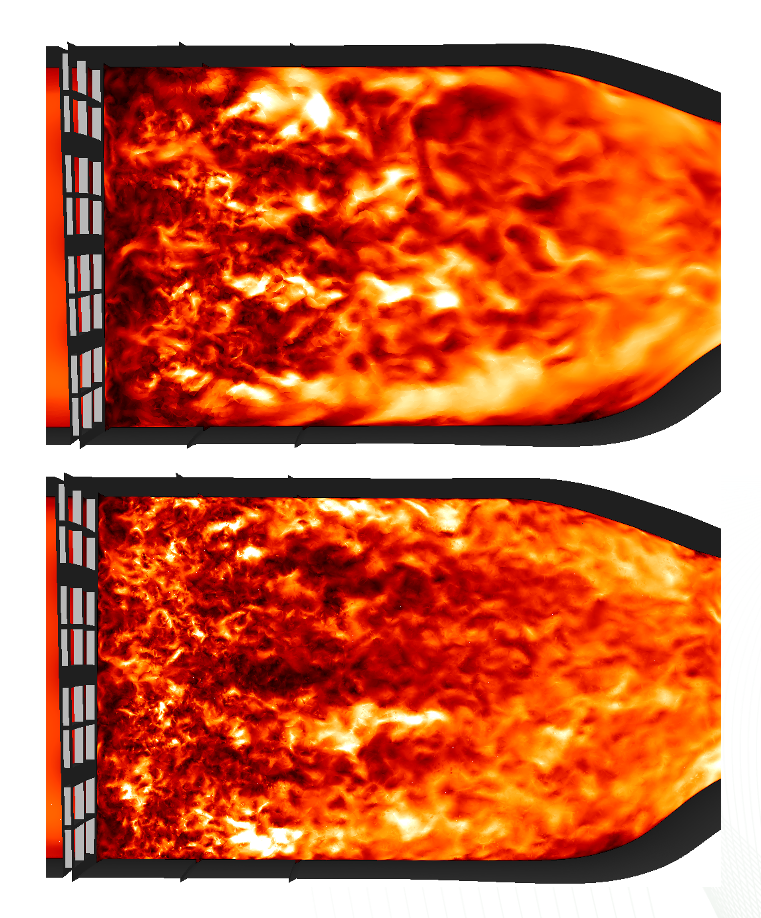

Osusky, who performed the simulations on Summit, further explained that “this is the first time we were able to look at larger 3D flow interactions with this level of detail.”

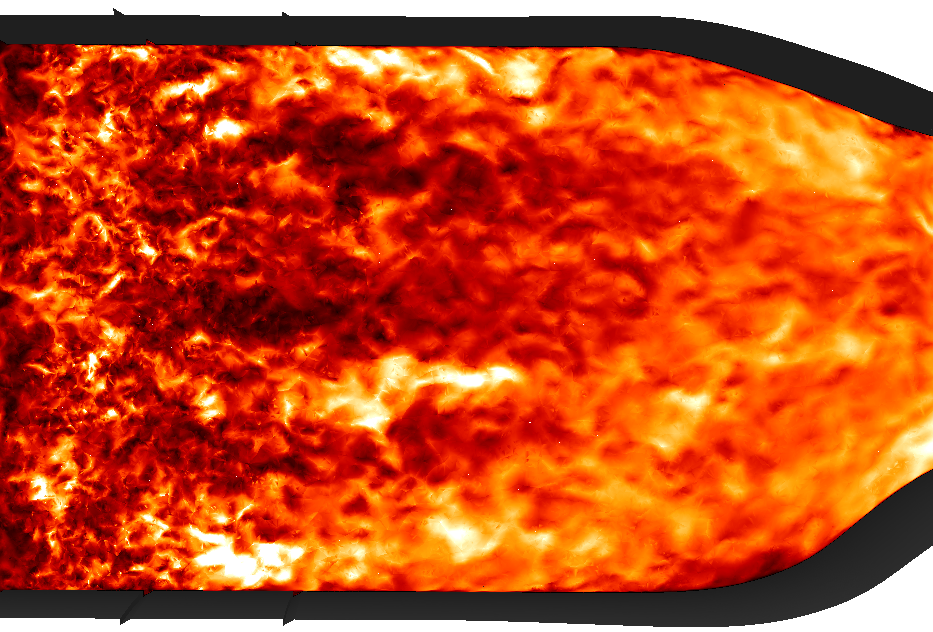

GE visualizations of a lower-resolution model of turbulent flow (top) compared with a higher-resolution model of turbulent flow (bottom) only made possible using the Summit supercomputer. Image Credit: GE Research

He added that the simulations matched the results of actual engine tests more closely than earlier models. “The improved match to physical tests is a huge step forward in our predictive capability,” Osusky said.

The GE Research team performed these large eddy simulations of turbine components so quickly that the technique has the potential to influence the design process instead of being used solely in a research environment.

“Calculations that previously took weeks or months were completed in design-cycle time on Summit. This opens opportunities for LES to become a design tool for GE’s engineering work,” Shankaran said.

GE, customers reap benefits

Both GE and its customers are realizing cost savings from these computational advances.

For example, predicting the temperature distribution over the blades in a turbine engine is critical to improving fuel efficiency, because burning fuel at the highest temperatures the components can safely withstand leads to less wasted fuel. These predictions can also lead to better engine designs with greater durability, reducing unplanned downtime.

“Even small improvements in fuel efficiency or durability, translated across a fleet of tens of thousands of airplanes carrying the same engine and multiplied over the 30-year life of an engine, can produce enormous cost savings for our customers. The numbers start becoming staggering,” Shankaran said.

These new predictive capabilities will also help engineers target the physical tests GE needs to conduct more efficiently, saving GE time and money.

The simulations are also providing GE researchers with a rich dataset that machine learning algorithms can use to train lower-fidelity models to match higher-fidelity results more closely. The same simulation techniques can be applied to other models as well, generating even more training data for larger machine learning studies.

The work also gives GE Research guidance in the direction and configuration of new hardware it hopes to install.

“Access to the Summit platform and the deep expertise at OLCF has been crucial in developing GE’s computing strategy,” said Dave Kepczynski, Chief Information Officer at GE Research. “As we move forward, projects like this inform decisions for future investments in hardware and software architectures to maintain a competitive edge in the global marketplace.”

UT-Battelle LLC manages Oak Ridge National Laboratory for DOE’s Office of Science, the single largest supporter of basic research in the physical sciences in the United States. DOE’s Office of Science is working to address some of the most pressing challenges of our time. For more information, visit https://energy.gov/science.