In pursuit of numerical predictions for exotic particles, researchers are simulating atom-building quark and gluon particles over 70 times faster on Summit, the world’s most powerful scientific supercomputer, than on its predecessor Titan at the US Department of Energy’s (DOE’s) Oak Ridge National Laboratory (ORNL). The interactions of quarks and gluons are computed using lattice quantum chromodynamics (QCD)—a computer-friendly version of the mathematical framework that describes these strong-force interactions.

With new algorithms and optimizations for GPU-based systems like Summit, computational physicists Balint Joo of DOE’s Jefferson Lab and Kate Clark of GPU developer NVIDIA are combining two open-source QCD codes, Chroma and the QUDA library for GPUs, on Summit. Located at the Oak Ridge Leadership Computing Facility (OLCF), Summit is a 200-petaflop, IBM AC922 system that launched in June as the top-ranking system on the Top500 list.

QCD calculations can help reveal elusive, short-lived particles that are difficult to capture in experiment. Advances in QCD applications for this new generation of supercomputing will benefit the team, led by physicist Robert Edwards of Jefferson Lab, in its quest to discover the properties of exotic particles.

“We get predictions from QCD,” Joo said. “Where there are theoretical unknowns, computational calculations can give us energy states and particle decays to look for in experiments.”

Edwards and Joo work closely with a particle accelerator experiment at Jefferson Lab called GlueX that is bridging theoretical predictions from QCD and experimental evidence.

“GlueX is a flagship experiment of the recently completed $338 million upgrade of the CEBAF Accelerator of Jefferson Lab. The experiment in the new Hall D of the lab is using the electron beam to create an intense polarized photon beam to produce particles, including possibly exotic mesons,” Edwards said. “Our QCD computations are informing and guiding these experimental searches.”

Full speed ahead

The team received early access to Summit to test the performance of their code on the system’s architecture. Summit has roughly one-fourth as many nodes as the 27-petaflop Titan supercomputer. However, Summit’s nodes, each comprising two IBM Power9 CPUs and six NVIDIA Tesla V100 GPUs, are exceptionally fast and memory-dense, offering 42 teraflops of performance and 512 gigabytes of memory per node.

Through a combination of hardware advancements and software optimizations, the team increased throughput on Summit ninefold compared with their previous Titan simulations while shrinking the footprint of their original problem to run on one-eighth as many GPUs, for a total performance speedup of about 72 times.
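As a rough check on that figure, and assuming the quoted total is simply the product of the two factors named above (a reading the numbers support, though the article does not spell it out):

```latex
\text{total speedup} \approx
\underbrace{9}_{\text{throughput gain}} \times
\underbrace{8}_{\text{reduction in GPUs used}} = 72
```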

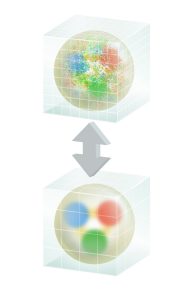

A conceptual illustration of the multigrid method for lattice QCD shows both fine and coarse grids. The high-frequency energy modes of a proton appear as fuzz on a fine grid (top). The multigrid process projects smoother, longer-wavelength modes that can be captured with a coarser grid, which requires less work to solve (bottom). The multigrid process cycles between the grids to optimally solve the problem. Image credit: Joanna Griffin, Jefferson Lab

In lattice QCD simulations, space-time is represented by a lattice, and scientists generate snapshots of the strong-force field on the links of this lattice, known as gauge configurations. This initial step is called gauge generation. Then, in a step known as the quark propagator calculation, researchers introduce a charge into the gauge field and solve a large system of equations that represents how a quark would move through space and time. In a final analysis step, these quark propagators are combined into initial and final particle states, from which energy spectra can be computed and related to experiment.
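The "large system of equations" in the propagator step can be pictured with a deliberately tiny stand-in. The sketch below is not the Chroma or QUDA code: it assumes a one-dimensional periodic lattice and a simple operator (a mass term minus a discrete Laplacian) in place of the lattice Dirac operator, and solves for the response to a point source with a plain conjugate gradient loop. The names `apply_operator`, `conjugate_gradient`, and `mass_sq` are hypothetical and chosen only for illustration.

```python
# Toy stand-in for a quark propagator solve: A x = b on a periodic 1D lattice.
# ASSUMPTION: A = mass_sq - Laplacian is a placeholder, not the lattice Dirac operator.

def apply_operator(x, mass_sq):
    """Apply (mass_sq - discrete Laplacian) with periodic boundaries."""
    n = len(x)
    return [(2.0 + mass_sq) * x[i] - x[(i - 1) % n] - x[(i + 1) % n]
            for i in range(n)]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def conjugate_gradient(b, mass_sq, tol=1e-10, max_iter=1000):
    """Solve A x = b for the symmetric positive-definite toy operator."""
    x = [0.0] * len(b)
    r = list(b)          # residual b - A x, with x = 0 initially
    p = list(r)          # search direction
    rr = dot(r, r)
    for iteration in range(max_iter):
        if rr ** 0.5 < tol:
            return x, iteration
        ap = apply_operator(p, mass_sq)
        alpha = rr / dot(p, ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, ap)]
        rr_new = dot(r, r)
        p = [ri + (rr_new / rr) * pi for ri, pi in zip(r, p)]
        rr = rr_new
    return x, max_iter

# A point source at site 0 plays the role of the introduced charge.
source = [0.0] * 32
source[0] = 1.0
propagator, iterations = conjugate_gradient(source, mass_sq=0.1)
```

In this toy, the solution falls off with distance from the source site. Production solves involve vastly larger, complex-valued systems, which is why solver efficiency matters so much in this step.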

To prepare their code for Summit, the team made algorithmic improvements to increase efficiency. First, they advanced an adaptive multigrid solver in the QUDA library that generates coarse and fine grids based on low- and high-energy states, respectively. The multigrid process involves a setup phase, the results of which are then used in the solution steps.
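The fine-grid and coarse-grid interplay can be sketched on a much simpler problem. The example below is a textbook geometric two-grid cycle for a one-dimensional Poisson equation, not QUDA's adaptive solver: it assumes a fixed factor-of-two coarsening, so the adaptive setup phase described above is not reproduced. All function names are hypothetical.

```python
# Two-grid sketch for -u'' = f on (0, 1) with u(0) = u(1) = 0.
# ASSUMPTION: geometric coarsening stands in for QUDA's adaptive setup phase.

def residual(u, f, h):
    """r = f - A u, where A is the standard 3-point Laplacian stencil."""
    n = len(u)
    r = [0.0] * n
    for i in range(n):
        left = u[i - 1] if i > 0 else 0.0
        right = u[i + 1] if i < n - 1 else 0.0
        r[i] = f[i] - (2.0 * u[i] - left - right) / (h * h)
    return r

def smooth(u, f, h, sweeps, omega=2.0 / 3.0):
    """Weighted Jacobi sweeps: cheap, and effective on high-frequency error."""
    for _ in range(sweeps):
        r = residual(u, f, h)
        u = [ui + omega * (h * h / 2.0) * ri for ui, ri in zip(u, r)]
    return u

def restrict(r):
    """Full weighting: fine grid (2m + 1 points) to coarse grid (m points)."""
    m = (len(r) - 1) // 2
    return [0.25 * r[2 * j] + 0.5 * r[2 * j + 1] + 0.25 * r[2 * j + 2]
            for j in range(m)]

def prolong(e, n_fine):
    """Linear interpolation from the coarse grid back to the fine grid."""
    m = len(e)
    out = [0.0] * n_fine
    for j in range(m):
        out[2 * j + 1] = e[j]
    for j in range(m + 1):
        left = e[j - 1] if j > 0 else 0.0
        right = e[j] if j < m else 0.0
        out[2 * j] = 0.5 * (left + right)
    return out

def solve_coarse(f, h):
    """Direct tridiagonal (Thomas) solve on the small coarse grid."""
    n = len(f)
    diag, off = 2.0 / (h * h), -1.0 / (h * h)
    c, d = [0.0] * n, [0.0] * n
    c[0], d[0] = off / diag, f[0] / diag
    for i in range(1, n):
        denom = diag - off * c[i - 1]
        c[i] = off / denom
        d[i] = (f[i] - off * d[i - 1]) / denom
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):
        x[i] = d[i] - c[i] * x[i + 1]
    return x

def two_grid_cycle(u, f, h):
    u = smooth(u, f, h, sweeps=2)                    # damp rough error on the fine grid
    coarse_rhs = restrict(residual(u, f, h))         # move the smooth remainder down
    correction = solve_coarse(coarse_rhs, 2.0 * h)   # solve it cheaply on the coarse grid
    u = [ui + ei for ui, ei in zip(u, prolong(correction, len(u)))]
    return smooth(u, f, h, sweeps=2)

n, h = 63, 1.0 / 64.0
f = [1.0] * n
u = [0.0] * n
for _ in range(10):
    u = two_grid_cycle(u, f, h)
final_residual = max(abs(r) for r in residual(u, f, h))
```

The smoother handles the rough, high-frequency part of the error, while the coarse grid handles the smooth part at lower cost. A full multigrid solver repeats this idea across several grid levels.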

“Summit GPUs are very well tailored for this multigrid algorithm, and we saw speedup potential there,” Clark said.

Previously, the solution steps were optimized for Titan’s GPUs, and the multigrid solver was used for the quark propagation phase of calculations carried out for each gauge configuration. For Summit, the team integrated the multigrid solver into the initial gauge generation phase.

“In the gauge generation phase, gauge configurations change rapidly and require the setup process to be repeated frequently,” Joo said. “Therefore, a crucial optimization step was moving this setup phase entirely onto the GPUs.”

The team saw another opportunity to speed up gauge configuration generation by incorporating other algorithmic and software improvements alongside the multigrid solver.

First, to reduce the amount of work required to change from one gauge configuration to the next, the team implemented a force-gradient integrator that utilizes a molecular dynamics method previously adapted for QCD.

“The process is mathematically similar to simulating molecules of a gas, so a molecular dynamics procedure is repurposed to generate each new gauge configuration from the previous one,” Joo said.
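A minimal sketch of such a molecular dynamics update follows, under stated assumptions. It uses a basic leapfrog integrator rather than the force-gradient integrator the team implemented, and a toy quadratic action in place of the gauge action. The names `action`, `force`, and `leapfrog` are hypothetical.

```python
import random

# ASSUMPTION: leapfrog is swapped in for the force-gradient integrator,
# and S(q) = sum(q_i^2) / 2 is a placeholder for the real gauge action.

def action(q):
    return 0.5 * sum(x * x for x in q)

def force(q):
    """Force = -dS/dq for the toy action."""
    return [-x for x in q]

def hamiltonian(q, p):
    """Total 'energy': action plus kinetic term of the fictitious momenta."""
    return action(q) + 0.5 * sum(x * x for x in p)

def leapfrog(q, p, step, n_steps):
    """Evolve (q, p) along a trajectory: half kick, drifts and kicks, half kick."""
    q, p = list(q), list(p)
    p = [pi + 0.5 * step * fi for pi, fi in zip(p, force(q))]
    for i in range(n_steps):
        q = [qi + step * pi for qi, pi in zip(q, p)]
        scale = step if i < n_steps - 1 else 0.5 * step
        p = [pi + scale * fi for pi, fi in zip(p, force(q))]
    return q, p

rng = random.Random(0)
q0 = [rng.gauss(0.0, 1.0) for _ in range(8)]   # stand-in for the current configuration
p0 = [rng.gauss(0.0, 1.0) for _ in range(8)]   # freshly drawn fictitious momenta
q1, p1 = leapfrog(q0, p0, step=0.1, n_steps=10)
energy_error = hamiltonian(q1, p1) - hamiltonian(q0, p0)
```

Here `q1` plays the role of the proposed new configuration, and `energy_error` measures how well the integrator conserved energy along the trajectory. A more accurate integrator can take larger steps for the same error, which is one way the work per new configuration can be reduced.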

Second, whereas the QUDA library automatically runs calculations needed for gauge configuration generation on GPUs, the full algorithm has many other pieces of code that can cause a performance bottleneck if not also GPU-accelerated. To avoid this bottleneck and improve performance, the team used the QDP-Just-in-Time (JIT) version of the QDP++ software layer underlying Chroma to target all mathematical expressions to run fully on GPUs.

“The improvements in speedup from these optimizations allowed us to start a run of simulations we simply could not contemplate performing before,” Joo said. “On Titan, we have already begun a new run through the ASCR Leadership Computing Challenge program with quarks that have masses more closely resembling those in nature, which is aimed directly at our spectroscopy program at Jefferson Lab.”

Team members include Boram Yoon of Los Alamos National Laboratory; Frank Winter, the author and developer of QDP-JIT, of Jefferson Lab; and Mathias Wagner and Evan Weinberg of NVIDIA. Arjun Gambhir, former William & Mary graduate student researcher at Jefferson Lab, now at Lawrence Livermore National Laboratory, assisted in the integration of the QUDA multigrid solver. This research was supported with funding through DOE’s Scientific Discovery through Advanced Computing program.

ORNL is managed by UT-Battelle for DOE’s Office of Science. The Office of Science is the single largest supporter of basic research in the physical sciences in the United States and is working to address some of the most pressing challenges of our time. For more information, please visit https://science.energy.gov/.