When astronomers peer into the universe, what they see often exceeds the limits of human understanding. Such is the case with low-mass galaxies—galaxies a fraction of the size of our own Milky Way.

These small, faint systems made up of millions or billions of stars, dust, and gas constitute the most common type of galaxy observed in the universe. But according to astrophysicists’ most advanced models, low-mass galaxies should contain many more stars than they appear to contain.

A leading theory for this discrepancy hinges on the fountain-like outflows of gas observed exiting some galaxies. These outflows are driven by the life and death of stars, specifically stellar winds and supernova explosions, which collectively give rise to a phenomenon known as galactic wind. As star activity expels gas into intergalactic space, galaxies lose precious raw material to make new stars. The physics and forces at play during this process, however, remain something of a mystery.

To better understand how galactic wind affects star formation in galaxies, a two-person team led by the University of California, Santa Cruz, turned to high-performance computing at the Oak Ridge Leadership Computing Facility (OLCF), a US Department of Energy (DOE) Office of Science User Facility located at DOE’s Oak Ridge National Laboratory (ORNL). Specifically, UC Santa Cruz astrophysicist Brant Robertson and University of Arizona graduate student Evan Schneider (now a Hubble Fellow at Princeton University) scaled up their Cholla hydrodynamics code on the OLCF’s Cray XK7 Titan supercomputer to create highly detailed simulations of galactic wind.

“The process of generating galactic winds is something that requires exquisite resolution over a large volume to understand—much better resolution than other cosmological simulations that model populations of galaxies,” Robertson said. “This is something you really need a machine like Titan to do.”

After earning an allocation on Titan through DOE’s INCITE program, Robertson and Schneider started small, simulating a hot, supernova-driven wind colliding with a cool cloud of gas across 300 light years of space. (A light year equals the distance light travels in 1 year.) The results allowed the team to rule out a potential mechanism for galactic wind.

Now the team is setting its sights higher, aiming to generate a simulation of an entire galaxy with nearly a trillion cells, which would be the largest simulation of a galaxy ever. Beyond breaking records, Robertson and Schneider are striving to uncover new details about galactic wind and the forces that regulate galaxies, insights that could improve our understanding of low-mass galaxies, dark matter, and the evolution of the universe.

Simulating cold clouds

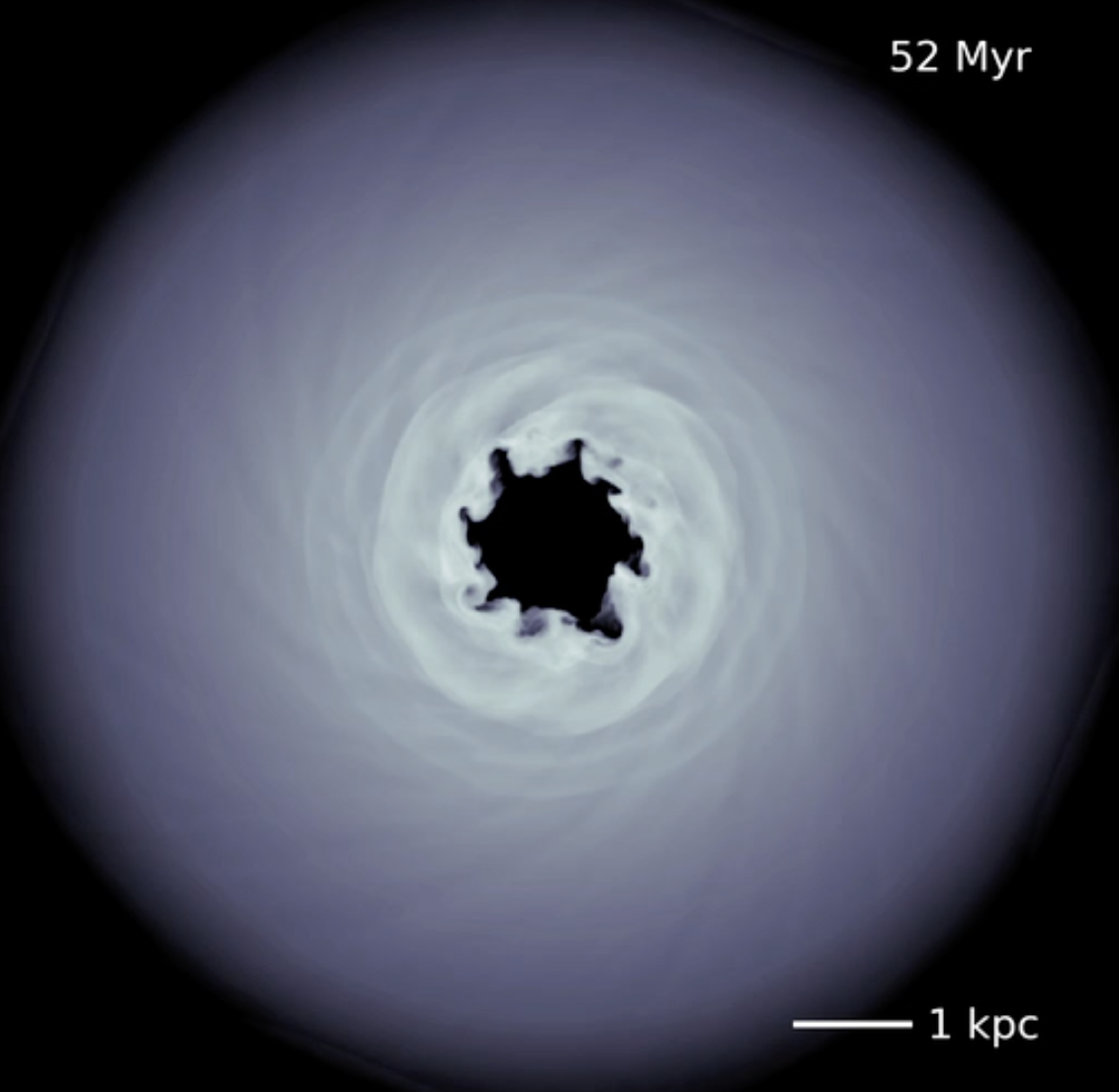

A density projection derived from a preliminary full-galaxy simulation of galactic winds being driven by a hot outflow in a starburst galaxy. The top panel looks “down-the-barrel” of the outflow, while the bottom panel shows the disk galaxy edge-on.

About 12 million light years from Earth resides one of the Milky Way’s closest neighbors, a disk galaxy called Messier 82 (M82). M82 is smaller than the Milky Way, and its cigar shape underscores a volatile personality. The galaxy produces new stars about five times faster than our own galaxy does. This star-making frenzy gives rise to galactic wind that pushes out more gas than the system keeps in, leading astronomers to estimate that M82 will run out of fuel in just 8 million years.

Analyzing images from NASA’s Hubble Space Telescope, scientists can observe this slow-developing exodus of gas and dust. Data gathered from such observations can help Robertson and Schneider gauge if they are on the right track when simulating galactic wind.

“With galaxies like M82, you see a lot of cold material at large radius that’s flowing out very fast. We wanted to see, if you took a realistic cloud of cold gas and hit it with a hot, fast-flowing, supernova-driven outflow, if you could accelerate that cold material to velocities like what are observed,” Robertson said.

Answering this question in high resolution required an efficient code that could solve the problem based on well-known physics, such as the motion of fluids. Robertson and Schneider developed Cholla to carry out hydrodynamics calculations entirely on GPUs, highly parallelized accelerators that excel at simple number crunching, thus achieving high-resolution results.

In Titan, a 27-petaflop system containing more than 18,000 GPUs, Cholla found its match. After testing the code on a GPU cluster at the University of Arizona, Robertson and Schneider benchmarked Cholla under two small OLCF Director’s Discretionary awards before letting the code loose under INCITE. In test runs, the code has maintained scaling across more than 16,000 GPUs.

“We can use all of Titan,” Robertson said, “which is kind of amazing because the vast majority of the power of that system is in GPUs.”

The pairing of code and computer gave Robertson and Schneider the tools needed to produce high-fidelity simulations of gas clouds measuring more than 15 light years in diameter. Furthermore, the team can zoom in on parts of the simulation to study phases and properties of galactic wind in isolation. This capability helped the team to rule out a theory that posited cold clouds close to the galaxy’s center could be pushed out by fast-moving, hot wind from supernovas.

“The answer is it isn’t possible,” Robertson said. “The hot wind actually shreds the clouds and the clouds become sheared and very narrow. They’re like little ribbons that are very difficult to push on.”

Galactic goals

Having proven Cholla’s computing chops, Robertson and Schneider are now planning a full-galaxy simulation about 10 to 20 times larger than their previous effort. Expanding the size of the simulation will allow the team to test an alternate theory for the emergence of galactic wind in disk galaxies like M82. The theory suggests that clouds of cold gas condense out of the hot outflow as it expands and cools.

“That’s something that’s been posited in analytical models but not tested in simulation,” Robertson said. “You have to model the whole galaxy to capture this process because the dynamics of the outflows are such that you need a global simulation of the disk.”

The full-galaxy simulation will likely be composed of hundreds of billions of cells representing more than 30,000 light years of space. To cover this expanse, the team must sacrifice resolution. It can rely on its detailed gas cloud simulations, however, to bridge scales and inform unresolved physics within the larger simulation.
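The trade-off between volume and resolution can be illustrated with a rough back-of-the-envelope sketch. It assumes a cubic box split into equal cubic cells, which is a simplification and not a description of the team's actual grid. The function name `cell_width_ly` and the cell budget of one trillion are hypothetical choices made for illustration only; the box sizes of 300 and 30,000 light years come from the figures quoted in this article.

```python
def cell_width_ly(box_side_ly: float, total_cells: float) -> float:
    """Width of one cell, in light years, for a cubic box of uniform cubic cells.

    Assumption: the volume is a cube divided evenly along each axis.
    Real simulation grids need not be cubic or uniform.
    """
    cells_per_side = total_cells ** (1.0 / 3.0)
    return box_side_ly / cells_per_side


# Hypothetical fixed budget of cells (illustrative, not the team's number).
CELL_BUDGET = 1e12

# Same budget spread over a cloud-scale box and a galaxy-scale box.
cloud_scale = cell_width_ly(300, CELL_BUDGET)
galaxy_scale = cell_width_ly(30_000, CELL_BUDGET)

print(f"300 ly box:    {cloud_scale:.3f} ly per cell")
print(f"30,000 ly box: {galaxy_scale:.3f} ly per cell")
print(f"Resolution is coarser by a factor of about {galaxy_scale / cloud_scale:.0f}")
```

Under these assumptions, stretching the same number of cells over a box 100 times wider makes each cell 100 times wider as well, which is why a galaxy-scale run cannot resolve the fine structure captured in a cloud-scale run.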

“That’s what’s interesting about doing these simulations at widely different scales,” Robertson said. “We can calibrate after the fact to inform ourselves in how we might be getting the story wrong with the coarser, larger simulation.”

Related Publication: Evan E. Schneider and Brant E. Robertson, “Hydrodynamical Coupling of Mass and Momentum in Multiphase Galactic Winds.” The Astrophysical Journal 834, no. 2 (2017): 144, https://iopscience.iop.org/article/10.3847/1538-4357/834/2/144/meta.

The research was supported in part by the National Science Foundation. Computer time on Titan was provided under the Innovative and Novel Computational Impact on Theory and Experiment (INCITE) program supported by DOE’s Office of Science. UT-Battelle manages ORNL for the DOE Office of Science. The Office of Science is the single largest supporter of basic research in the physical sciences in the United States and is working to address some of the most pressing challenges of our time. For more information, please visit https://science.energy.gov.