The Deep Learning Users Group, organized by the OLCF’s Advanced Data and Workflow Group, gathered this summer to discuss topics related to deep learning, a fast-growing offshoot of machine learning with potential for automating knowledge discovery.

Though they sprout from the same family tree, scientific computing and artificial intelligence (AI) occupy distinct branches of computing. Recent advances in deep learning, an offshoot of AI, are attracting the attention of scientists who see the machine-learning technique's potential for discovering new knowledge in their respective domains.

To galvanize interest and share expertise in this emerging field, the Oak Ridge Leadership Computing Facility's (OLCF's) Advanced Data and Workflow Group has created a forum for staff at Oak Ridge National Laboratory (ORNL) to discuss, learn, and collaborate on deep learning topics. More than 40 people attended the inaugural Deep Learning Users Group meeting in July and a follow-up tutorial session in August.

“The buzzword is out there,” said OLCF data management scientist John Harney. “Scientists are figuring out what deep learning is, why it’s effective, and how it helps explain the science behind observations. Our goal is to promote awareness and increase the adoption of deep learning among scientists, particularly on problems that require scale and OLCF compute resources.”

Unlike conventional machine learning algorithms, which typically rely on experts to hand-select the features a model uses for a predefined purpose, deep learning algorithms, loosely inspired by the human brain, learn those features on their own by automatically adjusting model parameters to accomplish a specific goal.

"Practitioners use it like a black box," said OLCF group leader Sreenivas Sukumar, meaning the mathematical details between providing an input and receiving an output remain hidden. With a few commands, a researcher can train a deep learning model, using selected examples to guide the model's predictions about new data. The process is akin to a child learning to walk through trial and error. The difference, however, is that well-trained deep learning software can perform certain tasks far faster than any human.

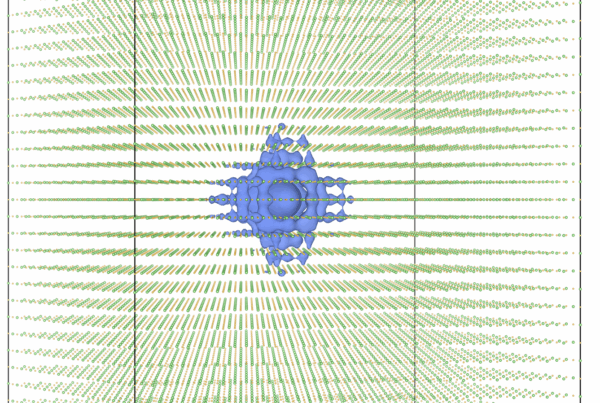

Recent successes in the field, such as Google DeepMind's AlphaGo, have brought increased attention to deep learning. The approach, pioneered in the 1960s, has also shown promise in a wide range of areas, including speech and image recognition and natural language processing. GPUs, processors designed to execute many calculations simultaneously, have played a prominent role in these gains by accelerating the training of neural networks, the layered mathematical models that drive a deep learning algorithm's decisions.

As host to multiple GPU-accelerated systems, including the Cray XK7 Titan, the OLCF is well positioned to assist users who want to explore deep learning techniques to automate and speed up scientific discovery. “With all the GPUs at our disposal—there are more than 18,000 in Titan alone—we have the opportunity to take on big problems,” Harney said.

One current OLCF project using deep learning techniques is an effort by ORNL's Health Data Sciences Institute to extract cancer insights from text-based medical reports using natural language processing. Another, the MINERvA neutrino experiment based at Fermi National Accelerator Laboratory, aims to use deep learning algorithms to identify and categorize events in its experimental data.

“The MINERvA team needs an algorithm that categorizes these events fast, and they don’t want to have to spend hours training the algorithm every time a new batch of data is ready for analysis,” Sukumar said.

The OLCF's recent purchase of an NVIDIA DGX-1, which NVIDIA bills as the first supercomputer engineered specifically for deep learning, should help lab staff and users take scientific deep learning applications to the next level. Built around multiple GPUs, the DGX-1 pairs optimized hardware and software that make it easier for researchers to build and refine their models. According to NVIDIA, the machine, set to arrive in the fall, can train models up to 75 times faster than a conventional computer.

“This system not only simplifies the development of deep learning algorithms but also cuts down on the time needed to optimize and port code, freeing up scientists to focus more on knowledge discovery,” said OLCF data and visualization expert Jamison Daniel.

Oak Ridge National Laboratory is supported by the US Department of Energy’s Office of Science. The single largest supporter of basic research in the physical sciences in the United States, the Office of Science is working to address some of the most pressing challenges of our time. For more information, please visit science.energy.gov.