When Mike Warren was a graduate student 20 years ago, computational astrophysicists were conducting simulations of the universe’s structure with a million particles representing large masses of matter like galaxy clusters. Simulations of galaxy formation were beginning to supplement the maps and catalogs researchers were creating with telescope and detector data.

Today, computer simulations are a pillar of cosmology research, bridging theory and observation at unprecedented levels of accuracy. In fact, researchers estimate that our model of the large-scale structure of the universe is accurate to about 1 percent, yet significant and fundamental questions remain about what we think forms the bulk of our universe. The properties of dark matter and dark energy still elude scientists, who must gather information from the single vantage point of Earth.

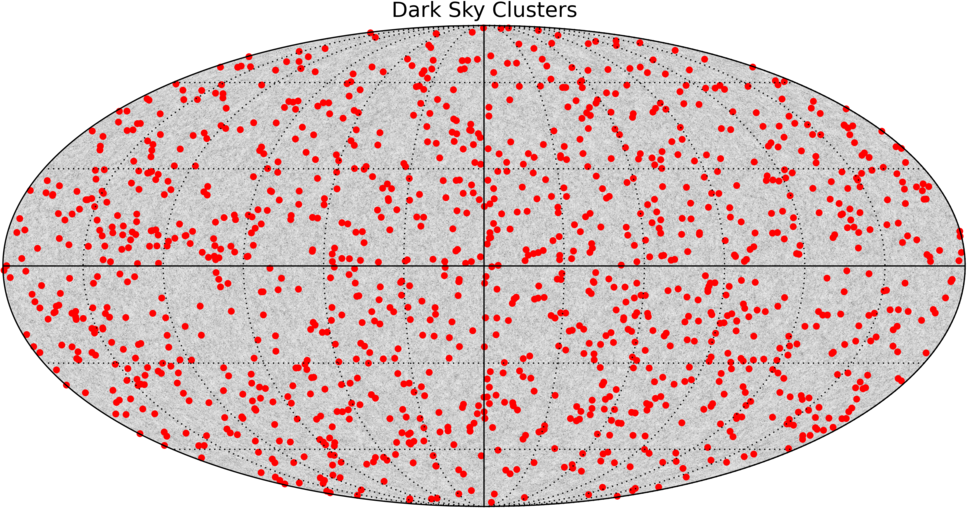

Working with team members from Los Alamos National Laboratory, the University of Chicago, the Paris Institute of Astrophysics, Stanford University, the National Center for Supercomputing Applications, and Kavli Institute for Particle Astrophysics and Cosmology, Warren is principal investigator of the 2014 Innovative and Novel Computational Impact on Theory and Experiment project Probing Dark Matter at Extreme Scales on the Titan supercomputer at the Department of Energy’s (DOE’s) Oak Ridge National Laboratory (ORNL). The team, however, refers to the Titan simulations as the Dark Sky Simulations because the large-scale models of the universe that these simulations generate are important tools for a range of dark matter and dark energy studies.

In April 2014, the team simulated galaxy formation over billions of years using one trillion particles in a simulation called ds14_a (ds stands for Dark Sky). Managed by the Oak Ridge Leadership Computing Facility (OLCF), a DOE Office of Science User Facility, Titan is a 27-petaflop, hybrid CPU–GPU Cray XK7 computer that relies on extreme parallelism to push the boundaries of large-scale scientific applications. To that end, the Dark Sky Simulations are the first trillion-particle cosmological structure simulations that produce data at resolutions useful to a variety of observational surveys of galaxy distribution.

Following the April ds14_a simulation, the team ran a second trillion-particle simulation, known as ds14_b, at a finer resolution on Titan in January 2015.

“The size of these simulations has gone up a factor of a million over the last 20 years,” Warren said. “Moore’s Law is driving the amount of data being collected by telescopes at the same time that supercomputers are becoming faster, so it has been a race over the last two decades as observations and simulations are trying to keep pace with one another.”

While humans have been observing space for millennia, advances in telescopes and in imaging and detector technologies are allowing scientists to catalogue galaxies and their properties (such as speed, distance, and mass) at a rapid pace. In the last couple of decades, the amount of observational data has expanded to the point that researchers can now test cosmological theories (such as the Standard Model) through numerical prediction models. These models require a great deal of accurate data, both to verify the computational results and to set the initial conditions, or starting point, of the simulations.

“What is revolutionizing cosmology is the amount of data we’re gathering about the universe,” Warren said. “Our discoveries through observation and simulation may look incremental up until the point where something suddenly does not agree with the physics we thought was true. There’s no way to predict when that point will be.”

The team hopes to use Dark Sky Simulation data to test observational measurements such as cluster abundance and void statistics, which describe where galaxies are in large numbers and where they are significantly absent, respectively; baryon acoustic oscillations, which help researchers estimate the acceleration rate of the universe; redshift-space distortions, with which researchers can refine how they measure redshift to determine astronomical distances and velocities; and gravitational lensing, which describes how matter is distributed in a galaxy and is used to identify regions of dark matter; among others. The simulations will also provide mock halo and galaxy catalogs that can be used to help guide the planning and data analysis of observational survey projects searching for distant galaxies and halos of dark matter that encapsulate galaxies.

The code the team used on Titan is a second version of the Hashed Oct-Tree (HOT) N-body code, a cosmology code Warren and colleagues developed in the 1990s. An N-body code calculates the gravitational force between each pair of particles and uses Newton’s laws of motion to update their positions over time.

“The trouble with that for a trillion particles is that the number of force interactions you’d have to compute would be a trillion squared,” Warren said. (A trillion squared is about nine too many zeros for even Titan to compute at a reasonable pace.)
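To make the scaling concrete, here is a minimal Python sketch of the direct pairwise approach. It is an illustration only, not the HOT or 2HOT code: the function names, the unit system (G = 1), the softening length, and the leapfrog integrator are all assumptions chosen for brevity.

```python
import numpy as np

def direct_sum_accelerations(pos, mass, G=1.0, softening=1e-3):
    """Acceleration on every particle from every other particle (O(N^2) work).

    pos has shape (N, 3), mass has shape (N,). G and softening are
    illustrative values, not parameters taken from the article.
    """
    # diff[i, j] is the vector pointing from particle i to particle j.
    diff = pos[None, :, :] - pos[:, None, :]
    dist2 = np.sum(diff**2, axis=-1) + softening**2
    inv_d3 = dist2 ** -1.5
    np.fill_diagonal(inv_d3, 0.0)  # a particle exerts no force on itself
    weights = mass[None, :, None] * inv_d3[:, :, None]
    return G * np.sum(diff * weights, axis=1)

def leapfrog_step(pos, vel, mass, dt):
    """Advance the system by one kick-drift-kick step using Newton's laws."""
    vel_half = vel + 0.5 * dt * direct_sum_accelerations(pos, mass)
    new_pos = pos + dt * vel_half
    new_vel = vel_half + 0.5 * dt * direct_sum_accelerations(new_pos, mass)
    return new_pos, new_vel

def pair_count(n):
    """Number of distinct particle pairs among n particles."""
    return n * (n - 1) // 2

# For a trillion particles the number of distinct pairs is about 5 x 10^23,
# and the number of ordered interactions is about 10^24 ("a trillion squared").
pairs_for_a_trillion = pair_count(10**12)
```

The pairwise array `diff` holds N × N entries, which is why this approach is only practical for small N and why a trillion-particle run needs a different algorithm.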

HOT uses a treecode algorithm to group N-body calculations into local calculations. Groups of particles that are too far away from the particle being resolved are treated as one particle rather than many, and interactions with nearby particles are calculated using the N-body method. This method reduces computational demand but maintains a high degree of accuracy in the simulation.
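The idea of replacing a distant group with a single particle can be sketched in a few lines of Python. This is a hedged illustration, not 2HOT's implementation: a real treecode builds the groups recursively with an oct-tree and may keep higher-order terms, whereas this sketch handles one group, keeps only the center-of-mass term, and uses a Barnes-Hut-style opening test with a hypothetical threshold `theta`.

```python
import numpy as np

def exact_acceleration(target, pos, mass, G=1.0):
    """Sum the pull of every particle in the group on the target point."""
    diff = pos - target
    dist3 = np.sum(diff**2, axis=1) ** 1.5
    return G * np.sum(mass[:, None] * diff / dist3[:, None], axis=0)

def center_of_mass(pos, mass):
    """Mass-weighted mean position of the group."""
    return (mass[:, None] * pos).sum(axis=0) / mass.sum()

def monopole_acceleration(target, pos, mass, G=1.0):
    """Treat the whole group as one particle located at its center of mass."""
    diff = center_of_mass(pos, mass) - target
    return G * mass.sum() * diff / np.linalg.norm(diff) ** 3

def acceleration_with_opening_test(target, pos, mass, theta=0.5):
    """Use the one-particle shortcut only when the group looks small from the target.

    theta and the size estimate below are illustrative choices (assumptions).
    """
    com = center_of_mass(pos, mass)
    size = 2.0 * np.max(np.linalg.norm(pos - com, axis=1))
    distance = np.linalg.norm(com - target)
    if size / distance < theta:
        return monopole_acceleration(target, pos, mass)
    return exact_acceleration(target, pos, mass)

# Example: a clump of 100 particles about one unit across, seen from 100 units away.
rng = np.random.default_rng(0)
clump_pos = rng.uniform(-0.5, 0.5, size=(100, 3))
clump_mass = np.ones(100)
far_target = np.array([100.0, 0.0, 0.0])
exact = exact_acceleration(far_target, clump_pos, clump_mass)
approx = monopole_acceleration(far_target, clump_pos, clump_mass)
relative_error = np.linalg.norm(approx - exact) / np.linalg.norm(exact)
```

For a clump this far away, one force evaluation stands in for 100 with only a small relative error, and the error shrinks as the group gets farther from the target. That trade is what lets a treecode avoid the full pairwise sum.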

“The treecode is something even Newton understood in the seventeenth century when he calculated the orbit of the moon around the Earth, using the centers of mass of the moon and Earth as two points to calculate the orbit,” Warren said.

The team developed its second version of the code, 2HOT, to run on parallel architectures, improving the efficiency of the treecode algorithm and reducing the number of floating point operations required per particle to 200,000 with a relative accuracy of 0.1 percent. Similar cosmology codes require anywhere from just over 100,000 to nearly 1 million floating point operations per particle.

But a fast code is not the only thing that makes the team’s trillion-particle simulation significant. The team sought feedback from astronomers and physicists working on observational projects, such as the Planck space observatory and Sloan Digital Sky Survey, to refine the cosmological parameters in the model so the initial conditions of their simulation will spawn a virtual universe structured as close as possible to our own.

This was a particularly important step in moving from ds14_a to ds14_b. The team’s April 2014 simulation on Titan covered a large cubic volume 8 gigaparsecs (Gpc)/h on a side, where h accounts for the uncertainty in the expansion rate of the universe. A gigaparsec is roughly the scale of the distances astronomers measure to faraway galaxies, meaning the simulation represents structure formation in much of the visible universe.

“The large computational volume helps us understand large-scale structure formation over the last 10 billion years or so,” Warren said.

However, the ds14_b trillion-particle simulation the team conducted on Titan in January 2015 spanned only 1 Gpc/h, covering a smaller space but at higher resolution.
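The resolution gain follows from simple arithmetic, sketched below in Python. The sketch assumes that the 8 Gpc/h and 1 Gpc/h figures are the side lengths of cubic boxes, that both runs use exactly 10^12 particles (the article's "one trillion", rounded), and that both boxes have the same mean matter density; the function name is illustrative.

```python
def mean_particle_spacing(box_side, n_particles):
    """Average distance between neighboring particles in a cubic box."""
    return box_side / n_particles ** (1.0 / 3.0)

N_PARTICLES = 1.0e12           # assumption: exactly one trillion particles
SIDE_A_MPC_H = 8000.0          # ds14_a: 8 Gpc/h expressed in Mpc/h
SIDE_B_MPC_H = 1000.0          # ds14_b: 1 Gpc/h expressed in Mpc/h

spacing_a = mean_particle_spacing(SIDE_A_MPC_H, N_PARTICLES)  # about 0.8 Mpc/h
spacing_b = mean_particle_spacing(SIDE_B_MPC_H, N_PARTICLES)  # about 0.1 Mpc/h

# Same particle count in a box with 8x shorter sides means 8^3 = 512x less
# volume, and therefore 512x less mass, per particle at equal mean density.
volume_ratio = (SIDE_A_MPC_H / SIDE_B_MPC_H) ** 3
```

Under these assumptions, each ds14_b particle stands for 512 times less matter than a ds14_a particle, so a halo of a given mass is sampled by far more particles.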

“This simulation allowed us to not just find galaxies but measure the internal properties of galaxy halos,” Warren said.

Examples of these internal properties include the angular momentum and spin parameter—both properties that pertain to a galaxy’s rotation and contribute to its shape and the distribution of matter within. Smaller scale properties like these are of great interest to observational researchers because these are often the scales on which they’re working to collect data using instruments.
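As an illustration of how such a property might be measured from simulation output, the Python sketch below computes the total angular momentum of a set of halo particles about their center of mass. It is not the team's analysis pipeline; the function name and array layout are assumptions. A spin parameter would further normalize this quantity by the halo's mass, size, and velocity scale, and the article does not say which definition the team used.

```python
import numpy as np

def halo_angular_momentum(pos, vel, mass):
    """Total angular momentum vector of a halo about its own center of mass.

    pos and vel have shape (N, 3); mass has shape (N,).
    """
    total_mass = mass.sum()
    com = (mass[:, None] * pos).sum(axis=0) / total_mass
    com_vel = (mass[:, None] * vel).sum(axis=0) / total_mass
    # Sum of m * (r x v), with positions and velocities taken relative to the
    # center of mass so that bulk motion of the halo does not contribute.
    return np.sum(mass[:, None] * np.cross(pos - com, vel - com_vel), axis=0)

# Example: four unit-mass particles circling the z-axis counterclockwise.
ring_pos = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0],
                     [-1.0, 0.0, 0.0], [0.0, -1.0, 0.0]])
ring_vel = np.array([[0.0, 1.0, 0.0], [-1.0, 0.0, 0.0],
                     [0.0, -1.0, 0.0], [1.0, 0.0, 0.0]])
ring_mass = np.ones(4)
ring_J = halo_angular_momentum(ring_pos, ring_vel, ring_mass)
```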

To maximize the value of the Dark Sky Simulations, Warren’s team discussed the large-scale ds14_a simulation with observational teams to learn what they could best use from the higher-resolution ds14_b simulation.

“Once the initial data was released, we had discussions about the most interesting questions for each of the galactic surveys,” Warren said. “We talked to various observational teams and described the capabilities of the simulations and asked how the data from these simulations could help their research. Then we began setting up the parameters for our second simulation on Titan to better address some of these questions.”

Warren said that computation and instrumentation data need to be shared as quickly as possible to keep up the pace of scientific discovery. One of the major goals of the Dark Sky Simulations was to make some of the data publicly available so that anyone working on an astrophysics or related problem could make use of the one-of-a-kind simulations performed on Titan.

“We are producing raw data on Titan and converting it to a form that can be provided as a public catalogue of galaxies,” Warren said. “This will let scientists around the world, some of whom are collecting observational data and others who are studying cosmological theory, use this data to understand the underlying physics.”

In July 2014, the team released 55 terabytes of data to the public through their website hosted by SLAC National Accelerator Laboratory, darksky.slac.stanford.edu. To make the data easy to use and visualize, they made some datasets accessible through yt, a Python-based analysis and visualization package commonly used by the astrophysics community, so that users can explore the data visually.

Dark Sky Simulation Data Portal: https://darksky.slac.stanford.edu/

Related Publication: M. Warren. “2HOT: An Improved Parallel Hashed Oct-Tree N-Body Algorithm for Cosmological Simulation,” Scientific Programming 22, no. 2 (2014), doi: 10.3233/SPR-140385.

Note: Eric Gedenk is a contributing writer on this feature.

Oak Ridge National Laboratory is supported by the US Department of Energy’s Office of Science. The single largest supporter of basic research in the physical sciences in the United States, the Office of Science is working to address some of the most pressing challenges of our time. For more information, please visit science.energy.gov.