Ongoing upgrades to the OLCF’s data storage services are expanding users’ opportunities to extract science from large datasets.

The life of a dataset at the Oak Ridge Leadership Computing Facility (OLCF), a US Department of Energy (DOE) Office of Science User Facility, can be fast and fleeting. That’s partly by design (computational scientists often run analyses to weed out extraneous simulation data) and partly by necessity (transferring data to and retrieving it from long-term storage can be slow and cumbersome).

Ongoing upgrades to the OLCF’s data storage services, however, are expanding users’ opportunities to extract science from large datasets. In the last year, the OLCF, located at DOE’s Oak Ridge National Laboratory, has more than quadrupled the disk cache of its High-Performance Storage System (HPSS) and has increased its data intake rate by a factor of five, making it easier for users to migrate, preserve, and access data in long-term storage.

When the upgrades are completed later this year, the HPSS disk cache will total nearly 20 petabytes, and transfer rates will approach 200 gigabytes per second. The expansions benefit users both when they place data in the HPSS archive for long-term storage and when they retrieve that data for their simulation work.

“We’ve significantly reduced the time it takes to ingest a petabyte of data—almost by two-thirds,” OLCF staff member Jason Hill said. “These improvements not only help users place data in the archive, they make retrieving a dataset much faster, too. The sooner you get your data, the sooner your computational job can start.”

Data-intensive users of the OLCF’s Cray XK7 Titan supercomputer are already noticing the benefits of having an accessible place to let high-value data live for as long as they need it.

That’s very different from how long-term storage for high-performance computing systems was thought of 10 years ago, said Katrin Heitmann, an OLCF user and computational scientist at Argonne National Laboratory (ANL). “It was almost like a black hole,” she said. “You would put data on it. You would hope you could retrieve it at some point, but if you actually had to, it was almost impossible because it was so slow.”

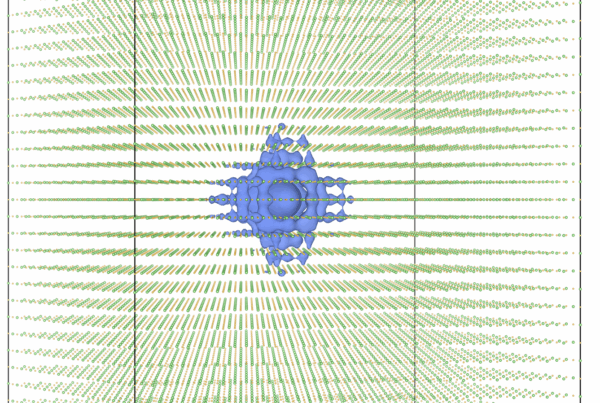

Heitmann is part of an ANL-based team producing high-resolution cosmological simulations that are helping scientists understand the large-scale distribution of structure in the universe and its evolution. The group’s work also produces a lot of data; for example, the large, high-fidelity Q Continuum Simulation of the expanding universe yields around two petabytes, much of it valuable to other research projects.

“We are not trying to simulate one experiment,” Heitmann said. “We are trying to create a simulation that can be used to produce a lot of different experiments and cosmological probes by doing different analyses on top of the raw simulation. The data we produce is very science rich.

“The upgrades at the OLCF make HPSS really useful for production, so we can actually move data in and out of storage. There are so many different things we can do with the raw data that it’s important to hold on to it for as long as possible,” Heitmann said.

In the first week of 2016, the OLCF took 860 terabytes of data into HPSS—about three times the typical amount before the upgrades. Such a large influx may have raised eyebrows in the past, but now it has become the norm, said OLCF staffer Jason Anderson.

“We’re able to ingest in excess of 100 terabytes a day with these upgrades,” Anderson said. “Titan is obviously picking up steam as users embrace GPU-accelerated computing. If the data is valuable, we need to have a solid place to keep it and allow it to be consumed by the greater science community.”

Oak Ridge National Laboratory is supported by the US Department of Energy’s Office of Science. The single largest supporter of basic research in the physical sciences in the United States, the Office of Science is working to address some of the most pressing challenges of our time. For more information, please visit science.energy.gov.