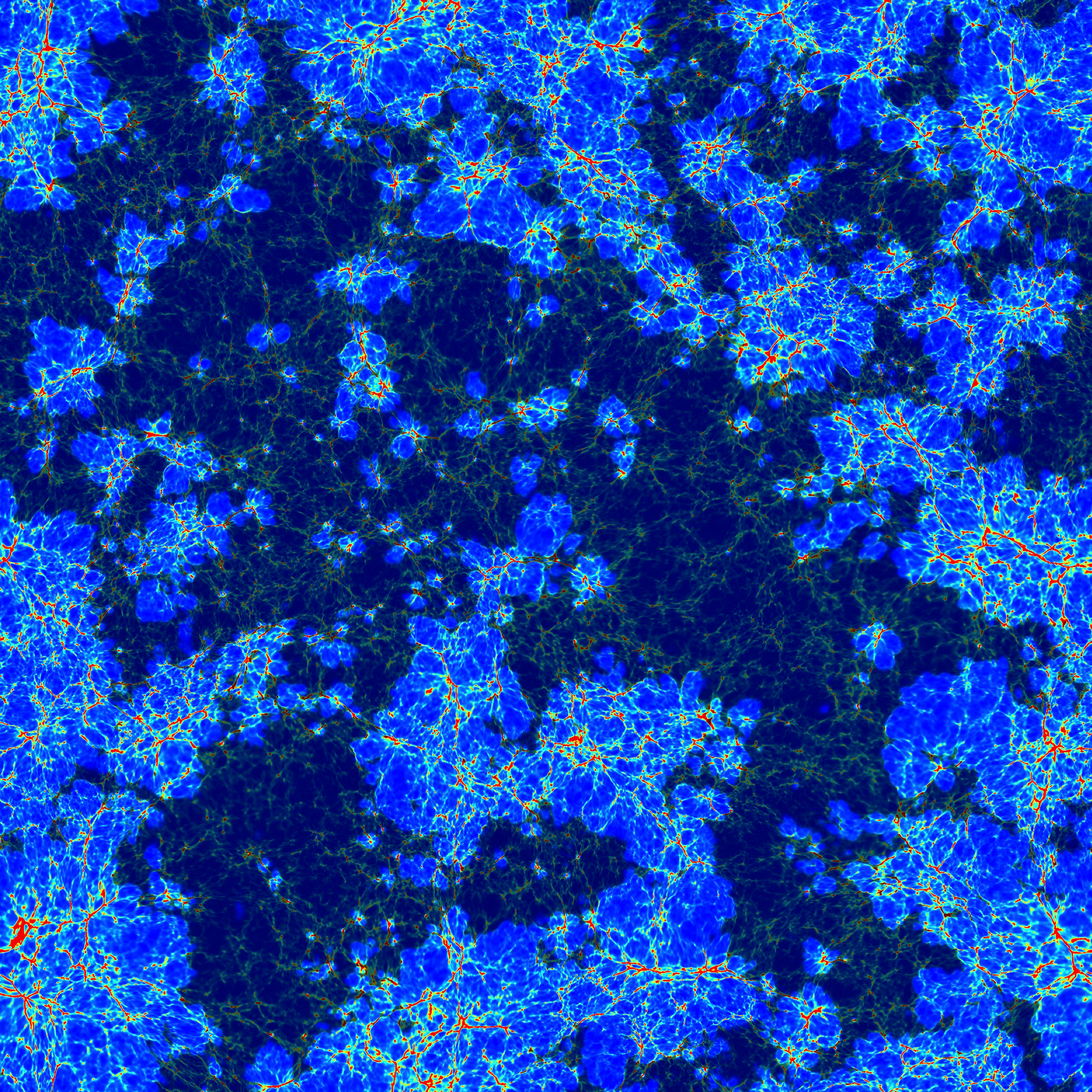

This video shows a snapshot of how hot, ionized gas (bright patches), created by millions of galaxies clustered along the threads of a cosmic web, might have been distributed within a piece of the expanding universe that is 300 million light-years across today and centered on our Milky Way galaxy, at the time when half the volume was still occupied by cold, neutral gas (dark regions).

Image credit: Hyunbae Park, Paul Shapiro, Junhwan Choi, and Anson D’Aloisio, University of Texas, Austin using data provided by Pierre Ocvirk, University of Strasbourg

Multi-institution team simulates reionization of the universe in unprecedented detail

Drive away from city lights on a clear night and you can see innumerable stars. Several hundred thousand years after the big bang, though, the universe looked very different—and much darker.

Minutes after the big bang, the universe was filled with radiation and a nearly uniform, featureless atomic gas of hydrogen and helium so hot that every atom was ionized, or stripped of its electrons, which roamed freely. By the time 400,000 years had elapsed, the expansion of the universe had cooled the gas so much that every hydrogen atom was able to capture an electron and become neutral, and the radiation had shifted to invisible wavelengths.

What came next, before gravity managed to pull any of that gas together to make stars, is called the “cosmic dark ages.” The total density of matter then, as now, was everywhere dominated by dark matter, a mysterious form of matter that is invisible to the human eye and that scientists know exists only from its gravitational pull.

This dark matter was smoothly distributed, too, but in some places there was a bit extra. That extra dark matter made gravity stronger there, causing both dark and atomic matter in those places to collapse inward to make galaxies and, inside them, stars, which lit up the universe and ended the dark ages.

“When the first galaxies and stars formed about 100 million years after the big bang, starlight leaked into the intergalactic medium, where most of the hydrogen and helium created in the big bang was still waiting, not yet formed into galaxies,” said Paul Shapiro, the Frank N. Edmonds, Jr. Regents Professor of Astronomy at the University of Texas at Austin. “As ultraviolet photons of starlight streamed across the universe, they struck these intergalactic atoms and photoionized [meaning light served as the catalyst for ionization] them, releasing fast-moving electrons that heated the gas to 10,000 kelvin.”

Shapiro noted that as this process continued and galaxies and groups of galaxies formed, zones of hot, ionized intergalactic gas grew up around them, filling the universe with a patchwork quilt of ionized and neutral zones until the ionized zones became large enough to overlap entirely. “By the end of the first billion years, the whole universe was filled with ionized gas (reionized, actually, since atoms were born in an ionized state a billion years before that but had since then recombined),” Shapiro said. “We call this transformation the ‘epoch of reionization.’ It was a kind of ‘second coming’ of ionization, a giant backlash by the first galaxies on the universe that created them.”

Shapiro can speak confidently on this topic because he and a multi-institutional research team have been using supercomputing resources at the US Department of Energy’s (DOE’s) Oak Ridge National Laboratory (ORNL) to simulate this phenomenon with unparalleled detail and accuracy.

The team, which includes collaborators from the Universities of Texas, Strasbourg (France), Zurich (Switzerland), Sussex (United Kingdom), Madrid (Spain), and Potsdam (Germany), simulated the reionization of the universe with a focus on how it happened in our own neighborhood—the Milky Way and Andromeda galaxies along with a number of smaller ones that comprise the Local Group.

The researchers’ goal was to learn about reionization across the universe, while predicting its imprints that can be observed today on these nearby celestial objects. Astronomers think that when dwarf galaxies much smaller than the Milky Way were engulfed by reionization, for example, their star formation was suppressed. If the team’s newest simulation confirmed this, it would solve a major puzzle in the standard theory of cosmology. Its first results are soon to be published in the Monthly Notices of the Royal Astronomical Society.

To model global reionization accurately, the team needed to simulate a very large volume that would expand with the general expansion of the universe, would be large enough to contain millions of galaxies as possible sources of radiation, and would have fine enough spatial detail to resolve the smallest galaxies. This required an extremely large computational grid of 4,096 by 4,096 by 4,096 spatial cells (about 69 billion) for the gas and radiation, plus the same number of dark matter particles, in a cubic volume that measures 300 million light-years per side today. To model the local universe, the team also had to create initial conditions that would guarantee the formation, 13 billion years later, of all the well-known local structures observed today, from the Milky Way and Andromeda galaxies of the Local Group to the giant clusters of galaxies called Virgo and Fornax.
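The grid figures quoted above can be checked with a few lines of arithmetic. The Python sketch below is illustrative only: the variable names are not from the CoDa team, and the cell size it reports is a present-day figure obtained by simple division, not a number stated by the researchers.

```python
# Back-of-the-envelope check of the grid size described in the article.
CELLS_PER_SIDE = 4096        # grid cells along each edge of the cube
BOX_SIDE_LY = 300e6          # box edge today, in light-years

total_cells = CELLS_PER_SIDE ** 3
cell_side_ly = BOX_SIDE_LY / CELLS_PER_SIDE

print(f"total cells: {total_cells:,}")                  # total cells: 68,719,476,736
print(f"cell side today: {cell_side_ly:,.0f} ly")       # cell side today: 73,242 ly
```

The total rounds to the 69 billion cells (and matching number of dark matter particles) mentioned in the text.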

Shapiro noted that these simulations were the first to have all three components—large enough volume, fine enough spatial grid, and conditions just right to model the local universe—while calculating all the major physical processes involved in reionizing the universe, in a “fully coupled” way. This meant that, in addition to calculating the gravitational pull of dark matter, atomic gas, and stars on each other, researchers had to simulate the hydrodynamics of hydrogen gas flowing within and between galaxies and the transport of the radiation that helped simultaneously complete the universe’s ionization and heating.

“You can’t simulate the radiation as an afterthought,” Shapiro said. “You can’t just simulate the gravitational collapse of galaxies and then ‘paint on’ the radiation and let it ionize. That isn’t good enough, because there is feedback. The radiation influences how galaxies form and release the starlight that causes reionization—so we need to do it simultaneously.”

There was a barrier to doing this, however, which even some of the fastest supercomputers could not overcome previously. To solve the equations describing the time history of reionization, researchers needed to compute “snapshots” of the moving gas, stars, dark matter, and radiation to track the expanding surfaces of the ionized zones (called “ionization fronts”) without blurring them.

The radiation that causes these surfaces to expand travels at the speed of light, much faster than the gas, dark matter, and stars. To follow the radiation and ionization fronts accurately, while also tracking the slower-moving gas, stars, and dark matter, Shapiro’s team programmed the ionization and radiation snapshots to run on GPUs. The team also used CPUs on the same parallel computer nodes to compute the motions of gas, dark matter, and stars separately.

By making the GPUs perform hundreds of finer-spaced “frames per second” of the reionization time history while the CPUs performed just one of their “frames per second,” the team greatly reduced the total number of computations required to advance the simulation.
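The idea of letting the radiation take many small steps for each gas step can be sketched in Python. This is a toy illustration, not the RAMSES-CUDATON implementation: the function names, the Courant-style step limit (a light signal may cross at most one cell per radiation step), and the numerical values are all assumptions chosen for the example.

```python
import math

C_LIGHT_LY_PER_YR = 1.0  # speed of light, in light-years per year


def radiation_substeps(dt_hydro_yr, cell_size_ly, courant=1.0):
    """Number of radiation steps needed to cover one gas/gravity step.

    Assumes the radiation step is limited so that light crosses at most
    `courant` cells per step (an assumed criterion, not from the article).
    """
    dt_rad_yr = courant * cell_size_ly / C_LIGHT_LY_PER_YR
    return max(1, math.ceil(dt_hydro_yr / dt_rad_yr))


def advance(n_hydro_steps, dt_hydro_yr, cell_size_ly):
    """Count the updates performed by each solver in a subcycled run."""
    hydro_updates = 0
    radiation_updates = 0
    for _ in range(n_hydro_steps):
        # Stand-in for the radiation and ionization updates (GPU side).
        for _ in range(radiation_substeps(dt_hydro_yr, cell_size_ly)):
            radiation_updates += 1
        # Stand-in for the gas, dark matter, and star update (CPU side).
        hydro_updates += 1
    return hydro_updates, radiation_updates


# Hypothetical values: a 1-million-year gas step on 5,000-light-year cells.
hydro_updates, radiation_updates = advance(3, 1e6, 5000.0)
print(hydro_updates, radiation_updates)  # 3 600
```

With these made-up numbers, the slow components are updated 3 times while the radiation is updated 600 times. Without subcycling, every component would have to take all 600 small steps.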

Such a multi-faceted simulation needed not only a powerful supercomputer, but also one that had plenty of GPUs so the team could offload its radiation and ionization calculations. Thankfully, the Oak Ridge Leadership Computing Facility’s (OLCF’s) Cray XK7 Titan supercomputer fit the description.

The OLCF, a DOE Office of Science User Facility located at ORNL, built Titan with a GPU for every one of its 18,688 compute nodes. The Shapiro team needed 8,192 GPUs to run its simulations.
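A rough sense of the workload per GPU follows from these numbers. The sketch below assumes the 4,096-cubed grid is split evenly, one sub-volume per GPU; the article does not describe the actual domain decomposition, so the even split is an assumption.

```python
# Rough workload per GPU, assuming an even split of the grid.
TOTAL_CELLS = 4096 ** 3      # grid size quoted in the article
GPUS_USED = 8192             # GPUs the team needed
TITAN_NODES = 18688          # Titan compute nodes, one GPU each

cells_per_gpu = TOTAL_CELLS // GPUS_USED
titan_fraction = GPUS_USED / TITAN_NODES

print(f"cells per GPU: {cells_per_gpu:,}")          # cells per GPU: 8,388,608
print(f"share of Titan: {titan_fraction:.1%}")      # share of Titan: 43.8%
```

Under that assumption each GPU handles about 8.4 million cells, which would fit a block of, for example, 128 by 256 by 256 cells (a hypothetical shape).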

“Titan is special in having such a large number of GPUs, and that is something that made our simulations a Titan-specific milestone,” Shapiro said.

Simulating our cosmic dawn

The resulting fully coupled simulation performed during the Shapiro team’s INCITE 2013 grant is called CoDa, short for “Cosmic Dawn.” It is based upon the group’s flagship code, RAMSES-CUDATON, developed by team members Romain Teyssier (Zurich) and Dominique Aubert (Strasbourg), and run on Titan by Pierre Ocvirk (Strasbourg). Team members from the Constrained Local Universe Simulations, led by Gustavo Yepes (Madrid), Stefan Gottloeber (Potsdam), and Yehuda Hoffman (Jerusalem), provided the initial framework for the simulation.

The RAMSES-CUDATON code actually combines several coupled numerical methods. The RAMSES component treats gravitational dynamics with an N-body calculation, in which matter moves under gravitational forces computed by discretizing the dark matter and stars as “N” point-mass particles and the atomic gas as a uniform cubic lattice of cells. Because the gas also experiences pressure forces, an additional hydrodynamics calculation is performed on the same cubic lattice, coupled to the gravity calculation.
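To show how point-mass particles and a cubic lattice of cells can share one calculation, here is a minimal Python sketch that deposits particle masses onto a small periodic grid using nearest-grid-point assignment. It is a simplified stand-in, not RAMSES code: production codes typically use higher-order schemes such as cloud-in-cell, and the function name, grid size, and particle data are hypothetical.

```python
import math


def deposit_ngp(positions, masses, n_cells, box_size):
    """Add each particle's mass to the grid cell that contains it.

    Positions outside [0, box_size) are wrapped periodically.
    Returns a nested list grid[i][j][k] of mass per cell.
    """
    cell = box_size / n_cells
    grid = [[[0.0] * n_cells for _ in range(n_cells)] for _ in range(n_cells)]
    for (x, y, z), m in zip(positions, masses):
        i = math.floor(x / cell) % n_cells
        j = math.floor(y / cell) % n_cells
        k = math.floor(z / cell) % n_cells
        grid[i][j][k] += m
    return grid


# Hypothetical particles in a unit box with 4 cells per side.
positions = [(0.1, 0.1, 0.1), (0.9, 0.9, 0.9), (0.95, 0.9, 0.9)]
masses = [1.0, 2.0, 3.0]
grid = deposit_ngp(positions, masses, n_cells=4, box_size=1.0)

total_mass = sum(sum(sum(row) for row in plane) for plane in grid)
print(grid[0][0][0], grid[3][3][3], total_mass)  # 1.0 5.0 6.0
```

Once the particle mass is on the same lattice as the gas, a single gravity solve over the grid can act on both, which is the coupling the paragraph above describes.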

The second part of the code computes the transfer of radiation and the ionization of the gas, also using the same cubic lattice, by the method called ATON. The GPU version of ATON, called CUDATON, benefitted enormously from Titan’s GPUs—the team was able to accelerate its simulations by a factor of roughly 100.

Cosmic dawn, cosmic datasets

To simulate such fine spatial detail over such a large volume, the researchers generated enormous data sets—over 2 petabytes (2 million gigabytes), in fact.

The team stored 138 snapshots, one for every 10 million years of cosmic time, starting at a time about 10 million years after the big bang. Each snapshot created about 16 terabytes of raw data. Because saving this much data indefinitely was not possible, the team also created a greatly reduced data set on the order of 100 terabytes for further analysis and a more permanent archival record.
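The storage figures are consistent with each other, as a quick calculation shows. The sketch uses only the numbers quoted above, treats a petabyte as 1,000 terabytes, and assumes evenly spaced snapshots starting at exactly 10 million years; the variable names are illustrative.

```python
# Consistency check on the snapshot storage numbers quoted in the article.
N_SNAPSHOTS = 138
TB_PER_SNAPSHOT = 16          # approximate raw size of one snapshot
SNAPSHOT_SPACING_MYR = 10     # cosmic time between snapshots
FIRST_SNAPSHOT_MYR = 10       # approximate time of the first snapshot
REDUCED_TB = 100              # order-of-magnitude size of the reduced set

raw_tb = N_SNAPSHOTS * TB_PER_SNAPSHOT
raw_pb = raw_tb / 1000
last_snapshot_myr = FIRST_SNAPSHOT_MYR + (N_SNAPSHOTS - 1) * SNAPSHOT_SPACING_MYR
reduction_factor = raw_tb / REDUCED_TB

print(f"raw data: {raw_tb} TB = {raw_pb} PB")             # raw data: 2208 TB = 2.208 PB
print(f"last snapshot: {last_snapshot_myr} Myr")          # last snapshot: 1380 Myr
print(f"reduction: about {reduction_factor:.0f}x")        # reduction: about 22x
```

The product of 138 snapshots and roughly 16 terabytes each gives about 2.2 petabytes, matching the "over 2 petabytes" figure.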

To analyze this particularly large, challenging set of raw data, the team subsequently was awarded additional computing hours to continue using the OLCF’s analysis clusters Eos and Rhea. The analysis clusters allowed the researchers to scan each “snapshot” for the positions of the N-body particles so they could identify the locations and masses of dense, quasi-spherical clumps of particles called “halos,” using a “halo finder” program. Each halo is the scaffolding within which a galaxy formed, with gas, dark matter, and star particles held together by their mutual gravitational attraction. With every snapshot, the number and location of galactic halos changed.

By matching this catalog of galactic halos located by the halo finder to the properties of the gas and stars within them in each snapshot, the team could track the evolving properties of all the galaxies in their simulation for comparison with observations of such early galaxies.
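The article does not say which algorithm the team's halo finder uses. One common approach is "friends-of-friends," which links any two particles closer than a chosen linking length and treats each connected group as a halo. The Python sketch below is a small, brute-force illustration of that idea under this assumption; the function names, linking length, and particle data are hypothetical, and a real analysis of billions of particles would need a parallel, spatially indexed implementation.

```python
from collections import defaultdict
from math import dist


def friends_of_friends(positions, linking_length):
    """Group particle indices into clumps of mutually linked neighbors."""
    parent = list(range(len(positions)))

    def find(i):
        # Union-find root lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    # Brute-force pair search: fine for a toy example, O(N^2) in general.
    for i in range(len(positions)):
        for j in range(i + 1, len(positions)):
            if dist(positions[i], positions[j]) <= linking_length:
                parent[find(i)] = find(j)

    groups = defaultdict(list)
    for i in range(len(positions)):
        groups[find(i)].append(i)
    return sorted(groups.values(), key=len, reverse=True)


def halo_catalog(positions, masses, linking_length, min_members=2):
    """Return mass, center of mass, and members for each group."""
    catalog = []
    for members in friends_of_friends(positions, linking_length):
        if len(members) < min_members:
            continue
        total = sum(masses[i] for i in members)
        center = tuple(
            sum(masses[i] * positions[i][axis] for i in members) / total
            for axis in range(3)
        )
        catalog.append({"mass": total, "center": center, "members": members})
    return catalog


# Hypothetical data: two clumps and one isolated particle.
positions = [(0, 0, 0), (0.1, 0, 0), (0.2, 0, 0),
             (5, 5, 5), (5.1, 5, 5), (9, 9, 9)]
masses = [1.0] * len(positions)
catalog = halo_catalog(positions, masses, linking_length=0.15)
print([(h["mass"], h["members"]) for h in catalog])
# [(3.0, [0, 1, 2]), (2.0, [3, 4])]
```

Running the same procedure on every snapshot yields a halo catalog per snapshot, which can then be matched against the gas and star properties as described above.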

These OLCF clusters made it possible to analyze, in parallel, raw data sets that would otherwise have been too large for smaller machines to handle.

After analyzing CoDa simulation snapshots, Shapiro’s team found that the simulated reionization of the local universe ended a bit later than observations suggest for the universe-at-large, but the predicted properties of galaxies, intergalactic gas, and the radiation backgrounds that filled the universe at such early times agreed with those observed once an adjustment was made for this late reionization. The team also found that star formation in the dwarf galaxies—those of total mass below a few billion times the mass of the Sun—was severely suppressed by reionization, as hypothesized. The bulk of the star formation in the universe during the epoch of reionization was dominated, instead, by galaxies with masses between 10 billion and 100 billion times that of the Sun.

Most importantly, CoDa will now serve as a pathfinder for a follow-up simulation, CoDa II, already planned, in which parameters that control the efficiency for converting cold, dense gas into stars will, with the hindsight provided by CoDa I, be adjusted to make reionization end a bit earlier.

Despite the extreme scale of the CoDa simulation, Shapiro said there are still other ways to improve. Even with the future of exascale computing—computers capable of a thousandfold speedup over current petascale machines—he noted that completely simulating the universe in all macro- and microscopic detail would still demand more power.

However, as next-generation supercomputers add more GPUs and memory, researchers will be able to zoom in on small patches of the universe, and within these, zoom in further on individual galaxies, offering a more detailed look at star and galaxy formation. “We may be able to say, ‘I can’t follow the formation of all the stars, but maybe I can zoom in on one galaxy, then zoom in on one cloud of molecular gas within it, which might wind up forming several thousand stars,’” Shapiro said.

Related Resources:

- https://news.developer.nvidia.com/share-your-science-simulating-reionization-of-the-universe-witnessing-our-own-cosmic-dawn/

- https://arxiv.org/abs/1511.00011

- https://images.nvidia.com/events/sc15/SC5124-simulating-reionization-witnessing-cosmic-dawn.html

Oak Ridge National Laboratory is supported by the US Department of Energy’s Office of Science. The single largest supporter of basic research in the physical sciences in the United States, the Office of Science is working to address some of the most pressing challenges of our time. For more information, please visit science.energy.gov.