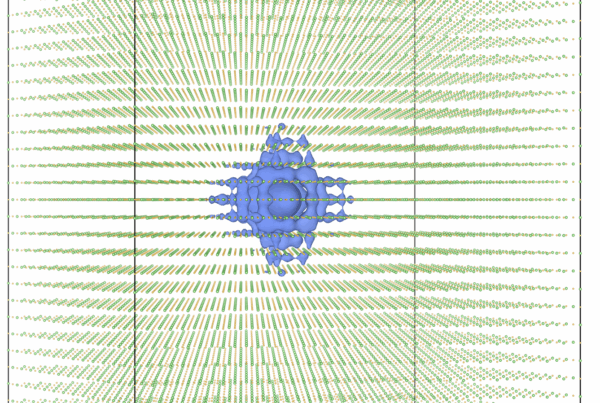

David Pugmire of the OLCF has developed a parallel data reader for SPECFEM3D_GLOBE simulation output that is being used inside VisIt, a free interactive parallel visualization and graphical analysis tool for viewing scientific data. Pictured here: volume rendering of shear-wave perturbations computed with the seismology simulation code SPECFEM3D_GLOBE.

OLCF’s SciComp Team provides valuable assist for Princeton researcher

There is a lot more to the OLCF than Titan. The center has a staff of experts who help users with everything from porting their codes to Titan’s hybrid architecture to data analysis, visualization, and I/O performance.

For many researchers, Titan is only part of the picture; managing and understanding data are quickly becoming as important as the simulations that produce them.

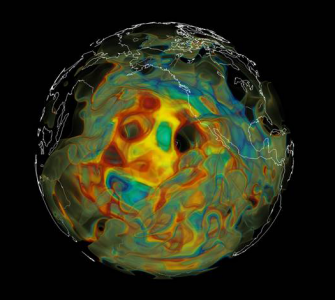

Professor Jeroen Tromp of Princeton University leads a team using the Titan supercomputer, located at the Department of Energy’s Oak Ridge National Laboratory (ORNL), to reveal the Earth’s inner workings via adjoint tomography simulations. Adjoint tomography involves monitoring the interaction of a forward wavefield, in which the waves travel from the source to the receivers, and an “adjoint” wavefield, in which the waves travel in reverse, from the receivers back to the source. “We want to image the physical state of the Earth’s interior,” he said.

Tromp’s team is made up of domain scientists, researchers grounded in their specialty: in this case, the Earth and its inner workings. When it comes to software development and data management and analysis, however, they need people like those at the OLCF to use a machine on the scale of Titan effectively.

To get up and running, Tromp’s team made a preliminary trip to the OLCF, a DOE Office of Science User Facility, in December 2012 and visited with Judy Hill, a liaison in the Scientific Computing Group at the center. Hill helped the group compile its SPECFEM3D_GLOBE spectral element wave propagation code and took the researchers through the basics of running on Titan.

Today the group’s workflow is on par with those of other Titan users: the team runs large-scale simulations that scale well across Titan’s enormous architecture. In fact, the team is now running “ensemble” simulations that will further improve its model of the Earth beneath our feet. “Now we’re trying to automate the workflow and data analysis,” said Hill.

That, too, is the realm of the Scientific Computing Group.

Enter ADIOS, a parallel I/O library that provides a simple, flexible way for scientists to describe the data in their code that may need to be written, read, or processed outside of the running simulation. The middleware, developed at ORNL with academic partners, was chosen as the basis of a new data format for this project, one that aggregates large amounts of information into a small number of large files to improve the I/O performance of the workflow.

“The amount of I/O here is phenomenal,” said Tromp, adding that in traditional seismology, every seismogram is one file, and each earthquake involves thousands of seismograms, with the team eventually studying thousands of earthquakes. The sheer number of files could negatively impact Titan’s file system, one of the world’s biggest.

“The main bottlenecks in the adjoint tomography workflow stem from the number of files to be read and written, which significantly reduces performance and creates problems on large-scale parallel file systems due to heavy I/O traffic,” said the OLCF’s Norbert Podhorszki, adding that classical seismic data formats, which describe each seismic trace as a single file, exacerbate this problem.

Necessity is the mother of invention

Systems of linked seismographs, known as seismic arrays, are greatly increasing the amount of seismic data available for analysis. In fact, the datasets produced by USArray and China Array are so large that they are no longer suited to traditional data formats. Industry datasets are likewise growing in size; 3D marine surveys can have 5,000 shots and 50,000 recorders. Archaic file structures make analyzing this petascale data difficult and, at times, impossible.

The I/O bottleneck could be relieved by storing all time series for a single shot or earthquake in one file and reading and writing those files with parallel I/O.
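To make the file-count problem concrete, here is a minimal, serial sketch in Python using only the standard library. It is not the ADIOS or ASDF API: the file layout (a length-prefixed JSON index followed by raw float32 samples), the function names, and the event and station names are all hypothetical. It contrasts a one-file-per-seismogram layout with a one-file-per-event layout and shows that a single trace can still be located inside the aggregated file through its index. Parallel I/O, which ADIOS provides in the real workflow, is not modeled here.

```python
import json
import struct
import tempfile
from pathlib import Path


def write_per_trace(root, events):
    """Legacy-style layout (hypothetical): one file per seismogram."""
    count = 0
    for event_id, traces in events.items():
        for station, samples in traces.items():
            path = root / f"{event_id}.{station}.bin"
            path.write_bytes(struct.pack(f"<{len(samples)}f", *samples))
            count += 1
    return count


def write_per_event(root, events):
    """Aggregated layout (hypothetical): one file per event.

    File = 4-byte header length, JSON index {station: offset, nsamples},
    then all traces' float32 samples concatenated.
    """
    count = 0
    for event_id, traces in events.items():
        index, payload, offset = {}, bytearray(), 0
        for station, samples in traces.items():
            blob = struct.pack(f"<{len(samples)}f", *samples)
            index[station] = {"offset": offset, "nsamples": len(samples)}
            payload += blob
            offset += len(blob)
        header = json.dumps(index).encode()
        with open(root / f"{event_id}.agg", "wb") as f:
            f.write(struct.pack("<I", len(header)))
            f.write(header)
            f.write(payload)
        count += 1
    return count


def read_trace(path, station):
    """Read one station's trace from an aggregated file via its index."""
    with open(path, "rb") as f:
        (hlen,) = struct.unpack("<I", f.read(4))
        index = json.loads(f.read(hlen))
        entry = index[station]
        f.seek(4 + hlen + entry["offset"])  # jump straight to this trace
        n = entry["nsamples"]
        return list(struct.unpack(f"<{n}f", f.read(4 * n)))


if __name__ == "__main__":
    # Hypothetical toy data: 3 events, 100 stations, 3 samples per trace.
    events = {
        f"ev{e}": {f"st{s}": [float(s), float(e), 0.5] for s in range(100)}
        for e in range(3)
    }
    with tempfile.TemporaryDirectory() as d:
        legacy, aggregated = Path(d, "legacy"), Path(d, "aggregated")
        legacy.mkdir()
        aggregated.mkdir()
        print("per-trace files:", write_per_trace(legacy, events))      # 300
        print("per-event files:", write_per_event(aggregated, events))  # 3
        print(read_trace(aggregated / "ev2.agg", "st42"))               # [42.0, 2.0, 0.5]
```

With 3 events and 100 stations each, the per-trace layout creates 300 files while the per-event layout creates 3. Scaled to thousands of earthquakes with thousands of seismograms each, that same ratio is the difference between millions of files and thousands.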

New methods are limited by the fixed structure of older data formats, which were designed for specific applications running on far more limited computing power. In addition, seismologists often ignore standards because adhering to them increases development time. The new Adaptable Seismic Data Format, known as ASDF (https://asdf.readthedocs.org), is being developed by Princeton University and the University of Munich. It features an open, modular design that will be able to evolve and handle future advances in seismology. ASDF uses ADIOS to provide an open, scalable data format for the seismic community.

By taking advantage of open-source software and the Internet, the team has developed a novel, modular data format that can evolve and adapt to future problems. An open development wiki that accepts contributions from the community will help grow seismology as a science. The new format also records flexible provenance information that tells users where the data came from and what has been done to it.

The team’s SPECFEM3D_GLOBE simulation now uses ADIOS to write its output for checkpointing and visualization. In addition, David Pugmire of the OLCF has developed a parallel data reader for SPECFEM3D_GLOBE simulation output that is being used inside VisIt, a free interactive parallel visualization and graphical analysis tool for viewing scientific data on Unix and PC platforms. Each ADIOS file the reader handles contains data for the three regions of the Earth being simulated (crust-mantle, outer core, and inner core).

Using the new ADIOS data reader, the OLCF has produced visualizations of the team’s recent simulation runs, giving Tromp’s team yet another major advantage in its quest to find out exactly what goes on in the planet’s underworld.

Even with systems as powerful as Titan, however, there are still limitations. For instance, Tromp would love to increase the frequency of the waves being simulated. His team currently resolves waves with periods of 9 seconds (the period being the time it takes a particle to complete one up-and-down oscillation), but the researchers would like to reach periods of 1 second, the shortest-period waves that can travel clear across our planet. “The good news is that data are available for those shorter periods,” said Tromp, “and we have things to work toward, but they require gradual improvements in our modeling.”

And as the team’s work progresses, so will the data challenges. Thanks to the work of the OLCF, however, the team is ahead of the curve.

The Oak Ridge National Laboratory is supported by the Office of Science of the U.S. Department of Energy. The Office of Science is the single largest supporter of basic research in the physical sciences in the United States, and is working to address some of the most pressing challenges of our time. For more information, please visit science.energy.gov