Credit: Dave Pugmire, ORNL.

Titan blazes trail for ITER reactor via DIII-D

Few problems have vexed physicists like fusion, the process by which stars fuel themselves and by which researchers on Earth hope to create the energy source of the future.

By heating the hydrogen isotopes tritium and deuterium to more than five times the temperature of the Sun’s surface, scientists create a reaction that could eventually produce electricity. Turns out, however, that confining the engine of a star to a manmade vessel and using it to produce energy is tricky business.

Big problems, such as this one, require big solutions. Luckily, few solutions are bigger than Titan, the Department of Energy’s flagship Cray XK7 supercomputer managed by the Oak Ridge Leadership Computing Facility.

Titan allows advanced scientific applications to reach unprecedented speeds, enabling scientific breakthroughs faster than ever with only a marginal increase in power consumption. Its marriage of GPU and CPU number-crunching hardware lets Titan, located at Oak Ridge National Laboratory (ORNL), reach a peak performance of 27 petaflops and claim the title of the world’s fastest computer dedicated solely to scientific research.

And fusion is at the head of the research pack. In fact, a team led by Princeton Plasma Physics Laboratory’s (PPPL’s) C.S. Chang increased the performance of its XGC1 fusion code fourfold on Titan, using both its GPUs and CPUs, compared with the code’s previous CPU-only incarnation. The gain followed a 6-month performance engineering period during which the team tweaked its code to take full advantage of Titan’s revolutionary hybrid architecture.

“In nature, there are two types of physics,” said Chang. The first is equilibrium, in which changes happen in a “closed” world toward a static state, making the calculations comparatively simple. “This science has been established for a couple hundred years,” he said. Unfortunately, plasma physics falls in the second category, in which a system has inputs and outputs that constantly drive the system to a nonequilibrium state, which Chang refers to as an “open” world.

Most magnetic fusion research is centered on a tokamak, a donut-shaped vessel that shows the most promise for magnetically confining the extremely hot and fragile plasma. The plasma is constantly coming into contact with the vessel wall and losing mass and energy, which in turn introduces neutral particles back into the plasma. As a result, equilibrium physics generally doesn’t apply at the edge, and simulating the environment is difficult using conventional computational fluid dynamics.

Another major reason the simulations are so complex is their multiscale nature. The distance scales involved range from millimeters (what’s going on among the gyrating particles and turbulence eddies inside the plasma itself) to meters (looking at the entire vessel that contains the plasma). The time scales introduce even more complexity, as researchers want to see how the edge plasma evolves from microseconds in particle motions and turbulence fluctuations to milliseconds and seconds in its full evolution. Furthermore, these two scales are coupled. “The simulation scale has to be very large, but still has to include the small-scale details,” said Chang.
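To get a feel for that scale separation, the ranges quoted above can be turned into rough ratios. The sketch below is only back-of-the-envelope arithmetic: the four constants are order-of-magnitude assumptions read off the text (about a millimeter to about a meter, about a microsecond to about a second), not parameters of the XGC1 code.

```python
# Order-of-magnitude assumptions taken from the ranges quoted in the text.
SMALL_LENGTH_MM = 1          # turbulence eddies: roughly 1 millimeter
LARGE_LENGTH_MM = 1_000      # whole vessel: roughly 1 meter
FAST_TIME_US = 1             # particle motion: roughly 1 microsecond
SLOW_TIME_US = 1_000_000     # full edge evolution: roughly 1 second


def scale_separation(small: int, large: int) -> int:
    """Return how many times the small scale fits into the large one."""
    return large // small


length_ratio = scale_separation(SMALL_LENGTH_MM, LARGE_LENGTH_MM)
time_ratio = scale_separation(FAST_TIME_US, SLOW_TIME_US)

print(f"length scales span a factor of {length_ratio:,}")
print(f"time scales span a factor of {time_ratio:,}")
```

Even under these coarse assumptions, a simulation that resolves the smallest scale while covering the largest has to span about three orders of magnitude in each spatial direction and about six in time.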

And few machines are as capable of delivering in that regard as is Titan. “The bigger the computer, the higher the fidelity,” he said, simply because researchers can incorporate more physics, and few problems require more physics than simulating a fusion plasma.

On the hunt for blobs

Studying the plasma edge is critical to understanding the plasma as a whole. “What happens at the edge is what determines the steady fusion performance at the core,” said Chang. But when it comes to studying the edge, “the effort hasn’t been very successful because of its complexity,” he added.

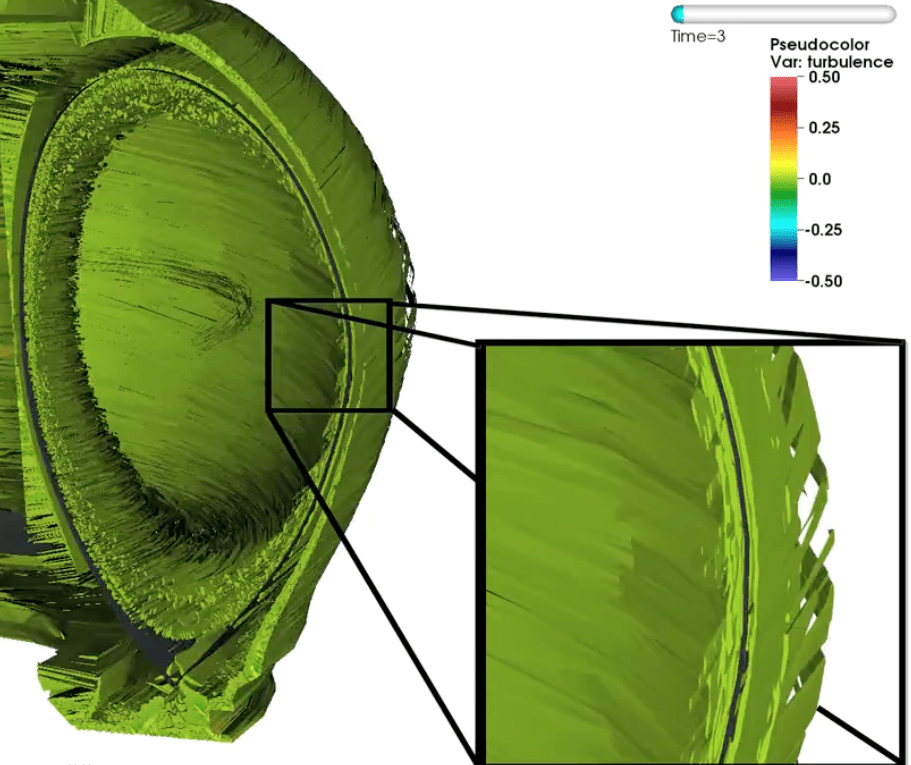

Chang’s team is shedding light on a long-known but little-understood phenomenon known as “blobby” turbulence, in which formations of strong plasma density fluctuations, or clumps, flow together and move around large amounts of edge plasma, greatly affecting edge and core performance. The team studied the phenomenon in the DIII-D tokamak at General Atomics in San Diego, CA. DIII-D-based simulations are considered a critical stepping-stone for the full-scale, first-principles simulation of the ITER plasma edge. ITER is a tokamak reactor to be built in France to test the scientific feasibility of fusion energy.

The phenomenon was discovered more than 10 years ago and is one of the “most important things in understanding edge physics,” said Chang, adding that people have tried to model it using fluids (i.e., equilibrium physics quantities). However, because the plasma inhabits an open world, it requires first-principles, ab initio simulations. Now, for the first time, researchers have verified the existence and modeled the behavior of these blobs using a gyrokinetic code (one that uses the most fundamental plasma kinetic equations, with analytic treatment of the fast gyrating particle motions) and the DIII-D geometry.

This same first-principles approach also revealed the divertor heat load footprint. The divertor will extract heat and helium ash from the plasma, acting as a vacuum system and ensuring that the plasma remains stable and the reaction ongoing.

These discoveries were made possible because the team’s XGC1 code exhibited highly efficient weak and strong scalability on Titan’s hybrid architecture up to the full size of the machine. Collaborating with Ed D’Azevedo, supported by the OLCF and by the DOE Scientific Discovery through Advanced Computing (SciDAC) project Center for Edge Physics Simulation (EPSi), along with Pat Worley (ORNL), Jianying Liand (PPPL) and Seung-Hoe Ku (PPPL) also supported by EPSi, this team optimized its XGC1 code for Titan’s GPUs using the maximum number of nodes, boosting performance fourfold over the previous CPU-only code. This performance increase has enormous implications for predicting fusion energy efficiency in ITER.
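For readers unfamiliar with the terms, strong scaling keeps the total problem size fixed as nodes are added, while weak scaling grows the problem along with the node count. The sketch below shows the standard efficiency formulas for each. The function names and the timings in the example are hypothetical illustrations, not measurements from XGC1 or Titan.

```python
def strong_scaling_efficiency(t_base: float, n_base: int,
                              t_n: float, n: int) -> float:
    """Fixed total problem size: ideal run time falls in proportion to 1/n.

    Returns 1.0 when n nodes deliver the ideal speedup over n_base nodes.
    """
    return (t_base * n_base) / (t_n * n)


def weak_scaling_efficiency(t_base: float, t_n: float) -> float:
    """Problem size grows with node count: ideal run time stays constant.

    Returns 1.0 when the larger run takes no longer than the baseline.
    """
    return t_base / t_n


# Hypothetical timings in seconds, chosen only to exercise the formulas.
strong = strong_scaling_efficiency(t_base=100.0, n_base=1, t_n=12.5, n=8)
weak = weak_scaling_efficiency(t_base=100.0, t_n=125.0)

print(f"strong scaling efficiency: {strong:.2f}")
print(f"weak scaling efficiency:   {weak:.2f}")
```

An efficiency close to 1.0 on both measures, sustained as the node count grows, is what "highly efficient" scaling would look like under these definitions.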

“We can now use both the CPUs and GPUs efficiently in full-scale production simulations of the tokamak plasma,” said Chang.

Furthermore, added Chang, Titan is beginning to allow the researchers to model physics, such as electron-scale turbulence, that was out of reach altogether as little as a year ago. Jaguar, Titan’s CPU-only predecessor, was fine for ion-scale edge turbulence because ions are both slower and heavier than electrons (for which the computing requirement is 60 times greater), but it fell seriously short when it came to calculating electron-scale turbulence. While Titan is still not quite powerful enough to model electrons as accurately as Chang would like, the team has developed a technique that allows them to simulate electron physics approximately 10 times faster than on Jaguar.

And they are just getting started. The researchers plan on eventually simulating the full volume plasma with electron-scale turbulence to understand how these newly modeled blobs affect the fusion core, because whatever happens at the edge determines conditions in the core. “We think this blob phenomenon will be a key to understanding the core,” said Chang, adding, “All of these are critical physics elements that must be understood to raise the confidence level of successful ITER operation. These phenomena have been observed experimentally for a long time, but have not been understood theoretically at a predictable confidence level.”

Given that the team can currently use all of Titan’s more than 18,000 nodes, a better understanding of fusion is certainly in the works. A better understanding of blobby turbulence and its effects on plasma performance is a significant step toward that goal, proving yet again that few tools are more critical than simulation if mankind is to use the engines of stars to solve its most pressing dilemma: clean, abundant energy.