Project goal is to help inform users

As high-performance computing (HPC) capability breaks petaflop boundaries and pushes toward the exascale to enable new scientific discoveries, the amount of data generated by large-scale simulations is becoming more difficult to manage. At the Oak Ridge Leadership Computing Facility (OLCF), users on the world’s most powerful supercomputer for open science, Titan, are routinely producing tens or hundreds of terabytes of data, and many predict their needs will multiply significantly in the next 5 years.

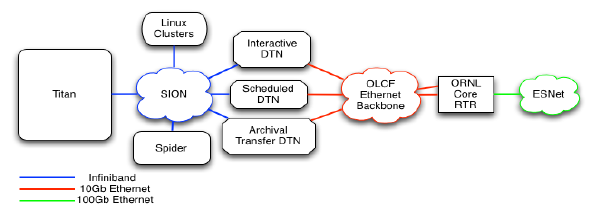

The OLCF currently provides users with 32 petabytes of scratch storage and hosts up to 34 petabytes of archival storage for them. However, individual users often need to move terabytes of data to other facilities for analysis and long-term use.

“We’re adding data at an amazing rate,” said Suzanne Parete-Koon of the OLCF User Assistance and Outreach Group.

To address this expanding load of outgoing data, three OLCF groups worked together to identify data transfer solutions and improve transfer rates for users by evaluating three parallel-streaming tools that can be optimized on upgraded OLCF hardware. Parete-Koon and colleagues Hai Ah Nam and Jason Hill shared their results in the paper “The practical obstacles of data transfer: Why researchers still love scp,” which was published in conjunction with the Third International Workshop on Network-Aware Data Management held at the SC13 supercomputing conference in November 2013.

Leadership computing facilities have long anticipated an increasing demand for data management resources. In a 2013 survey, OLCF staff asked users to rank hardware features in order of priority for their future computing needs. Archival storage ranked fourth and wide-area network bandwidth for data transfers ranked seventh.

The OLCF revisited its data transfer capabilities and dedicated two nodes for outgoing batch transfers last year. At the beginning of 2014, that capability increased to 10 nodes.

“We saw the need for this service and have worked throughout the year to deploy these nodes to support the scientific data movement that our users demand,” Hill said.

These dedicated batch nodes run data transfers on a schedule set by user requests.

“By scheduling transfers, users can maximize their transfer and get more predictable performance,” Hill said.

Data leaves the OLCF over the Energy Sciences Network (ESnet), the Department of Energy's speedier lane of the Internet, which can carry 10 times more bits per second than the average network. But in fall 2013, even with ESnet's high bandwidth and the OLCF's new dedicated transfer nodes, users still complained of painfully slow transfer rates.

Parete-Koon, Nam, and Hill discovered that researchers were often underutilizing these resources by relying on single-stream transfer methods such as scp instead of multiple-stream methods, which split data into several simultaneous streams and therefore achieve higher transfer rates.

“Using scp when multiple-stream methods are available is akin to drivers using a single lane of a 10-lane highway,” Nam said.
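The core idea behind multiple-stream tools, dividing one large transfer into pieces that move at the same time, can be illustrated with a small local sketch. This is not how bbcp, GridFTP, or Globus Online are implemented (they open several network connections between hosts); it only assumes a local file and uses threads as stand-ins for streams. The function names `byte_ranges`, `copy_range`, and `parallel_copy` are hypothetical.

```python
import os
from concurrent.futures import ThreadPoolExecutor


def byte_ranges(size: int, streams: int) -> list[tuple[int, int]]:
    """Split [0, size) into `streams` contiguous (offset, length) ranges."""
    if streams < 1:
        raise ValueError("streams must be >= 1")
    base, extra = divmod(size, streams)
    ranges = []
    offset = 0
    for i in range(streams):
        # The first `extra` ranges take one extra byte so lengths sum to size.
        length = base + (1 if i < extra else 0)
        ranges.append((offset, length))
        offset += length
    return ranges


def copy_range(src: str, dst: str, offset: int, length: int,
               bufsize: int = 1 << 20) -> None:
    """Copy one byte range of src to the same position in dst (one 'stream')."""
    with open(src, "rb") as fin, open(dst, "r+b") as fout:
        fin.seek(offset)
        fout.seek(offset)
        remaining = length
        while remaining > 0:
            chunk = fin.read(min(bufsize, remaining))
            if not chunk:
                break
            fout.write(chunk)
            remaining -= len(chunk)


def parallel_copy(src: str, dst: str, streams: int = 5) -> None:
    """Copy src to dst with `streams` concurrent workers, one per byte range."""
    size = os.path.getsize(src)
    # Preallocate the destination so each worker can write at its own offset.
    with open(dst, "wb") as f:
        f.truncate(size)
    with ThreadPoolExecutor(max_workers=streams) as pool:
        futures = [pool.submit(copy_range, src, dst, off, n)
                   for off, n in byte_ranges(size, streams)]
        for fut in futures:
            fut.result()  # re-raise any exception from a worker
```

On a single local disk this will not necessarily be faster than a plain copy. The benefit shown in the article comes from network links where one stream cannot fill the available bandwidth on its own.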

Staff tested three multiple-stream tools compatible with and optimized for OLCF systems—bbcp, GridFTP, and Globus Online—for ease of use and reliable availability. Results showed that bbcp and GridFTP transferred data up to 15 times faster than scp in performance tests.

“Although these multi-streaming tools were available to users, they were being grossly underutilized,” Parete-Koon said. “We wondered why researchers were not taking advantage of these tools.”

Through the survey and conversations with users, the team identified why researchers were stuck on scp.

Users were willing to wait out long transfers partly because they were more familiar with scp and partly because some multiple-stream methods require a setup process that can take several days, which creates a high barrier to entry.

“Because of a high level of cybersecurity at the OLCF and other computing facilities, transferring files requires additional steps that seem inconvenient and overly complicated to users,” Parete-Koon said. “For example, to use GridFTP, OLCF users need an Open Science Grid Certificate, which is like a passport that users ‘own’ and are responsible for maintaining.”

Despite GridFTP's improved transfer capabilities, only 37 Open Science Grid Certificates had been activated on OLCF systems as of November 2013, among 157 projects with about 500 users.

“We tested how fast these tools worked and asked ourselves if each tool would be convenient enough so people would go through the extra setup work,” Parete-Koon said.

“The results in our paper show that better transfer speed is worth it.”

Open Science Grid certificates also let users launch an automated workflow from a batch script.

“The ability to seamlessly integrate data transfer into the scientific workflow with a batch script is where the benefit of the grid certificate authentication becomes even more apparent,” Nam said. “The certificates allow users to have uninterrupted productivity even when they’re not sitting in front of a computer screen.”

Based on the performance test results for bbcp, GridFTP, and Globus Online, the OLCF recommends that users with large data transfers apply for the certificate and use the dedicated data transfer nodes.

In an effort to help users navigate the complicated setup process, Parete-Koon extended the research from the paper to establish documentation and user support.

“Suzanne [Parete-Koon] presented this information during a user conference call to a packed audience, which shows that users are interested in this topic and are looking for solutions,” Nam said.

Parete-Koon shared a sample automated workflow that uses three scripts: the first to schedule a data transfer node, the second to launch an application, and the third to transfer files to longer-term data storage or a remote site for analysis. She is now working on a specialized data transfer user's guide.
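One way such a three-script chain could be wired together is sketched below, assuming a PBS/Torque-style scheduler in which `qsub -W depend=afterok:<jobid>` holds a job until the previous one finishes successfully. The script names are hypothetical placeholders, and this is not the OLCF's published sample workflow. The `submit` parameter lets the chain be tested without a real scheduler.

```python
import subprocess
from typing import Callable, Optional

# Hypothetical script names standing in for the three steps described above.
WORKFLOW = [
    "schedule_dtn.pbs",   # step 1: schedule a data transfer node
    "run_app.pbs",        # step 2: launch the application
    "transfer_out.pbs",   # step 3: move results to storage or a remote site
]


def qsub_command(script: str, after: Optional[str] = None) -> list[str]:
    """Build a PBS/Torque-style qsub command, optionally depending on a prior job."""
    cmd = ["qsub"]
    if after is not None:
        # afterok: start only if the previous job exited successfully.
        cmd += ["-W", f"depend=afterok:{after}"]
    cmd.append(script)
    return cmd


def run_qsub(cmd: list[str]) -> str:
    """Submit a job and return the job ID that qsub prints on stdout."""
    result = subprocess.run(cmd, check=True, capture_output=True, text=True)
    return result.stdout.strip()


def submit_chain(scripts: list[str],
                 submit: Callable[[list[str]], str] = run_qsub) -> list[str]:
    """Submit scripts in order, each one depending on the job before it."""
    job_ids: list[str] = []
    previous: Optional[str] = None
    for script in scripts:
        previous = submit(qsub_command(script, previous))
        job_ids.append(previous)
    return job_ids
```

Chaining with `afterok` means the transfer step never starts on partial output, and none of the steps needs a user at the terminal, which is the kind of unattended workflow Nam describes.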

“After the conference call, one user thanked us because he was able to transfer 2 years of data—almost 19 terabytes—in five streams with an average rate of 1,290 megabits per second,” Nam said. “That is about five times faster than an optimized scp transfer.” —Katie Elyce Jones
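The figures in Nam's quote can be turned into wall-clock estimates with a short calculation. It assumes decimal units (1 terabyte = 10^12 bytes, 1 megabit = 10^6 bits), a rate sustained for the whole transfer, and 19 terabytes as an upper bound for "almost 19 terabytes." The scp rate is simply the reported rate divided by five, following the "about five times faster" comparison.

```python
def transfer_hours(terabytes: float, megabits_per_second: float) -> float:
    """Hours needed to move `terabytes` (decimal, 10**12 bytes) at a sustained rate."""
    bits = terabytes * 1e12 * 8
    seconds = bits / (megabits_per_second * 1e6)
    return seconds / 3600


multi_stream = transfer_hours(19, 1290)      # five-stream rate from the quote
scp_estimate = transfer_hours(19, 1290 / 5)  # "about five times" slower
print(f"five streams: {multi_stream:.1f} h")
print(f"scp estimate: {scp_estimate:.1f} h ({scp_estimate / 24:.1f} days)")
```

Under these assumptions the five-stream transfer takes about 33 hours, while the scp estimate stretches to roughly 164 hours, close to a week.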