ORNL and the University of Tennessee’s Joint Institute for Computational Sciences (JICS) hosted a workshop, “Electronic Structure Calculation Methods on Accelerators,” at ORNL February 5-8 to bring together researchers, computational scientists, and industry developers.

The 80 participants attended presentations and training sessions on the advances and opportunities that accelerators bring to high-performance computing (HPC). Accelerators are dedicated, massively parallel hardware that can perform certain specialized functions faster than central processing units. The workshop’s purpose was to address the challenge of using innovative accelerator hardware and multicore chips in electronic-structure-theory research, which investigates the atomic structure and electronic properties of materials.

“This workshop is important because we are in the middle of an architectural revolution to a new type of HPC machine,” said Jacek Jakowski, computational scientist at JICS. “Programming hardware accelerators is nontrivial, and learning new programming models is a significant investment for the science community. A well-defined standard will help us to make the software sustainable for future changes.”

Several high-performance computers are being upgraded to include accelerators such as graphics processing units (GPUs). For example, Jaguar, an ORNL supercomputer, recently received 960 NVIDIA Tesla 20-series GPUs, an upgrade that raised its performance from 2.3 to 3.3 petaflops. Additional upgrades, scheduled for completion in fall 2012, will enable a peak performance between 10 and 20 petaflops as Jaguar transitions from a Cray XT5 machine into an XK6 and is renamed Titan. This architectural revolution also requires user communities to create and optimize the software, algorithms, and theoretical models that the new hardware demands.

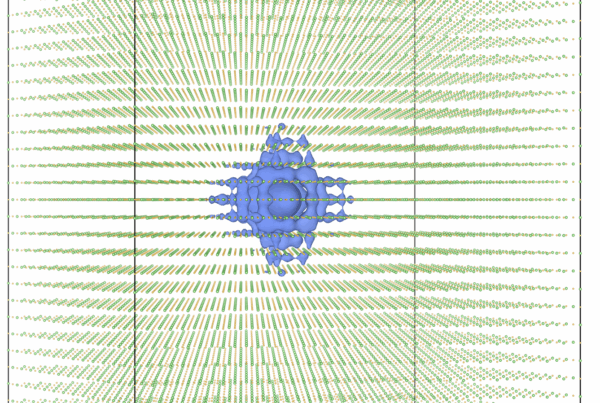

The February workshop featured 22 speakers lecturing on the innovative hardware and the new hardware-accelerated electronic-structure codes. On the first day, staff from ORNL and JICS and industrial partners such as NVIDIA, PGI, Cray Inc., CAPS enterprise, and Intel Corporation spoke about programming accelerators and about compilers. At an evening poster session, NVIDIA awarded a Tesla C2075 card to Sen Ma, a University of Arkansas graduate student, for the winning poster, “Hybrid Scale-Invariant Feature Transform (SIFT) Implementation on CPU/GPU.” On the second day, computational scientists and academic researchers from Argonne National Laboratory, Pacific Northwest National Laboratory, University of North Texas, Pennsylvania State University, University of Florida, Stanford University, and Ames National Laboratory spoke about electronic-structure applications including ACES III, NWChem, GAMESS, Quantum ESPRESSO, QMCPACK, FlapW, and TeraChem. On the final day, software authors from the Commissariat à l’Énergie Atomique led tutorials and hands-on training sessions on BigDFT, a GPU-based code for electronic-structure calculations. A final summary session was also recorded and will be made available on the Oak Ridge Leadership Computing Facility website.

Erik Deumens, director of Research Computing at the University of Florida and guest lecturer, said, “At the very end of the workshop, there was a consensus that there is a new opportunity and confluence. The basic issues of how to write efficient code for GPUs have a very large overlap with those of how to make use of exascale computer systems, an important goal of the Department of Energy. In both cases no simple trick will suffice; some collaboration of computer science, compiler technology, and development of new domain theories will be necessary to fully exploit the potential of GPUs as well as exascale systems.”

By Sandra Allen McLean