Programming Methods for Summit’s Multi-GPU Nodes

Programming Methods for Summit’s Multi-GPU Nodes (Presented by Jeff Larkin & Steve Abbott – NVIDIA)

Oak Ridge National Laboratory

Building 5200, TN Rooms A,B

November 5, 2018

9:00 AM – 12:00 PM (ET)

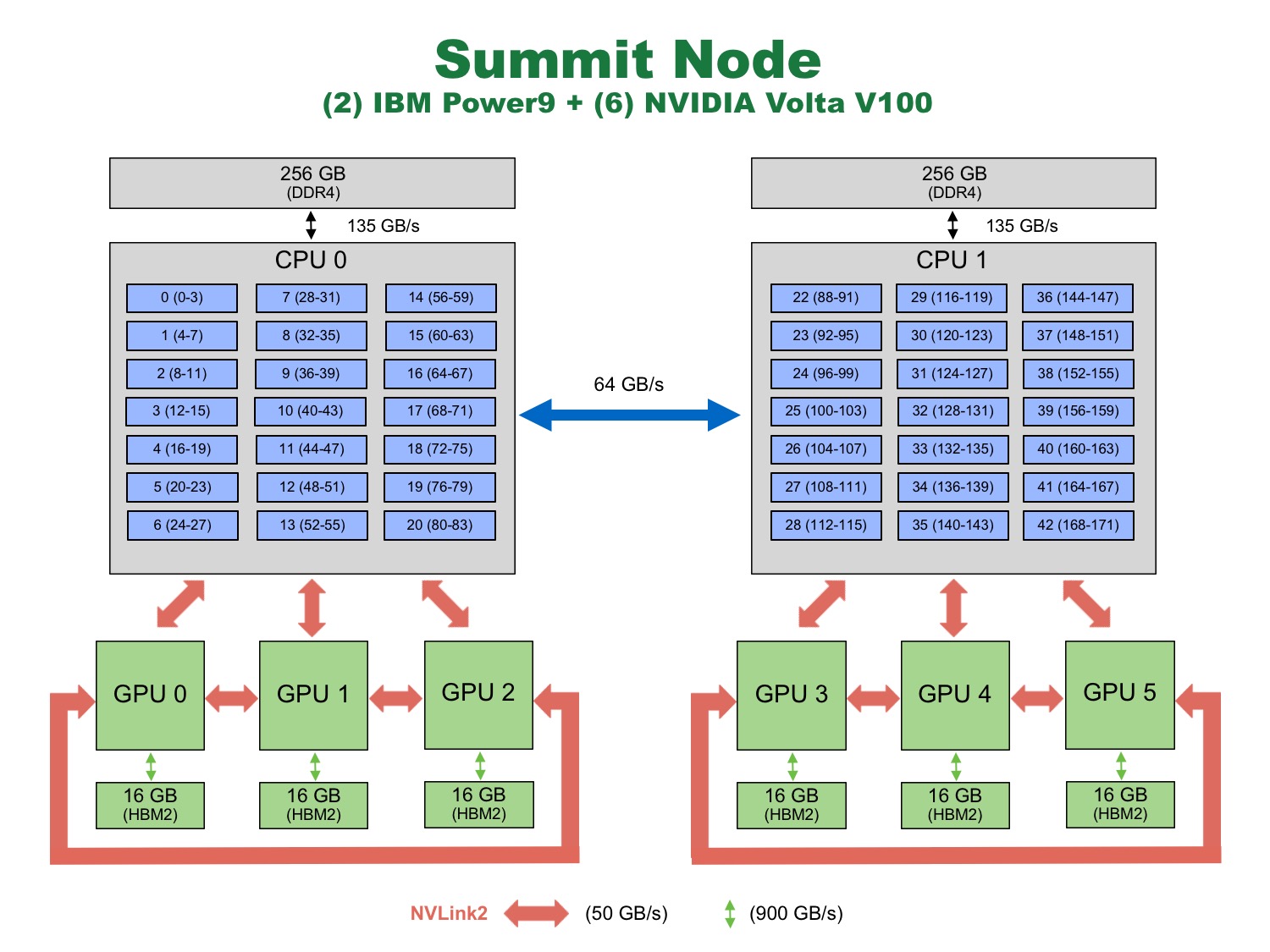

The Oak Ridge Leadership Computing Facility (OLCF) will host a workshop on programming methods for targeting Summit’s multi-GPU nodes. During the event, local NVIDIA representatives – Jeff Larkin and Steve Abbott – will demonstrate

- how using jsrun (job launcher on Summit) can make the transition from a single-GPU-per-node system (e.g., Titan) to a multi-GPU-per-node system (e.g., Summit) simpler,

- how this simple approach can lead to portability issues with other multi-GPU-per-node systems (and how to resolve such issues),

- and how it’s possible to fully take advantage of Summit’s nodes with CUDA-aware MPI and GPU Direct.

If you would like to attend on-site or remotely, please register using the form below. If you have any questions, please contact Tom Papatheodore ([email protected]).

[tw-tabs tab1=”Registration” tab2=”Remote Participation” tab3=”Presentation“]

[tw-tab]

[/tw-tab]

[tw-tab]

Please remember to register even if you are attending remotely.

To join the Meeting:

https://bluejeans.com/951603993

To join via Room System:

Video Conferencing System: bjn.vc -or-199.48.152.152

Meeting ID : 951603993

To join via phone:

1) Dial:

+1.408.740.7256 (US (San Jose))

+1.888.240.2560 (US Toll Free)

+1.408.317.9253 (US (Primary, San Jose))

(see all numbers – https://bluejeans.com/numbers)

2) Enter Conference ID : 951603993

[tw-tab]

Programming Methods for Summit’s Multi-GPU Nodes (Jeff Larkin & Steve Abbott, NVIDIA): slides, recording (part 1), recording (part 2)

These slides and the recording can also be found on the Training Archive Page.

[/tw-tab]

[/tw-tabs]