A team of researchers from the Georgia Institute of Technology conducted the largest-ever computational fluid dynamics, or CFD, simulation of high-speed compressible fluid flows. Using the Frontier supercomputer at the Department of Energy’s Oak Ridge National Laboratory, the team applied a new computational technique called information geometric regularization, or IGR. They combined that technique with a unified CPU-GPU memory approach — optimizing memory usage between traditional computer processors (CPUs) and graphics processors (GPUs) — to attain new levels of performance in CFD.

The Georgia Tech team simulated an array of 1,500 Mach 14 rocket engines and their interacting exhaust plumes — modeling airflow at 14 times the speed of sound, where gases behave violently and unpredictably due to extreme pressure and temperature shifts. This simulation achieved a resolution of over 100 trillion grid points, thereby surpassing former records of 10 trillion grid points for a compressible CFD simulation on previous CPU-only supercomputers and 35 trillion for the quite different challenge of incompressible fluid flow simulated on the GPU-equipped Frontier by Professor P.K. Yeung’s group, which is also at Georgia Tech.

In addition to the massive increase in resolution and scale, the methodology devised by the Georgia Tech researchers sped up the time to solution four times faster and increased energy efficiency by 5.7 times over current state-of-the-art numerical methods.

“Using this technique, we can simulate much larger problems than before. And not only can they be larger, but our current results suggest they are more accurate,” said Spencer Bryngelson, an assistant professor in Georgia Tech’s College of Computing who led the project with Georgia Tech colleague (and office neighbor) Florian Schäfer, who’s also an assistant professor in computing. “In contrast, P.K.’s incompressible flow simulations had quite different constraints than our compressible ones, and they were limited by costly network communication across all of Frontier. That’s a hard nut to crack. His simulations are, frankly, remarkable.”

The key to IGR’s ability to increase the scope and performance of CFD simulations is that it uses an entirely new strategy to model the behavior of shocks. Flowing fluid can sometimes form “shocks” when its flow speed exceeds the local sound speed. The shocks appear as discontinuous changes in fluid properties, such as pressure, temperature, and density. These discontinuities must be accounted for in CFD simulations to avoid numerical instabilities and to properly simulate the fluid flow.

Computational scientists have long attacked this issue by using various shock-capturing methods, such as introducing artificial viscosity or using numerical methods that average over the discontinuity to smooth out its effect on the simulation. IGR, developed by Schäfer and his former student Ruijia Cao, replaces discontinuities with near discontinuities that still preserve the main flow features, such as post-shock behavior.

“With IGR, one changes the underlying equations so these waves are living in a slightly different space. Just as they’re about to collide and form a shock, they instead slightly bend away from one another and avoid collision. So, they do not touch at the grid level. But the behavior after the bending matches the true shock behavior. The solutions we see are the solutions we expect,” Bryngelson said.

CFD simulations are often used to predict the behaviors of new aircraft designs, showing the potential interactions of proposed rockets and airplanes — and their engines — with the atmosphere. In this CFD study, Bryngelson and his team used their open-source Multicomponent Flow Code (available under the MIT license on GitHub) to examine rocket designs that feature clusters of engines, such as the 33 Raptor engines in the booster stage of SpaceX’s Starship. Predicting how all those engines’ exhaust plumes may interact upon launch will help rocket designers avoid mishaps — especially with the scale and speed afforded by the Georgia Tech team’s method.

“As the shock waves come down and interact with these different rocket engines, there’s back-heating because the shocks reflect partially up toward the bottom of the booster and heat it,” Bryngelson said. “So, CFD simulations can provide a predictive capability of how hot the shocks are going to make it and where they’re going to heat it. This can give rocket companies quick turnover for design, perhaps even preventing heat-induced explosions.”

The memory optimizations that IGR enables are a key factor that allowed the Georgia Tech team to scale up their simulation to record-breaking heights. These optimizations resulted in far less memory usage per grid point. In comparison, previous Multicomponent Flow Code versions, which leveraged an optimized implementation of the nonlinear numerical methods traditionally used for flows with shocks, required about 20 times more memory per grid point.

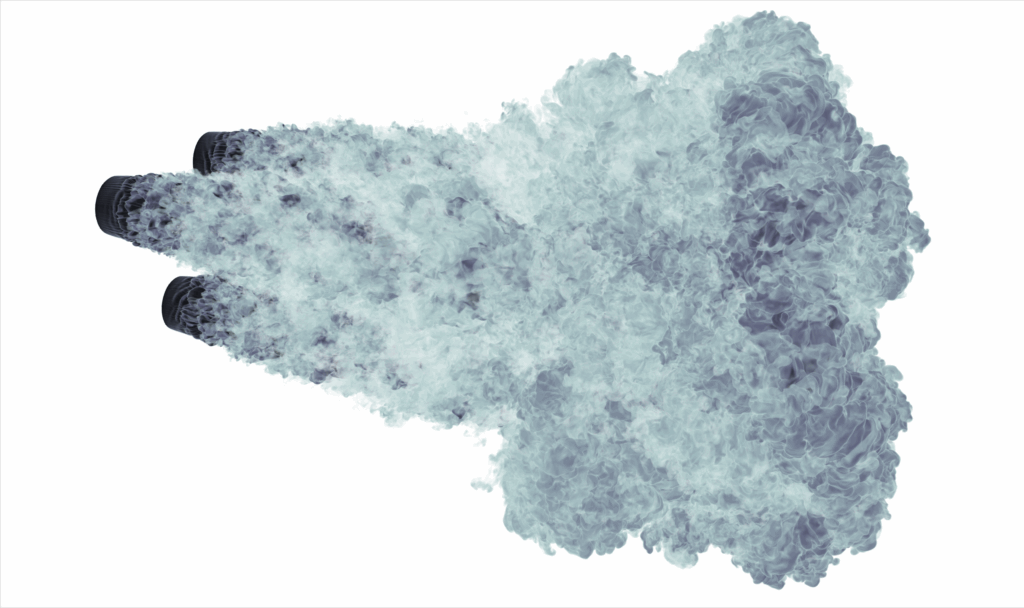

CFD simulations are often used to predict the behaviors of new aircraft designs. In this CFD study, the Georgia Tech team used their open-source Multicomponent Flow Code to examine rocket designs that feature clusters of engines, such as the 33 Raptor engines in the booster stage of SpaceX’s Starship. Predicting how all those engines’ exhaust plumes may interact upon launch will help rocket designers avoid mishaps. Image: Spencer Bryngelson, Georgia Institute of Technology

The researchers also employed a unified CPU-GPU shared-memory approach for their calculations. Moving data back and forth between CPUs and GPUs often causes a bottleneck, thereby leading scientific codes to keep all the data in the GPU memory. But that approach limits the simulation size to the amount of GPU memory.

“We use the node’s CPU to hold memory until we need it later. We store so many grid points on each GPU that it takes more time to crunch all the numbers. So, with the tight CPU-GPU on-node connection, it makes sense to give the CPU information to store as well,” Bryngelson said. “We can efficiently use all of the CPU and GPU memory on Frontier to further double the number of points we can accommodate while only sustaining a small performance hit.”

Bryngelson and his team also investigated another optimization for IGR — the use of mixed precision. Using the Swiss National Supercomputing Centre’s Alps supercomputer for their proof of concept, they combined half-precision (16 bit) floating point numbers for holding the computational results in device RAM and separate, single-precision (32 bit) floating point numbers for the intermediate computations whose results populate those registers. On Frontier, this approach doubles the number of potential grid points to over 200 trillion.

“The viability of holding large arrays of data in half precision is not well explored in the CFD community. But the limited literature on this, and our results, suggest it is sufficient for many typical use cases,” Bryngelson said.

Reuben Budiardja, group leader of Advanced Computing for Nuclear, Particle and Astrophysics, foresees this mixed-precision technique becoming more common on Frontier once the necessary compiler support is in place.

“In principle, this is something that we can also do on Frontier. We believe in the near future we’ll have compilers that can directly support lower-precision operations with programming models such as OpenMP and OpenACC, which is used in this particular simulation,” Budiardja said. “The key thing will be identifying what variables you can store in lower precision so that there are no accuracy or fidelity issues. If you can identify that, then that’s something you can leverage.”

But once a researcher has access to 100 trillion grid points — or even 200 trillion — how will their work benefit from it?

“For fluid dynamics problems, one almost always desires access to larger, more realistic simulations. For example, we need many grid points to represent turbulence. While 100 trillion won’t enable the simulation of a cruising Boeing 747 with perfect detail, it does enable a flurry of very large simulations. We start butting against grand-challenge problems that people envisioned some years ago, when exascale was a buzzword, not the capability of a computer you could log in to. Compressible CFD simulations with chemical reactions and multiple material phases — among other such things — can all benefit,” Bryngelson said.

The Frontier supercomputer is managed by the Oak Ridge Leadership Computing Facility, a DOE Office of Science user facility located at ORNL.

UT-Battelle manages ORNL for DOE’s Office of Science, the single largest supporter of basic research in the physical sciences in the United States. DOE’s Office of Science is working to address some of the most pressing challenges of our time. For more information, visit energy.gov/science.