The “Pioneering Frontier” series features stories profiling the many talented ORNL employees behind the construction and operation of the OLCF’s incoming exascale supercomputer, Frontier. The HPE Cray system was delivered in 2021, with full user operations expected in 2022.

Deploying the nation’s first exascale supercomputer is no easy feat.

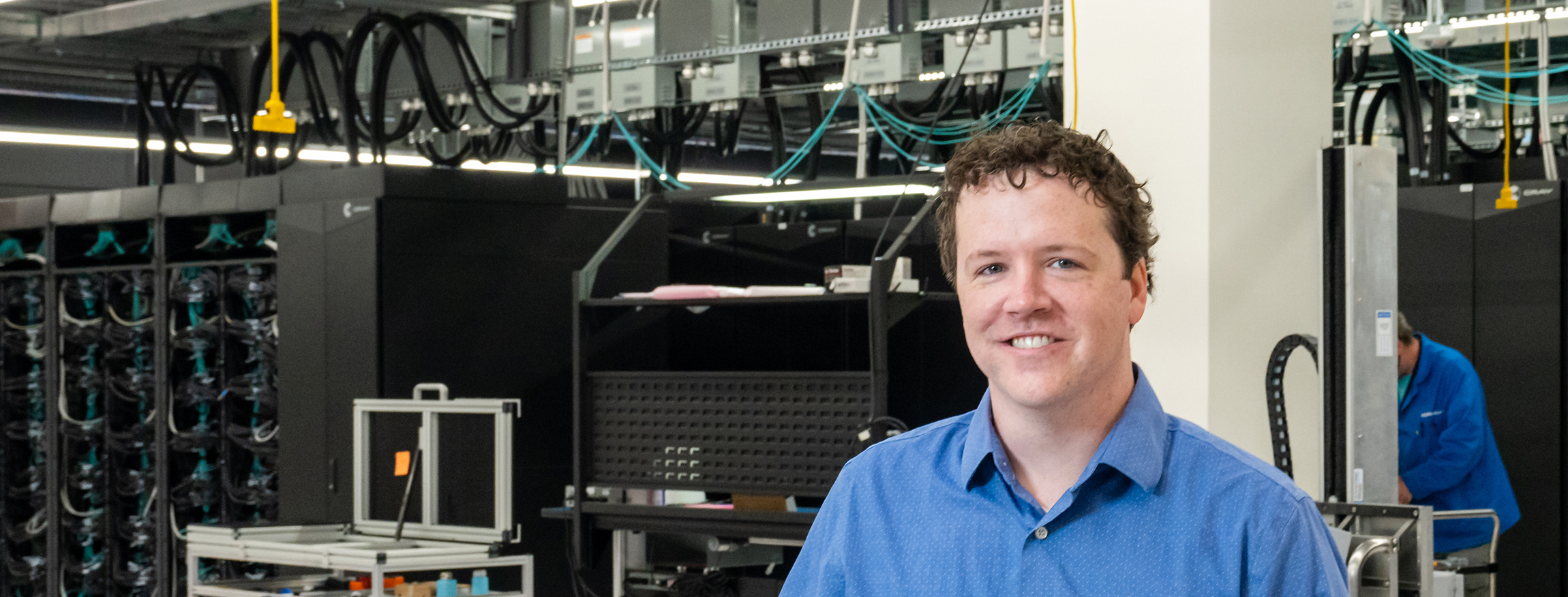

It’s an effort that Matt Ezell, a high-performance computing (HPC) systems engineer in the HPC Scalable Systems Group at the National Center for Computational Sciences (NCCS), knows well. As the system lead for the Oak Ridge Leadership Computing Facility’s (OLCF’s) HPE Cray EX Frontier, Ezell has been meeting with his team on a near-daily basis, in coordination with the system vendor, HPE, to set up and optimize the system.

Frontier promises to be at least a 1.5-exaflop system, which means it will exceed 1.5 quintillion calculations per second (more than 10^18 operations per second) and will accelerate the process of scientific discovery in fields such as engineering, physics, climate, and nuclear energy. The system is slated to be operational in 2022.
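To put the exaflop figure in perspective, the arithmetic can be sketched in a few lines of Python. This is only an illustration: the job size and the petaflop comparison rate are made-up values chosen for the example, not figures from the OLCF, and the helper name `seconds_to_finish` is hypothetical.

```python
# Illustrative arithmetic only; the job size below is a hypothetical example.
EXA = 10**18   # one exaflop = 10^18 floating-point operations per second
PETA = 10**15  # one petaflop, used here only for comparison

# The "at least 1.5 exaflops" figure quoted for Frontier.
frontier_rate = 1.5 * EXA


def seconds_to_finish(total_operations, rate=frontier_rate):
    """Return the time needed to complete a fixed number of operations
    at a sustained rate given in operations per second."""
    return total_operations / rate


# Hypothetical job that needs 10^21 floating-point operations.
job_ops = 10**21

minutes_on_frontier = seconds_to_finish(job_ops) / 60
days_at_one_petaflop = seconds_to_finish(job_ops, rate=PETA) / 86400

print(f"About {minutes_on_frontier:.0f} minutes at 1.5 exaflops")
print(f"About {days_at_one_petaflop:.1f} days at 1 petaflop")
```

Real applications rarely sustain a machine's peak rate, so these numbers are best read as a rough upper bound on the speedup rather than a prediction.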

Matt Ezell is the system lead for the OLCF’s HPE Cray EX Frontier supercomputer. Image Credit: Carlos Jones, ORNL

Ezell has been the system lead for Frontier since 2018, when the US Department of Energy (DOE) announced its intention to procure three exascale supercomputers. Having served as one of the system administrators for the IBM AC922 Summit supercomputer at DOE’s Oak Ridge National Laboratory (ORNL), Ezell had the experience necessary to lead the team that’s standing up the system and confronting the hardware challenges that arise in a brand-new, large-scale architecture. Once all the hardware for Frontier had arrived late last year, his team began diligently working to ensure that Frontier will run smoothly and efficiently when it comes online.

“Over the past several months, it’s been a boots-on-the-ground effort,” Ezell said. “I’ve been working with the hardware team to get everything configured and installed. We are identifying and resolving any hardware snags, and we are also working through scaling challenges that are unique to world-class supercomputers.”

Ezell is a lead within the CORAL-2 (Collaboration of Oak Ridge, Argonne, and Livermore) System Software Working Group between ORNL, HPE, and Lawrence Livermore National Laboratory to ready DOE’s upcoming Frontier and El Capitan exascale systems. As the system configuration lead for Frontier, he regularly participates in meetings with Frontier’s vendors, reviews and resolves any hardware snags, and delegates tasks to his team.

“At the end of the day, the project team is going to ask me specifically how things are going,” Ezell said. “But that doesn’t mean there’s not a whole host of people working on this. It takes an army—a village—to raise a system.”

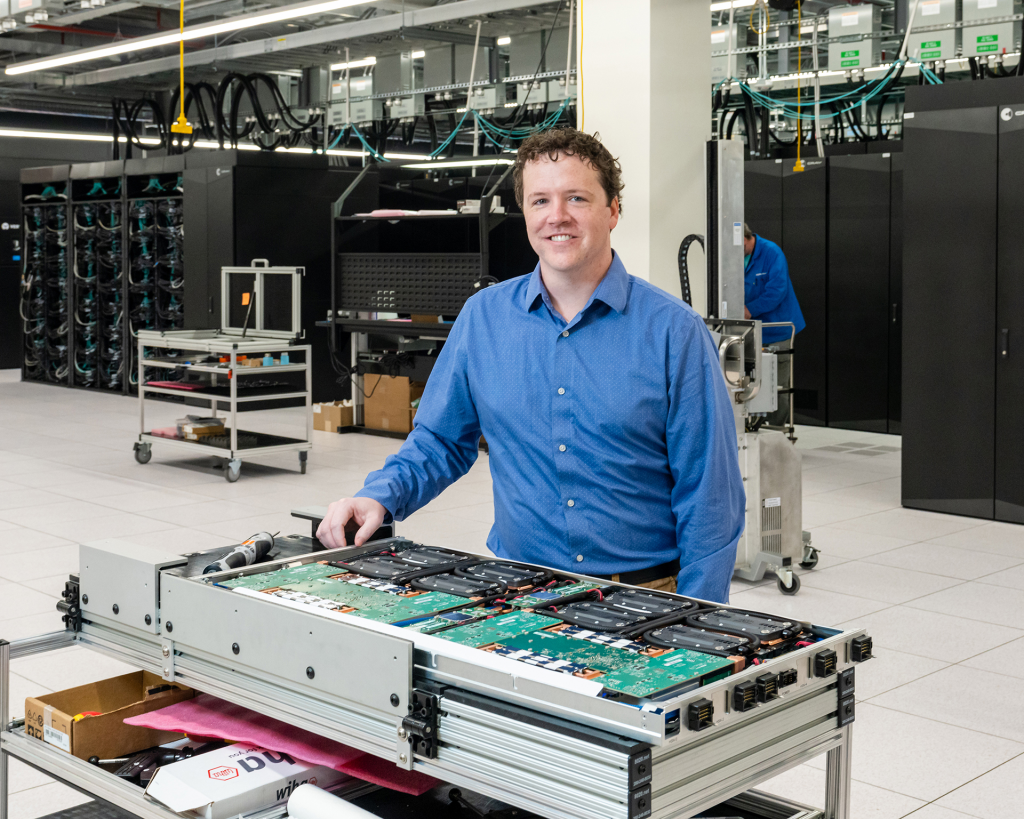

Matt Ezell discusses Frontier’s architecture with HPE staff members. Image Credit: Carlos Jones, ORNL

Before the hardware for Frontier began arriving at ORNL, Ezell worked with the HPE Performance Cluster Manager (HPCM) team, which is in charge of the system management software. Ezell’s team shared best practices learned over the past decade with the HPCM group so that the group could improve its products and make them more desirable for other customers as well.

Now that the system is on the floor, Ezell’s team has been working closely with the HPE developers who designed the hardware and software.

“When we run into issues, we call in the experts and work through these problems with them,” Ezell said.

Ezell works closely with Paul Peltz in the HPC Scalable Systems Group, Prashant Manikal of HPE, and Joe Glenski, a senior distinguished technologist at HPE who leads a benchmarking group for Frontier. The benchmarking group’s goal is to execute a broad, complete set of benchmarks to demonstrate that the system meets its contractual requirements. It also optimizes the High-Performance Linpack benchmark, or HPL, on the system. HPL is used to measure a computer’s floating-point performance.

“Because HPL is such a demanding application for the system, the benchmarking group is often the first to discover opportunities for optimization,” Ezell said.

Determining which challenges are hardware related and which ones are software related is one of the team’s biggest responsibilities. Because Frontier is a “serial number zero” system, Ezell’s group is sometimes optimizing and fine-tuning nearly from scratch. But the work, he says, is worth the effort.

“It’s really interesting to go through the troubleshooting process, and when you see something that wasn’t working before that you fixed, it’s really rewarding,” Ezell said.

Although the system has taken a great amount of effort to deploy, Ezell looks forward to Frontier’s operation and the scientific achievements that will result from its use. In 2018, Ezell entered and won the contest to name the system. The name “Frontier,” he said, represents the future of scientific discovery and a system that operates at the edge of what is currently possible.

“It was a big honor to have that name be selected, and now it’s neat to see this machine coming to life,” Ezell said. “It’s not just an idea anymore. It’s actually here, and that’s really exciting.”

Matt Ezell in the Exploratory Visualization Environment for Research in Science and Technology (EVEREST) at ORNL. Image Credit: Rachel McDowell, ORNL

After Ezell’s team has completed their work, the system will be handed over to Verónica Melesse Vergara, group leader of the System Acceptance and User Environment Group at the NCCS, and her team. This group will perform rigorous testing to gauge the functionality, stability, and efficiency of Frontier.

The OLCF aims to deliver Frontier to early science users in the second half of 2022.

UT-Battelle LLC manages Oak Ridge National Laboratory for DOE’s Office of Science, the single largest supporter of basic research in the physical sciences in the United States. DOE’s Office of Science is working to address some of the most pressing challenges of our time. For more information, visit https://energy.gov/science.