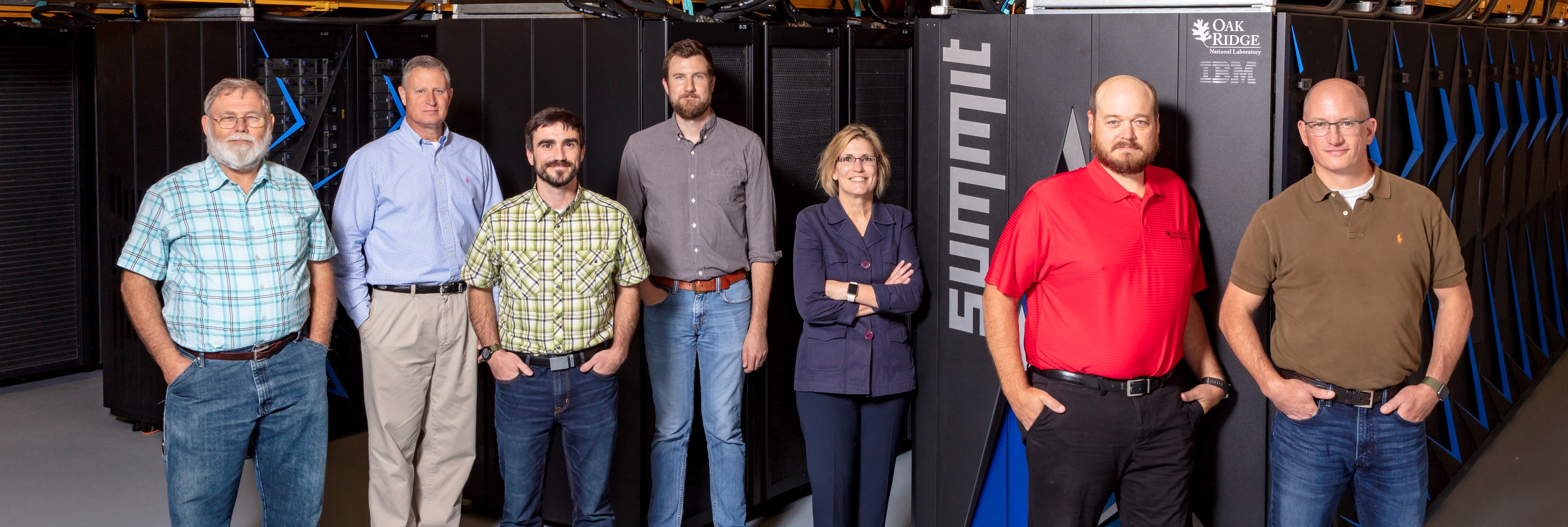

The project team for the design and construction of the Summit supercomputing facility at the US Department of Energy’s (DOE’s) Oak Ridge National Laboratory (ORNL) received the Tennessee Chamber of Commerce and Industry’s (TCCI’s) 2018 Energy Excellence Award.

Operations Manager Stephen McNally of the Oak Ridge Leadership Computing Facility (OLCF), a DOE Office of Science User Facility, accepted the award on the team’s behalf at the Tennessee Chamber’s Environment and Energy Awards Conference on Tuesday, October 30.

Team members were recognized for implementing significant energy improvements in the 11,000-square-foot, energy-efficient data center that houses the world’s most powerful supercomputer.

“It’s great for the project to be recognized as being the most efficient high-performance computing (HPC) machine and facility out there,” said Bart Hammontree, project manager for the facility design and construction.

Award recipients include Hammontree; James (Paul) Abston, data center manager; David Grant, HPC mechanical engineer; Rick Griffin, electrical engineering specialist; Bryce Hudey, complex facility manager; Melissa Lapsa, ORNL Building Envelope and Urban Systems group leader; and Jim Rogers, ORNL National Center for Computational Sciences director of computing and facilities.

“We are honored to recognize UT-Battelle and Oak Ridge National Laboratory for their leading-edge energy efficiency solutions in designing the world’s fastest high-performance supercomputing systems,” TCCI Vice President of Environment and Energy Charles Schneider said in a statement.

Capable of 200 quadrillion calculations per second, Summit ranks No. 1 on the June and November 2018 Top500 lists of the most powerful supercomputers. Summit also ranked No. 3 on the June and November Green500 lists, which rank systems by energy efficiency: the number of calculations a computer can perform for a given amount of energy. Summit is over 100 times faster than higher-ranking computers on the Green500, underscoring its leap in energy efficiency for such a large system.

One metric of data center efficiency is the power usage effectiveness (PUE), the ratio of the total energy a facility consumes to the energy delivered to the computing equipment; the overhead above 1.0 includes the energy required to remove waste heat from the computer. The lower the PUE value, the less infrastructure power is required to run the supercomputer. The PUE measurement for the Summit facility during the system acceptance period was just 1.14, or 50 percent more efficient than its OLCF predecessor: the 27-petaflop Titan, the world’s fastest supercomputer in 2012. As Summit enters full production, facility engineers project the PUE will drop further, likely settling at a sustained average below 1.10.
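To make the metric concrete: PUE is conventionally computed as total facility power divided by the power drawn by the IT equipment alone, so a value of 1.14 means 0.14 units of infrastructure power for every unit of computing power. The sketch below is only an illustration. The function names and the 10,000 kW IT load are hypothetical, and only the 1.14 and 1.10 values come from the article.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power usage effectiveness: total facility power / IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    return total_facility_kw / it_equipment_kw


def overhead_fraction(pue_value: float) -> float:
    """Infrastructure power (cooling, distribution) per unit of IT power."""
    return pue_value - 1.0


# Hypothetical IT load for illustration; not a figure from the article.
it_kw = 10_000.0

# PUE values quoted in the article: acceptance period and projected production.
for label, value in [("acceptance", 1.14), ("projected", 1.10)]:
    overhead_kw = overhead_fraction(value) * it_kw
    print(f"{label}: PUE {value:.2f} -> {overhead_kw:.0f} kW of overhead")
```

For a fixed IT load, lowering the PUE from 1.14 to 1.10 reduces the infrastructure overhead in direct proportion, which is why even small changes in the ratio matter at this scale.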

Compared to Titan, Summit produces about eight times the processing power while consuming only 18 percent more electrical power. Combined with a very efficient cooling system, this significant increase in computing power with only a slight increase in electrical power consumption makes Summit the fastest and one of the most efficient supercomputers ever.
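The efficiency gain implied by those two figures can be worked out directly. This is a minimal sketch that treats “about eight times” and “18 percent more” as exact ratios, which is an assumption; the function name is hypothetical.

```python
def relative_performance_per_watt(performance_ratio: float, power_ratio: float) -> float:
    """Ratio of new to old performance-per-watt, given ratios of performance and power."""
    if power_ratio <= 0:
        raise ValueError("power ratio must be positive")
    return performance_ratio / power_ratio


# Figures from the article, treated as exact for illustration:
# about 8x the processing power at 18 percent more electrical power.
gain = relative_performance_per_watt(8.0, 1.18)
print(f"Summit vs. Titan performance per watt: about {gain:.1f}x")
```

Under those assumptions, Summit delivers roughly 6.8 times Titan’s processing power per unit of electrical power.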

“It comes down to economics,” Hammontree said. “If they’re not spending more on power, they can spend more on supporting the science that the supercomputer makes possible.”

The facility features an innovative, warm-water cooling system that greatly reduces the use of chilled water; epoxy-coated pumps that increase pumping efficiency; oversized polypropylene piping that eliminates the need for costly stainless-steel piping; a very efficient electrical distribution system that also provides increased safety and reliability; and a variable refrigerant flow system that filters and dehumidifies the room air while removing any residual heat released into the room from IT equipment.

Each Summit computer cabinet uses a combination of two cooling technologies. First, water flows through cooling plates on each node, transferring waste heat from the CPUs and GPUs to water. Second, residual heat from other components on the node is captured by rear-door heat exchangers that also transfer waste heat to water. This cooling design removes nearly all the waste heat, so virtually no heat is rejected into the facility. The waste heat is then transferred to a primary cooling loop using a series of large plate-and-frame heat exchangers and evaporative cooling towers.

“We’re using roughly half the energy with cooling towers instead of chillers,” Hammontree said. “That amounts to about $1 million per year in savings.”

To increase the efficiency of the electrical distribution system, large oil-filled transformers that are about 99.5 percent efficient are located next to the supercomputer, minimizing line losses (energy waste) and associated costs. Further, to improve electrical safety, Griffin incorporated very fast-acting fuses between the electrical branch breakers that supply each computer cabinet and the power distribution units in each cabinet.

“The Summit facility speaks to DOE’s mission to be energy efficient, and hopefully it can serve as a best-in-practice facility,” Grant said.

For the facility project team, Summit is ready and equipped for tough science problems. Now they’re on to the next “Frontier” in energy-efficient facilities for exascale HPC.

ORNL is managed by UT-Battelle, LLC for the Department of Energy’s Office of Science, the single largest supporter of basic research in the physical sciences in the United States. DOE’s Office of Science is working to address some of the most pressing challenges of our time. For more information, please visit https://science.energy.gov/.