Visiting teams representing academia, industry, and government participated in this year’s OLCF GPU Hackathon, held the week of October 22 in downtown Knoxville. Some teams used the time to prepare codes for the IBM AC922 Summit system.

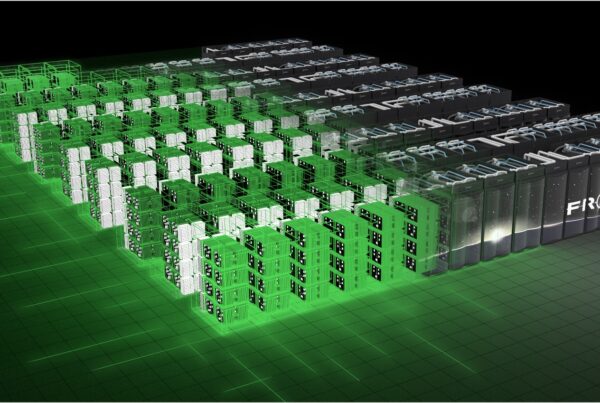

In less than 10 years, GPUs have become a proven engine of scientific computing due to their ability to perform many calculations simultaneously. According to a recent Nature article, of the 100 most powerful supercomputers in the world, 20 now incorporate GPUs, including the Oak Ridge Leadership Computing Facility’s (OLCF’s) Titan and Summit systems.

While many research teams have gone all in on GPUs, others are just starting to explore whether the technology can help advance their science. Groups representing both ends of this spectrum—and everything in between—traveled to Knoxville the week of October 22 to spend 5 days programming their applications for GPU architectures with assistance from experts at the OLCF’s fifth annual GPU Hackathon.

The event, headquartered at the Crowne Plaza hotel in downtown Knoxville, featured 10 teams and more than 80 participants representing academia, industry, and government. Hackathon teams in attendance in 2018 included groups from Clemson University, Lawrence Berkeley National Laboratory (LBNL), the software company NUMECA-USA, Carnegie Mellon University, and Tennessee Tech University.

This year’s OLCF GPU Hackathon marked the first time teams had access to a system with the same node-level architecture as Summit. For the duration of the week, teams developed and tested their codes on an 18-node auxiliary system, called Ascent, composed of the latest IBM Power CPUs and NVIDIA Volta GPUs. Access to such an architecture allowed teams to experiment with state-of-the-art hardware and tackle advanced programming issues related to multi-GPU programming.

“We chose teams with a clear plan on how their applications can be parallelized and mapped to GPUs,” said Tom Papatheodore, the event organizer and a member of the OLCF User Assistance and Outreach Group. “Some of the teams plan to run on Summit in the near future, so they took this opportunity to start preparing their codes.”

The OLCF is a US Department of Energy (DOE) Office of Science User Facility located at Oak Ridge National Laboratory (ORNL).

Because adapting scientific applications for hybrid supercomputers can be a tricky process for non-experts, GPU hackathons bring scientists, programmers, and mentors together for an intense week of teamwork and collaboration. At the 2018 hackathon, experts from NVIDIA, IBM, PGI, ORNL, and universities, such as the University of Delaware, served as mentors to participants.

Maxence Thévenet, a postdoctoral researcher at LBNL, said the combination of singular purpose and time with team members made the hackathon worthwhile. “Being able to collaborate and focus on this work with the help of our mentors and with access to a machine that shares the same architecture as Summit was extremely helpful,” Thévenet said.

As an application developer for the electromagnetic particle-in-cell code WarpX, Thévenet is contributing to an effort under DOE’s Exascale Computing Project led by Jean-Luc Vay at LBNL to advance simulations of laser-plasma interactions to exascale capabilities. During the hackathon, his team successfully ported WarpX to run on multiple GPUs and optimized memory management, which contributed to a threefold application speedup compared to a previous implementation on LBNL’s Cori supercomputer.

“When we’re using GPUs, our simulation runs 11 times faster than if we’re only using CPUs,” Thévenet said. “We’re really happy about that.”

ORNL research scientist Shih-Chieh Kao said the quality time between domain scientists and software engineers and the positive peer pressure that permeates the hackathon atmosphere made for a productive week. Kao’s team, funded by the US Air Force and composed of ORNL and Tennessee Tech collaborators, achieved a tenfold speedup of their 2D flood modeling application. The code, which previously ran on the Titan supercomputer, combines streamflow hydrograph and geographic elevation data to predict how floodwaters will affect an area of land.

“Before the hackathon, we needed 8 hours on Titan to complete a test case of flooding from Hurricane Harvey,” Kao said. “Now we can do this about 10 times faster on Summit. One key to that accomplishment is the reduction in data movement between nodes we can achieve on the new architecture.”

The OLCF GPU Hackathon marked the finale of a series that grew to seven events on three continents in 2018. Other GPU hackathon hosts this year included TU Dresden, the Pawsey Supercomputing Centre, the University of Colorado, the National Center for Supercomputing Applications, Brookhaven National Laboratory, and the Swiss National Supercomputing Centre. In 2018, a record high of 64 teams and around 400 individuals participated in the series. The growth in GPU hackathons reflects not only the rapid maturation of GPU technology but also scientists’ strong interest in exploring GPU viability.

“This is why we started doing it in the first place—to grow the GPU computing community,” Papatheodore said. “Even if you’re not running on our systems, you’re learning how to program GPUs, which could then make our systems more relevant to you down the road.”

ORNL is managed by UT-Battelle, LLC for the Department of Energy’s Office of Science, the single largest supporter of basic research in the physical sciences in the United States. DOE’s Office of Science is working to address some of the most pressing challenges of our time. For more information, please visit https://science.energy.gov/.