Jim Rogers, computing and facilities director at the OLCF, has spent the last 30 years in the computing field. Over the course of his career, Rogers has taken part in a computing revolution, with system performance increasing a millionfold over the last three decades.

The Faces of Summit series shares stories of the people behind America’s top supercomputer for open science, the Oak Ridge Leadership Computing Facility’s Summit. The IBM AC922 machine launched in June 2018.

Shortly after its launch in June 2018, the Oak Ridge Leadership Computing Facility’s (OLCF’s) IBM AC922 Summit was named the world’s fastest supercomputer by TOP500, an organization that ranks the most powerful computing systems around the world.

Equally impressive, Summit ranked No. 5 on the Green500 list, which ranks the top 500 supercomputers in the world by energy efficiency. Summit was No. 1 among the systems that qualified using Level 3, the Green500’s most rigorous standard for measurement fidelity. The system demonstrated a sustained performance of 122.3 petaflops using 8.8 MW of power, yielding an average of 13.889 gigaflops per watt, or nearly 14 billion math operations per second for each watt of power.
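As a quick check on that arithmetic, efficiency is simply sustained performance divided by power draw. The sketch below uses the rounded 8.8 MW figure, so it lands slightly above the published 13.889 value, which presumably reflects an unrounded power measurement. The function name is illustrative only.

```python
def gigaflops_per_watt(petaflops: float, megawatts: float) -> float:
    """Return energy efficiency in gigaflops per watt."""
    flops = petaflops * 1e15   # floating-point operations per second
    watts = megawatts * 1e6    # power draw in watts
    return flops / watts / 1e9


# Figures quoted in the article for Summit's June 2018 HPL run.
summit = gigaflops_per_watt(122.3, 8.8)
print(f"{summit:.2f} gigaflops per watt")
```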

Much of the credit for the strong green ranking can be attributed to the strategic vision of Jim Rogers, computing and facilities director at the OLCF, who made Summit’s energy efficiency a priority going into the project.

Rogers entered the field of high-performance computing (HPC) as a software engineer more than 30 years ago, when “supercomputers” used only a few processors. Over the course of his career, he has written software, installed systems, and overseen the acquisition of multiple large HPC systems. He’s also taken part in a computing revolution, with system performance increasing a millionfold over the last 3 decades. His broad expertise in HPC provides the foundation for his role in the Summit project.

Rogers works across multiple organizations within the laboratory to define the requirements for and execute the acquisition of the computing resources and supporting infrastructure, from networks and services to underlying electrical and mechanical systems. During the Summit project, Rogers has collaborated with electrical and mechanical engineers from the Facilities and Operations Directorate (F&O) at the US Department of Energy’s (DOE’s) Oak Ridge National Laboratory (ORNL) to make Summit as energy efficient as possible.

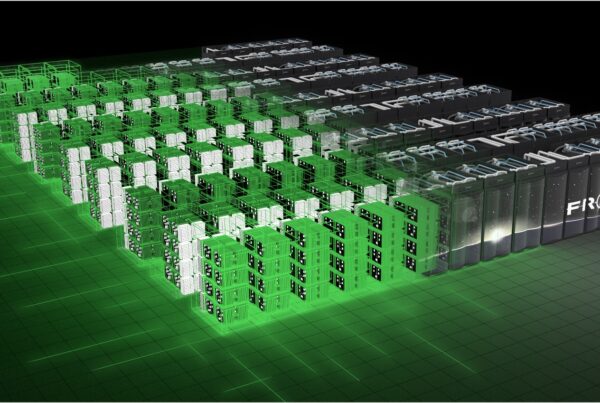

With the end of Summit’s acceptance in sight, Rogers is working on the next step of the project—a plan to make Summit self-reliant. He is also already deep into the facilities plan for Frontier, the future exascale system at the OLCF that will boast a performance 6–14 times greater than that of Summit.

“One of the best things about working on Summit has been seeing how far technology has come,” Rogers said. “Just 16 years ago, I installed a 3.2-teraflop Compaq AlphaServer SC ES45 supercomputer at NASA that consumed most of NASA Goddard’s data center power, space, and cooling budget. Now, the performance of that entire 1,300-core supercomputer can be replaced with a handful of commodity processors or just a single accelerator. It’s amazing.”

Getting to green

Throughout the Summit installation, Rogers has focused on making Summit a green machine, working closely with F&O personnel on details such as the mechanisms for delivering resilient electrical service and methods for minimizing system cooling costs.

Rogers and his team collected measurements during the High-Performance Linpack (HPL) benchmark run in June and found that Summit delivered nearly 14 gigaflops, or 14 billion operations per second, for each watt of electricity. This is 7 times the energy efficiency of the 2012 Titan HPL run, which was No. 3 at the time on the same Green500 list. Many of the other energy-efficient systems on the Green500 are only a fraction of Summit’s size and can easily conceal ancillary power draws across their entire system, making Summit’s energy usage all the more impressive.

“It’s significant that we were able to produce so many gigaflops per watt on such a large system,” Rogers said. “We compare Summit to other accelerator-based systems that are close to the top of the list because it’s much more difficult to keep these big systems—with their network fabrics, file systems, and other components—energy efficient.”

Rogers also worked with engineers on many of Summit’s components to recommend more energy-efficient additions and ways of implementing them. David Grant, a mechanical engineer with F&O, contributed one of the key components to Summit’s unique overhead cooling system, which features an energy plant design that removes the machine’s waste heat via water measuring 70 degrees Fahrenheit. Water flows through Summit’s cabinets at a rate of 4,500–4,600 gallons a minute, shuttling heat from the computer to the evaporative cooling towers.

“We knew going into this project that we would need to provide energy-efficient cooling to match the energy consumption and that traditional air could not do it,” Rogers said. “We cool the two CPUs and six GPUs on each of Summit’s nodes with custom cold plates, which reject 75 percent of the total heat load on the whole system directly to water. Then, we sweep up the remainder of the heat load with rear-door heat exchangers, keeping the entire system room-neutral.”

Cold plates feature copper packaging that transfers heat from the hot processor surface to the relatively cooler water. Water’s thermal conductivity is 25 times greater than that of air, translating to exceptional efficiency.

During Summit’s 4-hour HPL run, Rogers and his team also collected around 180,000 power measurements from 12 devices. The team measured the machine’s power usage effectiveness (PUE), the ratio of total facility power to the power delivered to the computing equipment, as 1.03 during the run. That means that for every dollar spent on electricity to run the machine, only about 3 additional cents go toward removing heat. A traditional air-cooled data center typically has a PUE of about 1.8, requiring nearly 80 cents to remove heat for every dollar spent on computing.
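The cents-per-dollar reading of PUE can be worked through in a few lines. This is a minimal sketch that, like the comparison above, treats all non-computing overhead as cooling cost. The function names are illustrative and not part of any OLCF tooling.

```python
def overhead_cents_per_it_dollar(pue: float) -> float:
    """Cents of facility overhead for each dollar of electricity used for computing."""
    return (pue - 1.0) * 100


def overhead_share_of_total(pue: float) -> float:
    """Fraction of the total electricity bill that is overhead."""
    return (pue - 1.0) / pue


# PUE values quoted in the article.
for label, pue in [("Summit HPL run", 1.03), ("typical air-cooled center", 1.8)]:
    cents = overhead_cents_per_it_dollar(pue)
    share = overhead_share_of_total(pue)
    print(f"{label}: {cents:.0f} cents per computing dollar, {share:.1%} of total bill")
```

Measured against the total bill rather than the computing bill, the overhead share is somewhat smaller in both cases, which is why the two functions are kept separate.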

Summit’s artificial intelligence

Today, Rogers continues to make Summit a green machine—but now, he hopes to use artificial intelligence to meet new goals in efficiency. He and a small team are integrating and optimizing disparate control systems that deliver thousands of gallons of warm water each minute to the 320 racks comprising both the compute and file systems.

Rogers is no stranger to new solutions. In the 1990s he helped build a storage system similar to the OLCF’s High-Performance Storage System—robotics, tape, readers, and all. He also helped design, buy, and install a new statewide research and education network for the state of Alabama.

The new project, though, enters an arena that remains largely untapped by current HPC systems. Goals include an automated framework between Summit and its mechanical control system; such a system would allow Summit to make recommendations to the plant controls to regulate and optimize pumping volume, valve position, and fan control without direct human intervention.

Suppose the controls side, which has access to projected weather conditions and to the cooling systems, water pumps, and chillers, knows about scheduled maintenance. It could tell Summit the maximum cooling load profile it can support, and the supercomputer could use that information to schedule jobs appropriately. Conversely, Summit could forecast its system load to the energy plant, so the plant could optimally position pumps, cooling towers, and other systems for specific load signatures.
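To make the first half of that exchange concrete, here is a toy sketch of a scheduler that admits jobs only while their combined heat load fits under a cap reported by the plant. Everything in it is hypothetical: the `Job` class, the `admit_jobs` function, the job names, and the megawatt figures are invented for illustration, and it is not a description of how Summit’s framework works.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    est_heat_mw: float  # hypothetical estimate of the job's heat load


def admit_jobs(queue: list[Job], cooling_cap_mw: float) -> list[Job]:
    """Greedily admit queued jobs, in order, while total heat stays within the cap."""
    admitted: list[Job] = []
    load = 0.0
    for job in queue:
        if load + job.est_heat_mw <= cooling_cap_mw:
            admitted.append(job)
            load += job.est_heat_mw
    return admitted


# Invented queue and caps: a normal day versus a day with plant maintenance.
queue = [Job("climate", 4.0), Job("fusion", 3.5), Job("genomics", 2.0)]
normal = admit_jobs(queue, cooling_cap_mw=10.0)
maintenance = admit_jobs(queue, cooling_cap_mw=6.0)
print([job.name for job in normal])
print([job.name for job in maintenance])
```

With the lower cap, the sketch skips the job that would exceed the limit and backfills with a smaller one. A real system would also need to handle time-varying caps and the reverse flow of load forecasts to the plant.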

“We are at a point where we anticipate computers like Summit will expand their own understanding through machine learning,” Rogers said. “Our goal is to have Summit understand enough about itself and the applications running on the system to optimize much of its own operation.”

The OLCF is a DOE Office of Science User Facility located at ORNL.

ORNL is managed by UT-Battelle for the Department of Energy’s Office of Science, the single largest supporter of basic research in the physical sciences in the United States. DOE’s Office of Science is working to address some of the most pressing challenges of our time. For more information, please visit https://science.energy.gov.