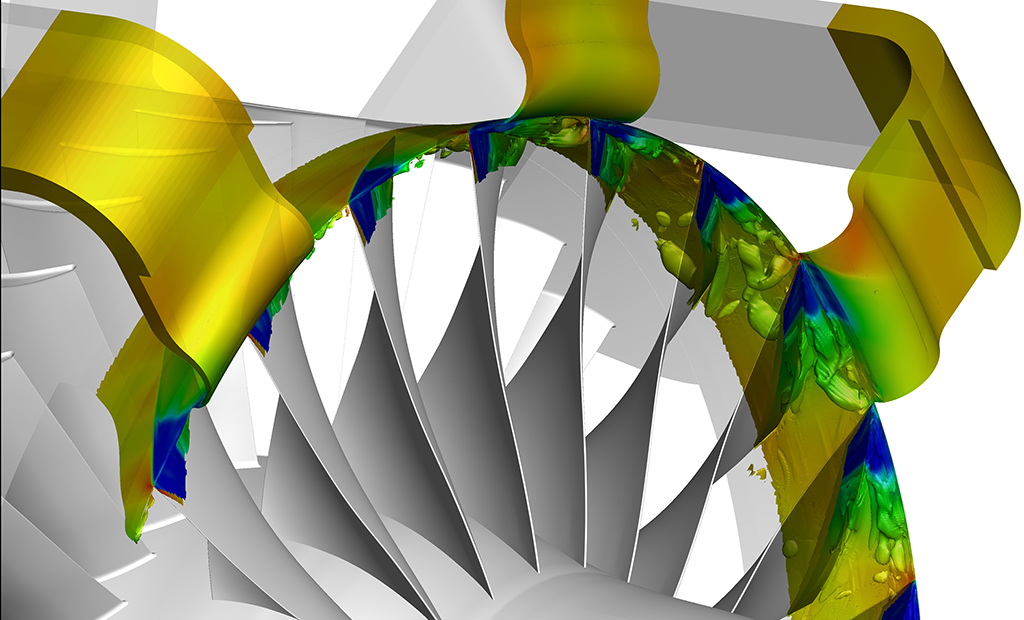

This image shows a visualization of a turbomachinery simulation conducted by Ramgen Power Systems using Fine/Turbo, a computational fluid dynamics application created by software company Numeca and accelerated using OpenACC directives.

One of the biggest hurdles for users who want to take advantage of accelerated computing is the time required to write and update software. That’s especially true for industrial users, who must carefully evaluate the projected returns on such an investment.

OpenACC, a directive-based programming standard for accelerator-based systems, offers a potential alternative to labor-intensive code rebuilds, allowing programmers to adapt specific sections of an application for GPU acceleration while leaving other sections unchanged.
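To make the directive-based approach concrete, here is a minimal sketch in C. It is not taken from either application discussed in this article; the function names `scale_and_add` and `sum` are hypothetical and chosen only for illustration. One loop carries an OpenACC directive, while a second routine is left exactly as it was.

```c
/* Illustrative sketch only: hypothetical routines, not code from
 * HiPSTAR or Fine/Turbo. */

/* Accelerated section: y[i] = a * x[i] + y[i].
 * The directive asks an OpenACC compiler to offload the loop and
 * describes which array sections move to and from the device.
 * A compiler without OpenACC support ignores the pragma and runs
 * the same loop on the CPU. */
void scale_and_add(int n, float a, const float *x, float *y)
{
    #pragma acc parallel loop copyin(x[0:n]) copy(y[0:n])
    for (int i = 0; i < n; ++i) {
        y[i] = a * x[i] + y[i];
    }
}

/* Unchanged section: no directive, so it runs on the CPU as before. */
float sum(int n, const float *y)
{
    float total = 0.0f;
    for (int i = 0; i < n; ++i) {
        total += y[i];
    }
    return total;
}
```

Because the directive is a pragma rather than a change to the loop itself, the same source file can still be built for a CPU-only system.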

As home to one of the most powerful GPU-accelerated supercomputers in the world, the Oak Ridge Leadership Computing Facility (OLCF), a US Department of Energy (DOE) Office of Science User Facility located at DOE’s Oak Ridge National Laboratory (ORNL), has been instrumental in demonstrating the advantages of OpenACC for scientific computing users. Those benefits extend to the OLCF’s industrial users.

Two OpenACC successes that have emerged from industry projects at the OLCF are the computational fluid dynamics (CFD) codes HiPSTAR and Fine/Turbo, developed at the University of Melbourne in Australia and at software company Numeca, respectively. In recent years, both applications have delivered performance gains to large-scale turbomachinery modeling projects, running on the OLCF’s Titan supercomputer to obtain key insights into product operating conditions that no laboratory can recreate. Developers can, in turn, use this information to implement design improvements.

HiPSTAR

When University of Melbourne professor Richard Sandberg visited ORNL in 2012, he knew HiPSTAR would need to be updated to run on hybrid CPU-GPU systems like the Cray XK7 Titan, which debuted that same year.

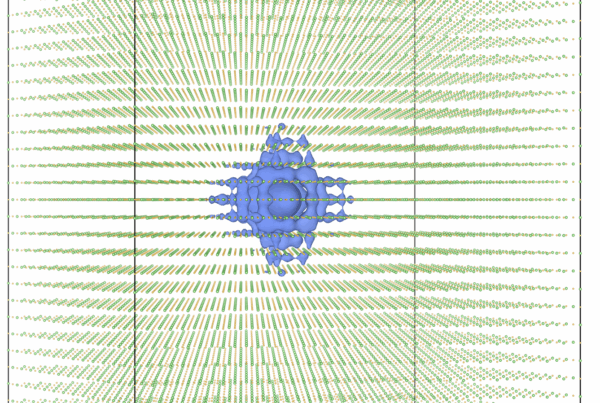

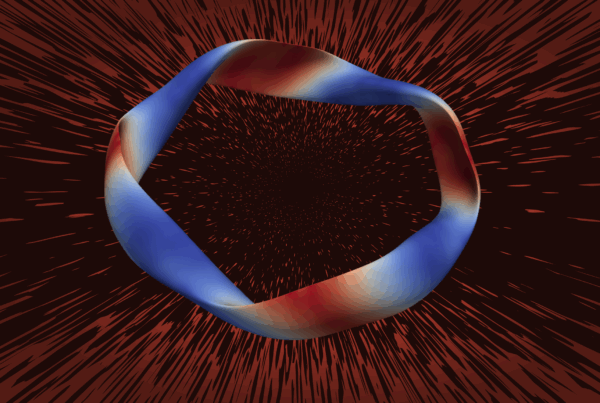

Sandberg created HiPSTAR in 2006 for modeling problems like jet noise and turbine flows. The code performed well on highly parallel CPU-only systems and was developed further to simulate turbomachinery scenarios for General Electric (GE). Selecting the right acceleration strategy presented a new challenge.

“We wanted portability of the code because we wanted to continue to be able to run on a number of CPU-only systems,” Sandberg said. “At the time, no one was certain whether OpenACC was the right choice, but it seemed like it was going to be straightforward to implement.”

The team’s goal was to obtain a twofold to threefold speedup by adding OpenACC loop directives to portions of code that had already been parallelized with OpenMP, a common programming interface for shared-memory parallelism. To maximize GPU performance, Sandberg’s team eventually found it needed to restructure some of HiPSTAR’s core algorithms. The results, however, proved the investment worthwhile.
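As a rough illustration of how one loop can serve both CPU-only and GPU-accelerated builds, the following C sketch places an OpenACC directive and an OpenMP directive on the same loop and lets the compiler pick one. This is an assumption about what such code can look like, not HiPSTAR’s actual source; the function `smooth3` and its three-point average are hypothetical. The `_OPENACC` and `_OPENMP` macros are the ones the two standards define when the corresponding support is enabled.

```c
/* Illustrative sketch only: a hypothetical stencil, not HiPSTAR code. */

/* Three-point moving average; edge points reuse their own value in
 * place of the missing neighbor. Every iteration writes only out[i],
 * so iterations are independent and safe to run in parallel. */
void smooth3(int n, const double *in, double *out)
{
#if defined(_OPENACC)
    /* GPU build: offload the loop and move the arrays explicitly. */
    #pragma acc parallel loop copyin(in[0:n]) copyout(out[0:n])
#elif defined(_OPENMP)
    /* CPU-only build: share the iterations among host threads. */
    #pragma omp parallel for
#endif
    for (int i = 0; i < n; ++i) {
        double left  = (i > 0)     ? in[i - 1] : in[i];
        double right = (i < n - 1) ? in[i + 1] : in[i];
        out[i] = (left + in[i] + right) / 3.0;
    }
}
```

Built with neither option enabled, the function compiles as ordinary serial C, which is one way a single code base can keep running on CPU-only systems.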

In 2017, HiPSTAR’s OpenACC implementation ran about five times faster than its CPU-only OpenMP version. That performance leap translates into more reliable results for companies that collaborate with Sandberg’s team to run high-fidelity simulations.

Researchers working on one such project involving GE used a recent time allocation on Titan to investigate the physics of highly turbulent flows in gas turbine designs. The results could lead to efficiency gains that translate into fuel savings and reduced carbon emissions for future gas turbine customers.

“We are more able to really tackle engine-relevant Reynolds and Mach numbers in our simulations,” Sandberg said. “It makes our results much more significant because you no longer need to extrapolate to observe relevant physics.”

With the OLCF’s new Summit supercomputer and its state-of-the-art GPUs coming online in 2018, HiPSTAR is well positioned to take advantage of the increased computing capability, according to Sandberg. In preparation for Summit, a team representing HiPSTAR attended a 2018 GPU hackathon event in Perth, Australia, sponsored in part by ORNL.

“With Summit, we’re looking to extend the capabilities of the code and simulate even more realistic configurations and operating conditions,” Sandberg said.

Fine/Turbo

At nearly 30 years old, Numeca’s Fine/Turbo software has stood the test of time for industrial users seeking high-fidelity turbomachinery simulation and optimization.

Updating the legacy Fortran code for GPU acceleration with OpenACC has added extra value to the application without affecting maintenance or portability.

Several dozen of Fine/Turbo’s most computationally expensive routines were targeted for acceleration, with a goal of minimizing low-level adaptations to the code. The effort yielded about a tenfold speedup in many of Fine/Turbo’s key routines compared with a CPU-only implementation, with overall application performance commonly reaching a twofold to threefold speedup.
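The gap between the routine-level and application-level figures is what Amdahl’s law would predict. As a back-of-the-envelope illustration (not a measurement reported by Numeca), assume a fraction $p$ of the original CPU runtime is spent in routines that speed up by a uniform factor $s$, and that the rest of the run, including any data transfer, is unchanged:

$$
S_{\text{overall}} = \frac{1}{(1 - p) + p/s}
\quad\Longrightarrow\quad
p = \frac{1 - 1/S_{\text{overall}}}{1 - 1/s}
$$

With $s = 10$, an overall speedup of 2 corresponds to $p = 0.5/0.9 \approx 0.56$, and an overall speedup of 3 corresponds to $p = (2/3)/0.9 \approx 0.74$. Under these simplifying assumptions, the accelerated routines would account for roughly 56 to 74 percent of the original runtime.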

“We were able to implement those specific routines with OpenACC directives but without fundamentally changing or rewriting the code,” said Numeca software engineer David Gutzwiller. “GPU acceleration has become part of our standard release of Fine/Turbo.”

On Titan, Dresser-Rand deployed Fine/Turbo to optimize designs for carbon capture compression and sequestration systems, reducing the time required to explore and validate compression designs computationally by a factor of 50. This speedup has allowed Dresser-Rand to explore more designs and more realistic physics for this promising green technology.

ORNL is managed by UT-Battelle for DOE’s Office of Science. The Office of Science is the single largest supporter of basic research in the physical sciences in the United States and is working to address some of the most pressing challenges of our time. For more information, please visit https://science.energy.gov/.