OLCF postdoctoral research associate Andreas Tillack joined the OLCF’s Center for Accelerated Application Readiness efforts in November 2016, bringing with him extensive experience in programming, physics, and chemistry.

The Faces of Summit series shares stories of the people behind America’s top supercomputer for open science, the Oak Ridge Leadership Computing Facility’s Summit. The IBM AC922 machine launched in June 2018.

Andreas Tillack remembers what first drew him to computers as a boy in Berlin. It was the same thing that convinced him he wanted to be a scientist early in life: a feeling of limitless possibility.

“If you knew what you were doing, you could do almost anything,” said Tillack, a postdoctoral research associate with the Oak Ridge Leadership Computing Facility (OLCF), a US Department of Energy (DOE) Office of Science User Facility located at DOE’s Oak Ridge National Laboratory (ORNL).

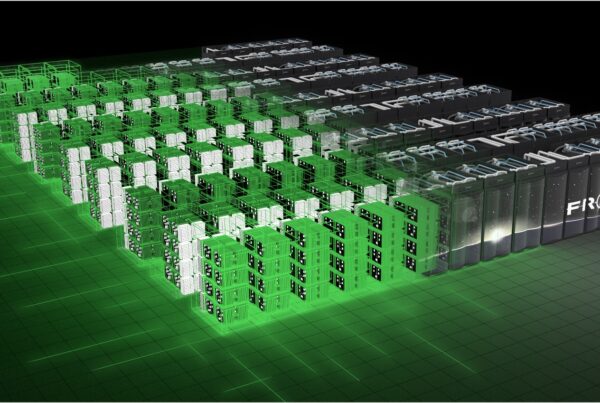

In Summit, the OLCF’s newest supercomputer, Tillack may have found a machine that matches his childhood expectations. At 200 petaflops, the IBM AC922 system is slated to be more powerful than any existing supercomputer. Installation and testing of the machine are scheduled to wrap up this summer, giving researchers unprecedented capability for exploring scientific problems ranging from bioenergy to artificial intelligence to galaxy evolution.

But like any finely tuned instrument, Summit’s reputation as a science machine largely depends on the inventiveness of the people who use it. Three years ago, the OLCF launched the Center for Accelerated Application Readiness (CAAR) to give research teams a head start toward the goal of running science on Summit the day it becomes available. The teams received access to technology experts, OLCF computational resources, and early development systems to test their science codes on architectures that closely resemble the 4,608-node system.

To succeed, teams must identify the best method to map their science to Summit’s hardware—navigating details such as how to use Summit’s multi-GPU nodes, how to choreograph communication between processors, and how to get the most out of Summit’s robust on-node memory. It’s a challenge that Tillack embraces.

“To me, when the work is interesting, the challenging bit doesn’t matter. It’s fun,” said Tillack, who joined CAAR efforts in 2016 through DOE’s Computational Scientists for Energy, Environment, and National Security (CSEEN) program.

As a computational scientist within CAAR, Tillack is working with team members to update QMCPACK, a quantum Monte Carlo code designed to explore complex materials at the subatomic level. Running on Summit, QMCPACK could help scientists better understand phenomena like high-temperature superconductivity, a state in which electrons flow through a material without energy loss at temperatures well above those required by conventional superconductors.

Early runs of QMCPACK on a partially completed Summit indicate the system will allow researchers to simulate materials around four times more complex than what’s possible on the OLCF’s current leadership-class supercomputer, Titan.

“This is quite amazing,” Tillack said. “Already, Summit is really cool.”

The Path to HPC

Born in East Germany, Tillack was 8 years old when his family moved to Berlin—just in time to witness the collapse of communism in Eastern Europe and the reunification of Germany.

“It couldn’t have happened at a better time for me,” Tillack said. “That opened up the entire world.”

Another momentous event in Tillack’s childhood was the day his father brought home the family’s first computer, a 12-megahertz 286 system.

“The thing was horribly expensive and enormously heavy,” he said. “The cool thing was that I learned to program since nobody knew how to use the darn thing.”

Tillack proved a natural at writing code—creating his own games and programming the machine to display advanced graphics. He even wrote a math library to visualize data in 3D.

“I felt enormously proud of myself,” Tillack said.

His first encounter with parallel computing came as a teenager through a friend’s computer science teacher. It was also Tillack’s first exposure to the programming languages C and C++, which he would return to later in his computational science career.

As a physics major at Humboldt University in Berlin, Tillack studied optics, the branch of physics that deals with light, and colloids, mixtures in which tiny particles of one substance are dispersed throughout another and which include everyday items like jelly, stained glass, and milk. For his master’s thesis, he used the optical pickup unit of a DVD burner (the part that uses a laser to read and write information on a disc) to build a flow cytometer, a device that counts particles in colloid samples. The final project included fully threaded, parallelized code that allowed the user to run experiments, control the optical unit, retrieve the data, and analyze it simultaneously.

“I felt this would be the last chance to do something crazy without anyone objecting, and, surprisingly, it worked out,” Tillack said.

After becoming the first person in his family to earn a master’s degree, Tillack moved to Seattle in 2007 to start a PhD in chemistry at the University of Washington (UW). Partway through the program, he decided to switch from experimental to theoretical chemistry, a change that reunited Tillack with C++ and took him a step closer to high-performance computing (HPC).

At UW, Tillack’s work centered on simulating large, coarse-grained molecular systems to predict the extent of acentric ordering during the processing of organic, nonlinear optical chromophores. To do their simulations, Tillack and his colleagues used university compute clusters and electronic structure calculations similar to the calculations QMCPACK uses.

“This is what got me interested in going to bigger and bigger systems,” Tillack said.

Problem-solving at supercomputer scales

At the OLCF, Tillack has landed on one of the biggest systems anywhere.

To prepare QMCPACK to perform on Summit, Tillack and his colleagues began by porting the code to Summitdev, an early development system designed to approximate Summit’s architecture. Summitdev allowed the team to experiment with multi-GPU nodes and to create logical groups of processes to be executed on the hardware. The team could then analyze code performance with the HPC diagnostic tools Score-P and Vampir to see in detail how QMCPACK behaved.
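For readers curious what "logical groups of processes" on a multi-GPU node can look like, the following is a minimal sketch in plain Python. It is not QMCPACK's actual scheme: the function name `group_ranks_by_node`, the round-robin GPU assignment, and the rank counts are all hypothetical choices made for illustration. Only the figure of six GPUs per node comes from the article.

```python
from collections import defaultdict


def group_ranks_by_node(num_ranks, ranks_per_node, gpus_per_node=6):
    """Group process ranks by node and pair each rank with a local GPU.

    Hypothetical helper: ranks are assumed to be numbered consecutively
    within a node, and GPUs are assigned round-robin by local rank.
    """
    groups = defaultdict(list)
    for rank in range(num_ranks):
        node = rank // ranks_per_node        # which node hosts this rank
        local_rank = rank % ranks_per_node   # position of the rank on its node
        gpu = local_rank % gpus_per_node     # round-robin GPU choice (assumption)
        groups[node].append((rank, gpu))
    return dict(groups)


# Illustrative run: 12 processes spread over 2 nodes, 6 processes per node.
layout = group_ranks_by_node(num_ranks=12, ranks_per_node=6)
for node, members in layout.items():
    print(f"node {node}: {members}")
```

In a real application this grouping would typically be expressed through the communication library and the job launcher rather than computed by hand; the sketch only shows the bookkeeping idea.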

Working out performance issues on Summitdev made Tillack’s first experience on Summit surprisingly smooth. Preliminary results suggest QMCPACK will run 50 times faster on a Summit node than on a Titan node. Now, Tillack and his QMCPACK teammates are turning their attention to making better use of Summit’s memory. In electronic structure calculations, memory typically limits the size of the systems scientists can study. That’s because the wave function, a description of the quantum state of a system, takes an enormous amount of memory to store.

“Splitting this description between the six GPUs on a Summit node should make calculations possible that are now completely impossible,” Tillack said.
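The arithmetic behind that statement can be sketched in a few lines of Python. The sizes below are hypothetical, chosen only to illustrate the idea: a 48 GiB wave-function description and 16 GiB of memory per GPU are assumptions, not measured QMCPACK figures, and the sketch ignores the working space a real calculation also needs.

```python
def per_gpu_share_gib(table_gib, num_gpus=6):
    """Memory each GPU holds if the description is split evenly."""
    return table_gib / num_gpus


def fits(table_gib, gpu_mem_gib, num_gpus=6, split=True):
    """Check whether the description fits in GPU memory.

    With split=False every GPU must hold a full copy;
    with split=True each GPU holds only its share.
    """
    needed = per_gpu_share_gib(table_gib, num_gpus) if split else table_gib
    return needed <= gpu_mem_gib


TABLE_GIB = 48.0    # hypothetical size of the wave-function description
GPU_MEM_GIB = 16.0  # hypothetical memory available on each GPU

print("full copy per GPU fits:", fits(TABLE_GIB, GPU_MEM_GIB, split=False))
print("split across six GPUs fits:", fits(TABLE_GIB, GPU_MEM_GIB, split=True))
print("share per GPU (GiB):", per_gpu_share_gib(TABLE_GIB))
```

Under these assumed numbers, a full copy on every GPU would not fit, while an even six-way split would leave each GPU holding 8 GiB.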

While eagerly anticipating access to the full machine as an early user later this year, Tillack said he has leaned heavily on the wisdom of his ORNL colleagues to grow into his current role. Discussions with OLCF scientific computing staff—including his mentor, OLCF computational scientist Ying Wai Li, and ORNL senior researcher and QMCPACK development lead Paul Kent—and staff from IBM and NVIDIA have all played a role in his development.

“You talk to so many people in the end,” Tillack said. “We are in an environment where openness and the sharing of knowledge are an essential part of the process. Everybody here is contributing to everyone else’s success.”

ORNL is managed by UT-Battelle for the Department of Energy’s Office of Science, the single largest supporter of basic research in the physical sciences in the United States. DOE’s Office of Science is working to address some of the most pressing challenges of our time. For more information, please visit https://science.energy.gov/.