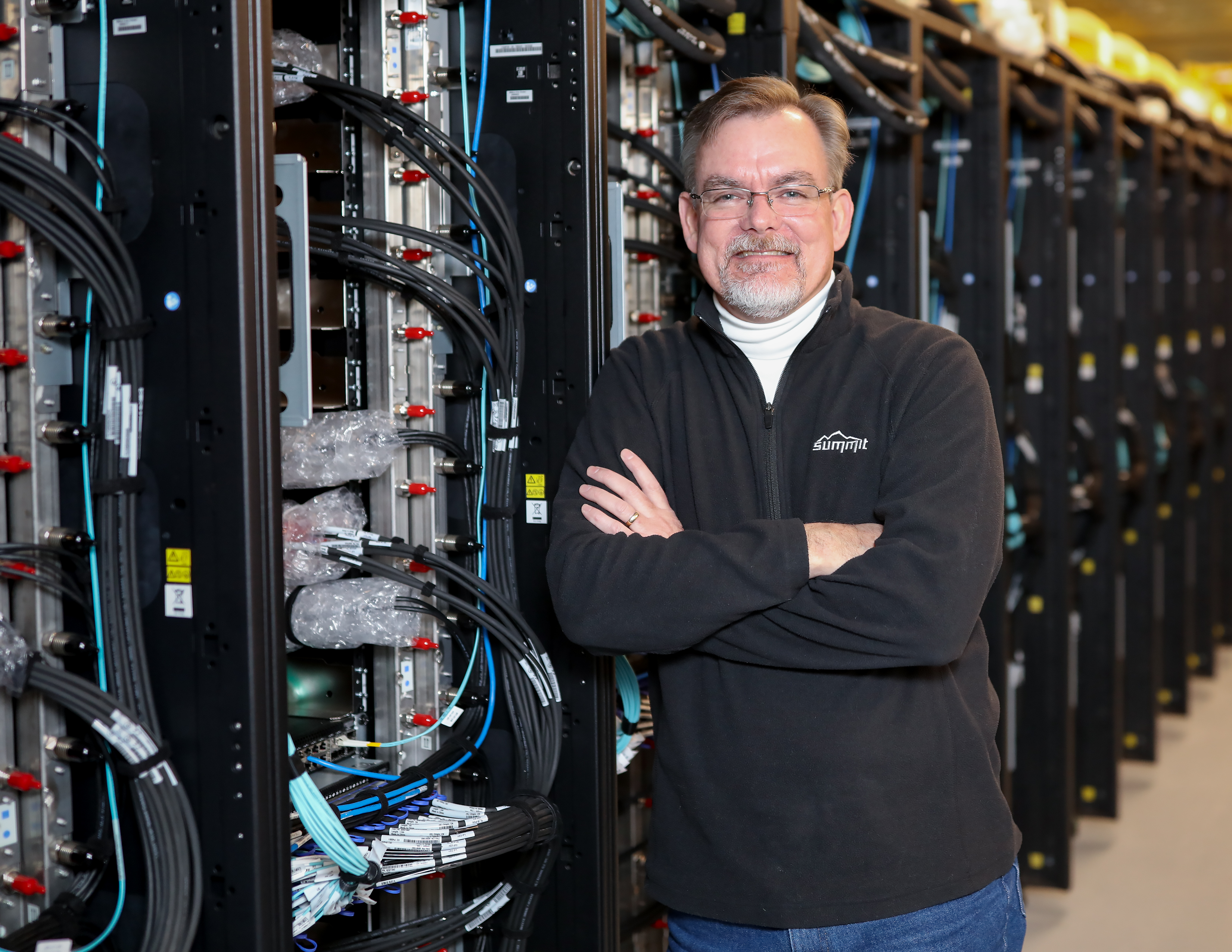

OLCF high-performance computing systems engineer Scott Atchley leads efforts to deploy Summit’s burst buffer, a reliable, high-speed storage layer that sits between the machine’s computing and file systems. Atchley’s track record for using technology to bolster productivity dates back to the early days of his career as a sales and marketing professional in his family’s boat manufacturing business.

The Faces of Summit series shares stories of the people behind America’s top supercomputer for open science, the Oak Ridge Leadership Computing Facility’s Summit. The IBM AC922 machine launched in June 2018.

Like a voyage into uncharted territory, new supercomputers bring unpredictable challenges. “All your assumptions are off the table,” said Scott Atchley, a high-performance computing (HPC) systems engineer at the Oak Ridge Leadership Computing Facility (OLCF), a US Department of Energy (DOE) Office of Science User Facility located at Oak Ridge National Laboratory. “It’s an exploration.”

With Summit, the OLCF’s next leadership-class supercomputer, the journey to computing’s next peak requires a knack for realizing big ideas with practical solutions. It’s the kind of technical challenge that Atchley has made a career pursuing.

As a member of the OLCF’s Technology Integration Group, Atchley leads a team responsible for a key piece of Summit’s architecture called a burst buffer, a technology designed to bridge the widening disparity between supercomputers’ abilities to process and store data. “Computational performance is growing faster than file system performance,” Atchley said. “With each generation, the gap gets bigger.”

Like a slow drain under a fast-running faucet, the imbalance threatens to disrupt the pace of scientific research, forcing users to pause applications so data can be safely transferred to storage. To get around this scenario, the OLCF, along with Summit vendor IBM and Lawrence Livermore National Laboratory, conceived of a reliable, high-speed storage layer to sit between the machine’s computing and file systems. Consisting of flash memory—the same type of memory found in USB drives—Summit’s burst buffer will be able to take in data around four to five times faster than its file system. Bolstering the burst buffer’s performance is a storage protocol called NVM Express that allows users to read and write data quickly.

“Our main goal is to make it very easy for users to take advantage of this technology and get all the benefits,” Atchley said.

Building solutions

Using technology to seamlessly bolster productivity has been a theme in Atchley’s working life dating back to the start of his career as a sales and marketing professional in his family’s boat manufacturing business.

In the early days of PCs, Atchley convinced the business to adopt computers and software to facilitate everything from internal communications to marketing to sales. “It saved us a huge amount of money,” he said, “and figuring out how to use new stuff was always fun.”

Atchley’s interest in information technology eventually led him to return to the University of Tennessee, where he had previously received a bachelor’s degree in marketing, to pursue a master’s degree in computer science. The change in professional trajectory led to opportunities to write code, research blossoming internet technologies, and work as a programmer for a high-performance networking company, his first foray into the world of HPC.

“The thing I like about programming is that you’re building something—it’s just not a physical thing,” Atchley said. “I get just as much enjoyment from building software and seeing it be used as I did from designing boats.”

More burst for the buck

Since joining the OLCF in 2011, Atchley has continued to create useful solutions, most notably fine-tuning the job scheduling software of the OLCF’s current leadership-class supercomputer, Titan.

Taking on Summit’s burst buffer, Atchley’s team began testing various flash drives for performance, bandwidth, and longevity. Because flash wears out over time, the team—which includes the OLCF’s Chris Zimmer, Chris Brumgard, Sarp Oral, and Woong Shin—wanted to find out how traditional data-saving techniques affected device durability. The results were encouraging.

“We found our workload is extremely well-suited for flash devices,” Atchley said. “With this information, we were able to work with IBM to increase the capacity of the device by decreasing durability that we didn’t need.”

Upon completion in 2018, Summit’s burst buffer is expected to boast an intake rate of around 10 terabytes per second. That gives the burst buffer ample opportunity to transfer the data to the hard disk–based parallel file system at a slower rate. Initially, the file system will be capable of ingesting data at 2 terabytes a second with a planned upgrade to 2.5 terabytes a second.
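For readers who want to see the arithmetic behind these rates, here is a minimal sketch in Python. The three rates come from the figures quoted above; the 100-terabyte checkpoint size is a hypothetical value chosen only for illustration, and the function names are ours, not part of any OLCF or IBM software.

```python
# Rates quoted in the article, in terabytes per second.
BURST_BUFFER_TBPS = 10.0
FILE_SYSTEM_INITIAL_TBPS = 2.0
FILE_SYSTEM_UPGRADED_TBPS = 2.5

# Hypothetical checkpoint size in terabytes (an assumption, not a Summit figure).
CHECKPOINT_TB = 100.0


def speedup(buffer_rate: float, fs_rate: float) -> float:
    """How many times faster the burst buffer ingests data than the file system."""
    return buffer_rate / fs_rate


def transfer_seconds(size_tb: float, rate_tbps: float) -> float:
    """Seconds needed to move size_tb terabytes at rate_tbps terabytes per second."""
    return size_tb / rate_tbps


if __name__ == "__main__":
    for label, fs_rate in (("initial", FILE_SYSTEM_INITIAL_TBPS),
                           ("upgraded", FILE_SYSTEM_UPGRADED_TBPS)):
        ratio = speedup(BURST_BUFFER_TBPS, fs_rate)
        drain = transfer_seconds(CHECKPOINT_TB, fs_rate)
        print(f"{label} file system: buffer is {ratio:.1f}x faster; "
              f"draining {CHECKPOINT_TB:.0f} TB takes {drain:.0f} s")
    pause = transfer_seconds(CHECKPOINT_TB, BURST_BUFFER_TBPS)
    print(f"application pause while writing to the buffer: {pause:.0f} s")
```

Under these assumptions, the application would be held up only for the time it takes to write to the burst buffer, while the slower transfer to the parallel file system would proceed in the background. The two ratios also match the "four to five times faster" estimate given earlier in the article.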

To make the burst buffer even more attractive to users, Atchley’s team wrote a library that allows applications to automatically take advantage of the technology without other changes.

In addition to serving as a quick off-ramp for application data, the burst buffer can also be employed as extended memory, adding capacity to Summit’s node-local memory of around 512 gigabytes. Though memory bandwidth limits the speed with which data can move from the burst buffer to the compute node, the capability could benefit machine-learning researchers, who need extra space for large input files.

“Pairing extended memory with local memory may help researchers who use machine learning improve their performance, and it will take a huge load off the parallel file system,” Atchley said.

With the launch date for Summit approaching, Atchley and his colleagues continue to familiarize themselves with the machine’s production environment by conducting tests on a development system called Peak, a single-cabinet system consisting of 18 nodes. As Summit’s servers begin to come online, burst buffer and file system testing will ramp up accordingly.

It’s demanding work, Atchley said, where new and unfamiliar problems aren’t just possible but likely. “That is the big challenge and the big appeal,” he said.

Oak Ridge National Laboratory is supported by the U.S. Department of Energy’s Office of Science. The single largest supporter of basic research in the physical sciences in the United States, the Office of Science is working to address some of the most pressing challenges of our time. For more information, please visit science.energy.gov.