The OLCF’s Fernanda Foertter (far left) moderates a panel on accelerated computing at the 2017 GPU Technology Conference in San Jose, California.

Oak Ridge Leadership Computing Facility (OLCF) staff members led talks, tutorials, and discussions on the present and future of GPU computing at the GPU Technology Conference (GTC) May 8–11 in San Jose, California.

The annual conference, hosted by technology vendor NVIDIA, brought together around 7,000 GPU developers from industry, academia, and government. Since its founding a decade ago, GTC has evolved into one of the world’s largest meetings focused on artificial intelligence. This development has coincided with the rise of GPUs from the tech world’s fringes to become the computing engines of forward-looking technologies such as autonomous vehicles, virtual reality, and advanced robotics.

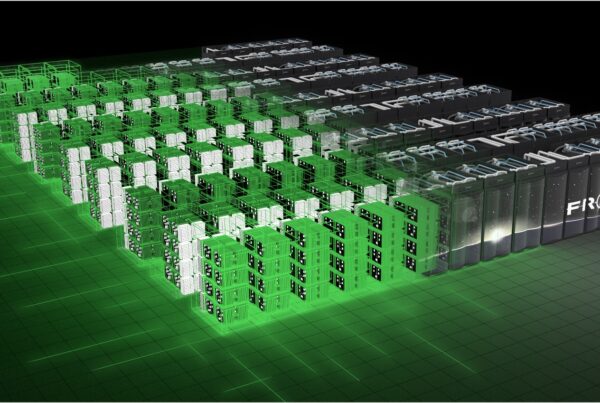

The OLCF, home to one of the world’s most powerful GPU-accelerated supercomputers, and its users shared their knowledge of and experience with the effective use of accelerator technology in sessions covering simulation, visualization, programming, and data science. The center also helped organize talks addressing the growing role of machine learning, including deep learning, in scientific computing.

“For the science community, there’s a lot of excitement around the idea of using new methods like machine learning to explore problems that lack well-described, deterministic models for traditional simulation,” said Jack Wells, the OLCF’s director of science. “At GTC, several of our users presented on progress they’ve made using deep learning techniques on OLCF systems. We also organized a panel to engage the audience in understanding opportunities for scaling machine-learning workloads on high-performance computers.”

When the OLCF, a US Department of Energy (DOE) Office of Science User Facility located at DOE’s Oak Ridge National Laboratory, acquired the Cray XK7 Titan supercomputer in 2012, its hybrid CPU–GPU architecture provided researchers with unprecedented computing power for large-scale modeling and simulation. With more than 18,000 GPUs, the system has also proven to be a robust tool for training neural networks, the layered webs of weighted inputs that inform the decisions of deep learning algorithms. With the arrival next year of Summit, the OLCF’s next GPU-accelerated leadership-class machine, the center’s support of machine learning research is poised to expand.

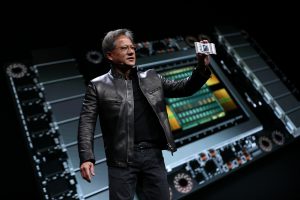

NVIDIA CEO Jensen Huang unveils the company’s newest GPU architecture, nicknamed Volta, at the 2017 GPU Technology Conference in San Jose, California. The OLCF’s Summit supercomputer will contain thousands of the next-generation GPU.

During the GTC keynote, NVIDIA CEO Jensen Huang revealed the company’s next-generation GPU, Volta, which will be installed in Summit. Volta offers a fivefold performance improvement over current-generation GPU technology. According to NVIDIA, Volta’s architecture is packed with 21 billion transistors and delivers deep learning performance equivalent to that of 100 CPUs.

For the science community, machine learning offers the promise of automating and accelerating tasks like data labeling, sorting, and management. When paired with simulation, machine learning could also lead to predictive methods that accelerate the pace of scientific discovery in domains as diverse as cancer research, fusion power, and cosmology.

At GTC, two OLCF users spoke in depth on the early results of deep learning projects conducted on OLCF resources. Bill Tang, a researcher at the Princeton Plasma Physics Laboratory, has scaled his deep learning fusion code to 6,000 GPUs on Titan. The method could help predict large-scale disruptions in experimental fusion reactors, such as the one being built in France under ITER. In another session, ORNL’s Arvind Ramanathan delivered a talk on his team’s use of deep learning to create text comprehension tools for cancer surveillance. Part of a joint effort between DOE and the National Institutes of Health, the project is contributing to the development of a neural network that will allow doctors to better predict cancer behavior and improve patient outcomes.

Beyond the buzz surrounding machine learning, OLCF staff continued to promote education and training for GPU developers. OLCF user support specialist Fernanda Foertter led a session on OpenACC, a directive-based programming model that simplifies programming for accelerator-based systems. Foertter also led a session on preparing applications for Summit and moderated a panel on the latest developments in accelerator programming.

OLCF computational scientists Ramanan Sankaran and Wayne Joubert also presented talks, Sankaran on the development of an accelerated, portable turbulent combustion code and Joubert on successes using Titan to carry out large-scale genomics computation. OLCF computer scientist Ben Hernandez spoke on his experience using an NVIDIA DGX-1 for large-scale particle visualization.

Other users who showcased OLCF-related work include the San Diego Supercomputer Center’s Daniel Roten and Yifeng Cui, whose team is developing physics-based earthquake simulations to better understand hazards posed by seismic activity; University of California, Santa Cruz, professor Brant Robertson, who is simulating how galactic winds affect star and galaxy formation; and the University of Illinois at Urbana-Champaign’s Jim Phillips, who belongs to a team dedicated to carrying out some of the largest biomolecular simulations in the world.

For more information on GTC, please visit https://www.gputechconf.com/.

Oak Ridge National Laboratory is supported by the US Department of Energy’s Office of Science. The single largest supporter of basic research in the physical sciences in the United States, the Office of Science is working to address some of the most pressing challenges of our time. For more information, please visit science.energy.gov.