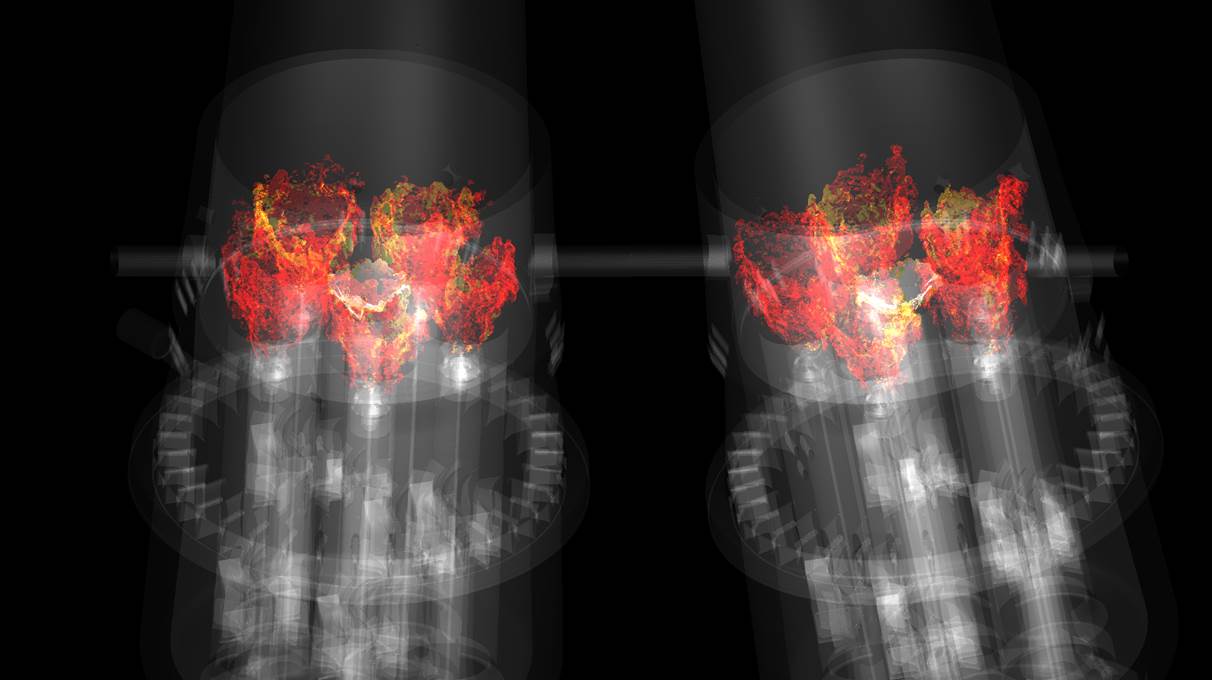

A simulation of combustion within two adjacent gas turbine combustors. GE researchers are incorporating advanced combustion modeling and simulation into product testing after developing a breakthrough methodology on the OLCF’s Titan supercomputer.

In the United States, the use of natural gas for electricity generation continues to grow. The driving forces behind this development? A boom in domestic natural gas production, historically low prices, and increased scrutiny over fossil fuels’ carbon emissions. Though coal still accounts for about a third of US electricity generation, utility companies are pivoting to cleaner natural gas to replace decommissioned coal plants.

Low-maintenance, high-efficiency gas turbines are playing an important role in this transition, boosting the economic attractiveness of natural gas-derived electricity. General Electric (GE), a world leader in industrial power generation technology and the world’s largest supplier of gas turbines, considers gas-fired power generation a key growth sector of its business and a practical step toward reducing global greenhouse gas emissions. When burned for electricity, natural gas emits half the carbon dioxide that coal does. It also requires fewer environmental controls.

“Advanced gas turbine technology gives customers one of the lowest installed costs per kilowatt,” said Joe Citeno, combustion engineering manager for GE Power. “We see it as a staple for increased power generation around the world.”

GE’s H-class heavy-duty gas turbines are currently the world’s largest and most efficient gas turbines, capable of converting fuel and air into electricity at more than 62 percent power-plant efficiency when matched with a steam turbine generator, a setup known as combined cycle. By comparison, today’s simple cycle power plants (gas turbine generator only) operate with efficiencies ranging between 33 and 44 percent depending on the size and model.
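Why pairing a gas turbine with a steam turbine raises efficiency so sharply can be shown with the textbook, loss-free relation for a combined cycle. The gas turbine converts a fraction of the fuel energy to work, and the steam "bottoming" cycle recovers part of the exhaust heat the gas turbine rejects. The function name and the 42 percent and 35 percent inputs below are illustrative assumptions, not GE design figures.

```python
def combined_cycle_efficiency(eta_gas: float, eta_bottoming: float) -> float:
    """Idealized combined-cycle efficiency (no extra losses modeled).

    The gas turbine turns a fraction eta_gas of the fuel energy into work.
    The remaining (1 - eta_gas) leaves as exhaust heat, of which the steam
    bottoming cycle converts a fraction eta_bottoming into more work.
    """
    if not (0.0 <= eta_gas <= 1.0 and 0.0 <= eta_bottoming <= 1.0):
        raise ValueError("efficiencies must lie between 0 and 1")
    return eta_gas + (1.0 - eta_gas) * eta_bottoming


# Hypothetical inputs: a 42% simple-cycle gas turbine (inside the 33-44% range
# quoted above) and a steam cycle recovering 35% of the exhaust heat.
eta = combined_cycle_efficiency(0.42, 0.35)
print(f"{eta:.1%}")  # 62.3%
```

Under these assumed inputs the combination lands just above 62 percent, even though neither machine alone comes close to that figure.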

GE is constantly searching for ways to improve the performance and overall value of its products. A single percent increase in gas turbine efficiency equates to millions of dollars in saved fuel costs for GE’s customers and tons of carbon dioxide spared from the atmosphere. For a 1 gigawatt power plant, a 1 percent improvement in efficiency saves 17,000 metric tons of carbon dioxide emissions a year, equivalent to removing more than 3,500 vehicles from the road. Applying such an efficiency gain across the US combined-cycle fleet (approximately 200 gigawatts) would save about 3.5 million metric tons of carbon dioxide each year.
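The fleet-wide figure follows from scaling the per-gigawatt number linearly. This sketch uses only the figures quoted above; the linear scaling is an assumption, since it treats every plant in the fleet as running like the 1 gigawatt example.

```python
# Figures quoted in the article
CO2_SAVED_PER_GW_T = 17_000  # metric tons CO2 per year, 1 GW plant, 1 percent gain
VEHICLES_PER_GW = 3_500      # vehicle equivalent quoted for that 1 GW plant
US_FLEET_GW = 200            # approximate US combined-cycle capacity


def fleet_co2_savings_t(capacity_gw: float, gain: float = 1.0) -> float:
    """Annual CO2 saved (metric tons), scaling the per-GW figure linearly."""
    return CO2_SAVED_PER_GW_T * capacity_gw * gain


fleet = fleet_co2_savings_t(US_FLEET_GW)
per_vehicle = CO2_SAVED_PER_GW_T / VEHICLES_PER_GW
print(f"fleet: {fleet / 1e6:.1f} Mt/yr, implied per vehicle: {per_vehicle:.1f} t/yr")
```

The straight multiplication gives 3.4 million metric tons, consistent with the rounded "about 3.5 million" above, and implies just under 5 metric tons of CO2 per vehicle per year.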

In 2015, the search for efficiency gains led GE to tackle one of the most complex problems in science and engineering—instabilities in gas turbine combustors. The journey led the company to the Titan supercomputer at the Oak Ridge Leadership Computing Facility (OLCF), a US Department of Energy (DOE) Office of Science User Facility located at DOE’s Oak Ridge National Laboratory.

Balancing act

Simultaneously increasing the efficiency and reducing the emissions of natural gas-powered turbines is a delicate balancing act. It requires an intricate understanding of these massive energy-converting machines—their materials, aerodynamics, and heat transfer, as well as how effectively they combust, or burn, fuel. Of all these factors, combustion physics is perhaps the most complex.

In an H-class gas turbine, combustion takes place within 6-foot-long chambers at high temperature and pressure. Much like a car engine has multiple cylinders, GE’s H-class turbines possess a ring of 12 or 16 combustors, each capable of burning nearly three tons of fuel and air per minute at firing temperatures exceeding 1,500 degrees Celsius. These extreme conditions make combustion one of the most difficult processes to test at GE’s gas turbine facility in Greenville, South Carolina.

At higher temperatures, gas turbines produce more electricity. They also produce more emissions, such as nitrogen oxides (NOx), a group of reactive gases that are regulated at the state and federal levels. To reduce emissions, GE’s Dry Low NOx combustion technology mixes fuel with air before burning it in the combustor, avoiding the local hot spots where most NOx forms.

“When the fuel and air are nearly perfectly mixed, you have the lowest emissions,” said Jin Yan, manager of the computational combustion lab at GE’s Global Research Center. “Imagine 20 tractor-trailers full of combustible fuel–air mixture. One combustor burns that amount every minute. In the process, it produces less than a tea cup (several ounces) of NOx emissions.”
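Yan's tractor-trailer image can be checked with rough arithmetic. The sketch assumes the "nearly three tons" per combustor are metric tons (3,000 kg), treats the fuel-air mixture as ambient air at about 1.2 kg per cubic meter, and ignores compression inside the machine; all three are simplifying assumptions, not GE data.

```python
AIR_DENSITY_KG_M3 = 1.2             # assumption: ambient air near sea level, ~20 C
MASS_PER_COMBUSTOR_KG_MIN = 3_000   # "nearly three tons" per minute, taken as metric tons
TRAILERS = 20                       # from Yan's comparison


def turbine_flow_t_per_min(n_combustors: int, per_combustor_t: float = 3.0) -> float:
    """Total fuel-air throughput of one turbine (metric tons per minute)."""
    return n_combustors * per_combustor_t


# Volume that one minute of one combustor's flow would occupy at ambient conditions
volume_m3 = MASS_PER_COMBUSTOR_KG_MIN / AIR_DENSITY_KG_M3

print(turbine_flow_t_per_min(12), turbine_flow_t_per_min(16))  # 36.0 48.0
print(f"{volume_m3:.0f} m^3/min, {volume_m3 / TRAILERS:.0f} m^3 per trailer")
```

About 2,500 cubic meters per minute works out to roughly 125 cubic meters per trailer, on the order of a large semitrailer's cargo space, and a full ring of 12 or 16 combustors handles 36 to 48 tons per minute.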

Such precise burning can lead to another problem: an unstable flame. Inside a combustor, flame instabilities can cause deafening acoustic pulsations, essentially pressure waves that reverberate through the chamber. These pulsations can affect turbine performance. At their worst, they can wear out the machinery in a matter of minutes. For this reason, whenever a new pulsation is detected, understanding its cause and predicting whether it might affect future products becomes a high priority for the design team.
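A classic rule of thumb for when a flame drives such pulsations is the Rayleigh criterion: if fluctuations in the flame's heat release rise and fall roughly in phase with the pressure fluctuations, they feed energy into the acoustic wave; if they are out of phase, they damp it. The article does not say which analysis GE used. This is a textbook illustration on synthetic sine waves, and the 1,000 Hz frequency and phase offsets are arbitrary, hypothetical choices.

```python
import math


def rayleigh_index(p_fluct, q_fluct):
    """Time average of p'(t) * q'(t) for uniformly sampled signals.

    Positive: heat-release fluctuations tend to drive the pressure oscillation.
    Negative: they tend to damp it.
    """
    if len(p_fluct) != len(q_fluct) or not p_fluct:
        raise ValueError("signals must be non-empty and the same length")
    return sum(p * q for p, q in zip(p_fluct, q_fluct)) / len(p_fluct)


def sampled_tone(freq_hz, phase_rad, n, dt):
    """Unit-amplitude sine wave sampled n times at interval dt."""
    return [math.sin(2 * math.pi * freq_hz * k * dt + phase_rad) for k in range(n)]


# Hypothetical 1,000 Hz oscillation, sampled every 10 microseconds for 10 periods.
f, dt, n = 1_000.0, 1e-5, 1_000
p = sampled_tone(f, 0.0, n, dt)
q_in_phase = sampled_tone(f, math.pi / 6, n, dt)          # 30-degree phase offset
q_out_of_phase = sampled_tone(f, 2 * math.pi / 3, n, dt)  # 120-degree phase offset

print(rayleigh_index(p, q_in_phase) > 0)       # True: driving
print(rayleigh_index(p, q_out_of_phase) < 0)   # True: damping
```

For unit sine waves averaged over whole periods the index equals half the cosine of the phase offset, which is why a modest shift in flame timing can flip a combustor from damping to driving.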

Testing limits

In 2014, one such pulsation caught researchers’ attention during a full-scale test of a gas turbine. The test revealed a combustion instability that hadn’t been observed during combustor development testing. The company determined the instability levels were acceptable for sustained operation and would not affect gas turbine performance. But GE researchers wanted to understand its cause, an investigation that could help them predict how such pulsations might manifest in future designs.

The company suspected the pulsations stemmed from an interaction between adjacent combustors, but it had no physical test capable of confirming this hypothesis. Because of facility airflow limits, GE can test only one combustor at a time. Even if the company could test multiple combustors, limited optical access to the flame and current camera technology would restrict the researchers’ ability to understand and visualize the causes of high-frequency flame instabilities. So GE placed a bet on high-fidelity modeling and simulation to reveal what the physical tests could not.

The company asked its team of computational scientists, led by Yan, to see if it could reproduce the instability virtually using high-performance computers. GE also asked Yan’s team to use the resulting model to determine whether the pulsations might manifest in a new GE engine incorporating DOE-funded technology and due to be tested in late 2015, less than a year away. GE then challenged Yan’s team, in collaboration with the software company Cascade Technologies, to deliver these first-of-a-kind results before the 2015 test to demonstrate a truly predictive capability.

“We didn’t know if we could do it,” Yan said. “First, we needed to replicate the instability that appeared in the 2014 test. This required modeling multiple combustors, something we had never done. Then we needed to predict through simulation whether that instability would appear in the new turbine design and at what level.”

Such enhanced modeling and simulation capabilities held the potential to dramatically accelerate future product development cycles and could provide GE with new insights into turbine engine performance earlier in the design process instead of after testing physical prototypes.

But GE faced another hurdle. To meet the challenge time frame, Yan and his team needed computing power that far exceeded GE’s internal capabilities.

A computing breakthrough

In the spring of 2015, GE turned to the OLCF for help. Through the OLCF’s Accelerating Competitiveness through Computational Excellence (ACCEL) industrial partnerships program, Yan’s team received a Director’s Discretionary allocation on Titan, a Cray XK7 system capable of 27 petaflops, or 27 quadrillion calculations per second.

Yan’s team began working closely with Cascade Technologies, based in Palo Alto, California, to scale up Cascade’s CHARLES code. CHARLES is a high-fidelity flow solver for large eddy simulation, a turbulence-modeling approach grounded in the Navier-Stokes equations of fluid flow that directly computes the large turbulent eddies and models the effect of the smallest ones. Using this framework, CHARLES can capture the high-speed mixing of air and fuel within complex combustor geometries during combustion. The code’s efficient algorithms make it ideally suited to leverage leadership-class supercomputers and produce petabytes of simulation data.
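The core idea of large eddy simulation is a split of the flow into a resolved, large-scale part and a small-scale "subgrid" remainder whose effect must be modeled. The sketch below shows only that split, using a simple moving-average (top-hat) filter on a synthetic one-dimensional signal. It is not CHARLES's method: a real LES applies the filter to the governing equations and adds a subgrid closure model. The signal and filter width are hypothetical choices made so the small-scale wave spans exactly one filter width.

```python
import math


def box_filter(u, width):
    """Top-hat (moving-average) filter on a periodic 1D signal; width must be odd."""
    if width < 1 or width % 2 == 0:
        raise ValueError("width must be a positive odd integer")
    half = width // 2
    n = len(u)
    return [sum(u[(i + j) % n] for j in range(-half, half + 1)) / width for i in range(n)]


n = 270
x = [2 * math.pi * i / n for i in range(n)]
large = [math.sin(xi) for xi in x]             # large-scale motion: 1 wave across the domain
small = [0.3 * math.sin(30 * xi) for xi in x]  # small-scale motion: 9 samples per wave
u = [a + b for a, b in zip(large, small)]

resolved = box_filter(u, 9)                          # resolved (filtered) field
subgrid = [ui - ri for ui, ri in zip(u, resolved)]   # part a subgrid model must represent

print(max(abs(r - a) for r, a in zip(resolved, large)) < 0.01)  # True
print(max(abs(s - b) for s, b in zip(subgrid, small)) < 0.01)   # True
```

The filter keeps the large wave almost untouched while removing the small one, which ends up entirely in the subgrid remainder.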

Cascade’s CHARLES solver can trace its technical roots back to Stanford University’s Center for Turbulence Research and research efforts funded through DOE’s Advanced Simulation and Computing program. Many members of Cascade’s engineering team are alumni of these programs. Although the CHARLES solver was developed to tackle problems like high-fidelity jet engine simulation and supersonic jet noise prediction, it had never been applied to predict combustion dynamics in a configuration as complex as a GE gas turbine combustion system.

Using 11.2 million hours on Titan, members of Yan’s team and Cascade’s engineering team executed simulation runs that harnessed 8,000 and 16,000 cores at a time, achieving a 30-fold speedup over the original code. Cascade’s Sanjeeb Bose, an alumnus of DOE’s Computational Science Graduate Fellowship Program, made significant contributions to the application development effort, upgrading CHARLES’ reacting flow solver to run five times faster on Titan’s CPUs.
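A rough sense of the wall-clock time involved comes from dividing the allocation by the core counts. This assumes the 11.2 million hours are core-hours counted on the same cores as the 8,000 and 16,000 figures (the facility's accounting units may differ) and, hypothetically, that all of them were spent at a single core count.

```python
ALLOCATION_HOURS = 11.2e6  # "11.2 million hours" from the article
CORE_COUNTS = (8_000, 16_000)


def wall_clock_days(core_hours: float, cores: int) -> float:
    """Days of continuous running if every core-hour were spent at one core count."""
    return core_hours / cores / 24


for cores in CORE_COUNTS:
    print(f"{cores:>6} cores: {wall_clock_days(ALLOCATION_HOURS, cores):.0f} days")
```

Under these assumptions the allocation corresponds to roughly 58 days of continuous running at 8,000 cores or about 29 days at 16,000, in line with Citeno's remark that Titan delivered answers in about a month.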

Leveraging CHARLES’ massively parallel grid generation capability, a new software feature developed by Cascade, Yan’s team produced a fine-mesh grid composed of nearly 1 billion cells. In each cell, the simulation captured microsecond-scale snapshots of the air–fuel mix during turbulent combustion, including molecular diffusion, chemical reactions, heat transfer, and energy exchange.
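Running a billion-cell grid on thousands of cores means giving each core its own share of the cells. The article does not describe how CHARLES distributes its mesh; production unstructured solvers typically use graph or geometric partitioners to keep neighboring cells together and limit communication. The naive contiguous split below, with a hypothetical `block_partition` helper and the cell count rounded to exactly 1 billion, only illustrates the per-core workload.

```python
def block_partition(n_cells: int, n_ranks: int, rank: int) -> range:
    """Contiguous, near-even share of cell indices owned by one rank."""
    if not 0 <= rank < n_ranks:
        raise ValueError("rank out of range")
    base, extra = divmod(n_cells, n_ranks)
    start = rank * base + min(rank, extra)           # earlier ranks absorb the remainder
    stop = start + base + (1 if rank < extra else 0)
    return range(start, stop)


N_CELLS = 1_000_000_000  # "nearly 1 billion cells", rounded for illustration
for ranks in (8_000, 16_000):
    print(ranks, len(block_partition(N_CELLS, ranks, 0)))  # cells owned by rank 0
```

At 16,000 cores each core would own about 62,500 cells, and about 125,000 at 8,000 cores.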

Working with OLCF visualization specialist Mike Matheson, Yan’s team developed a workflow to analyze its simulation data and view the flame structure in high definition. By early summer, the team had made enough progress to view the results: the first-ever multicombustor dynamic instability simulation of a GE gas turbine. “It was a breakthrough for us,” Yan said. “We successfully developed a model that was able to repeat what we observed in the 2014 test.”

The new capability gave GE researchers a clearer picture of the instability and its causes that couldn’t be obtained otherwise. Beyond reproducing the instability, the advanced model allowed the team to slow down, zoom in, and observe combustion physics at the sub-millisecond level, something no empirical method can match.
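One simple way to pull a pulsation frequency out of such time-resolved data is a discrete Fourier transform of the pressure history at a probe point. The sketch is an assumption about how such data might be analyzed, not GE's workflow: the probe signal, its 1,200 Hz tone, and the 20-microsecond sampling interval are all hypothetical. Sampling must be fine enough that the pulsation period spans more than two samples (the Nyquist limit). Production tools would use an FFT such as `numpy.fft.rfft` instead of this direct sum.

```python
import cmath
import math


def dominant_frequency(signal, dt):
    """Frequency (Hz) of the strongest DFT component between zero and Nyquist."""
    n = len(signal)
    mean = sum(signal) / n
    x = [s - mean for s in signal]  # remove the steady (mean) pressure
    best_k, best_mag = 1, -1.0
    for k in range(1, n // 2):
        coeff = sum(x[j] * cmath.exp(-2j * math.pi * k * j / n) for j in range(n))
        if abs(coeff) > best_mag:
            best_k, best_mag = k, abs(coeff)
    return best_k / (n * dt)  # bin index -> Hz


dt = 20e-6  # hypothetical 20-microsecond sampling interval (50 kHz)
n = 1_000   # 20 ms of data, giving 50 Hz frequency resolution
probe = [
    101_325.0                                            # mean pressure, Pa
    + 500.0 * math.sin(2 * math.pi * 1_200.0 * k * dt)   # hypothetical pulsation
    + 100.0 * math.sin(2 * math.pi * 300.0 * k * dt)     # weaker, unrelated tone
    for k in range(n)
]
print(dominant_frequency(probe, dt))
```

The strongest component recovered is the 1,200 Hz tone, even with the weaker 300 Hz tone present.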

“These simulations are actually more than an experiment,” Citeno said. “They provide new insights which, combined with human creativity, allow for opportunities to improve designs within the practical product cycle.”

With the advanced model and new simulation methods in hand, Yan’s team neared the finish line. Applying its new methods to the 2015 gas turbine, the team predicted a low instability level in the latest design that was acceptable for operation and would not affect performance. These results were affirmed during the full-scale gas turbine test, validating the predictive accuracy of the new simulation methods developed on Titan. “It was very exciting,” Yan said. “GE’s leadership put a lot of trust in us.”

With the computational team’s initial doubts now a distant memory, GE entered a world of new possibilities for evaluating gas turbine engines.

The path forward

Having validated its high-fidelity model and the predictive accuracy of its new simulation methods, GE can now better integrate simulation directly into its product design cycle. “It’s opened up our design space,” Yan said. “We can look at all kinds of ideas we never thought about before. The number of designs we can evaluate has grown substantially.”

Citeno projects that, coupled with advancements in other aspects of gas turbine design, the end result will be a full percentage-point gain in efficiency. This is important to GE’s and DOE’s goal to produce a combined-cycle power plant that operates at 65 percent efficiency, a leap that translates to billions of dollars a year in fuel savings for customers. A 1 percent efficiency gain across the US combined-cycle fleet is estimated to save more than $11 billion in fuel over the next 20 years.
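Because fuel burned per unit of electricity scales as one over efficiency, a gain of one percentage point saves a somewhat larger share of fuel than one percent. This sketch uses the 62 percent figure quoted earlier as an illustrative baseline. The per-year number is a simple average of the $11 billion estimate with no discounting or fuel-price changes.

```python
def fuel_saving_fraction(eta_old: float, eta_new: float) -> float:
    """Fractional cut in fuel burned for the same electrical output."""
    if not 0 < eta_old <= eta_new <= 1:
        raise ValueError("need 0 < eta_old <= eta_new <= 1")
    return 1.0 - eta_old / eta_new


print(f"{fuel_saving_fraction(0.62, 0.63):.1%}")  # 1.6%
print(f"{fuel_saving_fraction(0.62, 0.65):.1%}")  # 4.6%
print(11e9 / 20 / 1e6, "million USD per year")    # simple average of the $11 billion estimate
```

On this basis, moving from 62 to 63 percent cuts fuel use by about 1.6 percent, reaching the 65 percent goal would cut it by about 4.6 percent, and the fleet estimate averages roughly $550 million a year.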

“The world desperately needs higher-efficiency gas turbines because the end result is millions of tons of carbon dioxide that’s not going into the atmosphere,” said Citeno, noting that in the last 2 years, more than 50 percent of gas turbines manufactured at GE’s Greenville plant were exported to other countries. “The more efficient the technology becomes, the faster it gets adopted globally, which further helps to improve the world’s carbon footprint.”

Internally, GE’s experience with the OLCF’s world-class computing resources and expertise helps the company understand and evaluate the value of larger-scale high-performance computing, supporting the case for future investment in GE’s in-house capabilities. “Access to OLCF systems allows us to see what’s possible and de-risk our internal computing investment decisions,” Citeno said. “We can show concrete examples to our leadership of how advanced modeling and simulation is driving new product development instead of hypothetical charts.”

Building on its success using Titan, GE is continuing to develop its combustion simulation capabilities under a 2016 allocation awarded through the DOE Office of Advanced Scientific Computing Research (ASCR) Leadership Computing Challenge, or ALCC, program. As part of the project, GE’s vendor partner Cascade is continuing to enhance its CHARLES code so that it can take advantage of Titan’s GPU accelerators.

“A year ago these were gleam-in-the-eye calculations,” Citeno said. “We wouldn’t do them because we couldn’t do them in a reasonable time frame to affect product design. Titan collapsed that, compressing our learning cycle by a factor of 10-plus and giving us answers in a month that would have taken a year with our own resources.”

Oak Ridge National Laboratory is supported by the US Department of Energy’s Office of Science. The single largest supporter of basic research in the physical sciences in the United States, the Office of Science is working to address some of the most pressing challenges of our time. For more information, please visit science.energy.gov.