ORNL’s Bronson Messer assists local and international astrocomputing students during a code session workshop.

Photo by Trudy E. Bell/UC-HiPACC

ORNL researcher provides expertise to astrocomputing students in San Diego

High-performance computing (HPC) simulations have conquered many challenges surrounding supernovas. But like trying to control the energy produced from a dying star, controlling the power consumption of a supercomputer is not without challenges.

Oak Ridge National Laboratory’s (ORNL’s) Bronson Messer shared his knowledge on this subject during the 2014 International Summer School on AstroComputing (ISSAC), sponsored by the University of California’s High-Performance AstroComputing Center (UC-HiPACC), and held at the San Diego Supercomputer Center on the campus of UC San Diego, from July 21 to August 1.

Accompanying Messer were University of Tennessee (UT) students Austin Harris and Ryan Landfield, who work in ORNL’s Physics Division; and Konstantin Yakunin, a UT physics postdoctoral student working at the Joint Institute for Heavy Ion Research at ORNL. Also joining Messer was University of California–Santa Cruz student Elizabeth Lovegrove, noted for her work with Stan Woosley, a longstanding principal investigator in the Innovative and Novel Computational Impact on Theory and Experiment (INCITE) program.

The aim of this year’s event, titled “Neutrino and Nuclear Astrophysics,” was to gather students from around the world to examine issues involving core-collapse supernovas, Big Bang nucleosynthesis, and other high-energy nuclear astrophysical phenomena. Each ISSAC student was given access to San Diego’s Gordon supercomputer to participate in hands-on daily code sessions.

“We want to give these students the opportunity to see what doing computational astrophysics is going to look like in their future career,” said Messer, a senior research and development staff member at the Oak Ridge Leadership Computing Facility (OLCF).

Messer played an invaluable role for the students, offering personal guidance during several code session workshops and presenting a lecture on how astrophysics problems are solved today using HPC tools, how those tools work, and how they are expected to work in the future.

In his lecture, “Mapping Multi-Physics Problems to Today’s Architectures,” he discussed how supercomputers are being used to solve these complex problems through simulations. Understanding how the universe works is clearly one such complex problem, and digging into it requires an enormous amount of computational resources.

In fact, over the last 5 years or so, nearly 300 million INCITE hours have been awarded to nuclear astrophysics—almost 10 percent of the total amount of time provided to all fields of science under the program. Why so much?

“We formulate large, important problems. We do that consistently,” Messer said. “We’ve been able to build simulation models that are big and important, and we can demonstrate the ability to efficiently exploit these large computers. That’s why we get such a large chunk.”

This explanation, however, raises a question: As the age of the exaflop gets closer, will astrophysics be able to retain such a large share of computing resources? Messer’s answer: Only if it continues to solve the big problems.

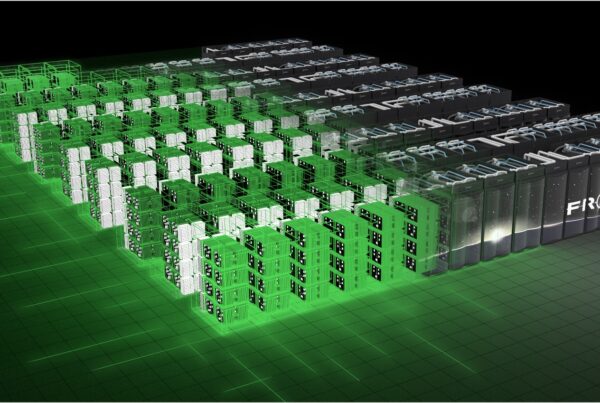

That answer is contingent not only on how efficiently these machines are being used but also, and perhaps more importantly, on how they are being designed. Messer explained in his lecture that individual processors are no longer getting faster, and Dennard scaling, a 1970s observation that power density stays roughly constant as transistors shrink, which for decades let chips run faster without drawing more power, is no longer the law of the supercomputing land. It’s a power issue, he said. To accommodate more cores, transistor sizes continue to get smaller, but that does not mean a CPU’s performance per watt is increasing. This is a big problem for simulation sciences.

Messer used Titan’s predecessor as an example: Jaguar drew 7 megawatts, so 1 Jaguar hour consumed 7 megawatt-hours of electricity, an amount of energy equal to about 6 tons of exploding TNT. To run just one supernova simulation requires 0.6 Jaguar months, which equals approximately 2,600 tons of TNT, a “preposterous” amount, Messer said.
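The TNT comparison can be checked with a few lines of arithmetic. The sketch below is not from Messer's lecture; it assumes the standard TNT equivalent of 4.184 gigajoules per ton and a 30-day month, and the function name `tons_tnt` is only illustrative.

```python
# Back-of-the-envelope check of the Jaguar energy figures quoted above.
JAGUAR_POWER_W = 7e6           # 7 megawatts, the figure given in the article
JOULES_PER_TON_TNT = 4.184e9   # standard TNT equivalent (assumed convention)
HOURS_PER_MONTH = 30 * 24      # assumption: a 30-day month


def tons_tnt(power_watts: float, hours: float) -> float:
    """Energy used at constant power over `hours`, expressed in tons of TNT."""
    joules = power_watts * hours * 3600.0  # watts x seconds = joules
    return joules / JOULES_PER_TON_TNT


one_hour = tons_tnt(JAGUAR_POWER_W, 1.0)
one_simulation = tons_tnt(JAGUAR_POWER_W, 0.6 * HOURS_PER_MONTH)

print(f"1 Jaguar hour:     {one_hour:.1f} tons of TNT")
print(f"0.6 Jaguar months: {one_simulation:.0f} tons of TNT")
```

Under those assumptions the results land at roughly 6 tons per hour and roughly 2,600 tons per simulation, consistent with the figures quoted in the lecture.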

And that is exactly why Titan added GPUs to its architecture—this change resulted in a 1,000 percent increase in peak performance over Jaguar with a mere 20 percent increase in peak power consumption.
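To see what those two percentages imply for efficiency, here is a minimal sketch that takes the article's figures literally (a 1,000 percent increase read as 11 times the original, a 20 percent increase as 1.2 times). The variable names are illustrative, and the result is only as precise as the rounded percentages.

```python
# Titan vs. Jaguar, taking the article's percentages at face value.
perf_increase_pct = 1000.0   # increase in peak performance
power_increase_pct = 20.0    # increase in peak power consumption

# Convert "percent increase" to a multiplier on the original value.
perf_ratio = 1.0 + perf_increase_pct / 100.0    # 11x
power_ratio = 1.0 + power_increase_pct / 100.0  # 1.2x

# Gain in peak performance per watt is the ratio of the two multipliers.
perf_per_watt_gain = perf_ratio / power_ratio

print(f"Peak performance per watt: about {perf_per_watt_gain:.1f}x Jaguar")
```

Read this way, Titan's peak performance per watt comes out at roughly nine times Jaguar's.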

But really, according to Messer, the key to meeting supercomputing power needs going forward is finding as much parallelism as possible. As long as big jobs can be broken down into many small jobs and distributed among the processors, the kind of processor used, whether an Intel Xeon Phi, CPU, or GPU, will not make much difference.
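The idea of breaking one big job into many small ones can be sketched in a few lines. This is a toy illustration, not code from the lecture or from any astrophysics package: `small_job` is a hypothetical stand-in for per-zone physics, and Python threads are used only to show the structure of splitting, distributing, and combining, not to demonstrate a real speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def split(items, n_chunks):
    """Split items into n_chunks contiguous pieces of near-equal size."""
    size, extra = divmod(len(items), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        # The first `extra` chunks each take one additional item.
        end = start + size + (1 if i < extra else 0)
        chunks.append(items[start:end])
        start = end
    return chunks


def small_job(zones):
    """Hypothetical stand-in for per-zone physics: a sum of squares."""
    return sum(z * z for z in zones)


def run_decomposed(zones, n_workers=4):
    """Run one small job per chunk, then combine the partial results."""
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(small_job, split(zones, n_workers)))
    return sum(partials)


zones = list(range(1000))
total = run_decomposed(zones)
print(total == small_job(zones))  # decomposed and serial results agree
```

Because each chunk is processed independently and the partial results are simply added, the same decomposition would map onto whatever processors are available, which is the point Messer makes about hardware mattering less than parallelism.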

Although the architectural details surrounding cost and energy still pose a challenge, there is much to be excited about because, Messer said, “Stellar astrophysics is rife with unrealized parallelism.”

—Jeremy Rumsey