Since the Oak Ridge Leadership Computing Facility’s (OLCF’s) Titan supercomputer began accepting its full suite of users on May 31st, science has been picking up steam.

With its hybrid architecture featuring traditional CPUs alongside GPUs, Titan represents a revolutionary paradigm in high-performance computing’s quest to reach the exascale with only marginal increases in power consumption for the world’s leading systems.

But while peak-performance numbers approaching 30 petaflops are indeed jaw-dropping, Titan is only as powerful as the applications that use its unique architecture to solve some of our greatest scientific challenges.

“The real measure of a system like Titan is how it handles working scientific applications and critical scientific problems,” said Buddy Bland, project director at the OLCF. “The purpose of Titan’s incredible power is to advance science, and the system has already shown its abilities on a range of important applications.”

In an effort to ensure that users could make the most of Titan when it came online and get the most science out of their allocations, the OLCF launched the Center for Accelerated Application Readiness (CAAR), a collaboration among application developers; Titan’s manufacturer, Cray; GPU manufacturer NVIDIA; and the OLCF’s scientific computing experts. CAAR identified five applications that might be able to harness the potential of the GPUs quickly and realize significant improvements in performance, fidelity, or both.

CAAR applications included the combustion code S3D; LSMS, which studies magnetic systems; LAMMPS, a bioenergy and molecular dynamics application; Denovo, which investigates nuclear reactors; and CAM-SE, a code that explores climate change.

These codes will greatly benefit as they scale up to take advantage of Titan’s unprecedented computing power. For instance, the S3D code will move beyond modeling simple fuels to tackle complex, larger-molecule hydrocarbon fuels such as isooctane (a surrogate for gasoline) and biofuels such as ethanol and butanol, helping America to achieve greater energy efficiency through improved internal combustion engines. And the climate change application CAM-SE will be able to increase the simulation speed to between 1 and 5 years per computing day, compared to just three months per computing day on Jaguar, Titan’s Cray XT5 predecessor. This speed increase is needed to make ultra-high-resolution, full-chemistry simulations feasible over decades and centuries and will allow researchers to quantify uncertainties by running multiple simulations.

One code in particular is already taking off. LAMMPS, a molecular dynamics code that simulates the movement of atoms through time and is a powerful tool for research in biology, materials science, and nanotechnology, has seen more than a sevenfold speedup on Titan compared with its performance on the CPU-only configuration of the system (before the GPUs were available).

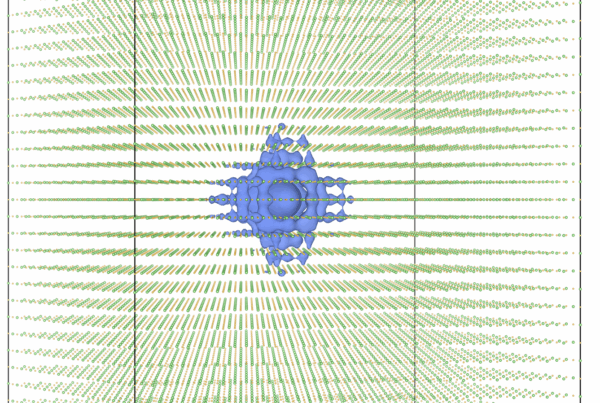

WL-LSMS, another CAAR application, has also proven to be an excellent match for Titan’s hybrid architecture. It calculates the magnetic properties of promising materials, including their Curie temperatures, from basic laws of physics rather than from models that must incorporate approximations. WL-LSMS ran 3.8 times faster on the GPU-enabled Cray XK7 Titan than on its CPU-only XE6 predecessor, on a problem that consumed 18,600 of Titan’s compute nodes, 88 short of the full machine. Equally impressive is the fact that even with this dramatic increase in performance, the GPU version of Titan also consumed 7.3 times less energy than the CPU-only incarnation. This combination of accelerated performance and reduced energy consumption is precisely what the addition of the GPUs was intended to accomplish.

All of the CAAR applications achieved significant speedups using Titan’s GPUs, paving the way for the rest of Titan’s users, who are now beginning to ramp up their individual codes to scales never dreamed of just a few years ago.

To GPU or Not to GPU

ORNL had the option of building an equivalent Cray system that did not incorporate GPUs, using those 18,688 sockets instead to hold additional 16-core CPUs. Essentially, whereas each Titan node contains one NVIDIA Kepler GPU plus an AMD 16-core Opteron CPU, the other option would have simply replaced the GPU with another Opteron CPU. The resulting system would have contained nearly 600,000 processing cores, but it would nevertheless have paled in comparison to Titan.

The benefits of incorporating the GPUs are becoming more apparent with each application development, validating the OLCF’s philosophy and giving computational scientists unprecedented firepower with which to tackle some of nature’s largest, most complex questions.

But as with any sea change, Titan’s success is about more than the addition of the GPUs. Titan’s hybrid architecture presented obstacles on multiple fronts, obstacles the OLCF overcame in time for Titan’s official unveiling in June of 2013.

“We’ve made several improvements that don’t concern the GPUs but do affect the bottom line,” said OLCF Director of Science Jack Wells.

Take the individual codes, for instance. Many had to be restructured so that they could efficiently offload chunks of work to the GPUs, said Wells. In the case of S3D, the combustion code, that meant taking an MPI-based code and adding OpenMP threading. “When we did that, many codes saw a twofold speedup just by improving the code on the old machine,” said Wells.

Beyond the codes, the OLCF also reinvigorated large portions of the entire computing ecosystem. For instance, the center upgraded its Spider file system, now known as Atlas, and more than doubled the number of routers connecting Titan to Atlas compared with the number that connected Jaguar to Spider. The OLCF also took a close look at where the routers sat in the network, spreading them out so that I/O and communication traffic would conflict as little as possible.

Together with the addition of the GPUs, these ecosystem improvements represent a paradigm shift in high-performance computing. While the challenges that remain are great, they are dwarfed by the promise already evident after Titan’s first few months of use.