Ushering in the next generation of parallel file systems

Already one of the largest parallel file systems on the planet, Spider—ORNL’s Lustre-based parallel file system—is about to get even bigger.

Since 2008, Spider has been the primary production file system providing disk space for Jaguar, and now Titan, as well as for other OLCF systems such as the LENS visualization cluster, the Smoky development cluster, and the lab’s GridFTP servers. Spider serves these systems by sharing data across them, providing file access to more than 26,000 compute nodes (clients).

Spider was the first of its kind, a center-wide shared resource that served all major OLCF platforms while providing the highest performance with simultaneous accessibility.

But Jaguar’s recent upgrade to Titan drastically increased the input/output (I/O) demands on Spider. As a result, Spider is now becoming Spider II. The new system will feature 32 petabytes of storage and an aggregate bandwidth of 1 terabyte per second, allowing it to better keep pace with Titan.

“Once in production we will be providing OLCF users with more than 1 terabyte per second file system performance at the top end,” said Sarp Oral, the task lead for File and Storage Systems projects in the Technology Integration Group, within the National Center for Computational Sciences (NCCS). “At that speed we expect Spider II to be safely in league with the top three parallel file systems in the world.”

Acceptance testing for Spider II’s block storage subsystem is now complete, and the file system is expected to be production-ready in fall 2013.

Arachnid anatomy

Assembling Spider II required an OLCF-wide effort. A diverse team that spanned several OLCF groups, such as Technology Integration and High-Performance Computing Operations, came together with ORNL procurement to work on the hardware acquisition, deployment, installation, and software updates and integration.

OLCF staff members (Sarp Oral, David Dillow, Dustin Leverman, Douglas Fuller, Ross Miller, Feiyi Wang, James Simmons, Jason Hill, Willy Besancenez, Jim Rogers, and Sudharshan Vazhkudai) are working closely with partners DataDirect Networks (DDN), Cray, Mellanox, and Dell to bring Spider II online.

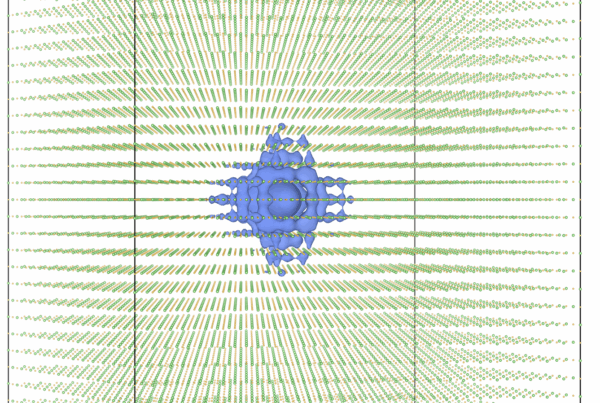

When construction was complete, Spider II occupied 672 square feet. Its four rows of cabinets house a clustered file system architecture: I/O servers sit in front of, and manage, high-end storage arrays, which in turn manage Spider II’s 20,160 disks. The cluster is connected to OLCF systems via a very fast InfiniBand-based system area network. Every existing and future OLCF system will connect to this upgraded system area network, and data stored in Spider II will be instantly accessible to all of them.

With the new hardware and added space, OLCF staff were able to increase the aggregate bandwidth from 240 gigabytes per second to well over 1 terabyte per second and the total storage capacity from 10 petabytes to 32 petabytes.
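The growth factors implied by these figures can be checked with a few lines of Python. This is a minimal sketch that assumes decimal units (1 terabyte per second taken as 1,000 gigabytes per second); because the new bandwidth is "well over" 1 terabyte per second, the bandwidth ratio it computes is only a lower bound.

```python
# Figures quoted in the article. Decimal units are an assumption:
# 1 TB/s is treated as 1,000 GB/s, which understates "well over 1 TB/s".
OLD_BANDWIDTH_GBPS = 240
NEW_BANDWIDTH_GBPS = 1_000
OLD_CAPACITY_PB = 10
NEW_CAPACITY_PB = 32


def ratio(new: float, old: float) -> float:
    """Return how many times larger `new` is than `old`."""
    return new / old


bandwidth_gain = ratio(NEW_BANDWIDTH_GBPS, OLD_BANDWIDTH_GBPS)
capacity_gain = ratio(NEW_CAPACITY_PB, OLD_CAPACITY_PB)

print(f"bandwidth: {bandwidth_gain:.2f}x")  # bandwidth: 4.17x
print(f"capacity:  {capacity_gain:.2f}x")   # capacity:  3.20x
```

Since 4.17x is a floor for bandwidth and 3.2x is exact for capacity, the numbers line up with describing Spider II as at least four times faster with roughly three times the capacity.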

“In essence,” said Oral, “Spider II is at least four times more powerful in terms of I/O performance and provides three times more capacity than the old Spider.”

However, he noted, having top-of-the-line hardware is just one aspect of a powerful system; top-of-the-line software is the other.

Spider senses

“In addition to offering the newest and most capable hardware, we wanted Spider II to run the latest and greatest version of Lustre,” said Oral. “Lustre 2.4 will improve our scalability and metadata performance and will also provide us with unique features which we wanted and worked for quite some time to get.”

Lustre is the premier open-source software for high-performance computing parallel file systems, and its newest version, Lustre 2.4, offers major improvements. Because 2.4 will be the latest maintenance branch, it should also be especially stable.

For example, the maximum number of object storage targets (OSTs) across which a single shared file can be striped will increase from 160 to 2,000. The distributed namespace enhancement will allow a single namespace to span multiple metadata servers, spreading metadata load so that more users can be served at once and improving metadata performance and scalability. The imperative recovery feature will cut full-scale recoveries for Titan down to just a few minutes—a huge reduction from previous times.
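To see why the per-file limit on object storage targets (OSTs) matters for a single shared file, the sketch below models round-robin (RAID-0 style) striping, the way Lustre distributes a file's data across OSTs: consecutive fixed-size stripes go to successive OSTs in the file's layout. The function names and the 1 MiB stripe size are illustrative assumptions, not Spider II's actual configuration.

```python
# Hypothetical model of round-robin striping; names and stripe size are illustrative.
STRIPE_SIZE = 1 << 20  # assumed 1 MiB stripe size


def ost_for_offset(offset: int, stripe_count: int, stripe_size: int = STRIPE_SIZE) -> int:
    """Return the index (0..stripe_count-1), within the file's layout, of the
    OST holding the byte at `offset` under round-robin striping."""
    if offset < 0 or stripe_count < 1 or stripe_size < 1:
        raise ValueError("offset must be >= 0; stripe_count and stripe_size must be >= 1")
    return (offset // stripe_size) % stripe_count


def osts_touched(file_size: int, stripe_count: int, stripe_size: int = STRIPE_SIZE) -> int:
    """Number of distinct OSTs that hold data for a file of `file_size` bytes."""
    stripes = -(-file_size // stripe_size)  # ceiling division
    return min(stripes, stripe_count)


one_tib = 1 << 40
for count in (160, 2000):
    # A 1 TiB shared file can spread across every OST its layout allows.
    print(count, osts_touched(one_tib, count), ost_for_offset(5_000 * STRIPE_SIZE, count))
```

Under this model a large shared file written by many clients at once can spread its I/O over up to 2,000 OSTs instead of 160, so more storage targets work on that one file in parallel.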

“Spider II allows our parallel file system to keep pace with the newly increased size and computational horsepower of Titan,” said Bronson Messer of the NCCS Scientific Computing Group. “The anticipated metadata improvement, in particular, should enable our users to produce and analyze the kind of large, complex datasets we anticipate being produced on Titan. Spider II should be both bigger, and better.”—by Jeremy Rumsey