Industry, academia, and government join forces to address next-generation computing challenges

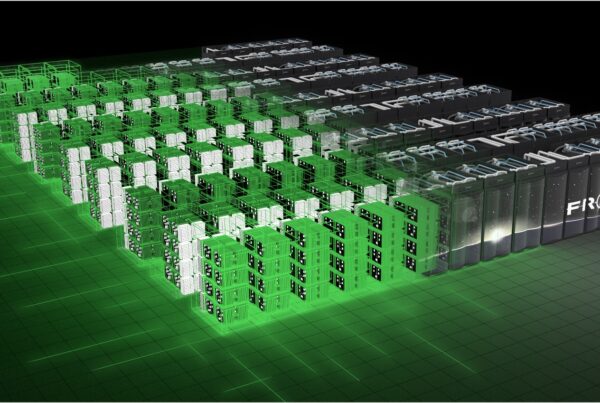

ORNL is upgrading its Jaguar supercomputer to become Titan, a Cray XK6 that will be capable of 10 to 20 petaflops by early 2013. To prepare users for impending changes to the computer’s architecture, OLCF staff held a series of workshops January 23 through 27.

“The purpose of the workshops was to provide an opportunity for users to be exposed to the suggested methods of porting their codes to the new hybrid architecture,” said OLCF user assistance specialist Bobby Whitten.

Most supercomputers perform their calculations solely on central processing units (CPUs), such as the ones used in today’s personal computers. Titan, however, will use a mix of CPUs and graphics processing units (GPUs). GPUs are typically found in modern video game systems and will be employed to blaze through repetitive calculations by applying the same operation to many pieces of data at once. CPUs, on the other hand, handle far fewer calculations at a time, yet are adept at shuffling and connecting information, which suits them to more complex, branching computation. Together these complementary processor types will provide Titan with unprecedented computing power.

Attendees of the workshops—who could take part in person or virtually—started each day listening to lectures about the various performance tools available for scaling up their codes. The afternoons were spent putting these tools to use. “I was very impressed with the level of detail,” said Bogdan Vacaliuc, an electronics and embedded systems researcher at ORNL who participated in the conference. “The hands-on work on the machine has been really helpful, and these tools and techniques benefit the embedded multicore processor community as well.” Whitten noted that the Titan workshop had a great turnout, with 94 attendees on the first day alone.

The OLCF recommended that users rely on debuggers, compilers, and performance analysis tools to port their codes to the new architecture. Performance analysis tools track application performance at both macroscopic and microscopic scales. Debuggers help identify programming glitches in users’ application codes. And compilers play the role of translators, taking programs written in languages such as C++ and Fortran and converting them into machine code, or binaries, that a computer can execute.

The first day focused on exposing parallelism in codes, that is, identifying the parts of a program that can perform multiple tasks simultaneously. Vendors explained their respective compiler technologies on the second day. The third day was devoted to performance analysis tools, and the last day of instruction focused on debuggers. The workshops concluded with an open session for user questions on the fifth day.
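As a rough illustration of what exposing parallelism can mean in practice, the Python sketch below contrasts a loop whose iterations are independent with one whose iterations depend on each other. It is not taken from the workshop materials, and all function names are hypothetical. The independent loop can be split into chunks that run at the same time, which is the kind of repetitive work the article says GPUs are suited to; the running sum cannot be split so simply.

```python
from concurrent.futures import ThreadPoolExecutor


def scale_and_add(a, x, y):
    """Serial loop: iteration i reads and writes only element i,
    so the iterations are independent of one another."""
    out = [0.0] * len(x)
    for i in range(len(x)):
        out[i] = a * x[i] + y[i]
    return out


def scale_and_add_chunked(a, x, y, workers=4):
    """Same calculation with the independence made explicit:
    the index range is split into chunks handled by separate workers."""
    n = len(x)
    chunk = max(1, (n + workers - 1) // workers)
    ranges = [(lo, min(lo + chunk, n)) for lo in range(0, n, chunk)]

    def work(bounds):
        lo, hi = bounds
        return [a * x[i] + y[i] for i in range(lo, hi)]

    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in the same order as the input chunks
        parts = list(pool.map(work, ranges))
    return [value for part in parts for value in part]


def running_sum(x):
    """Counter-example: each iteration needs the previous total,
    so the loop cannot be cut into independent chunks as written."""
    out, total = [], 0.0
    for value in x:
        total += value
        out.append(total)
    return out
```

Threads are used here only to show the structure. In standard CPython they typically do not speed up pure arithmetic, and an actual port to a hybrid CPU-GPU machine would rely on the vendors' compilers and tools rather than on a sketch like this one.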

Representatives of software companies PGI, CAPS Enterprises, and Allinea, as well as Cray, the company that built Jaguar and is building Titan, were on hand to discuss the tools being used to help transition computer codes to the new architecture. A representative from the Technical University of Dresden also attended to present the Vampir suite of performance analysis tools.

“There is a lot of interest [in Titan], and people are starting to agree that the approach of using these tools is gaining more traction,” Whitten said. —by Eric Gedenk