Supercomputing efficiency shows nearly three-fold increase with new scheduling technique

Am I responsible for the actions of my brother? According to Oak Ridge National Laboratory (ORNL) computer scientist Terry Jones, the answer may be yes for computer nodes. As the number of processors continues to increase in leadership-class supercomputers, their ability to perform parallel computation—doing multiple calculations simultaneously—becomes increasingly important.

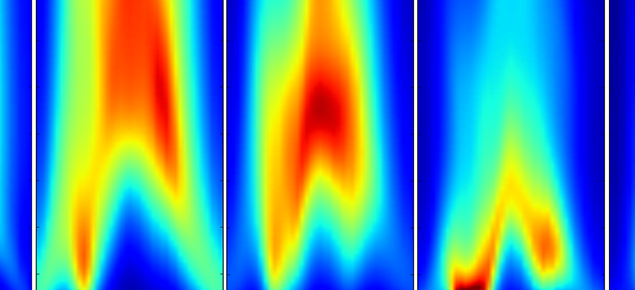

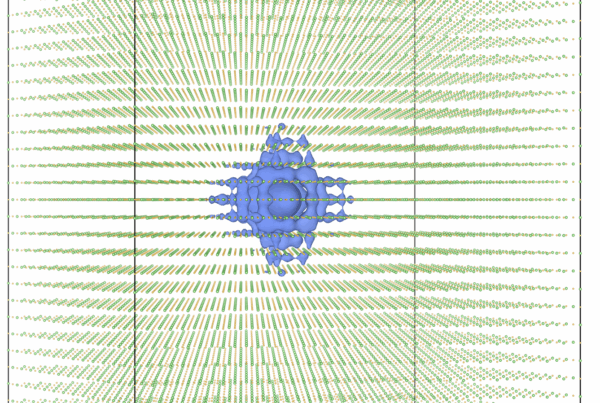

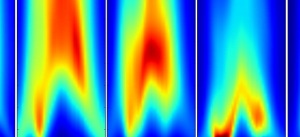

The Colony kernel organizes computer work into scientific applications (blue) and normal background processes (red) across all nodes in a supercomputer. The red background processes are then scheduled so as not to randomly interrupt the blue scientific application, allowing for maximum computation time (green). Image credit: Terry Jones, ORNL.

The surge in machine size and complexity has led computer scientists at the Oak Ridge Leadership Computing Facility (OLCF) to try new strategies for keeping large systems running at the highest efficiency. Their efforts have nearly tripled the performance of synchronizing collective operations.

Jones and a team of researchers at the OLCF developed a software component that bridges applications and hardware (a modified operating system kernel) in hopes of scheduling the many tasks that run on Linux-based supercomputing systems in ways that minimize interruptions to scientific applications. The Colony kernel project, as it is called, includes collaborators at IBM and Cray.

“The Colony kernel achieves high scalability through coordinated scheduling techniques,” Jones said. “Currently, these applications run randomly with respect to other nodes, and there is no coordination of when to favor scientific applications and when to favor other necessary activities.”

In a typical operating system, various “helper” applications run alongside scientific applications. These programs, known as daemons, must run to ensure that all other required functions are working correctly on the supercomputer. Problems arise when daemon activity interrupts the flow of scientific applications.

“What the Colony kernel does differently involves the Linux scheduling algorithm,” Jones said. “Instead of scheduling the set of processes on one node without consideration to its neighbor nodes, the Colony kernel makes scheduling decisions with a global perspective. This allows us to ensure that activities that would normally happen randomly with respect to other nodes can be scheduled to achieve better performance for parallel workloads running on many nodes.”

The new “parallel awareness information” gives each individual node’s scheduler the information it needs to work efficiently in unison. All nodes cycle between a period when the scientific application is universally favored, and a period when all other processes are universally favored. This minimizes random interruptions.
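The effect of this cycling can be illustrated with a toy model. The sketch below is not the Colony kernel's scheduler; it is a simplified simulation under stated assumptions: every time step ends in a synchronizing collective, each node runs its daemons once every `period` steps, and a daemon run adds a fixed `daemon_cost` to that node's step. The function name `run_steps` and all of its parameters are hypothetical and chosen only for illustration.

```python
import random


def run_steps(num_nodes, num_steps, work, daemon_cost, period, coordinated, seed=0):
    """Return total wall-clock time for a toy bulk-synchronous run.

    Each step ends with a synchronizing collective, so a step takes as long
    as its slowest node. Every node runs its daemons once per `period` steps.
    """
    rng = random.Random(seed)
    # Uncoordinated: each node picks its own daemon phase within the period.
    # Coordinated: every node uses the same phase, so daemon time overlaps.
    phases = [0 if coordinated else rng.randrange(period) for _ in range(num_nodes)]

    total = 0.0
    for step in range(num_steps):
        # The collective cannot complete until the slowest node arrives.
        slowest = max(
            work + (daemon_cost if step % period == phase else 0.0)
            for phase in phases
        )
        total += slowest
    return total
```

With 1,000 nodes, 100 steps, `work=1.0`, `daemon_cost=0.5`, and `period=10`, the coordinated run totals 105.0 time units, because only one step in ten pays the daemon cost. In the uncoordinated run, almost every step has at least one interrupted node, so the total approaches 150.0. These figures come from the toy model only and are not the measured Colony results.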

Colony’s coordinated scheduling could prove very helpful to the scientists and engineers who utilize leadership-class machines. When researchers divide, or decompose, their simulations among many processors in supercomputers and structure their simulations in cycles, or time steps, a synchronizing collective such as a barrier or reduction is usually present at least once per cycle. As with many other team-related activities, though, performance is often only as fast as the slowest process.

Jones used a simulation of a car bumper deforming as it runs into a tree as an example. A scientific application like Dyna3d or Nike3d would typically divide the bumper into small pieces, and each processor would be assigned a specific area of the bumper. Time steps would begin before the car hit the tree, and all processors would be doing roughly the same amount of work. Each time the simulation advances by one time step, data is exchanged between neighboring nodes and certain global values are updated.

“For example, say I want to find the maximum temperature or pressure across the entire simulation,” Jones posited. “This leads to what is called a reduction synchronizing collective, because before I can know the maximum temperature or pressure, every node working on the problem must check in with their temperature or pressure.” For these common operations, the Colony kernel provides significant benefit by eliminating most random interruptions common to Linux daemons.
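The reduction Jones describes can be sketched in a few lines. The example below is a minimal, single-process illustration of a pairwise (tree) maximum reduction, assuming one value per node; the function name `tree_reduce_max` is hypothetical. In MPI programs this kind of operation is typically expressed with `MPI_Allreduce` and the `MPI_MAX` operation, but the article does not say which interface the applications used, so the sketch avoids any MPI dependency.

```python
def tree_reduce_max(values):
    """Combine one value per node pairwise until a single maximum remains.

    Returns the maximum and the number of combining rounds it took.
    """
    if not values:
        raise ValueError("need at least one value")
    level = list(values)
    rounds = 0
    while len(level) > 1:
        # Pair up neighbors; an unpaired last element passes through as-is.
        level = [max(level[i:i + 2]) for i in range(0, len(level), 2)]
        rounds += 1
    return level[0], rounds


# Hypothetical per-node temperatures, for illustration only.
temperatures = [300.0, 512.5, 298.1, 450.0, 305.2]
hottest, rounds = tree_reduce_max(temperatures)
```

Every node's value feeds into the result, so the reduction cannot finish until the last node has contributed. A single node delayed by a daemon therefore delays the answer for all of them.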

The Colony team increased performance 2.85 times at 30,000 cores on the Cray XT5 Jaguar, the OLCF’s premier research tool, capable of 2.3 thousand trillion calculations per second.

For further information about the Colony kernel, visit the Colony Project website at https://www.hpc-colony.org or contact Terry Jones at [email protected].

By Eric Gedenk