User Meeting 2025

AI-Powered Acceleration of SR-72 Darkstar Hypersonic Simulation on OLCF Frontier

The Lockheed Martin SR-72 "Darkstar" is a hypersonic reconnaissance-strike UAV concept using turbine-based combined-cycle engines to cruise at Mach 6 (~4,600 mph, based on the sea-level speed of sound) above 80,000 ft.

This work advances DOE Office of Science priorities by automating high-fidelity CFD simulations via hybrid AI, integrating symbolic reasoning and ML for autonomous geometry preparation, meshing, solver setup, and adaptive refinement on exascale systems. It boosts engineer and scientist productivity, democratizes HPC access, and aligns with DOE’s energy, security, and AI-driven science goals.

AI-Powered Acceleration of YF-23 Transonic Simulations on OLCF Frontier

The Northrop YF-23 is a single-seat, twin-engine stealth fighter prototype that supercruises at Mach 1.6 and has a top speed of Mach 1.8 with afterburners, although some estimates exceed Mach 2.2.

This work advances DOE Office of Science priorities by automating high-fidelity CFD simulations via hybrid AI, integrating symbolic reasoning and ML for autonomous geometry preparation, meshing, solver setup, and adaptive refinement on exascale systems. It boosts engineer and scientist productivity, democratizes HPC access, and aligns with DOE’s energy, security, and AI-driven science goals.

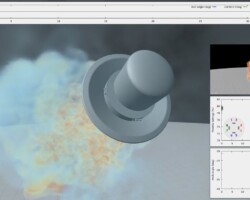

Closed-Loop Control of a Human-Scale Mars Lander

Composite animation showing an autonomous flight trajectory using closed-loop flight control computed on Frontier. During this 35-second period, the vehicle descends from an altitude of approximately seven kilometers to one kilometer and decelerates from Mach 2.4 to approximately Mach 0.8. The top inset shows the RCS firing sequence and the associated roll angle, which can also be observed as a subtle ±1-degree vehicle rotation in the main image. The top-right inset shows a far-field view of the trajectory, with the Martian surface indicated by a Cartesian grid with 1-kilometer spacing. The middle-right inset shows the throttle settings for each of the eight main engines, which decelerate the vehicle and also control pitch and yaw. The bottom-right inset shows the vehicle pitch angle, which can also be observed in the main image. The main engine plumes are visualized using density weighted by the H2O mass fraction, while the RCS jets are shown using density weighted by the N2 mass fraction, with separate red/green colormaps depending on thrust orientation.

The NASA CFD solver FUN3D computed the fluid dynamics on Frontier while coupled in real time with the NASA flight mechanics package POST2, which executed on a dedicated NASA system located 500 miles away. The visualization was produced using NVIDIA IndeX and several additional tools developed in-house at NASA.
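The coupling pattern described above (a fluid solver and a flight-mechanics code advancing together, with control commands fed back every step) can be illustrated with a toy loop. This is not FUN3D or POST2 and omits the network transport between the two machines: `fluid_side`, `flight_mechanics_side`, and `controller` are hypothetical stand-ins, the vehicle is reduced to its speed along an assumed fixed 20-degree flight path, and every constant (mass, thrust, drag area, density, gain) is an illustrative placeholder.

```python
import math
from dataclasses import dataclass

# Hypothetical placeholder constants; none of these come from the NASA workflow.
MARS_GRAVITY = 3.71                   # m/s^2
FLIGHT_PATH_ANGLE = math.radians(20)  # assumed constant descent angle
MASS = 100_000.0                      # kg, assumed vehicle mass
MAX_THRUST = 1_200_000.0              # N, assumed total main-engine thrust
RHO = 0.015                           # kg/m^3, assumed constant atmospheric density
CD_AREA = 50.0                        # m^2, assumed drag coefficient times area


@dataclass
class State:
    altitude: float  # m
    speed: float     # m/s along the flight path


def fluid_side(state: State, throttle: float) -> float:
    """Hypothetical stand-in for the CFD solve: force opposing motion (N)."""
    drag = 0.5 * RHO * state.speed ** 2 * CD_AREA
    return drag + throttle * MAX_THRUST  # retro-thrust acts against the motion


def flight_mechanics_side(state: State, retarding_force: float, dt: float) -> State:
    """Hypothetical stand-in for the trajectory code: one explicit Euler step."""
    accel = MARS_GRAVITY * math.sin(FLIGHT_PATH_ANGLE) - retarding_force / MASS
    speed = state.speed + accel * dt
    altitude = state.altitude - speed * math.sin(FLIGHT_PATH_ANGLE) * dt
    return State(altitude, speed)


def controller(state: State, target_speed: float, gain: float = 0.05) -> float:
    """Proportional throttle command (gain in 1/(m/s)), clamped to [0, 1]."""
    return min(1.0, max(0.0, gain * (state.speed - target_speed)))


def run(duration: float = 35.0, dt: float = 0.1):
    """Advance both sides in lockstep and record (altitude, speed, throttle)."""
    state = State(altitude=7000.0, speed=600.0)
    history = []
    for _ in range(round(duration / dt)):
        throttle = controller(state, target_speed=200.0)  # control command
        force = fluid_side(state, throttle)                # "CFD" side
        state = flight_mechanics_side(state, force, dt)    # "flight mechanics" side
        history.append((state.altitude, state.speed, throttle))
    return history
```

Calling `run()` returns one `(altitude, speed, throttle)` tuple per step; with these placeholder values the throttle saturates early and the speed falls toward the 200 m/s target. The real system exchanges far richer data (full aerodynamic loads, per-engine throttles, RCS commands), but the lockstep structure is the same idea.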

Digital Twin of the Frontier Supercomputer Visualized in Unreal Engine 5

This video demonstrates a high-fidelity digital twin of the Frontier supercomputer, developed using the ExaDigiT framework. The system integrates both real-time operational telemetry and simulated outputs from custom-built power and cooling simulation tools. Real-world telemetry is sourced from the Frontier supercomputer and accessed through a custom FastAPI server, providing a flexible and scalable interface for data visualization and simulation-driven analysis.

Visualization is implemented in Unreal Engine 5, leveraging its advanced rendering and interaction capabilities to create an immersive, interactive experience. Custom simulation modules, developed in Python and C++, generate predictive models of power consumption and cooling dynamics, which are visualized alongside live system data in the digital twin.

The novelty of this work lies in its seamless integration of real telemetry, predictive simulation, and interactive visualization at exascale, enabling users to explore "what-if" scenarios, diagnose system behavior, and evaluate optimization strategies without impacting live operations. By combining operational and synthetic data streams in real time, ExaDigiT advances the use of digital twins for high-performance computing.
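To make the "what-if" idea concrete, here is a minimal sketch that compares predicted power and coolant return temperature for a live and a hypothetical scenario workload. It is not ExaDigiT's model: the linear utilization-to-power relation, the idle/peak wattages, and the single water loop are all assumptions; only the steady-state energy balance (heat = mass flow × specific heat × temperature rise) is standard physics.

```python
from dataclasses import dataclass

WATER_CP = 4186.0  # J/(kg K), approximate specific heat of water


@dataclass
class NodePowerModel:
    """Hypothetical linear power model; wattages are placeholders."""
    idle_watts: float
    peak_watts: float

    def power(self, utilization: float) -> float:
        u = min(1.0, max(0.0, utilization))  # clamp to a valid range
        return self.idle_watts + u * (self.peak_watts - self.idle_watts)


def system_power(model: NodePowerModel, utilizations) -> float:
    """Total predicted power (W) for a list of per-node utilizations."""
    return sum(model.power(u) for u in utilizations)


def return_temperature(supply_c: float, heat_watts: float, flow_kg_s: float) -> float:
    """Steady-state energy balance for one assumed water loop."""
    return supply_c + heat_watts / (flow_kg_s * WATER_CP)


def what_if(model, live_utilizations, scenario_utilizations, supply_c, flow_kg_s):
    """Predicted (power W, return temperature C) for the live and scenario cases."""
    results = {}
    for name, utils in (("live", live_utilizations), ("scenario", scenario_utilizations)):
        p = system_power(model, utils)
        results[name] = (p, return_temperature(supply_c, p, flow_kg_s))
    return results
```

In a digital twin, `live_utilizations` would come from telemetry and `scenario_utilizations` from a proposed schedule, so the two predictions can be compared without touching the running machine.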

Exascale Simulation of Multi-Jet Rockets on OLCF Frontier

This visualization shows the development and interaction of seven high-speed jets of hot gas issuing into ambient air at a Reynolds number of 250,000. Each jet is resolved with 170 grid cells across its diameter, and the computational domain comprises twelve billion cells in total. The visualization is colored by the volume fraction of the jet fluid, with darker colors indicating higher concentrations. The simulation was performed using MFC, an open-source multiphase compressible flow solver that uses high-order finite volume methods and OpenACC acceleration to perform exascale simulations on Frontier. It ran on three thousand Frontier nodes in ~40 minutes and was visualized with ParaView on 20 Andes nodes in ~20 hours. High-fidelity simulations of multi-engine rockets like this one allow observation of flow recirculation and base heating in multi-jet configurations, phenomena that are difficult to capture experimentally. Using novel numerical methods, mixed precision, and shared memory architectures, MFC can simulate multi-engine spacecraft with up to 100 trillion grid cells on Frontier.
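The run figures above translate into a simple resource budget. The sketch below assumes an even domain decomposition and uses the fact that each Frontier node has four AMD Instinct MI250X GPUs with two graphics compute dies (GCDs) each; the approximate wall times (~40 minutes, ~20 hours) are taken at face value, and the function name `run_budget` is hypothetical.

```python
import math


def run_budget(total_cells=12e9, nodes=3000, gcds_per_node=8,
               wall_minutes=40.0, vis_nodes=20, vis_hours=20.0,
               cells_per_diameter=170):
    """Back-of-envelope figures for the seven-jet MFC run (even split assumed)."""
    cells_per_node = total_cells / nodes
    return {
        "cells_per_node": cells_per_node,
        "cells_per_gcd": cells_per_node / gcds_per_node,
        "sim_node_hours": nodes * wall_minutes / 60.0,
        "vis_node_hours": vis_nodes * vis_hours,
        # cells inside one jet's circular cross-section at the nozzle
        "cells_per_jet_cross_section": math.pi * (cells_per_diameter / 2) ** 2,
    }
```

With the defaults this gives 4 million cells per node (500,000 per GCD), about 2,000 node-hours for the simulation versus 400 Andes node-hours for visualization, and roughly 22,700 cells spanning each jet's cross-section.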

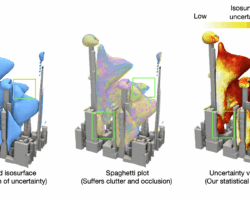

Ensemble visualizations of turbulent flow over Manhattan, computed on Frontier, comparing mean, spaghetti plot, and UQ-based isosurface techniques to reveal spatial uncertainty.

We visualize the results of a 3D turbulent flow ensemble simulation. The data come from a Large Eddy Simulation (LES) of flow through part of Manhattan, NYC, just south of Central Park. To investigate uncertainty in the subgrid-scale (SGS) turbulence parameterization, three parameters (total dissipation, shear production, and dissipation rate) are each set to either their default value or half of it, and every combination is run, for a total of 2 × 2 × 2 = 8 simulations. The simulations are performed on the Frontier supercomputer at Oak Ridge National Laboratory. The image shows isosurfaces of the vertical wind component across the eight simulations. The isosurface extracted from the ensemble mean (the leftmost image) does not portray the spatial uncertainty of the isosurface, yet visualizing uncertainty is critical for avoiding data misrepresentation and mitigating errors in analysis. The spaghetti plot of isosurfaces from the individual runs (the center image) does portray spatial uncertainty, but it suffers significantly from occlusion and clutter in 3D, and color blending introduces spurious surface colors. Our statistical uncertainty quantification (UQ) of isosurface vertices clearly communicates positions of relatively high uncertainty through colormapping (the rightmost image), providing a more robust visual representation of the data. The visualizations were created with the Viskores library and ParaView.
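Two pieces of the reasoning above can be sketched in a few lines: the 2 × 2 × 2 tensor product of parameter settings, and why per-vertex statistics carry information that the mean-field isosurface loses. The parameter identifiers are hypothetical, and the per-edge empirical mean and standard deviation of linearly interpolated crossings is a simple stand-in chosen for illustration, not necessarily the authors' UQ method; positions are 1D edge coordinates for brevity.

```python
import itertools
import statistics

# Hypothetical identifiers for the three SGS parameters named above.
PARAMS = ("total_dissipation", "shear_production", "dissipation_rate")
SCALES = (1.0, 0.5)  # default value, half of default


def ensemble_configs():
    """Full tensor product of parameter settings: 2 x 2 x 2 = 8 runs."""
    return [dict(zip(PARAMS, combo))
            for combo in itertools.product(SCALES, repeat=len(PARAMS))]


def edge_crossing(x0, x1, f0, f1, isovalue):
    """Linearly interpolated isosurface crossing on one grid edge, or None."""
    if f0 == f1 or (f0 - isovalue) * (f1 - isovalue) > 0:
        return None  # this member has no crossing on this edge
    t = (isovalue - f0) / (f1 - f0)
    return x0 + t * (x1 - x0)


def crossing_uncertainty(x0, x1, member_values, isovalue):
    """Mean and sample standard deviation of the crossing across members."""
    positions = []
    for f0, f1 in member_values:
        p = edge_crossing(x0, x1, f0, f1, isovalue)
        if p is not None:
            positions.append(p)
    if len(positions) < 2:
        return None  # not enough crossings to estimate a spread
    return statistics.mean(positions), statistics.stdev(positions)
```

For instance, three members whose values along one unit edge are (0, 1), (0, 2), and (0, 4), with isovalue 0.5, cross at 0.5, 0.25, and 0.125 (mean about 0.292, with a nonzero spread that can be colormapped). The isosurface of the averaged field crosses at about 0.214 and carries no spread at all.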

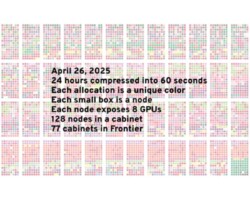

One Day on Frontier

This is a visualization of the work done by Frontier in a 24-hour period, from 12:01 a.m. to midnight on April 26, 2025. Each square is a compute node, and each cluster is a rack. All nodes in an individual Slurm allocation share the same color, drawn from an RYB color space. The video runs for 60 seconds at 60 fps, so each frame represents 24 seconds of real time. A simple Slurm command generated the raw data, and https://github.com/markstock/switchboard created the frames.
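The frame timing follows from simple arithmetic: 60 s × 60 fps = 3,600 frames, and 86,400 s / 3,600 frames = 24 s per frame. The sketch below also maps a frame index back to wall-clock time; treating frame 0 as exactly midnight at the start of April 26 is an assumption made for round numbers, and the function names are hypothetical.

```python
from datetime import datetime, timedelta


def seconds_per_frame(real_seconds=24 * 3600, video_seconds=60, fps=60):
    """Real time covered by each video frame."""
    return real_seconds / (video_seconds * fps)


def frame_time(frame, start=datetime(2025, 4, 26)):
    """Wall-clock time shown by a frame, assuming frame 0 is at `start`."""
    return start + timedelta(seconds=frame * seconds_per_frame())
```

Under this assumption, frame 150 shows 1:00 a.m. and the final frame (3,599) shows 11:59:36 p.m.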

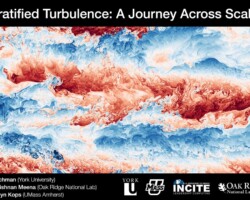

Stratified Turbulence: A Journey Across Scales

We visualize a snapshot in time from one of the largest numerical simulations of stratified turbulence performed to date, enabled by the Frontier supercomputer through an INCITE award. Stratified turbulence refers to chaotic motion arising in a fluid of variable density, where buoyancy forces strongly influence the flow dynamics. This type of turbulence plays a central role in a variety of natural and industrial processes, such as influencing the dispersion of heat and pollutants in the ocean and atmosphere, but remains poorly understood due to the vast range of interacting length scales that must be resolved.

The 6-trillion-grid-point simulation shown here is a direct numerical simulation of the Navier-Stokes equations on Frontier, performed to yield unprecedented resolution of the turbulent structures underpinning such flows. Energy is injected at large scales to drive the turbulence and cascades down through progressively smaller structures until it is eventually dissipated by viscosity. The colors in the visualization show perturbations of the fluid’s density relative to the stable background gradient: red and blue indicate lighter and denser fluid, respectively.

This simulation is one of the first to fully resolve stratified turbulence at high Prandtl number, meaning that momentum diffuses significantly faster than density, as is characteristic of ocean flows. A challenge in simulating high-Prandtl-number flows is that the density field develops extremely fine structures that require immense resolution to capture, which necessitates the use of Frontier.
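Why high Prandtl number is so expensive can be made concrete with standard scaling estimates. This is a sketch that assumes classical Batchelor scaling for Pr > 1 and a uniform grid over a domain of size L; it is not taken from the simulation's actual grid design. With ν the kinematic viscosity, κ the diffusivity of the density-setting scalar, and ε the kinetic-energy dissipation rate:

```latex
\mathrm{Pr} = \frac{\nu}{\kappa}, \qquad
\eta = \left(\frac{\nu^{3}}{\varepsilon}\right)^{1/4}, \qquad
\eta_B = \eta\,\mathrm{Pr}^{-1/2} = \left(\frac{\nu\,\kappa^{2}}{\varepsilon}\right)^{1/4}

N \propto \left(\frac{L}{\eta_B}\right)^{3} = \left(\frac{L}{\eta}\right)^{3}\mathrm{Pr}^{3/2}
```

At fixed ν and ε, the smallest density scale η_B is a factor Pr^{1/2} finer than the Kolmogorov scale η, so a 3D grid needs roughly Pr^{3/2} times more points than the velocity field alone would require. For Pr = 7, typical of temperature in water, that is about 18.5 times more points than at Pr = 1.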

Further details are available through our group’s STRATA turbulence database (https://stratified-turbulence.github.io/web/).