In preparation for the Frontier supercomputer, the OLCF has selected eight research projects to participate in the Frontier Center for Accelerated Application Readiness (CAAR) program. Through Frontier CAAR, the OLCF is partnering with application core developers, vendor partners, and OLCF staff members to optimize simulation, data-intensive, and machine learning scientific applications for exascale performance, ensuring that Frontier will be able to perform large-scale science when it opens to users in 2022. Consisting of application core developers and staff from the OLCF, the partnership teams are receiving technical support from Cray and AMD, Frontier’s primary vendors, and are accessing multiple early-generation hardware platforms prior to system deployment.

The Frontier CAAR teams include:

Code: Cholla

PI: Evan Schneider, University of Pittsburgh

Description: Massive amounts of new data about the Milky Way from surveys such as GALFA HI and GAIA mean that a more powerful computing system like Frontier will be necessary to reproduce all the data in a simulation, as well as to make detailed astrophysical predictions. Schneider’s team is working to simulate a Milky Way-like galaxy using Cholla, an astrophysical simulation code developed for the extreme environments encountered in astrophysical systems.

Code: CoMet (Combinatorial Metrics)

PI: Daniel Jacobson, Oak Ridge National Laboratory

Description: Using the CoMet application, researchers can conduct, among other things, large-scale Genome-Wide Epistasis Studies (GWES) as well as co-evolution and pleiotropy studies. CoMet is currently used in projects ranging from climatype clustering and bioenergy to clinical genomics, including the study of the genetics of opioid addiction and toxicity, chronic pain, Alzheimer’s, and autism. With Frontier, Jacobson’s team will be able to solve scientific problems within much more complex biological systems than was previously possible on Summit. In addition, the ability to perform climatype comparisons for every year over decades, rather than a single comparison based on the mean of 30 years of data, will allow researchers to gain a better understanding of the biological systems related to bioenergy, agriculture, and medicine.

Code: GESTS (GPUs for Extreme-Scale Turbulence Simulations)

PI: P. K. Yeung, Georgia Institute of Technology

Description: Understanding fluid turbulence and the potential universal properties of turbulence is a grand challenge in many fields of science and engineering, from pollutant dispersion and combustion to ocean dynamics and astrophysics. Using the GESTS code, P. K. Yeung’s team performs direct numerical simulations of turbulent fluid flows by computing instantaneous velocity fields in time and 3D space over a wide range of scales according to the Navier-Stokes equations, which express the exact physical principles of conservation of mass and momentum. On Frontier, the team will be able to produce a simulation of forced isotropic turbulence with nearly 35 trillion grid points, compared with the 6 trillion achieved on Summit.
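To give a feel for those grid sizes, the short sketch below converts the two totals quoted above into an approximate number of points per side. It is illustrative arithmetic only: the cubic grid, the helper names, and the single-precision storage estimate are assumptions made for this example and are not taken from the GESTS code.

```python
# Illustrative arithmetic only. Assumptions (not stated in the article):
# the domain is a cube with N grid points per side, so it holds N**3 points,
# and each point stores three single-precision velocity components.

def points_per_side(total_points: float) -> int:
    """Return the integer N whose cube is closest to total_points."""
    n = round(total_points ** (1.0 / 3.0))
    # Check neighbours to guard against floating-point error in the cube root.
    return min((n - 1, n, n + 1), key=lambda k: abs(k ** 3 - total_points))

def velocity_field_terabytes(total_points: float, bytes_per_point: int = 3 * 4) -> float:
    """Memory for one velocity field, in terabytes (10**12 bytes)."""
    return total_points * bytes_per_point / 1e12

summit_total = 6e12     # "6 trillion" grid points, as quoted above
frontier_total = 35e12  # "nearly 35 trillion" grid points, as quoted above

summit_n = points_per_side(summit_total)
frontier_n = points_per_side(frontier_total)
growth = frontier_total / summit_total

print(f"Summit:   about {summit_n} points per side")
print(f"Frontier: about {frontier_n} points per side")
print(f"Total grid points grow by a factor of about {growth:.1f}")
print(f"One velocity field on Frontier: about {velocity_field_terabytes(frontier_total):.0f} TB")
```

Under these assumptions the grid grows by roughly a factor of 1.8 per side, which is why the total point count rises almost sixfold.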

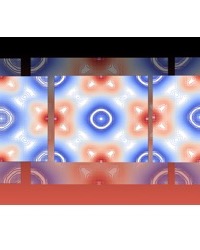

Code: LBPM (Lattice Boltzmann Methods for Porous Media)

PI: James Edward McClure, Virginia Polytechnic Institute and State University

Description: To study the behavior of physical processes within rock structures, specifically to model multiphase flow processes, James McClure and his team have to first understand heterogeneous wettability. Wettability refers to the surface energy between fluids and solids and can vary due to the roughness and mineral composition of the material. McClure’s team can use LBPM to read the volumetric maps of mineral composition and assign local wetting properties accordingly. With Frontier, they hope to train neural networks to predict the future geometric configuration of fluids.
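The idea of assigning local wetting properties from a mineral map can be pictured as a simple table lookup. The sketch below is hypothetical and is not LBPM's input format or API: the mineral labels, the affinity values, and the function name are invented for illustration.

```python
# Hypothetical illustration only; this is not LBPM's data format or interface.
# Labels and wetting values below are invented for the example.
WETTING_BY_MINERAL = {
    0: 0.0,   # pore space: no solid present
    1: 0.8,   # a strongly water-wet mineral (assumed value)
    2: -0.3,  # a weakly oil-wet mineral (assumed value)
}

def assign_wetting(mineral_map, table):
    """Map each voxel's mineral label to a wetting value, keeping the 3D shape."""
    return [
        [[table[label] for label in row] for row in plane]
        for plane in mineral_map
    ]

# A tiny 2 x 2 x 2 volumetric map of mineral labels.
mineral_map = [
    [[0, 1], [1, 2]],
    [[2, 2], [0, 1]],
]

wetting = assign_wetting(mineral_map, WETTING_BY_MINERAL)
print(wetting)
```

A real workflow would read the labels from imaging data and use measured wetting properties; the lookup step itself would stay this simple.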

Code: LSMS (Locally-Selfconsistent Multiple Scattering)

PI: Markus Eisenbach, Oak Ridge National Laboratory

Description: To understand states that go beyond a periodic crystalline lattice structure, researchers like Markus Eisenbach must be able to simulate the many thousands of atoms needed to describe extended electronic and magnetic orderings. Previous research has had to employ classical models because of the scaling issues that arise with first-principles (FP) calculations. With LSMS, a code for FP calculations of alloys and magnetic systems, in conjunction with the computational power of Frontier, however, Eisenbach’s team can run previously inaccessible calculations of realistic condensed matter systems from FP.

Code: NAMD (Nanoscale Molecular Dynamics)

PI: Emad Tajkhorshid, University of Illinois at Urbana-Champaign

Description: Using Frontier in conjunction with NAMD, a molecular dynamics code designed for the simulation of large biomolecular systems, Emad Tajkhorshid and his team can study the entry process of the Zika virus (ZIKV). A greater understanding of the way viruses like ZIKV enter host cells through clathrin-dependent endocytosis may pave the way for new drugs and vaccines to prevent future outbreaks. Frontier’s enormous computational power means that these simulations can be run with a great degree of accuracy across multiple models.

Code: NuCCOR (Nuclear Coupled-Cluster Oak Ridge)

PI: Morten Hjorth-Jensen, Michigan State University

Description: NuCCOR is a nuclear physics application that uses coupled cluster techniques to describe many-body systems, or microscopic systems consisting of a large number of interacting particles. Using Frontier, Hjorth-Jensen’s team will no longer be limited to performing computations only on atomic nuclei that exhibit subshell closures for neutrons and protons, and instead can take an alternative approach using symmetry-projection techniques. They will now be able to study complex time-dependent phenomena such as nuclear reactions and fission.

Code: PIConGPU (Particle-in-cell on Graphics Processing Units)

PI: Sunita Chandrasekaran, University of Delaware

Description: PIConGPU is an open-source simulation framework for plasma and laser-plasma physics used to develop advanced particle accelerators for radiation therapy of cancer, high-energy physics, and photon science. With Frontier, Chandrasekaran’s team will significantly improve both the predictive capabilities of its simulations and its ability to reach particle energies suitable for application. The complex plasma dynamics that govern the final particle beam properties can now be studied at unprecedented temporal and spatial resolution. This will provide accelerator development with the high-quality data needed to advance plasma accelerators toward application.