2019 OLCF System Decommissions and Notable Changes

As the OLCF begins preparations for Frontier, you should be aware of plans that will impact Titan, Eos, Rhea, and the Atlas filesystem in 2019.

Please pay attention to the following key dates as you plan your science campaigns and data migration for the remainder of the year:

| Date | Event |
|---|---|
| March 01 | The OLCF will no longer accept Director’s Discretionary proposals for the Titan and Eos computational resources |
| March 12 | Rhea’s OS upgraded to RHEL7 |
| June 30 | Last day to submit jobs to Titan and Eos |
| July 16 | Rhea will begin the transition from Lustre to GPFS by mounting the Alpine GPFS filesystem |
| August 01 | Titan and Eos will be decommissioned |
| August 05 | Atlas becomes read-only. Please do not wait, begin transferring your Lustre data now. |
| August 06 | Purge Policy implemented on Alpine, the center-wide GPFS filesystem |
| August 13 | Rhea and the DTNs will transition a portion of the scheduled nodes from the Moab scheduler to the Slurm scheduler to aid in the Sep 03 full system transition. |
| August 19 | Atlas decommissioned; ALL REMAINING DATA on ATLAS will be PERMANENTLY DELETED |
| September 03 | All Rhea and DTN nodes will transition to the Slurm scheduler |

Event Details

March 01, 2019 — DD projects no longer accepted for Titan and Eos

Due to the limited remaining compute time on Titan and Eos, the OLCF will no longer accept Director’s Discretionary (DD) project proposals for these systems as of March 01, 2019.

March 12, 2019 — Rhea RHEL7 upgrade

Rhea will be upgraded to RHEL7 on March 12. Please note the following:

- Recompile recommended

  - Due to the OS upgrade and other software changes, we recommend recompiling prior to running on the upgraded system.

- FreeNX decommissioning

  - FreeNX will no longer be available from Rhea following the upgrade. VNC will remain available from Rhea’s compute nodes.

  - More information on using VNC on OLCF systems can be found here.

- Environment Modules transition to Lmod

  - The environment module management system will be updated to Lmod, a Lua-based module system for dynamically altering shell environments. Typical use of Lmod is very similar to working with modulefiles on other OLCF systems, and the interface to Lmod is still provided by the module command. However, there are a number of changes, including a hierarchical structure, that improve usability but should be noted.

  - More information on using Lmod on Rhea can be found here.

- Default software version changes

  - Default versions of a number of software packages, including compilers, OpenMPI, and CUDA, will be changing during the upgrade. Due to the OS upgrade and software changes, users should rebuild prior to running on the upgraded system.

  - The list of new defaults can be found here. If you do not see a required package on the list, please notify [email protected]

- Python runtime management

  - The Python runtime will change to python/X.Y.Z-anacondaX-5.3.0. For a complete environment, use python/X.Y.Z-anacondaX-5.3.0 instead of python/X.Y.Z. Conda environments can be used for packages that are not already installed on the system.

June 30, 2019 — Last day to submit jobs on Titan and Eos

The last day to submit batch jobs on Titan and Eos will be June 30, 2019.

July 16, 2019 — Rhea transition from Atlas to Alpine

On July 16, Rhea will begin the process of transitioning from Lustre to GPFS by mounting the new GPFS scratch filesystem, Alpine. Both Atlas and Alpine will remain available from Rhea to aid in the transition. Please note:

- Data should be transferred between Atlas and Alpine from the DTN, not Rhea

- GPFS requires up to 16 GB of memory per node and will reduce the maximum available compute node memory from 128 GB to ~112 GB.

- All data on Atlas will be permanently deleted on August 19.

More information on transferring data from Atlas to Alpine can be found here.

August 01, 2019 — Titan and Eos will be decommissioned

Titan and Eos will be decommissioned on August 01. From this date, logins to Titan and Eos will no longer be allowed.

August 05, 2019 — Atlas becomes read-only

In preparation for its decommissioning, the Lustre scratch filesystem, Atlas, will become read-only from all OLCF systems on August 05. Please do not wait; begin transferring your Lustre data now.

- Moving data off-site

  - Globus is the suggested tool to move data off-site

  - Standard tools such as rsync and scp can also be used through the DTN, but may be slower and require more manual intervention than Globus

- Copying data directly from Atlas (Lustre) to Alpine (GPFS)

  - Globus is the suggested tool to transfer needed data from Atlas to Alpine. Globus should be used when transferring large amounts of data.

  - Standard tools such as rsync and cp can also be used. The DTN mounts both filesystems and should be used when transferring with rsync and cp. These methods should not be used to transfer large amounts of data.

- Copying data to the HPSS archive system

  - The hsi and htar utilities can be used to transfer data from the Atlas filesystem to the HPSS. The tools can also be used to transfer data from the HPSS to the Alpine filesystem.

  - Globus is also now available to transfer data directly to the HPSS

More information on transferring data from Atlas to Alpine can be found here.

August 6, 2019 — The OLCF will begin implementing the advertised purge policy on Alpine, the new center wide GPFS filesystem

The 90-day purge policy for the Alpine GPFS filesystem will be enabled on August 6, 2019, and file purging will begin on that date. From then on, any file over the purge threshold will be eligible for deletion, even if that file was created before August 6.
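To get a rough idea of which files are already past the 90-day window, a sketch like the following may help. It assumes the purge age is measured from a file's last modification time (the policy may instead consider access time, so check the Alpine documentation), and the function name `list_purge_candidates` is illustrative rather than an OLCF tool.

```shell
# List regular files under a directory that were last modified more than
# N days ago (default 90, matching the purge window stated above).
# Assumption: the purge age is based on modification time.
# Note: find counts age in whole days, so +90 means at least 91 days old.
list_purge_candidates() {
    dir=$1
    days=${2:-90}
    find "$dir" -type f -mtime +"$days" -print
}
```

It would be called with your own Alpine directory as the first argument and, optionally, a different number of days as the second.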

August 13, 2019 — Rhea and DTNs will transition a portion of scheduled nodes to the Slurm batch scheduler

To aid in the migration from Moab to Slurm, 196 Rhea compute nodes and 2 DTN scheduled nodes will migrate from the Moab scheduler to the Slurm batch scheduler.

More information can be found on the Rhea running jobs page.

| Task | Moab | LSF | Slurm |
|---|---|---|---|
| View batch queue | showq | jobstat | squeue |
| Submit batch script | qsub | bsub | sbatch |
| Submit interactive batch job | qsub -I | bsub -Is $SHELL | salloc |
| Run parallel code within batch job | mpirun | jsrun | srun |
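For wrapper scripts that still call Moab commands, the Moab and Slurm columns of the table can be captured in a small lookup. This is only a sketch: the function name `moab_to_slurm` is hypothetical, and it maps command names only, not their options, which differ between the two schedulers.

```shell
# Map a Moab command from the table above to its Slurm counterpart.
# Unknown commands print nothing and return a non-zero status.
moab_to_slurm() {
    case "$1" in
        showq)     echo squeue ;;
        qsub)      echo sbatch ;;
        "qsub -I") echo salloc ;;
        mpirun)    echo srun ;;
        *)         return 1 ;;
    esac
}
```

For example, `moab_to_slurm "qsub -I"` prints `salloc`.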

August 19, 2019 — All remaining data on Atlas will be permanently deleted

On August 19, data remaining on the Lustre filesystem, Atlas, will no longer be accessible and will be permanently deleted. Following this date, the OLCF will no longer be able to retrieve data remaining on Atlas. Due to the large amount of data on the filesystem, we strongly urge you to start transferring your data now rather than waiting until later in the year. More information on transferring data from Atlas to Alpine can be found here.

September 03, 2019 — Rhea and DTNs will transition all scheduled nodes to the Slurm batch scheduler

All nodes on Rhea and the DTNs will migrate from the Moab scheduler to the Slurm batch scheduler.

More information can be found on the Rhea running jobs page.

Atlas to Alpine Data Transfer

Below are instructions for copying your files from Atlas to Alpine. Three approaches are covered: Globus, HPSS, and the DTN servers with your own scripts. If you would like to back up your files, or you do not want your files to be transferred over the network outside ORNL, use HPSS. In many cases, however, Globus transfers files from Atlas to Alpine faster.

Globus

A video with instructions is available, and the screenshots below show step-by-step instructions for using Globus.

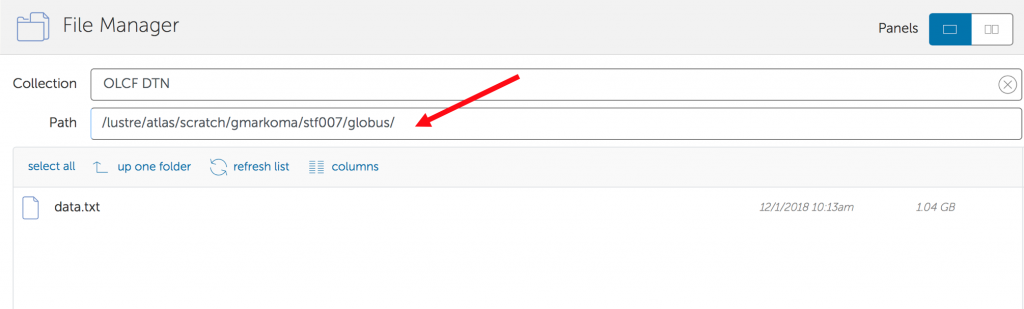

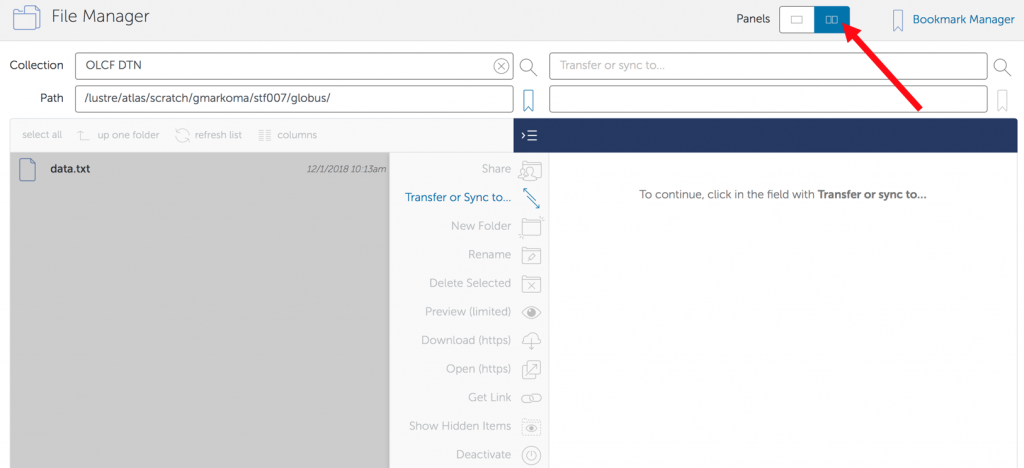

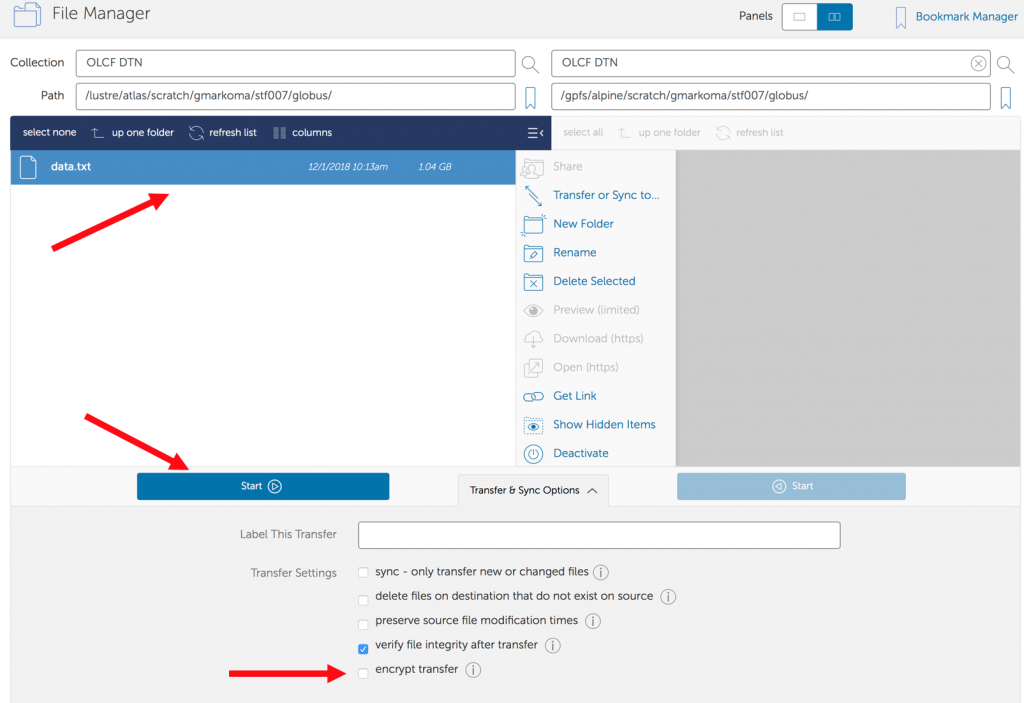

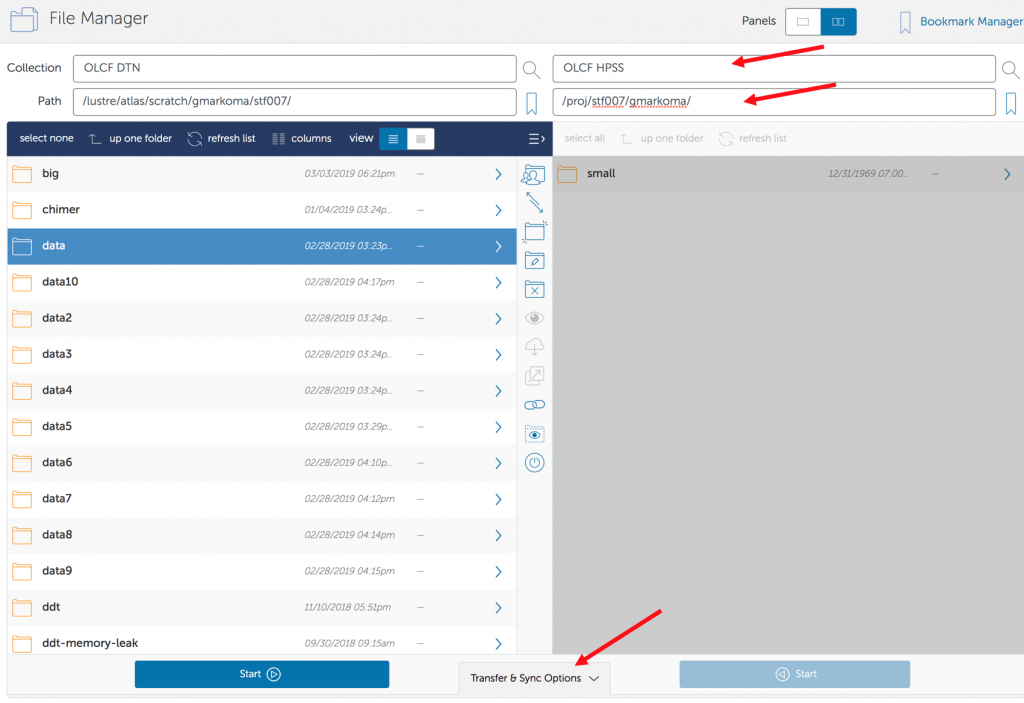

Follow the steps:

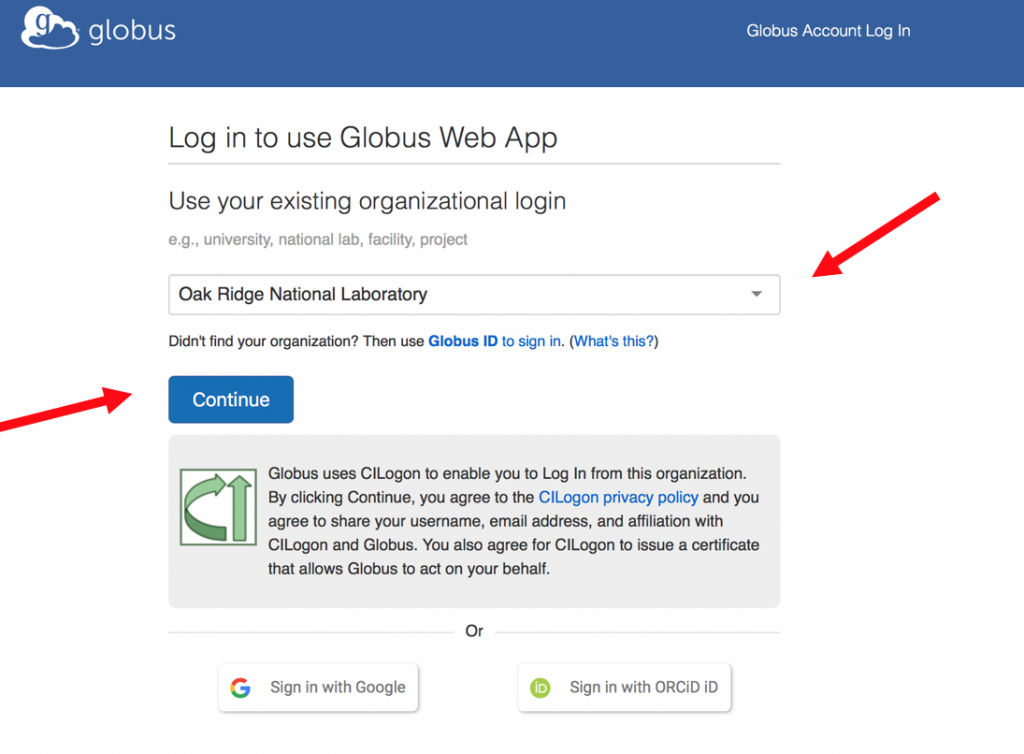

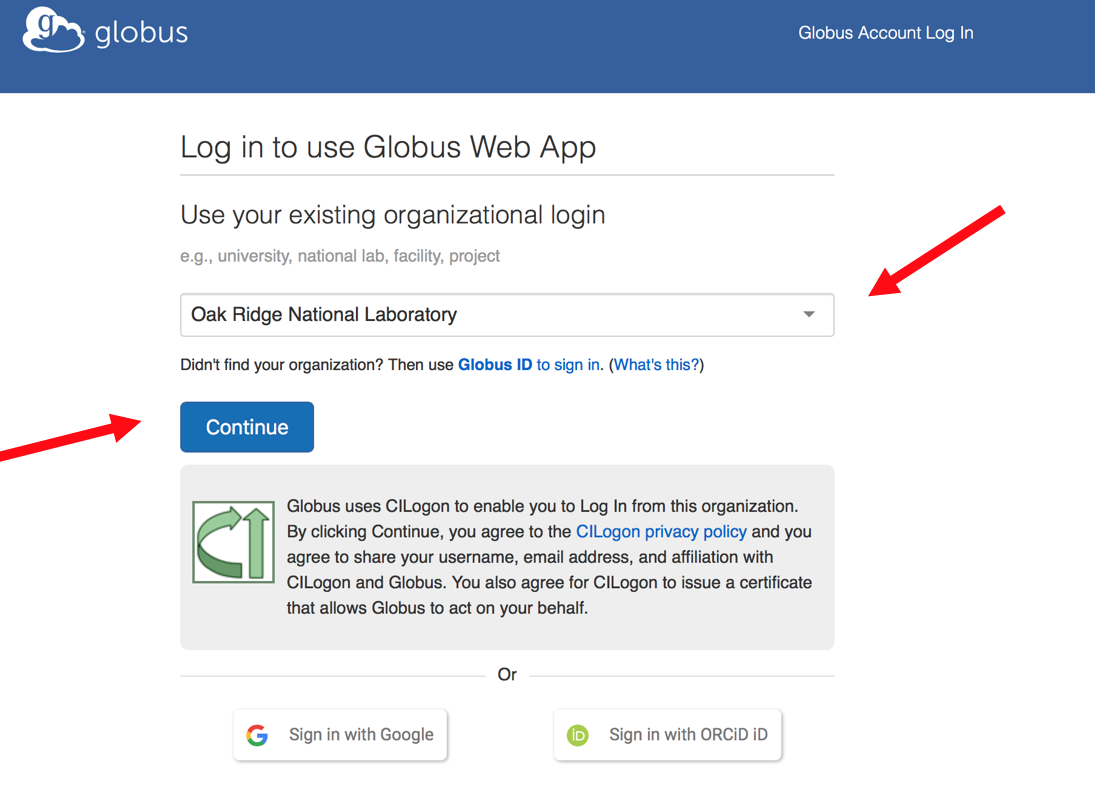

- Visit www.globus.org and log in

- Select your organization if it is in the list; otherwise, create a Globus account

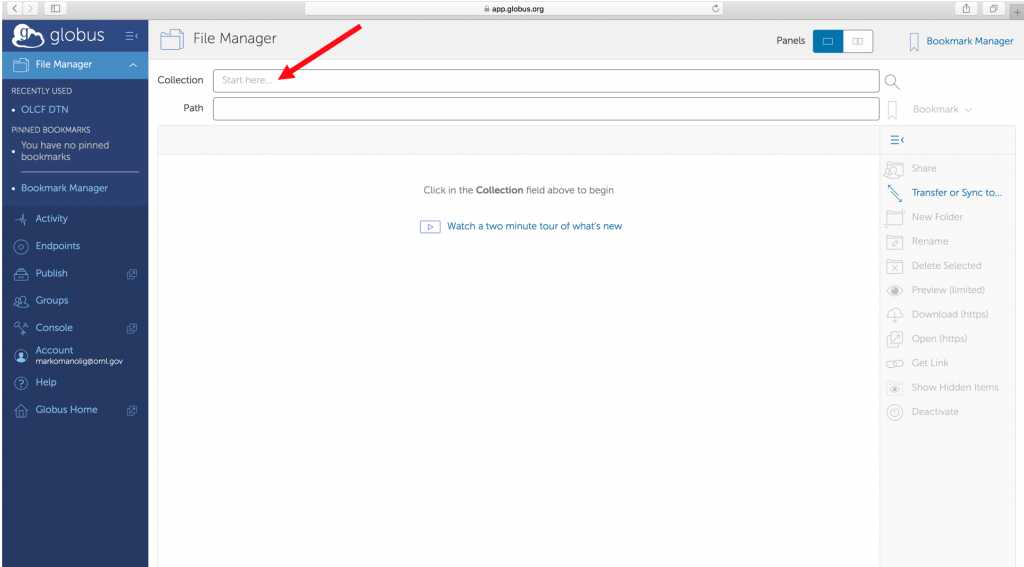

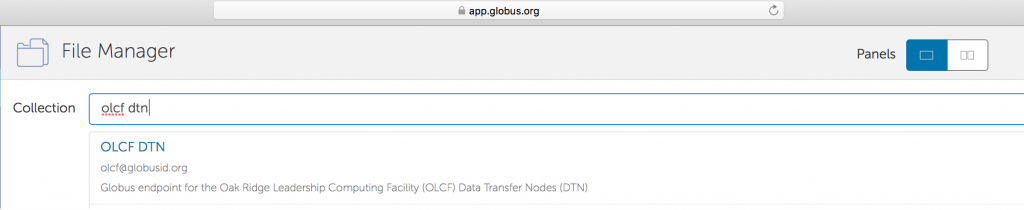

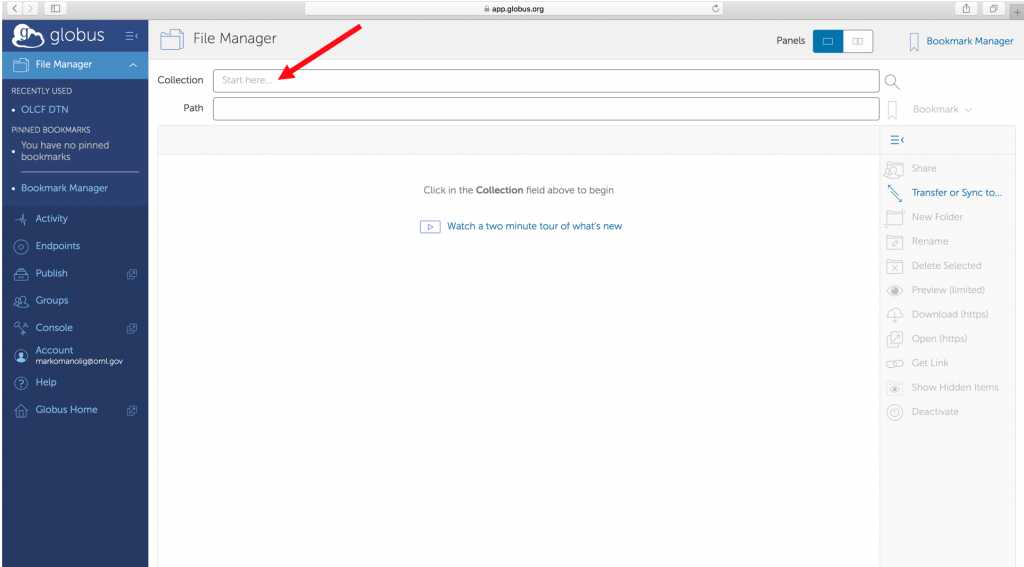

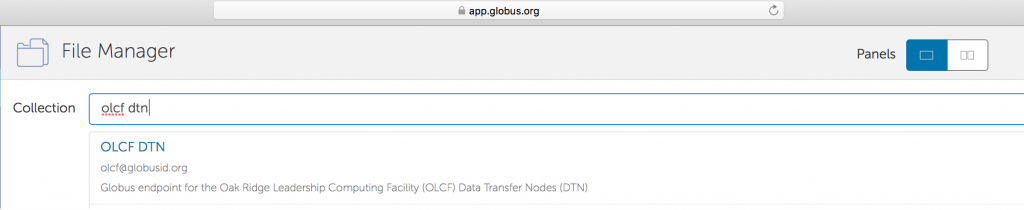

- Search for the endpoint OLCF DTN

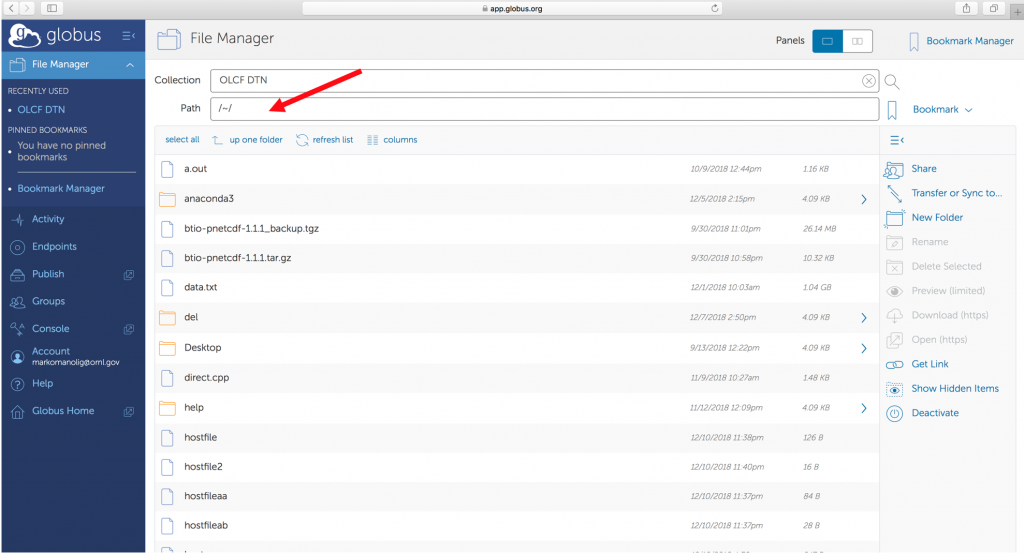

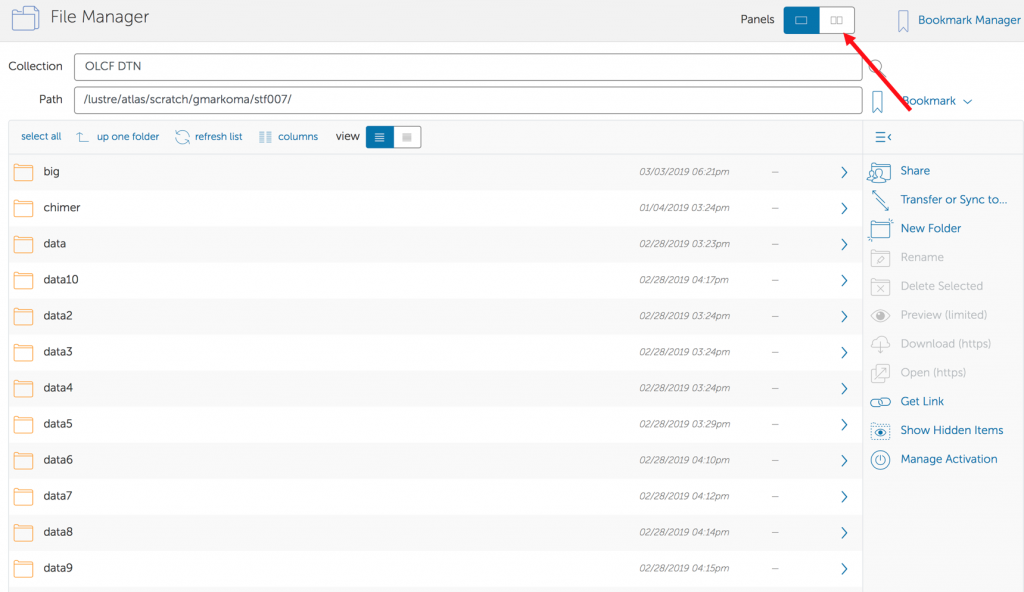

- Declare path

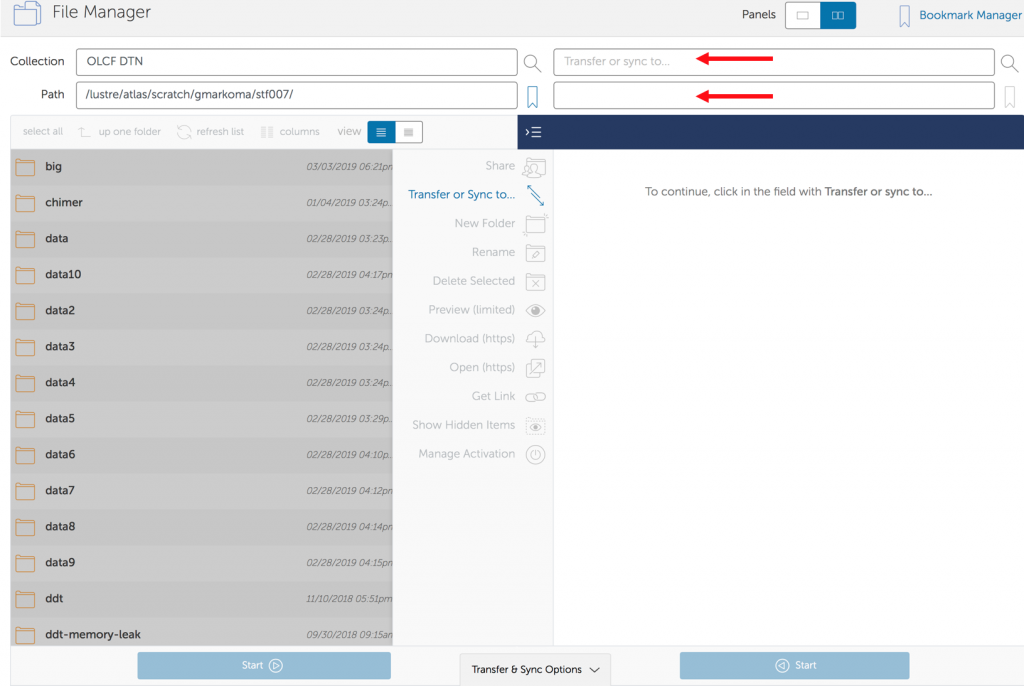

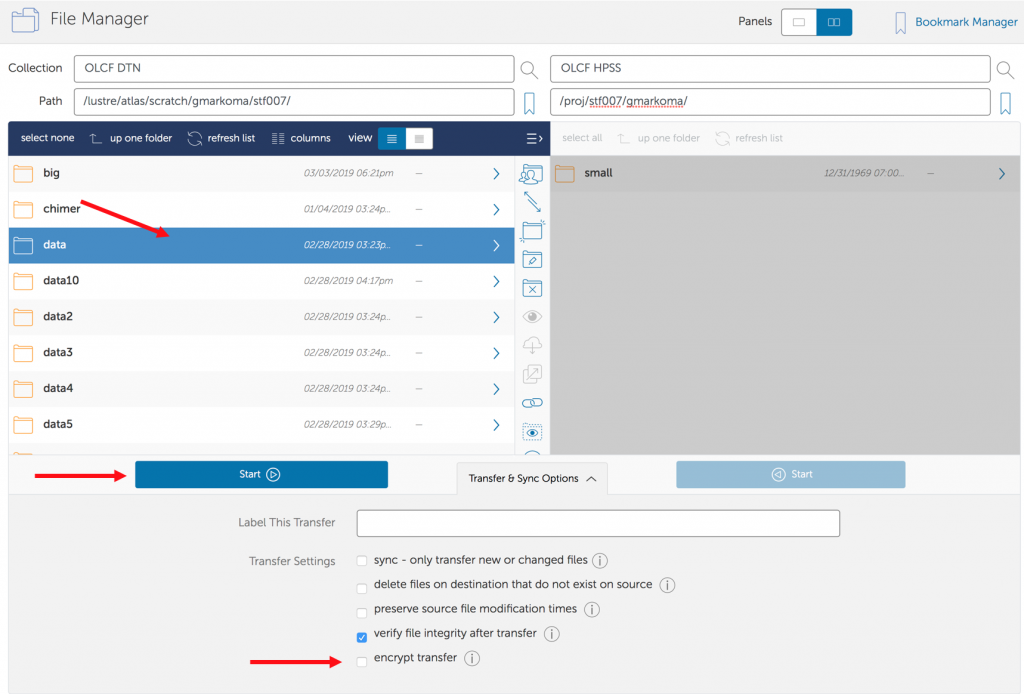

- Open a second panel to declare where you would like your files to be transferred, select whether you want an encrypted transfer, select your file/folder, and click start

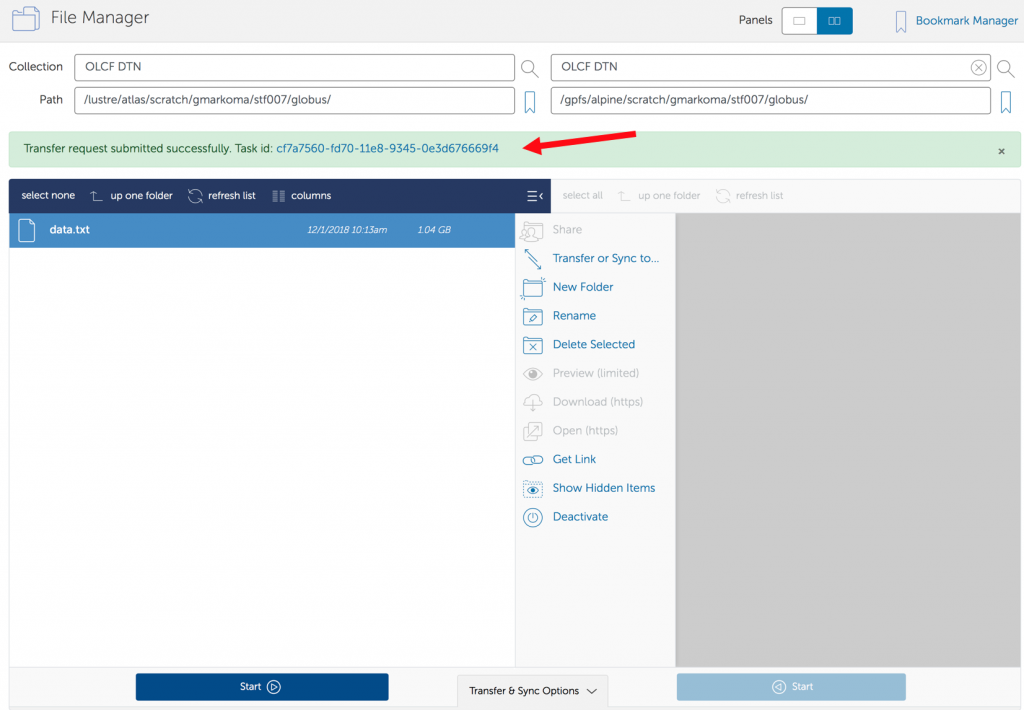

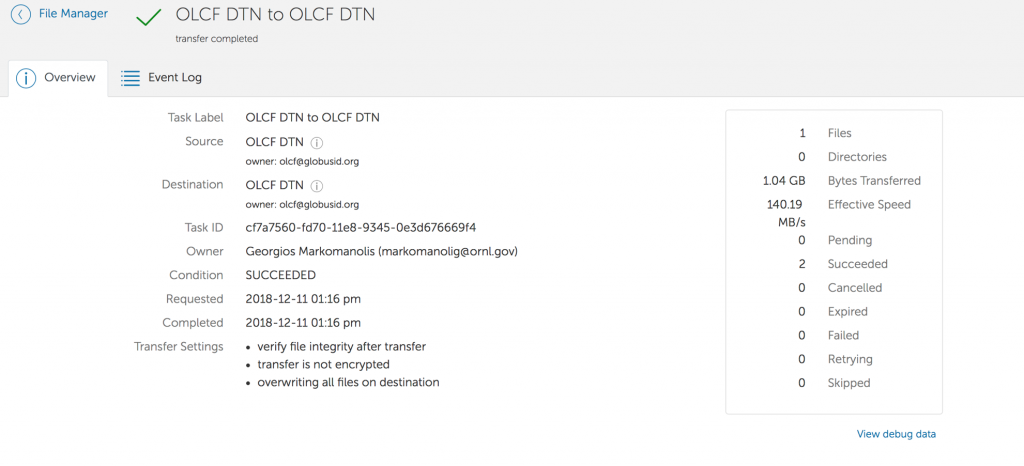

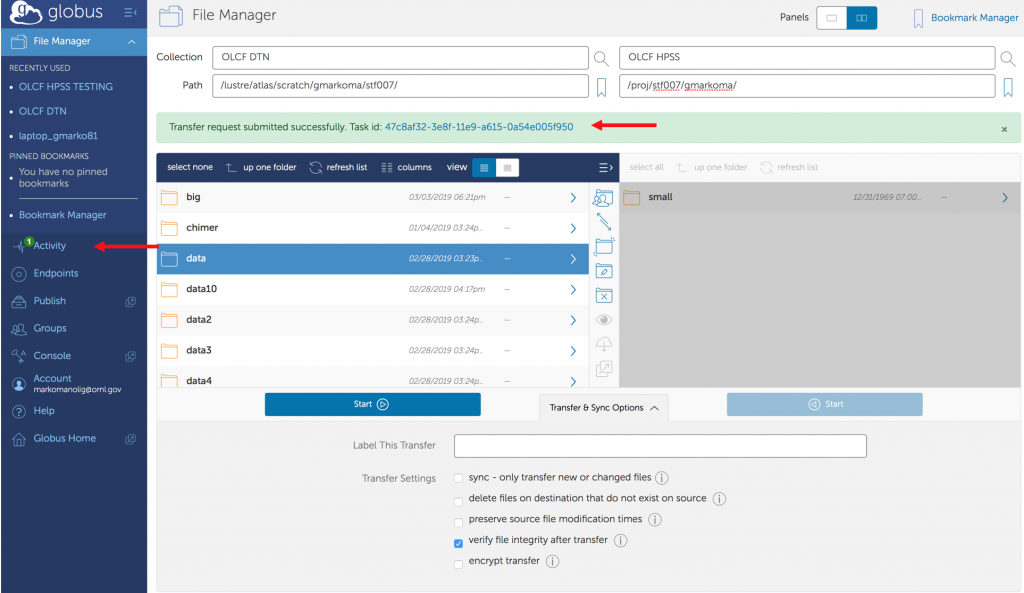

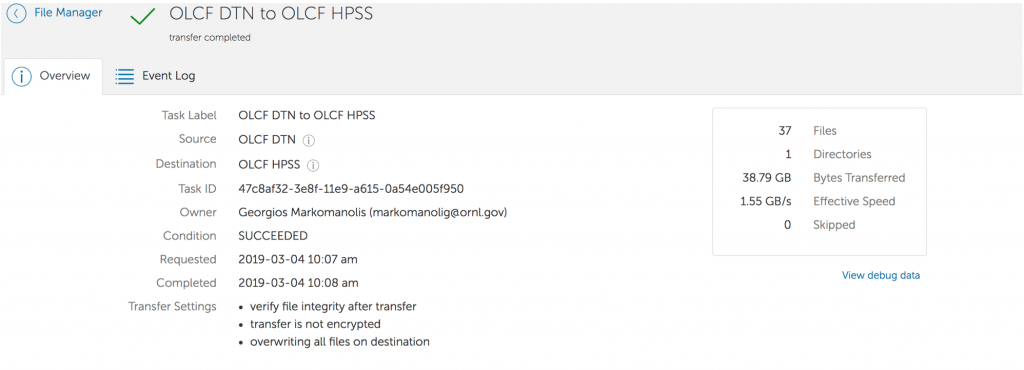

- An activity report will then appear, and you can click on it to see the status. When the transfer finishes or fails, you will receive an email

Once the transfer starts, you can close your browser. Transferring a lot of data will take some time, and you will receive an email with the outcome. For any issues, contact [email protected]

HPSS

Using Globus

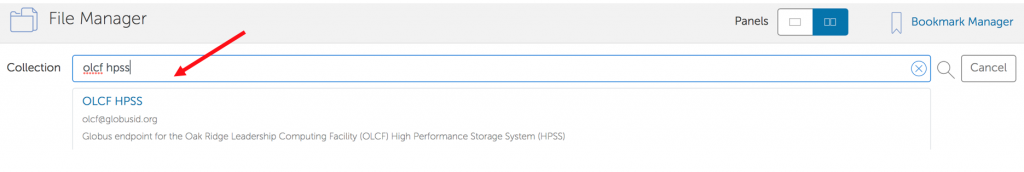

OLCF users have access to new functionality: using Globus to transfer files to HPSS through the endpoint “OLCF HPSS”. Globus is restricted to 8 active transfers across all users, and each user is limited to 3 active transfers, so it is better to move a large amount of data in each transfer than to spread a small amount of data across many transfers. If a folder contains mixed files, including thousands of small files (less than 1MB each), it is better to tar the small files first. Larger files can be handled by Globus directly.
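One way to tar only the small files before a Globus transfer is sketched below. The function name `bundle_small_files` is hypothetical, "1MB" is read as 1048576 bytes, the archive path is assumed to be absolute and outside the source directory, and file names are assumed not to contain newlines.

```shell
# Bundle every regular file smaller than a byte threshold (default
# 1048576 bytes) into a single tar archive, so that Globus moves one
# larger file instead of thousands of tiny ones.
# Assumptions: $2 is an absolute path outside $1, and file names contain
# no newlines (tar reads the list one name per line via -T -).
bundle_small_files() {
    dir=$1
    archive=$2
    limit=${3:-1048576}
    (
        cd "$dir" || exit 1
        # -size -Nc selects files strictly smaller than N bytes.
        find . -type f -size -"${limit}c" -print | tar -cf "$archive" -T -
    )
}
```

The original small files are left in place; remove or exclude them yourself once you have verified the archive.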

To transfer the files, follow the steps below, some of which are identical to the instructions above for transferring files from Atlas to Alpine:

- Visit www.globus.org and login

- Choose organization:

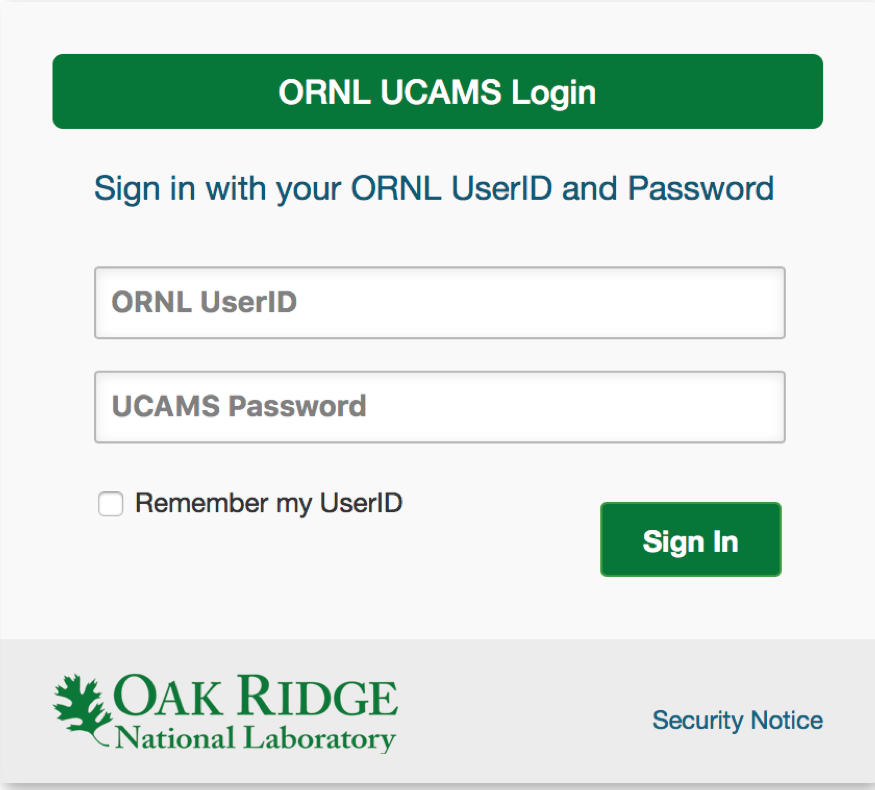

- Use your credentials to login

- Search for the endpoint OLCF DTN

- Declare path

- Open a second panel to declare where you would like your files to be transferred

- Declare the new endpoint called OLCF HPSS and use the appropriate path for HPSS

- Select whether you want an encrypted transfer, select your file/folder, and click start

- An activity report will then appear, and you can click on it to see the status. When the transfer finishes or fails, you will receive an email

- When you click on the activity of the corresponding transfer, you can see the status, or the results once it has finished

Using the tools

Currently, HSI and HTAR are offered for archiving data into HPSS or retrieving data from the HPSS archive. For better performance, connect to a DTN and execute the hsi/htar commands from there.

For optimal transfer performance, we recommend sending a file of 768 GB or larger to HPSS. The minimum file size that we recommend sending is 512 MB. HPSS will handle files between 0K and 512 MB, but write and read performance will be negatively affected. For files smaller than 512 MB we recommend bundling them with HTAR to achieve an archive file of at least 512 MB.
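The size guidance above can be turned into a quick check before archiving. This is a minimal sketch: the function name `hpss_size_advice` and its output strings are illustrative, and MB and GB are assumed to be binary units (2^20 and 2^30 bytes).

```shell
# Classify a file size (in bytes) against the recommendations above.
# Assumption: MB and GB are read as binary units.
hpss_size_advice() {
    bytes=$1
    min=$((512 * 1024 * 1024))           # 512 MB recommended minimum
    best=$((768 * 1024 * 1024 * 1024))   # 768 GB for optimal performance
    if [ "$bytes" -lt "$min" ]; then
        echo "bundle with htar"
    elif [ "$bytes" -lt "$best" ]; then
        echo "acceptable"
    else
        echo "optimal"
    fi
}
```

For instance, `hpss_size_advice 1000` reports that the file should be bundled with htar before being sent to HPSS.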

When retrieving data from a tar archive larger than 1 TB, we recommend that you pull only the files that you need rather than the full archive. Examples of this will be given in the htar section below.

Using HSI

Issuing the command hsi will start HSI in interactive mode. Alternatively, you can use:

hsi [options] command(s)

…to execute a set of HSI commands and then return.

To list your files on the HPSS, you might use:

hsi ls

The HSI get and put commands can also retrieve and store multiple files; mget and mput were added for compatibility with ftp. In addition, both get and put can use csh-style wildcard patterns and support recursion, so that entire directory trees can be retrieved or stored with a simple command such as:

hsi get -R hpss_directory

or

hsi put -R local_directory

To send a file to HPSS, you might use:

hsi put a.out

To put a file in a pre-existing directory on HPSS:

hsi "cd MyHpssDir; put a.out"

To retrieve one, you might use:

hsi get /proj/projectid/a.out

Here is a list of commonly used hsi commands.

| Command | Function |
|---|---|
| cd | Change current directory |
| get, mget | Copy one or more HPSS-resident files to local files |
| cget | Conditional get – get the file only if it doesn’t already exist |
| cp | Copy a file within HPSS |
| rm, mdelete | Remove one or more files from HPSS |
| ls | List a directory |
| put, mput | Copy one or more local files to HPSS |
| cput | Conditional put – copy the file into HPSS unless it is already there |
| pwd | Print current directory |
| mv | Rename an HPSS file |
| mkdir | Create an HPSS directory |
| rmdir | Delete an HPSS directory |

Scenario 1:

Transfer the file test.tar from /lustre/atlas/path/ to /proj/projid when the current directory is /lustre/atlas/path/

hsi "cd /proj/projid; put test.tar"

Scenario 2:

Transfer the file test.tar from /lustre/atlas/path/ to /proj/projid when the current working directory is not /lustre/atlas/path/

hsi "put /lustre/atlas/path/test.tar : /proj/projid/test.tar"

Additional HSI Documentation

There is interactive documentation on the hsi command available by running:

hsi help

Additionally, documentation can be found at the Gleicher Enterprises website, including an HSI Reference Manual and man pages for HSI.

Using HTAR

The htar command provides a command-line interface very similar to the traditional tar command found on UNIX systems. The basic syntax of htar is:

htar -{c|K|t|x|X} -f tarfile [directories] [files]

As with the standard Unix tar utility, the -c, -x, and -t options function to create, extract, and list tar archive files, respectively. The -K option verifies an existing tarfile in HPSS and the -X option can be used to re-create the index file for an existing archive.

For example, to store all files in the directory dir1 to a file named allfiles.tar on HPSS, use the command:

htar -cvf allfiles.tar dir1/*

To retrieve these files:

htar -xvf allfiles.tar

htar will overwrite files of the same name in the target directory.

When possible, extract only the files you need from large archives.

To display the names of the files in the project1.tar archive file within the HPSS home directory:

htar -vtf project1.tar

To extract only one file, executable.out, from the project1 directory in the archive file called project1.tar:

htar -xm -f project1.tar project1/executable.out

To extract all files from the project1/src directory in the archive file called project1.tar, and use the time of extraction as the modification time, use the following command:

htar -xm -f project1.tar project1/src

HTAR Limitations

The htar utility has several limitations.

Appending data

You cannot add or append files to an existing archive.

File Path Length

File path names within an htar archive of the form prefix/name are limited to 154 characters for the prefix and 99 characters for the file name. Link names cannot exceed 99 characters.
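Paths can be checked against these limits before running htar. The sketch below assumes the prefix is everything before the last `/` and the name is everything after it; the function name `htar_path_ok` is illustrative.

```shell
# Succeed if a path fits the htar limits quoted above: at most 154
# characters for the prefix (directory part) and 99 for the file name.
# Assumption: the prefix is the part before the last "/".
htar_path_ok() {
    path=$1
    name=${path##*/}
    case "$path" in
        */*) prefix=${path%/*} ;;
        *)   prefix= ;;
    esac
    [ "${#name}" -le 99 ] && [ "${#prefix}" -le 154 ]
}

# Example use (commented out; dir1 is a placeholder directory):
# find dir1 -type f | while read -r f; do
#     htar_path_ok "$f" || echo "path too long for htar: $f"
# done
```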

Size

There are limits to the size and number of files that can be placed in an HTAR archive.

| Limit | Value |
|---|---|
| Individual File Size Maximum | 68GB, due to POSIX limit |
| Maximum Number of Files per Archive | 1 million |

For example, when attempting to HTAR a directory with one member file larger than the size limit, the following error message will appear:

[titan-ext1]$htar -cvf hpss_test.tar hpss_test/

INFO: File too large for htar to handle: hpss_test/75GB.dat (75161927680 bytes)

ERROR: 1 oversize member files found - please correct and retry

ERROR: [FATAL] error(s) generating filename list

HTAR: HTAR FAILED
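A failure like this can be anticipated by checking a directory against both limits before calling htar. The following is a sketch under stated assumptions: the name `htar_preflight` is hypothetical, 68GB is taken as 68 * 10^9 bytes, and "1 million" as 1000000; both values can be overridden.

```shell
# Report whether a directory is within the htar limits from the table:
# a maximum member-file size and a maximum number of files per archive.
# Assumptions: default limits of 68000000000 bytes and 1000000 files,
# overridable through the 2nd and 3rd arguments.
htar_preflight() {
    dir=$1
    max_bytes=${2:-68000000000}
    max_files=${3:-1000000}
    # $(( ... )) strips any padding that wc -l adds on some systems.
    count=$(( $(find "$dir" -type f | wc -l) ))
    oversize=$(( $(find "$dir" -type f -size +"${max_bytes}c" | wc -l) ))
    echo "files: $count, oversize: $oversize"
    [ "$count" -le "$max_files" ] && [ "$oversize" -eq 0 ]
}
```

The function prints a one-line summary and returns a non-zero status when either limit is exceeded, so it can guard an htar call in a script.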

Scenario 3:

The htar command fails because of the limitations of the tool, so we tar the folder and pipe the output to hsi to create a tar file called test.tar in /proj/projid/

tar cf - directory_to_compress | hsi "cd /proj/projid; put - : test.tar"

You can use the previously mentioned htar -xvf command to retrieve the archive from HPSS; however, consider extracting only the files you need.

Additional HTAR Documentation

The HTAR user’s guide, including the HTAR man page, can be found at the Gleicher Enterprises website.

DTN

You can use the Data Transfer Nodes (DTNs) to transfer files with scp and rsync; however, the other approaches are likely to be faster unless you have a script that is already prepared and optimized for efficient transfers.

ssh [email protected]

You then have access to /lustre/atlas and /gpfs/alpine and can use a script to transfer your files.
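As a starting point for such a script, the sketch below copies one directory tree into another. The function name `copy_tree` is illustrative, and the source and destination are whatever Atlas and Alpine paths apply to your project; rsync is used when present, with cp as a fallback.

```shell
# Copy the contents of one directory tree into another, preserving
# permissions and timestamps. rsync is preferred because an interrupted
# copy can simply be re-run; cp is a fallback when rsync is unavailable.
copy_tree() {
    src=$1
    dst=$2
    mkdir -p "$dst" || return 1
    if command -v rsync >/dev/null 2>&1; then
        rsync -a "$src"/ "$dst"/
    else
        cp -Rp "$src"/. "$dst"/
    fi
}
```

On a DTN, the first argument would be a directory under /lustre/atlas and the second a directory under /gpfs/alpine. As noted earlier, this approach is not recommended for large amounts of data.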