Sarp Oral, the storage team lead for the TechInt group at the OLCF, works with other storage team members to deliver a file system that meets the requirements for reliability, capacity, and performance.

The Faces of Summit series shares stories of the people behind America’s top supercomputer for open science, the Oak Ridge Leadership Computing Facility’s Summit. The IBM AC922 machine launched in June 2018.

Big supercomputers bring big challenges—especially when it comes to storing all the data such large-scale systems generate. Sarp Oral, the storage team lead for the Technology Integration Group (TechInt) at the Oak Ridge Leadership Computing Facility (OLCF), knows well the struggles and rewards associated with the delivery of a capable file system.

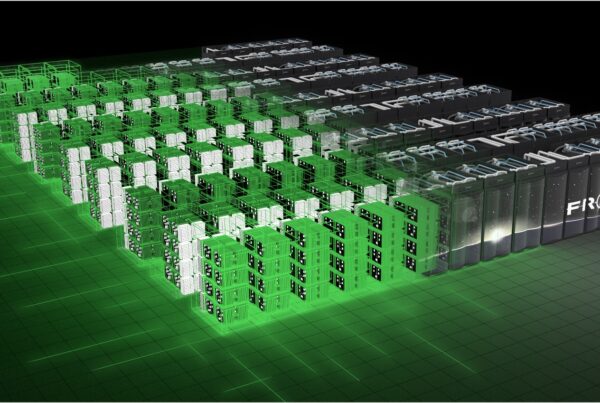

The OLCF’s IBM AC922 Summit, predicted to be one of the fastest supercomputers in the world when it comes online this year, will require a file system that can store and retrieve the scientific data generated by researchers at the OLCF, a US Department of Energy (DOE) Office of Science User Facility located at DOE’s Oak Ridge National Laboratory (ORNL). Members of various groups at the OLCF, including TechInt and the High-Performance Computing Operations (HPC Ops) Group, have risen to the task.

Oral is responsible for the delivery of Summit’s I/O subsystem, the part of the machine that handles moving data into and out of the system. Other subsystems manage the network, the compute nodes, and the scheduler.

“The I/O subsystem on Summit is different than what we had on Titan or Jaguar,” Oral said, referencing the OLCF’s current Cray XK7 machine and its predecessor, respectively. “The I/O requirements driven by current scientific applications surpass the cost-effective technological capabilities that previously existed.”

Oral enjoys tackling these problems at an unprecedented scale. In fact, he said, the most enjoyable part of working on Summit is dealing with problems that grow into far greater challenges when scaled up.

Thriving at scale

Oral has always enjoyed working at ever more challenging HPC scales. He earned a PhD in high-performance computer networks from the University of Florida in 2003 and then shifted his focus to parallel file systems and I/O when he came to ORNL as a subcontractor the same year. He became a staff member in TechInt at the OLCF in 2006.

Oral has worked on Summit’s file system since 2014. In that time, he has helped write the request for proposals for the file system, negotiate with IBM, and develop the I/O acceptance plan.

Whereas the OLCF’s previous file system, Spider II, used a single layer of magnetic disks formatted as a parallel file system to serve the entire center, Oral saw that Summit needed a different approach. Emphasizing reliability, capacity, and performance, the OLCF storage team decided to implement a two-layer I/O system made up of the file system and a burst buffer, a fast intermediate storage layer that absorbs data coming off the compute nodes. The top layer of Summit’s I/O system is the burst buffer, which can take in data four to five times faster than the file system and thus meets the performance requirements. The bottom layer is the file system itself, Spider III, which meets the capacity and reliability requirements.
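To make the benefit of the two-layer design concrete, here is a minimal sketch of the arithmetic. It assumes the 2.5 TB/s Spider III bandwidth cited further on is fully available to a single job, and it takes a burst buffer speedup of 4, the low end of the "four to five times" range. The function names and the 100 TB checkpoint size are hypothetical illustrations, not OLCF tools or measurements.

```python
# Illustrative model only; the numbers below are assumptions, not measurements.
FS_BANDWIDTH_TB_S = 2.5  # Spider III figure from the article, assumed fully available to one job
BB_SPEEDUP = 4.0         # assumption: low end of the "four to five times faster" range


def io_stall_seconds(volume_tb: float, use_burst_buffer: bool) -> float:
    """Seconds an application blocks while writing volume_tb terabytes."""
    bandwidth = FS_BANDWIDTH_TB_S * (BB_SPEEDUP if use_burst_buffer else 1.0)
    return volume_tb / bandwidth


def drain_fits(volume_tb: float, next_compute_seconds: float) -> bool:
    """True if the burst buffer can flush volume_tb to the file system in the
    background before the next compute phase finishes."""
    return volume_tb / FS_BANDWIDTH_TB_S <= next_compute_seconds


if __name__ == "__main__":
    volume = 100.0  # hypothetical 100 TB checkpoint
    print(io_stall_seconds(volume, use_burst_buffer=False))  # 40.0
    print(io_stall_seconds(volume, use_burst_buffer=True))   # 10.0
    print(drain_fits(volume, next_compute_seconds=600.0))    # True
```

In this simplified model the application only waits for the fast top layer, while the slower copy to the file system overlaps with the next stretch of computation. That overlap is what lets each layer be sized for a different requirement.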

“You have to split the responsibilities to achieve a cost-effective solution,” Oral said. “We want applications to be spending 90 percent of their time on computation and no more than 10 percent on I/O.”
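The 90/10 target translates into a simple budget. If computation takes C seconds and I/O takes at most 10 percent of the total time, the I/O phase can last no longer than C/9 seconds. The following is a minimal sketch of that calculation. The function names, the one-hour compute phase, and the use of the 2.5 TB/s Spider III figure as the available bandwidth are assumptions chosen for illustration.

```python
def max_io_seconds(compute_seconds: float, io_fraction: float = 0.10) -> float:
    """Longest I/O phase that keeps I/O at or below io_fraction of total time.

    With total time T = compute + io and io <= io_fraction * T,
    rearranging gives io <= io_fraction / (1 - io_fraction) * compute.
    """
    if not 0.0 <= io_fraction < 1.0:
        raise ValueError("io_fraction must be in [0, 1)")
    return io_fraction / (1.0 - io_fraction) * compute_seconds


def max_write_volume_tb(compute_seconds: float, bandwidth_tb_per_s: float,
                        io_fraction: float = 0.10) -> float:
    """Largest write (in TB) per compute phase that stays inside the I/O budget."""
    return max_io_seconds(compute_seconds, io_fraction) * bandwidth_tb_per_s


if __name__ == "__main__":
    # Hypothetical job: one hour of computation between writes.
    print(round(max_io_seconds(3600.0), 6))            # 400.0
    print(round(max_write_volume_tb(3600.0, 2.5), 6))  # 1000.0
```

Under these assumptions, an hour of computation leaves room for about 400 seconds of I/O. Any faster layer, such as a burst buffer, raises the volume that fits in that window proportionally.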

Oral is now eagerly working alongside the other storage team members toward acceptance of the file system. Acceptance requires two steps: first, exercising the vendor’s file system to verify that it meets the expectations outlined in the contract and the acceptance document; and second, ensuring that all components function and perform correctly. Oral works closely with Dustin Leverman, storage team lead for HPC Ops, whose team handles the installation and ongoing operation of storage components. Leverman’s team oversaw the installation of the file system hardware and software and the system’s integration with Summit.

“Sarp and I have complementary roles,” Leverman said. “We both worked on and reviewed the request for proposals, and we both work very closely with acceptance now. We just approach these from a different standpoint.”

When it comes online, Summit’s Spider III file system will provide 250 petabytes (PB) of usable storage space, the equivalent of 74 years of high-definition video, at 2.5 terabytes per second. The 32,494 disks in Spider III will operate as a single file system that can write the same data to multiple disks (mirroring), split data into pieces written on separate disks (striping), or combine both. Striping lets many disks serve the same data in parallel, which improves performance, while redundant copies protect data when a disk fails, which improves reliability.
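The difference between mirroring and striping is easiest to see in a toy layout. The sketch below maps each chunk of a file to the disks holding a copy of it: consecutive chunks start on different disks (striping), and each chunk is written to several distinct disks (mirroring). The function `place_chunks` and its parameters are hypothetical, and this is not a model of how Spider III’s software actually places or protects data.

```python
from typing import List


def place_chunks(num_chunks: int, num_disks: int, copies: int = 1) -> List[List[int]]:
    """Map each chunk of a file to the disks that store a copy of it.

    Striping: consecutive chunks start on different disks, so many disks
    can serve one file in parallel.
    Mirroring: each chunk is written to `copies` distinct disks, so losing
    one disk does not lose the chunk.
    """
    if num_disks < 1 or not 1 <= copies <= num_disks:
        raise ValueError("need num_disks >= 1 and 1 <= copies <= num_disks")
    layout: List[List[int]] = []
    for chunk in range(num_chunks):
        # Striping: start each chunk just past the disks used by the previous chunk.
        first = (chunk * copies) % num_disks
        # Mirroring: place the copies on consecutive, distinct disks.
        layout.append([(first + k) % num_disks for k in range(copies)])
    return layout


if __name__ == "__main__":
    print(place_chunks(4, num_disks=4))            # striping only: [[0], [1], [2], [3]]
    print(place_chunks(4, num_disks=4, copies=2))  # both: [[0, 1], [2, 3], [0, 1], [2, 3]]
    print(place_chunks(2, num_disks=4, copies=4))  # mirroring only: [[0, 1, 2, 3], [0, 1, 2, 3]]
```

With one copy, every disk holds a different chunk and all four can be read at once; with four copies, any single disk can fail without data loss, but the file gains no parallelism. The middle case trades some of each, which is the balance the paragraph above describes.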

“Concurrent deployment of Summit and Spider III created some dependency issues in terms of acceptance,” Oral said. “At the end of the day, though, as a center our mode of operation won’t change. When we deploy Spider III, it will be the file system for the whole center.”

The most interesting aspect of working with Summit, Oral said, is that most of its problems would be small at a modest scale. Even seemingly ordinary parts of everyday life, he said, become huge challenges when they are scaled up.

“Supercomputers and their surrounding ecosystems are complex,” Oral said. “They are just like complex organisms. But when Summit is completed, we aren’t going to be interested in the pieces—we’re going to be interested in this computing platform that we can do science on. When we fit all of these pieces together, the system will truly be alive, and that never ceases to amaze me.”

ORNL is managed by UT-Battelle for DOE’s Office of Science. The Office of Science is the single largest supporter of basic research in the physical sciences in the United States and is working to address some of the most pressing challenges of our time. For more information, please visit https://science.energy.gov/.